Chapter 16: Diving Deeper with the IMU

In Chapter 12, IMU Programming with Python, we read data from an inertial measurement unit (IMU). We've now learned a bit more about processing sensor data, using math and pipelines to make decisions.

In this chapter, we will learn how to get calibrated data from the IMU, combine data from its sensors, and use the result to give a robot behaviors based on its absolute orientation. Along the way, we'll look at algorithms that improve precision, speed, or accuracy.

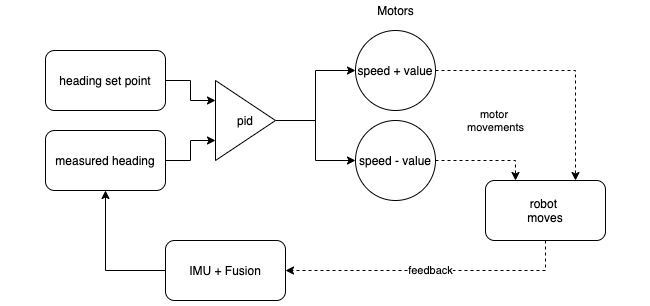

By the end of the chapter, you will be able to detect a robot's absolute orientation, display it on a screen, and combine it with the Proportional-Integral-Derivative (PID) behaviors.

In this chapter, we're going to cover the following main topics:

- Programming a virtual robot

- Detecting rotation with the gyroscope

- Detecting pitch and roll with the accelerometer

- Detecting a heading with the magnetometer

- Getting a rough heading from the magnetometer...