In this chapter, we will cover the following topics:

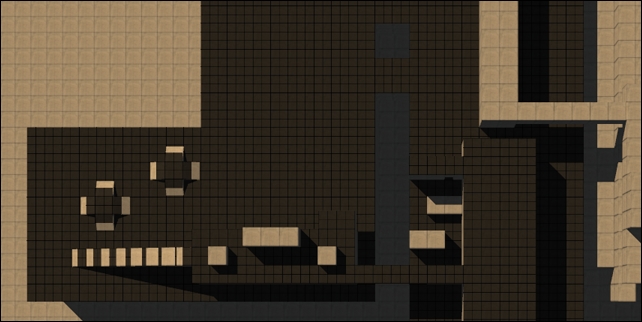

- Generating layer-based influence maps
- Drawing individual influence map layers
- Learning about influence map modifiers
- Manipulating and spreading influence values
- Creating an influence map for agent occupancy
- Creating a tactical influence map for potentially dangerous regions

So far, our agents have only a limited number of data sources that allow them to reason spatially about their surroundings. Navigation meshes let agents determine all walkable positions, and perception gives them the ability to see and hear. However, without a fine-grained, quantized view of the sandbox, our agents cannot reason about other places of interest within it.