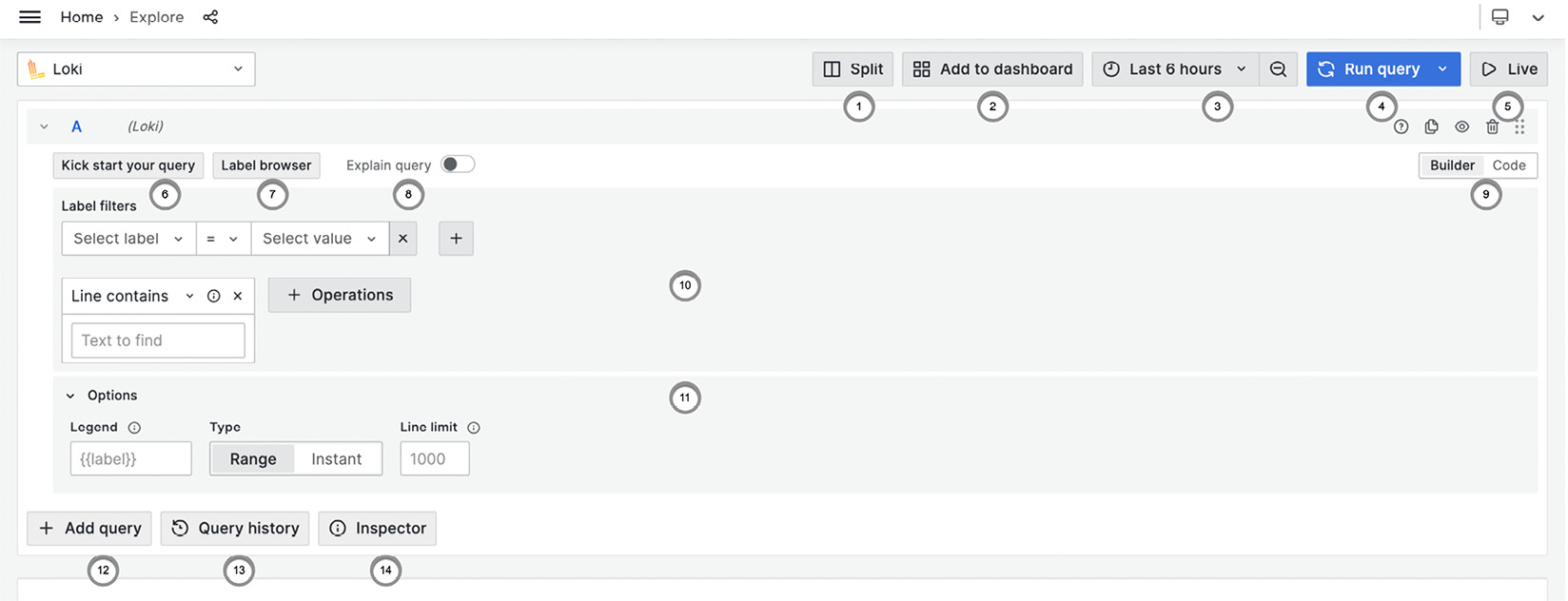

Exploring Log Data with Grafana’s Loki

In this final chapter of Part 2, Real-World Grafana, we’re going to shift gears a bit. So far, we’ve been operating under a dashboard-oriented paradigm in how we use Grafana. This is not unusual, since Grafana has always been structured around the dashboard metaphor. The introduction of Explore in Grafana 6 brought an alternative workflow: one that is data-driven and, dare I say it, exploratory.

Grafana really shines when working with numerical and some forms of textual data, but what if much of your data consists of logs? Every day, countless applications disgorge not only standard numerical metrics but also copious text logs. If you’ve ever enabled debug mode in an application, then you’ve seen how a few meager kilobytes of information can quickly become a flood of gigabytes of repetitive, inscrutable gibberish. Diagnosing a problem by enabling the debugging code...