Let's take a look at the crucial elements of 3D worlds, and how Unity lets you develop games in the third dimension.

If you have worked with any 3D artworking application before, you'll likely be familiar with the concept of the Z-axis. The Z-axis, in addition to the existing X for horizontal and Y for vertical, represents depth. In 3D applications, you'll see information on objects laid out in X, Y, Z — format this is known as the Cartesian coordinate method. Dimensions, rotational values, and positions in the 3D world can all be described in this way. In this book, like in other documentation of 3D, you'll see such information written with parenthesis, shown as follows:

(10, 15, 10)

This is mostly for neatness, and also due to the fact that in programming, these values must be written in this way. Regardless of their presentation, you can assume that any sets of three values separated by commas will be in X, Y, Z order.

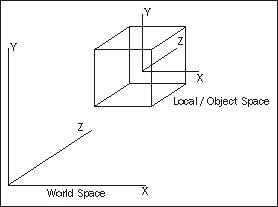

A crucial concept to begin looking at is the difference between Local space and World space. In any 3D package, the world you will work in is technically infinite, and it can be difficult to keep track of the location of objects within it. In every 3D world, there is a point of origin, often referred to as zero, as it is represented by the position (0,0,0).

All world positions of objects in 3D are relative to world zero. However, to make things simpler, we also use Local space (also known as Object space) to define object positions in relation to one another. Local space assumes that every object has its own zero point, which is the point from which its axis handles emerge. This is usually the center of the object, and by creating relationships between objects, we can compare their positions in relation to one another. Such relationships, known as parent-child relationships, mean that we can calculate distances from other objects using Local space, with the parent object's position becoming the new zero point for any of its child objects. For more information on parent-child relationships, see Chapter 3.

You'll also see 3D vectors described in Cartesian coordinates. Like their 2D counterparts, 3D vectors are simply lines drawn in the 3D world that have a direction and a length. Vectors can be moved in world space, but remain unchanged themselves. Vectors are useful in a game engine context, as they allow us to calculate distances, relative angles between objects, and the direction of objects.

Cameras are essential in the 3D world, as they act as the viewport for the screen. Having a pyramid-shaped field of vision, cameras can be placed at any point in the world, animated, or attached to characters or objects as part of a game scenario.

With adjustable Field of Vision (FOV), 3D cameras are your viewport on the 3D world. In game engines, you'll notice that effects such as lighting, motion blurs, and other effects are applied to the camera to help with game simulation of a person's eye view of the world — you can even add a few cinematic effects that the human eye will never experience, such as lens flares when looking at the sun!

Most modern 3D games utilize multiple cameras to show parts of the game world that the character camera is not currently looking at — like a 'cutaway' in cinematic terms. Unity does this with ease by allowing many cameras in a single scene, which can be scripted to act as the main camera at any point during runtime. Multiple cameras can also be used in a game to control the rendering of particular 2D and 3D elements separately as part of the optimization process. For example, objects may be grouped in layers, and cameras may be assigned to render objects in particular layers. This gives us more control over individual renders of certain elements in the game.

In constructing 3D shapes, all objects are ultimately made up of interconnected 2D shapes known as polygons. On importing models from a modelling application, Unity converts all polygons to polygon triangles. Polygon triangles (also referred to as faces) are in turn made up of three connected edges. The locations at which these vertices meet are known as points or vertices. By knowing these locations, game engines are able to make calculations regarding the points of impact, known as collisions, when using complex collision detection with Mesh Colliders, such as in shooting games to detect the exact location at which a bullet has hit another object. By combining many linked polygons, 3D modelling applications allow us to build complex shapes, known as meshes. In addition to building 3D shapes, the data stored in meshes can have many other uses. For example, it can be used as surface navigational data by making objects in a game, by following the vertices.

In game projects, it is crucial for the developer to understand the importance of polygon count. The polygon count is the total number of polygons, often in reference to a model, but also in reference to an entire game level. The higher the number of polygons, the more work your computer must do to render the objects onscreen. This is why, in the past decade or so, we've seen an increase in the level of detail from early 3D games to those of today — simply compare the visual detail in a game, such as Id's Quake (1996) with the details seen in a game, such as Epic's Gears Of War (2006). As a result of faster technology, game developers are now able to model 3D characters and worlds for games that contain a much higher polygon count and this trend will inevitably continue.

Materials are a common concept to all 3D applications, as they provide the means to set the visual appearance of a 3D model. From basic colors to reflective image-based surfaces, materials handle everything.

Starting with a simple color and the option of using one or more images — known as textures — in a single material, the material works with the shader, which is a script in charge of the style of rendering. For example, in a reflective shader, the material will render reflections of surrounding objects, but maintain its color or the look of the image applied as its texture.

In Unity, the use of materials is easy. Any materials created in your 3D modelling package will be imported and recreated automatically by the engine and created as assets to use later. You can also create your own materials from scratch, assigning images as texture files, and selecting a shader from a large library that comes built-in. You may also write your own shader scripts, or implement those written by members of the Unity community, giving you more freedom for expansion beyond the included set.

Crucially, when creating textures for a game in a graphics package such as Photoshop, you must be aware of the resolution. Game textures are expected to be square, and sized to a power of 2. This means that numbers should run as follows:

128 x 128

256 x 256

512 x 512

1024 x 1024

Creating textures of these sizes will mean that they can be tiled successfully by the game engine. You should also be aware that the larger the texture file you use, the more processing power you'll be demanding from the player's computer. Therefore, always remember to try resizing your graphics to the smallest power of 2 dimensions possible, without sacrificing too much in the way of quality.

For developers working with game engines, physics engines provide an accompanying way of simulating real-world responses for objects in games. In Unity, the game engine uses Nvidia's PhysX engine, a popular and highly accurate commercial physics engine.

In game engines, there is no assumption that an object should be affected by physics —firstly because it requires a lot of processing power, and secondly because it simply doesn't make sense. For example, in a 3D driving game, it makes sense for the cars to be under the influence of the physics engine, but not the track or surrounding objects, such as trees, walls, and so on — they simply don't need to be. For this reason, when making games, a Rigid Body component is given to any object you want under the control of the physics engine.

Physics engines for games use the Rigid Body dynamics system of creating realistic motion. This simply means that instead of objects being static in the 3D world, they can have the following properties:

As the power of hardware and software increases, rigid body physics is becoming more widely applied in games, as it offers the potential for more varied and realistic simulation. We'll be utilizing rigid body dynamics as part of our game in Chapter 6.

While more crucial in game engines than in 3D animation, collision detection is the way we analyze our 3D world for inter-object collisions. By giving an object a Collider component, we are effectively placing an invisible net around it. This net mimics its shape and is in charge of reporting any collisions with other colliders, making the game engine respond accordingly. For example, in a ten-pin bowling game, a simple spherical collider will surround the ball, while the pins themselves will have either a simple capsule collider, or for a more realistic collision, employ a Mesh collider. On impact, the colliders of any affected objects will report to the physics engine, which will dictate their reaction, based on the direction of impact, speed, and other factors.

In this example, employing a mesh collider to fit exactly to the shape of the pin model would be more accurate but is more expensive in processing terms. This simply means that it demands more processing power from the computer, the cost of which is reflected in slower performance — hence the term expensive.