Now that your tracked platform can move around, you'll want to add a more complex sensor to give it information about its surroundings: the webcam. With a webcam, your robot can see its environment. You'll learn how to use OpenCV, a powerful open source computer vision library, to add vision algorithms to your robotic platform.

In this chapter, you will be doing the following:

Connecting a webcam

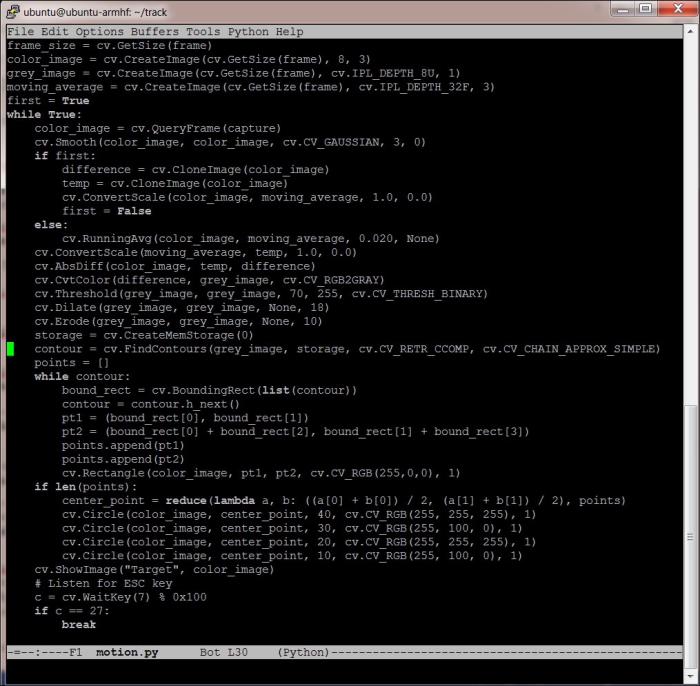

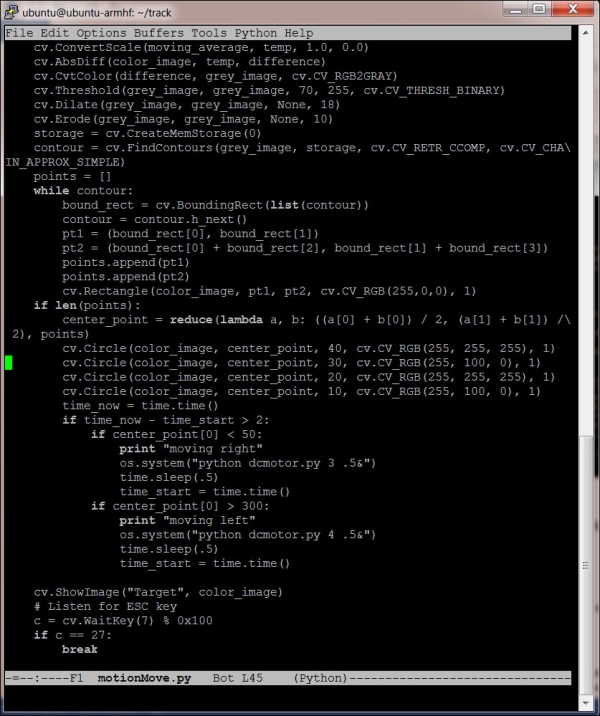

Learning image processing using OpenCV

Discovering edge detection for barrier finding

Adding color and motion detection for targeting