Object detection is one of the important applications of computer vision used in self-driving cars. Object detection in images means not only identifying the kind of object but also localizing it within the image by generating the coordinates of a bounding box that contains the object. We can summarize object detection as follows:

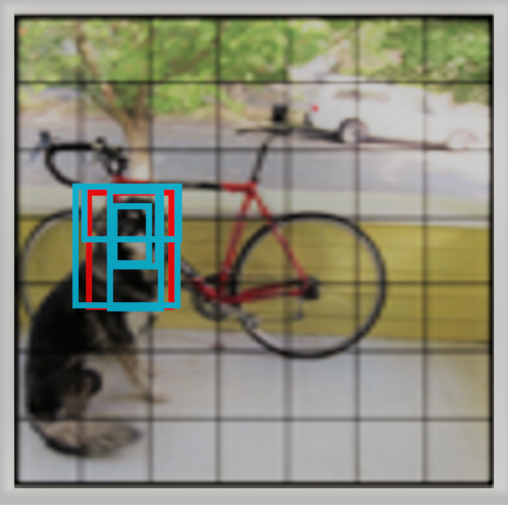

An example of object detection can be seen in the following image:

Here, you can see that the biker is detected as a person and that the bike is detected as a motorbike.

In this chapter, we are going to use OpenCV and You Only Look Once (YOLO) as the deep learning architecture for vehicle detection. Due to this, we'll learn about the state-of-the-art image detection algorithm known as YOLO. YOLO can view an image...