Spark - evaluating historical data

In this example, we combine the techniques from the previous sections to analyze some historical data and extract a few useful attributes.

The historical data we are using is the guest list for The Daily Show with Jon Stewart. A typical record from the data looks like this:

```
1999,actor,1/11/99,Acting,Michael J. Fox
```

It contains the year, the guest's occupation, the date of the appearance, a logical grouping of the occupation, and the guest's name.
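To see how the header maps onto a record, here is a minimal sketch that parses the sample line with Python's standard `csv.DictReader`. It assumes the header given in the script's comment (`YEAR,GoogleKnowlege_Occupation,Show,Group,Raw_Guest_List`) is the first line of the file; the in-memory `io.StringIO` buffer simply stands in for the real file.

```python
import csv
import io

# Header as given in the script's comment; the spelling "Knowlege"
# is kept because the column name must match the file exactly.
HEADER = "YEAR,GoogleKnowlege_Occupation,Show,Group,Raw_Guest_List"
SAMPLE = "1999,actor,1/11/99,Acting,Michael J. Fox"

# Simulate a two-line CSV file (header + one record) in memory
buffer = io.StringIO(HEADER + "\n" + SAMPLE + "\n")
record = next(csv.DictReader(buffer))

# Print each column name next to its value
for column, value in record.items():
    print(f"{column}: {value}")
```

Note that the date of appearance lives in the column named `Show`, and every value arrives as a string, so `YEAR` would need an `int()` conversion before any numeric comparison.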

For our analysis, we will look at the number of appearances per year, the most frequently appearing occupation, and the most frequently appearing guest.
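Before distributing the work with Spark, it can help to pin down exactly what the three measures mean. The following is a minimal, non-Spark sketch using `collections.Counter`, assuming each row is a dict keyed by the header columns (as `csv.DictReader` yields them). It counts rows per `YEAR`, per `GoogleKnowlege_Occupation`, and per `Raw_Guest_List`, then takes the most common entry. The function name `summarize` is hypothetical; a local version like this is mainly useful for checking Spark results on a small sample.

```python
from collections import Counter


def summarize(rows):
    """Return (appearances per year, top occupation, top guest).

    `rows` is an iterable of dicts keyed by the CSV header columns.
    For equal counts, most_common() keeps first-encountered order.
    """
    per_year = Counter()
    occupations = Counter()
    guests = Counter()
    for row in rows:
        per_year[row["YEAR"]] += 1
        occupations[row["GoogleKnowlege_Occupation"]] += 1
        guests[row["Raw_Guest_List"]] += 1
    # most_common(1) returns a one-element list of (value, count)
    top_occupation = occupations.most_common(1)[0] if occupations else None
    top_guest = guests.most_common(1)[0] if guests else None
    return dict(per_year), top_occupation, top_guest


# Usage with the single sample record shown above
sample = [{"YEAR": "1999", "GoogleKnowlege_Occupation": "actor",
           "Show": "1/11/99", "Group": "Acting",
           "Raw_Guest_List": "Michael J. Fox"}]
print(summarize(sample))
# → ({'1999': 1}, ('actor', 1), ('Michael J. Fox', 1))
```

The same three tallies map naturally onto Spark as key/count pairs combined per key; the local version just makes the expected answer easy to verify.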

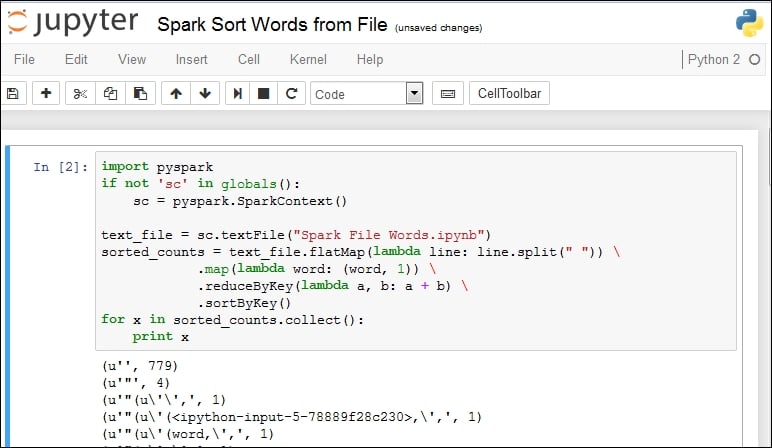

We will be using this script:

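```python
import pyspark
import csv
import operator
import itertools
import collections

# Reuse an existing SparkContext (e.g. in a notebook) or create a new one
if 'sc' not in globals():
    sc = pyspark.SparkContext()

# Dictionaries for the per-year, per-occupation, and per-guest tallies
years = {}
occupations = {}
guests = {}

# The file header contains these column descriptors:
# YEAR,GoogleKnowlege_Occupation,Show,Group,Raw_Guest_List

# In Python 3 the csv module expects text mode with newline=''
with open('daily_show_guests.csv', newline='') as csvfile:
    reader = csv.DictReader(csvfile)
    for row...
```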