In this chapter, we will cover the following recipes:

- Using variables in the SSIS Script task

- Executing complex filesystem operations with the Script task

- Reading data profiling XML results with the Script task

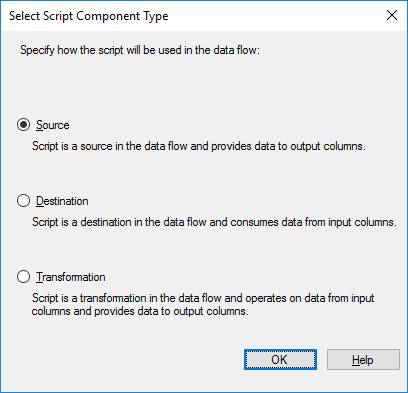

- Correcting data with the Script component

- Validating data using regular expressions in a Script component

- Using the Script component as a source

- Using the Script component as a destination