In this chapter, you will learn about augmented reality and how to use it to build cool applications. We will discuss pose estimation and planar tracking. You will learn how to map coordinates from 3D to 2D, and how to overlay graphics on top of a live video.

By the end of this chapter, you will know:

What is the premise of augmented reality

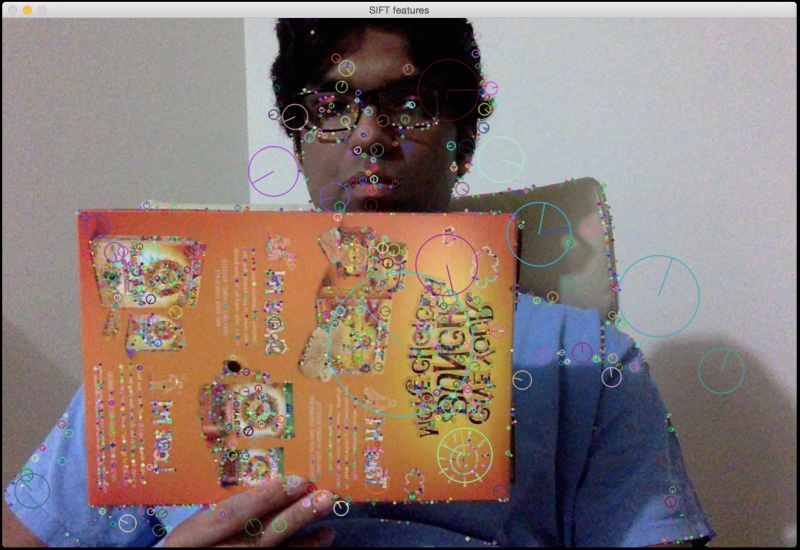

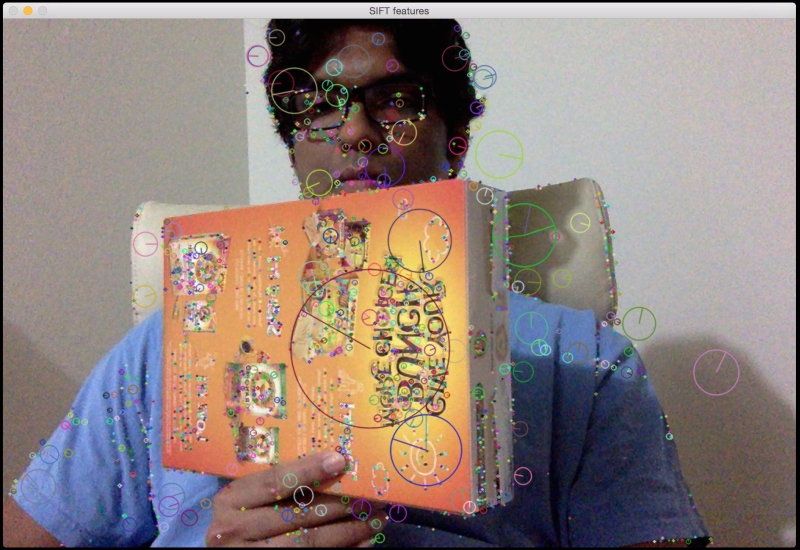

What is pose estimation

How to track a planar object

How to map coordinates from 3D to 2D

How to overlay graphics on top of a video in real time