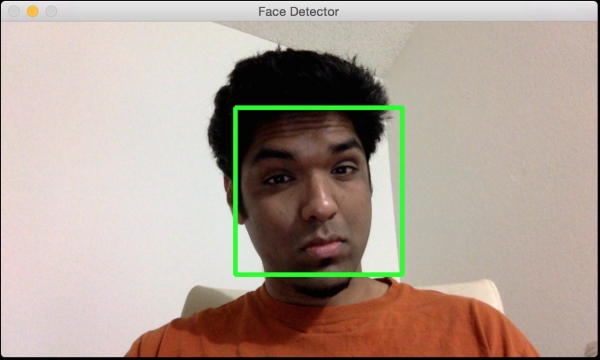

In this chapter, we are going to learn how to detect and track different body parts in a live video stream. We will start by discussing the face detection pipeline and how it is built from the ground up. We will then learn how to use this framework to detect and track other body parts, such as the eyes, ears, mouth, and nose.

By the end of this chapter, you will know:

- How to use Haar cascades
- What integral images are
- What adaptive boosting is
- How to detect and track faces in a live video stream
- How to detect and track eyes in a live video stream
- How to automatically overlay sunglasses on top of a person's face
- How to detect ears, nose, and mouth
- How to detect pupils using shape analysis