Clustering, discussed in the last chapter, is an unsupervised algorithm. It is now time to switch back to a supervised algorithm. Classification is a class of problems that surfaces quite frequently in predictive modelling and in various forms. Accordingly, to deal with all of them, a family of classification algorithms is used.

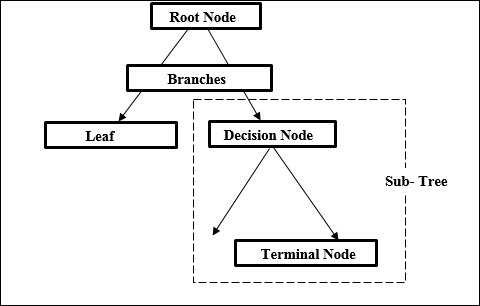

A decision tree is a supervised classification algorithm that is used when the target variable is a discrete or categorical variable (having two or more than two classes) and the predictor variables are either categorical or numerical variables. A decision tree can be thought of as a set of if-then rules for a classification problem where the target variables are discrete or categorical variables. The if-then rules are represented as a tree.

A decision tree is used when the decision is based on multiple-staged criteria and variables. A decision tree is very effective as a decision making tool as it has a pictorial output...