In the previous chapter, we learned about linear regression. We saw that it is one of the most basic models and that it assumes a linear relationship between the predictor variables and an output variable.

In this chapter, we will discuss logistic regression in detail, covering the following topics:

Math behind logistic regression: Logistic regression relies on concepts such as conditional probability, odds, and the odds ratio. We will see what these concepts mean and how they are applied. We will also see how taking the logarithm of the odds (the logit) produces a quantity that is linear in the predictor variables. Finally, we will analyze the logistic regression equation and interpret each of its terms and coefficients.

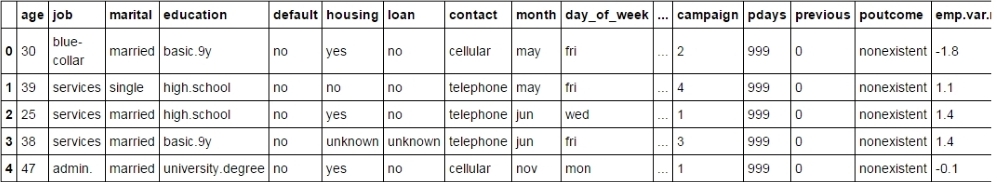

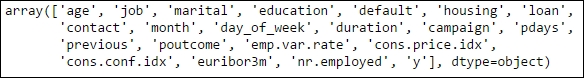
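The following minimal sketch in plain Python makes the odds and log-odds relationships concrete. It assumes a single predictor `x` and uses hypothetical coefficients `B0` and `B1`, chosen only for illustration. The function names are our own and do not come from any library. The sketch shows three things: the logit of the predicted probability is linear in `x`, the sigmoid function inverts the logit, and a one-unit increase in `x` multiplies the odds by `exp(b1)`, which is the odds ratio.

```python
import math


def odds(p: float) -> float:
    """Odds of an event with probability p, i.e. p / (1 - p)."""
    return p / (1.0 - p)


def logit(p: float) -> float:
    """Log-odds of p; logistic regression models this as linear in x."""
    return math.log(odds(p))


def sigmoid(z: float) -> float:
    """Inverse of the logit: maps any real z to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(x: float, b0: float, b1: float) -> float:
    """P(y = 1 | x) for a one-predictor model with logit(p) = b0 + b1 * x."""
    return sigmoid(b0 + b1 * x)


# Hypothetical coefficients, for illustration only.
B0, B1 = -1.0, 0.5

if __name__ == "__main__":
    for x in (0.0, 1.0, 2.0):
        p = predict_proba(x, B0, B1)
        # logit(p) recovers the linear predictor b0 + b1 * x
        print(f"x={x}: p={p:.4f}, odds={odds(p):.4f}, logit={logit(p):.4f}")

    # The odds ratio for a one-unit increase in x equals exp(b1)
    ratio = odds(predict_proba(2.0, B0, B1)) / odds(predict_proba(1.0, B0, B1))
    print(f"odds ratio={ratio:.4f}, exp(b1)={math.exp(B1):.4f}")
```

Because the logit is linear in `x`, each coefficient is read on the log-odds scale. Here `b1` is the change in log-odds per unit increase in `x`, and `exp(b1)` is the corresponding odds ratio.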

Implementing logistic regression with Python: As in the last chapter, we will take a dataset and implement a logistic regression model on it to understand the...