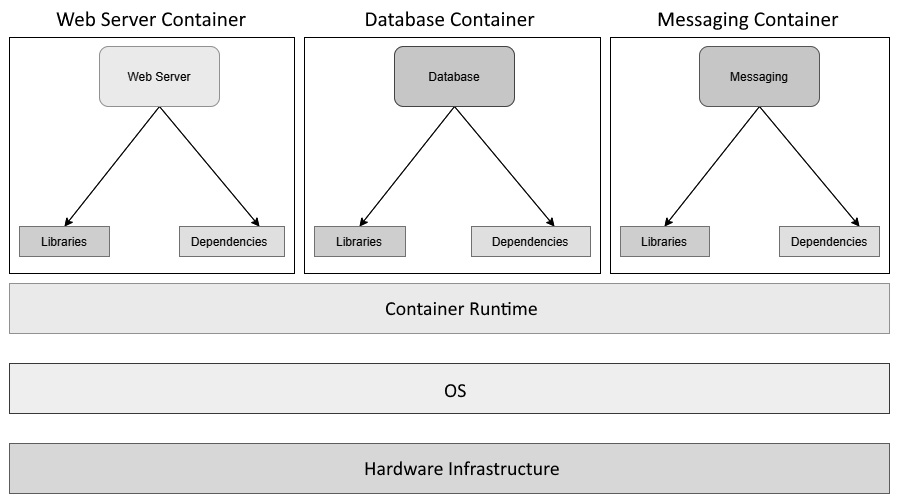

Container architecture

You can think of containers as mini virtual machines, and in most cases they behave like them. In reality, they are just computer programs running within an operating system. Let's look at a high-level diagram of what an application stack within containers looks like:

Figure 1.4 – Applications on containers

As we can see, we have the compute infrastructure right at the bottom forming the base, followed by the host operating system and a container runtime (in this case, Docker) running on top of it. We then have multiple containerized applications using the container runtime, running as separate processes over the host operating system using namespaces and cgroups.

As you may have noticed, there is no guest OS layer here, which is something we have with virtual machines. Each container is a software program that runs in user space on the host kernel. Containers share the host operating system kernel and the container runtime, and each container packages only the libraries and dependencies that its application requires. Containers do not inherit the host OS environment variables; you have to set them separately for each container.

Each container gets its own copy of the filesystem. Although these filesystems are stored on the host's disk, each one is isolated from those of other containers, which lets containers run applications in a more secure environment. A separate container filesystem also means that containers don't have to communicate to and fro with the OS filesystem, which results in faster execution than virtual machines.

Containers were designed to use Linux namespaces to provide isolation and cgroups to offer restrictions on CPU, memory, and disk I/O consumption.

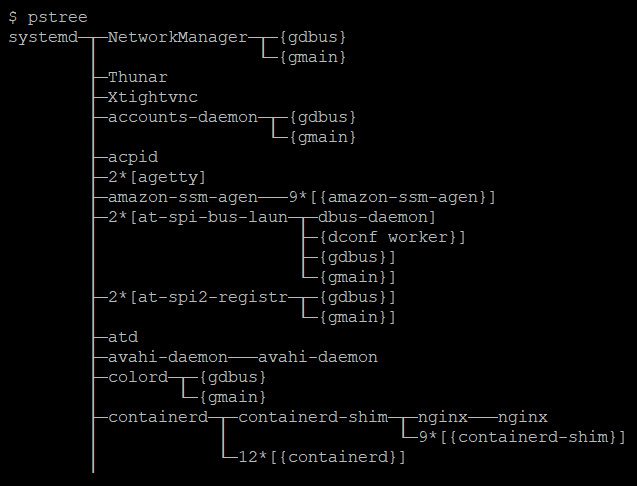

This means that if you list the OS processes, you will see the container process running along with other processes, as shown in the following screenshot:

Figure 1.5 – OS processes

However, when you list the processes from inside the container, you will only see the container's own processes, as follows:

    $ docker exec -it mynginx1 bash
    root@4ee264d964f8:/# pstree
    nginx---nginx

This is how namespaces provide a degree of isolation between containers.
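To make this a little more concrete, here is a minimal Python sketch that lists the namespaces a process belongs to by reading the symlinks under /proc/<pid>/ns on Linux. The helper names list_namespaces and shared_namespaces are our own and are not part of Docker. The sketch assumes a Linux host with /proc mounted, and it simply returns an empty result elsewhere.

```python
import os


def list_namespaces(pid="self"):
    """Return {namespace_type: identifier} for a process, read from /proc/<pid>/ns."""
    ns_dir = f"/proc/{pid}/ns"
    namespaces = {}
    try:
        entries = sorted(os.listdir(ns_dir))
    except OSError:
        # Not Linux, /proc is unavailable, or we lack permission.
        return namespaces
    for name in entries:
        try:
            # Each entry is a symlink whose target looks like "pid:[4026531836]".
            namespaces[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            # Skip entries we are not allowed to read.
            continue
    return namespaces


def shared_namespaces(a, b):
    """Return the namespace types whose identifiers are equal in both mappings."""
    return sorted(name for name in a if name in b and a[name] == b[name])


if __name__ == "__main__":
    for name, ident in list_namespaces().items():
        print(f"{name:>20}  {ident}")
```

If you run this once on the host and once inside a container, the identifiers for namespaces such as pid, net, and mnt would normally differ, which is what keeps the container's view of processes separate from the host's.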

Cgroups play a role in limiting the amount of computing resources a group of processes can use. If you add processes to a cgroup, you can limit the CPU, memory, and disk I/O that the processes can use. You can measure and monitor resource usage and stop a group of processes when an application goes astray. All these features form the core of containerization technology, which we will see later in this book.
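As an illustration of how such limits are expressed, the following Python sketch parses the cpu.max and memory.max files used by cgroup v2. It assumes a cgroup v2 host and that the files are visible under /sys/fs/cgroup, which is commonly the case inside a container but is not guaranteed. The function names are hypothetical helpers written for this example.

```python
import os


def parse_cpu_max(text):
    """Parse cgroup v2 cpu.max ("<quota> <period>") into a CPU count, or None if unlimited."""
    quota, period = text.split()
    if quota == "max":
        return None
    return int(quota) / int(period)


def parse_memory_max(text):
    """Parse cgroup v2 memory.max into a byte count, or None if unlimited."""
    value = text.strip()
    return None if value == "max" else int(value)


def read_limits(root="/sys/fs/cgroup"):
    """Read CPU and memory limits from a cgroup directory (assumed path), if present."""
    limits = {"cpus": None, "memory_bytes": None}
    parsers = {"cpus": ("cpu.max", parse_cpu_max),
               "memory_bytes": ("memory.max", parse_memory_max)}
    for key, (filename, parser) in parsers.items():
        try:
            with open(os.path.join(root, filename)) as handle:
                limits[key] = parser(handle.read())
        except OSError:
            # File missing (for example cgroup v1, or the root cgroup): leave as None.
            pass
    return limits


if __name__ == "__main__":
    print(read_limits())
```

For example, a container started with a limit of half a CPU and 256 MiB of memory would typically show "50000 100000" in cpu.max and 268435456 in memory.max, which the helpers above turn into 0.5 and 268435456.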

Now, if we have independently running containers, we also need to understand how they interact. Therefore, we'll have a look at container networking in the next section.

Container networking

Containers are separate network entities within the operating system. The Docker runtime uses network drivers to define networking between containers, and the resulting networks are software-defined. Container networking works by using software to manipulate the host's iptables rules, connect with external network interfaces, create tunnel networks, and perform other activities to allow connections to and from containers.

While there are various types of network configurations you can implement with containers, it is good to know about some widely used ones. Don't worry too much if the details are overwhelming; you will understand them while doing the hands-on exercises later in the book, and you do not need to know all of this to follow the text. For now, let's list the various types of container networks that you can define:

- None: This is a fully isolated network, and your containers cannot communicate with the external world. They are assigned a loopback interface and cannot connect with an external network interface. You can use it if you want to test your containers, stage your container for future use, or run a container that does not require any external connection, such as batch processing.

- Bridge: The bridge network is the default network type in most container runtimes, including Docker, which uses the docker0 interface for default containers. The bridge network manipulates iptables to provide Network Address Translation (NAT) between the container and host network, allowing external network connectivity. It also avoids port conflicts because it enables network isolation between containers running on a host. Therefore, you can run multiple applications that use the same container port within a single host. A bridge network allows containers within a single host to communicate using the container IP addresses. However, it does not permit communication with containers running on a different host. Therefore, you should not use the bridge network for clustered configurations.

- Host: Host networking uses the network namespace of the host machine for all the containers. It is similar to running multiple applications within your host. While a host network is simple to implement, visualize, and troubleshoot, it is prone to port-conflict issues. Although a container uses the host network for all communications, it cannot manipulate the host network interfaces unless it is running in privileged mode. Host networking does not use NAT, so it is fast and communicates at bare-metal speeds. You can use host networking to optimize performance. However, since it provides no network isolation between containers, you should avoid the host network in most cases from a security and management point of view.

- Underlay: Underlay exposes the host network interfaces directly to containers. This means you can choose to run your containers directly on the network interfaces instead of using a bridge network. There are several underlay networks, notably MACvlan and IPvlan. MACvlan allows you to assign a MAC address to every container so that your container looks like a physical device on the network. It is beneficial for migrating your existing stack to containers, especially when your application needs to run on a physical machine. MACvlan also provides complete isolation from your host networking, so you can use this mode if you have a strict security requirement. MACvlan has limitations: it cannot work with network switches that enforce a security policy disallowing MAC spoofing. It is also constrained by the MAC address ceiling of some network interface cards, such as Broadcom, which only allows 512 MAC addresses per interface.

- Overlay: Don't confuse overlay with underlay. Even though they seem like antonyms, they are not. Overlay networks allow communication between containers running on different host machines via a networking tunnel. Therefore, from a container's perspective, it seems to be interacting with containers on a single host, even when they are located elsewhere. Overlay networking overcomes the bridge network's limitation and is useful for cluster configurations, especially when you're using a container orchestrator such as Kubernetes or Docker Swarm. Some popular overlay technologies that are used by container runtimes and orchestrators are Flannel, Calico, and VXLAN.
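To tie these network types to Docker's command line, here is a small Python sketch that only builds the corresponding docker commands as argument lists; it does not run them. The function names, the network names, the parent interface eth0, and the subnet values are all hypothetical and chosen purely for illustration. Note that creating an overlay network with Docker normally requires the host to be part of a swarm.

```python
import shlex


def run_with_network(image, network, name=None):
    """Build a 'docker run' command that attaches a container to the given network."""
    cmd = ["docker", "run", "-d", "--network", network]
    if name:
        cmd += ["--name", name]
    cmd.append(image)
    return cmd


def create_macvlan(name, parent, subnet, gateway):
    """Build a 'docker network create' command for a MACvlan (underlay) network."""
    return ["docker", "network", "create", "-d", "macvlan",
            "--subnet", subnet, "--gateway", gateway,
            "-o", f"parent={parent}", name]


def create_overlay(name):
    """Build a 'docker network create' command for an attachable overlay network."""
    return ["docker", "network", "create", "-d", "overlay", "--attachable", name]


if __name__ == "__main__":
    commands = [
        run_with_network("nginx", "none"),      # fully isolated, loopback only
        run_with_network("nginx", "bridge"),    # default bridge network
        run_with_network("nginx", "host"),      # shares the host network namespace
        create_macvlan("my-macvlan", "eth0", "192.168.1.0/24", "192.168.1.1"),
        create_overlay("my-overlay"),
    ]
    for cmd in commands:
        print(shlex.join(cmd))
```

Keeping the commands as lists rather than strings makes it straightforward to pass them to subprocess.run later, should you want to execute them on a host where Docker is installed.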

Before we delve into the technicalities of different kinds of networks, let's understand the nuances of container networking. For this discussion, let's talk particularly about Docker.

Every Docker container running on a host is assigned a unique IP address. If you exec (open a shell session) into the container and run hostname -I, you should see something like the following:

    $ docker exec -it mynginx1 bash
    root@4ee264d964f8:/# hostname -I
    172.17.0.2

This allows different containers to communicate with each other through a simple TCP/IP link. The Docker daemon plays the role of a DHCP server for every container. You can define virtual networks for a group of containers and group them together to provide network isolation if you so desire. You can also connect a container to multiple networks if you want it to serve two different roles.
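The address shown earlier, 172.17.0.2, is consistent with a simple model of how addresses are handed out on the default bridge. The following Python sketch assumes the commonly seen default bridge subnet of 172.17.0.0/16, with the first usable address reserved for the gateway. It is a simplified illustration and not Docker's actual allocator, which may, for instance, reuse addresses freed by stopped containers.

```python
import ipaddress
from itertools import islice


def bridge_addresses(subnet="172.17.0.0/16"):
    """Return (gateway, iterator over remaining host addresses) for a bridge subnet.

    Assumption: the first usable address is the gateway and containers
    receive the following addresses in order.
    """
    hosts = iter(ipaddress.ip_network(subnet).hosts())
    gateway = next(hosts)
    return gateway, hosts


gateway, pool = bridge_addresses()
first_three = [str(address) for address in islice(pool, 3)]

if __name__ == "__main__":
    print("gateway:", gateway)
    print("first container addresses:", first_three)
```

Under these assumptions, the first container on the default bridge would receive 172.17.0.2, the second 172.17.0.3, and so on.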

Docker assigns every container a unique hostname that defaults to the container ID. However, this can be overridden easily, provided you use unique hostnames in a particular network. So, if you exec into a container and run hostname, you should see the container ID as the hostname, as follows:

    $ docker exec -it mynginx1 bash
    root@4ee264d964f8:/# hostname
    4ee264d964f8

This allows containers to act as separate network entities rather than simple software programs, and you can easily visualize containers as mini virtual machines.

Containers also inherit the host OS's DNS settings, so you don't have to do anything extra if you want all the containers to share the same DNS settings. If you want to define a separate DNS configuration for your containers, you can easily do so by passing a few flags. Docker containers do not inherit entries from the host's /etc/hosts file, so you need to declare any such entries when you create the container using the docker run command.
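As a rough sketch of what passing those flags looks like, the following Python helper assembles a docker run command with custom DNS servers, search domains, extra host entries, and a hostname. It only builds the argument list and does not execute anything. The helper name and all the example values (addresses, domains, and hostnames) are hypothetical.

```python
import shlex


def run_with_dns(image, dns_servers=(), search_domains=(), extra_hosts=None, hostname=None):
    """Build a 'docker run' command with custom DNS, /etc/hosts entries, and hostname."""
    cmd = ["docker", "run", "-d"]
    for server in dns_servers:
        cmd += ["--dns", server]                      # custom DNS server
    for domain in search_domains:
        cmd += ["--dns-search", domain]               # DNS search domain
    for host, ip in (extra_hosts or {}).items():
        cmd += ["--add-host", f"{host}:{ip}"]         # extra /etc/hosts entry
    if hostname:
        cmd += ["--hostname", hostname]               # override the default hostname
    cmd.append(image)
    return cmd


if __name__ == "__main__":
    cmd = run_with_dns(
        "nginx",
        dns_servers=["10.0.0.2"],
        search_domains=["example.internal"],
        extra_hosts={"db.example.internal": "10.0.0.10"},
        hostname="web1",
    )
    print(shlex.join(cmd))
```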

If your containers need a proxy server, you will have to set that either in the Docker container's environment variables or by adding the default proxy to the ~/.docker/config.json file.
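Both options can be sketched in a few lines of Python. The first helper produces the proxies section that the Docker client reads from ~/.docker/config.json, and the second produces the equivalent -e flags for a single docker run command. The helper names and the proxy URL proxy.example.com:3128 are placeholders for illustration, and the sketch prints the JSON instead of writing to your real configuration file.

```python
import json


def docker_proxy_config(http_proxy, https_proxy=None, no_proxy=()):
    """Build the 'proxies' section for ~/.docker/config.json."""
    default = {
        "httpProxy": http_proxy,
        # Assumption: reuse the HTTP proxy for HTTPS unless told otherwise.
        "httpsProxy": https_proxy or http_proxy,
    }
    if no_proxy:
        default["noProxy"] = ",".join(no_proxy)
    return {"proxies": {"default": default}}


def proxy_env_flags(http_proxy, https_proxy=None):
    """Build '-e' flags that set proxy environment variables for one container."""
    return ["-e", f"HTTP_PROXY={http_proxy}",
            "-e", f"HTTPS_PROXY={https_proxy or http_proxy}"]


if __name__ == "__main__":
    config = docker_proxy_config("http://proxy.example.com:3128",
                                 no_proxy=["localhost", "127.0.0.1"])
    print(json.dumps(config, indent=2))
    print(proxy_env_flags("http://proxy.example.com:3128"))
```

If you already have a config.json file, you would merge the proxies key into the existing JSON rather than overwrite the file, since it may also hold other client settings such as registry credentials.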

So far, we've been discussing containers and what they are. Now, let's discuss how containers are revolutionizing the world of DevOps and why it was necessary to spell this out right at the beginning.

But before we delve into containers and modern DevOps practices, let's understand what modern DevOps practices are and how they differ from traditional DevOps.