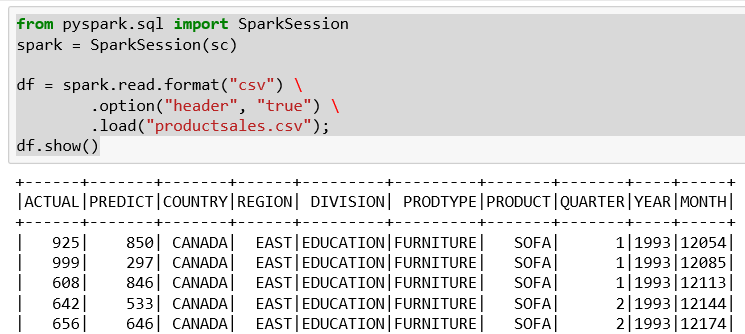

PySpark exposes the Spark programming model to Python. Spark is a fast, general engine for large-scale data processing. Because Jupyter can run Python, we can also use Spark from within Jupyter.

Installing Spark requires the following components to be installed on your machine:

- Java JDK.

- Scala from http://www.scala-lang.org/download/.

- Python: we recommend downloading Anaconda with Python (from http://continuum.io).

- Spark from https://spark.apache.org/downloads.html.

- winutils: This is a command-line utility that exposes Linux commands to Windows. There are 32-bit and 64-bit versions available at:

  - 32-bit winutils.exe at https://code.google.com/p/rrd-hadoop-win32/source/checkout

  - 64-bit winutils.exe at https://github.com/steveloughran/winutils/tree/master/hadoop-2.6.0/bin
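Before moving on, it can help to confirm which of the components above are already reachable from the command line. The following is a minimal sketch, not part of the official installation procedure: the executable names in `REQUIRED_TOOLS` are assumptions about how each component is typically invoked, and the helper names `find_tools` and `report` are hypothetical. Adjust the list to match your own installation.

```python
import shutil

# Assumed executable names for the components listed above;
# these may differ on your machine.
REQUIRED_TOOLS = ["java", "scala", "python", "spark-submit", "winutils"]


def find_tools(names):
    """Map each executable name to its resolved path, or None if it is not on PATH."""
    return {name: shutil.which(name) for name in names}


def report(found):
    """Turn the lookup results into one human-readable line per tool."""
    lines = []
    for name, path in found.items():
        status = path if path else "NOT FOUND on PATH"
        lines.append(f"{name}: {status}")
    return lines


if __name__ == "__main__":
    for line in report(find_tools(REQUIRED_TOOLS)):
        print(line)
```

A tool reported as not found is not necessarily missing; it may simply be installed in a directory that is not on your `PATH`.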

Then set environment variables that point to the locations of the preceding components:

- JAVA_HOME: The bin directory where you installed JDK

- PYTHONPATH: Directory where Python was installed

- HADOOP_HOME: Directory...