In this chapter, we will cover the following recipes:

Running a simple MapReduce program

Hadoop streaming

Configuring YARN history server

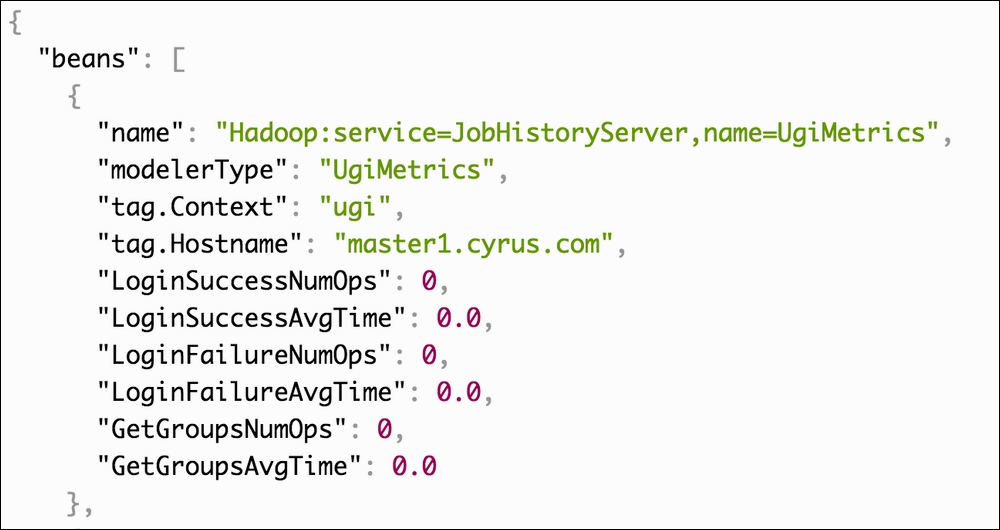

Job history web interface and metrics

Configuring ResourceManager components

YARN containers resource allocations

ResourceManager Web UI and JMX metrics

Preserving ResourceManager states