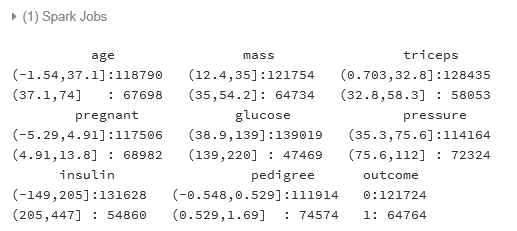

In this section, we will learn how to implement some rule-based algorithms. How these algorithms can be implemented depends on the language interface you are using and the version of Spark you are running.

For Spark 2.0, the only languages which support rule-based decision trees are Scala and Python. To demonstrate how decision rules can be constructed directly in Spark, we will work through an example that uses Python to determine the rules for being frisked.
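As a rough sketch of what constructing rules directly in Spark might look like, the following assumes the `pyspark.ml` API and an already running `SparkSession`. The toy rows, the column names (`age`, `is_male`, `frisked`), and the helper functions `fit_frisk_tree` and `print_rules` are all hypothetical and invented for illustration; they are not the data or code used in this chapter.

```python
# Hypothetical toy rows, invented for illustration: (age, is_male, frisked).
# Values are floats because Spark ML works with numeric features and labels.
TOY_ROWS = [
    (19.0, 1.0, 1.0),
    (22.0, 1.0, 1.0),
    (24.0, 0.0, 0.0),
    (31.0, 1.0, 1.0),
    (38.0, 0.0, 0.0),
    (45.0, 1.0, 0.0),
    (52.0, 0.0, 0.0),
    (60.0, 1.0, 0.0),
]
FEATURE_COLS = ["age", "is_male"]
LABEL_COL = "frisked"


def fit_frisk_tree(spark, rows=TOY_ROWS, max_depth=3):
    """Fit a decision tree on the toy rows using an existing SparkSession."""
    # Imported here so this module still loads where pyspark is not installed.
    from pyspark.ml.classification import DecisionTreeClassifier
    from pyspark.ml.feature import VectorAssembler

    df = spark.createDataFrame(rows, FEATURE_COLS + [LABEL_COL])
    # Spark ML estimators read their inputs from a single vector column.
    assembler = VectorAssembler(inputCols=FEATURE_COLS, outputCol="features")
    tree = DecisionTreeClassifier(
        labelCol=LABEL_COL, featuresCol="features", maxDepth=max_depth
    )
    return tree.fit(assembler.transform(df))


def print_rules(spark):
    """Print the fitted tree as nested If/Else rules."""
    model = fit_frisk_tree(spark)
    # toDebugString refers to features by index: 0 is age, 1 is is_male.
    print(model.toDebugString)
```

With an active session named `spark`, as most notebook environments provide, calling `print_rules(spark)` would print the splits as nested If/Else conditions, which can then be read off as decision rules.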

For other languages, such as R, there is currently no facility to run a decision tree algorithm directly on a Spark dataframe; however, there are other methods that can still yield accurate trees.

We will demonstrate how to first extract a sample from Spark, download it to base R, and run our usual tools, such as rpart. Big datasets will typically contain far more data than a decision tree needs, so it makes sense to sample appropriately...