Hunk is not limited to analytics on data stored in Hadoop. In this chapter, we explore other data sources that Hunk can reach through special integration applications. These come from the https://splunkbase.splunk.com/ portal, which hosts hundreds of published applications. The chapter is devoted to integration schemes between Hunk and Mongo, a popular document-oriented NoSQL store.

Mongo is a popular NoSQL solution, and using it has both pros and cons. It's a great choice when you want simple, fairly fast persistent key-value storage with a convenient JavaScript interface for querying the stored data. We recommend starting with Mongo if you don't really need a strict SQL schema and your data volumes are estimated in terabytes. Mongo is remarkably simple compared with the whole Hadoop ecosystem, so it is probably the right place to start exploring the denormalized NoSQL world.

Mongo is already installed and ready to use; its installation is not described here. We use Mongo version 3.0.

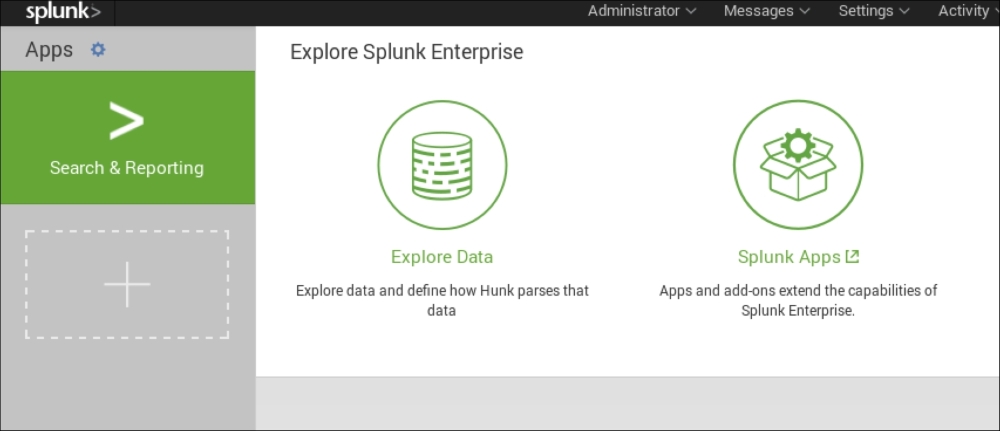

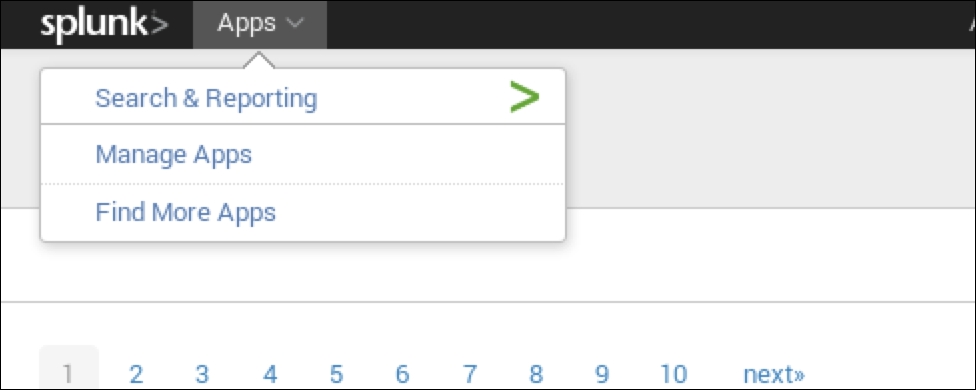

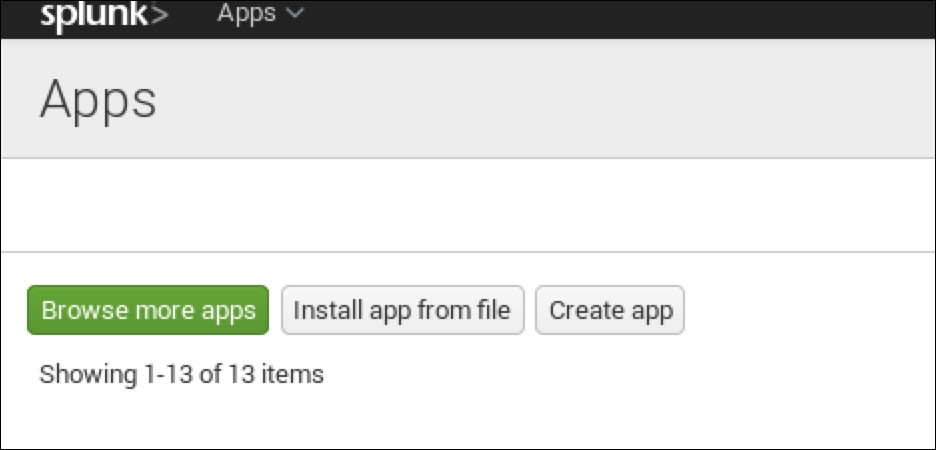

You will install the special Hunk app that integrates Mongo and Hunk.

Visit https://splunkbase.splunk.com/app/1810/#/documentation and download the app. Use the browser inside the VM to download it.

We can access our daily data, stored in separate collections and virtual indexes, by using an index name pattern. Let's count the events in each collection and sort the results by collection size.

Use this expression:

index=clicks_2015_* | stats count by index | sort - count
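To make the semantics of `stats count by index | sort - count` concrete, here is a small Python model of the same aggregation: count the events per index, then order the rows by that count, largest first. It is only a sketch and not how Hunk executes the search. The events and index names are hypothetical toy data, and `count_by_index` is an illustrative helper, not part of any Splunk API.

```python
from collections import Counter

# Hypothetical toy events; each dict records the virtual index it came from.
# These index names and counts are illustrative only, not the book's data.
events = [
    {"index": "clicks_2015_02_01"},
    {"index": "clicks_2015_02_05"},
    {"index": "clicks_2015_02_05"},
    {"index": "clicks_2015_02_02"},
    {"index": "clicks_2015_02_05"},
    {"index": "clicks_2015_02_02"},
]


def count_by_index(events):
    """Model `stats count by index | sort - count`: count events per index,
    then return (index, count) rows ordered by count, largest first."""
    counts = Counter(event["index"] for event in events)
    # Python's sort is stable even with reverse=True, so indexes with equal
    # counts keep the order in which they were first seen.
    return sorted(counts.items(), key=lambda row: row[1], reverse=True)


for index, count in count_by_index(events):
    print(index, count)
```

In the real search, Hunk does the counting over the Mongo-backed virtual indexes; the sketch only shows what the two pipeline stages compute.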

We can see a trend: users visit shops more often on working days (February 1, 2015 was a Sunday and February 5 was a Thursday), so we get more clicks from them on those days.
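The weekday claim is easy to verify with Python's standard `datetime` module. The helper names `weekday_name` and `is_working_day` are illustrative, and treating Monday through Friday as working days is an assumption of this sketch.

```python
from datetime import date

# Fixed English names, so the result does not depend on the system locale.
WEEKDAY_NAMES = ["Monday", "Tuesday", "Wednesday", "Thursday",
                 "Friday", "Saturday", "Sunday"]


def weekday_name(d):
    """Return the English weekday name; date.weekday() gives Monday == 0."""
    return WEEKDAY_NAMES[d.weekday()]


def is_working_day(d):
    """Assumption for this sketch: Monday..Friday are working days."""
    return d.weekday() < 5


for day in (1, 5):
    d = date(2015, 2, day)
    print(d.isoformat(), weekday_name(d), is_working_day(d))
```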

Next is a query related to metadata. Instead of querying one exact index, we use a wildcard to query several indexes at once:

index=clicks_2015_*
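As a rough model of how a wildcard pattern such as `clicks_2015_*` selects several indexes at once, the Python sketch below treats `*` as "any run of characters" and matches every other character literally. This rests on stated assumptions: the index names are hypothetical, `select_indexes` is an illustrative helper, and Splunk's own matching rules (for example, case handling) are not reproduced.

```python
import re


def wildcard_to_regex(pattern):
    """Compile a pattern where `*` matches any run of characters;
    all other characters are escaped and matched literally."""
    parts = (re.escape(part) for part in pattern.split("*"))
    return re.compile(".*".join(parts))


def select_indexes(pattern, index_names):
    """Return the index names the whole pattern matches, in input order."""
    regex = wildcard_to_regex(pattern)
    return [name for name in index_names if regex.fullmatch(name)]


# Hypothetical virtual index names, for illustration only.
available = ["clicks_2015_02_01", "clicks_2015_02_05",
             "clicks_2014_12_31", "orders_2015_02_01"]

print(select_indexes("clicks_2015_*", available))
```

Using `fullmatch` means the pattern must cover the entire index name, so `clicks_2015_*` does not pick up `orders_2015_02_01` or indexes from 2014.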

© 2015 Packt Publishing Limited All Rights Reserved