Chapter 2: Getting Started with the Azure Cloud

In the first chapter, we covered the history of, and the ideas behind, virtualization and cloud computing. After that, you read about the Microsoft Azure cloud. This chapter will help you take your first steps into the world of Azure, get access to Azure, explore the different Linux offerings, and deploy your first Linux virtual machine.

After deployment, you will need to access your virtual machine using Secure Shell (SSH), with either password authentication or an SSH key pair.

To take the first steps on your journey into the Azure cloud, it is important to complete all the exercises and examine the outcomes. In this chapter, we will be using PowerShell as well as the Azure CLI. Feel free to follow whichever you're comfortable with; however, learning both won't hurt. The key objectives of this chapter are:

- Setting up your Azure account.

- Logging in to Azure using the Azure CLI and PowerShell.

- Interacting...

Technical Requirements

If you want to try all the examples in this chapter, you'll need a browser at the very least. For stability reasons, it's important to use a very recent version of a browser. Microsoft offers a list of supported browsers in the official Azure documentation:

- Microsoft Edge (latest version)

- Internet Explorer 11

- Safari (latest version, Mac only)

- Chrome (latest version)

- Firefox (latest version)

Based on personal experience, we recommend using Google Chrome or a browser based on a recent version of its engine, such as Vivaldi.

You can do all the exercises in your browser, even the exercises involving the command line. In practice, it's a good idea to use a local installation of the Azure CLI or PowerShell; it's faster, it's easier to copy and paste code, and you can save history and the output from the commands.

Getting Access to Azure

To start with Azure, the first thing you'll need is an account. Go to https://azure.microsoft.com and sign up for a free account, or use a corporate account that your organization already has. Another possibility is to use Azure with a Visual Studio Professional or Enterprise subscription, which gives you Microsoft Developer Network (MSDN) credits for Azure. If your organization already has an Enterprise Agreement with Microsoft, you can use your Enterprise subscription, or you can sign up for a pay-as-you-go subscription (if you have already used your free trial).

If you are using a free account, you'll get some credits to start with, free access to some popular services for a limited time, and some services that are always free, such as the container service. You can find the most recent list of free services at https://azure.microsoft.com/en-us/free. You won't be charged during the trial period, except for virtual machines that need additional...

Linux and Azure

Linux is almost everywhere: on many kinds of devices and in many different environments. It comes in many flavors, and the choice of which to use is yours. So, what do you choose? There are many questions, and many answers are possible. But one thing's for sure: in corporate environments, support is important.

Linux distributions

As noted earlier, there are many different Linux distributions around, and there are good reasons for having so many options:

- A Linux distribution is a collection of software. Some collections serve a specific purpose. A good example of such a distribution is Kali Linux, an advanced Linux distribution for penetration testing.

- Linux is a multi-purpose operating system. Thanks to the large number of customization options we have for Linux, if you don't want a particular package or feature on your operating system, you can remove it and add your own. This is one of the primary reasons...

Deploying a Linux Virtual Machine

We've covered the Linux distributions available in Azure and the level of support you can get. In the previous section, we set up the initial environment by creating the resource group and the storage account; now it's time to deploy our first virtual machine.

Your First Virtual Machine

The resource group has been created, a storage account has been created in this resource group, and now you are ready to create your first Linux virtual machine in Azure.

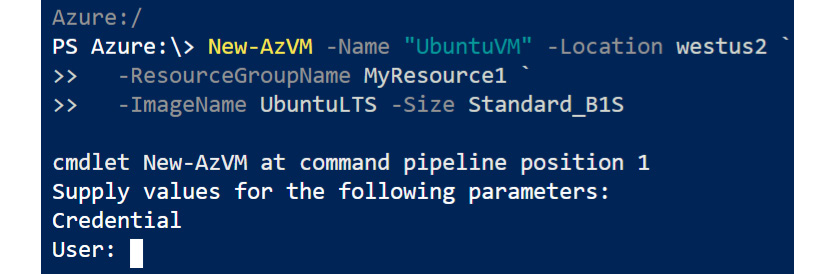

In PowerShell, use the following command:

New-AzVM -Name "UbuntuVM" -Location westus2 `
  -ResourceGroupName MyResource1 `
  -ImageName UbuntuLTS -Size Standard_B1S

The cmdlet will prompt you to provide a username and password for your virtual machine:

Figure 2.4: Providing user credentials for your virtual machine

In Bash, you can use the following command:

az vm create --name UbuntuVM --resource-group MyResource2...

Connecting to Linux

The virtual machine is running and ready for you to log in remotely using the credentials (username and password) you provided during the deployment of your first virtual machine. A more secure way to connect to your Linux virtual machine is to use an SSH key pair. SSH keys are more secure because of their complexity and length. In addition, Linux virtual machines on Azure support sign-in with Azure Active Directory (Azure AD), which lets users authenticate with their Azure AD credentials.
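The key-pair approach can be sketched in Bash, assuming OpenSSH's ssh-keygen is installed locally. This minimal example creates a 4096-bit RSA key pair without a passphrase in a temporary directory, so it doesn't overwrite any keys you already have in ~/.ssh; the file name azure_demo_rsa is a hypothetical choice.

```shell
#!/usr/bin/env bash
# Sketch: generate an RSA key pair non-interactively for use with a Linux VM.
set -euo pipefail

keydir="$(mktemp -d)"              # scratch directory, avoids touching ~/.ssh
keyfile="$keydir/azure_demo_rsa"   # hypothetical file name

# -t rsa -b 4096: 4096-bit RSA key
# -N "": empty passphrase (demo only)
# -f: output path, -q: suppress the banner and randomart
ssh-keygen -t rsa -b 4096 -N "" -f "$keyfile" -q

# The private key is written to $keyfile, the public key to $keyfile.pub
ls "$keydir"

# Print the fingerprint of the public key
ssh-keygen -l -f "$keyfile.pub"
```

The public key (the .pub file) is what goes onto the virtual machine, for example through the --ssh-key-values parameter of az vm create, while the private key stays on your workstation and can be selected with ssh -i. For real use, protect the private key with a passphrase instead of passing an empty one.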

Logging in to Your Linux Virtual Machine Using Password Authentication

In the Virtual Machine Networking section, the public IP address of a virtual machine was queried. We will be using this public IP to connect to the virtual machine via SSH using an SSH client installed locally.
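The two steps described here, looking up the public IP address and connecting with a local SSH client, can be sketched as two Bash functions. This assumes the Azure CLI is installed, that you're logged in, and that the virtual machine accepts password logins (the assumption in this section). The resource group MyResource2 and the name UbuntuVM come from the earlier az vm create example; the username azureuser is a hypothetical placeholder for the one you chose during deployment.

```shell
#!/usr/bin/env bash
# Sketch: placeholder names; adjust them to match your own deployment.
set -euo pipefail

# Print the public IP address of a virtual machine.
# --show-details adds runtime properties, such as publicIps, to the output.
get_public_ip() {
  local rg="$1" vm="$2"
  az vm show --resource-group "$rg" --name "$vm" \
    --show-details --query publicIps --output tsv
}

# Open an SSH session that prompts for a password instead of trying keys.
connect_with_password() {
  local user="$1" ip="$2"
  ssh -o PreferredAuthentications=password \
      -o PubkeyAuthentication=no \
      "${user}@${ip}"
}

# Example usage (requires a deployed VM, so it is not run here):
#   ip="$(get_public_ip MyResource2 UbuntuVM)"
#   connect_with_password azureuser "$ip"
```

Disabling public key authentication in the ssh call simply forces the password prompt, which mirrors this section; once you switch to key pairs, you would drop those options and pass the private key with ssh -i.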

SSH, or Secure Shell, is an encrypted network protocol used to manage and communicate with servers. Linux, macOS, Windows Subsystem for Linux (WSL), and the recent update of Windows 10 come with...

Summary

This chapter covered the first steps into Microsoft Azure. The first step always involves creating a new account or using an existing company account. With an account, you're able to log in and start discovering the Azure cloud.

In this chapter, you explored the Azure cloud using the Azure CLI command az or PowerShell; example by example, you learned about the following:

- The Azure login process

- Regions

- The storage account

- Images provided by publishers

- The creation of virtual machines

- Querying information attached to a virtual machine

- What Linux is and the support available for Linux virtual machines

- Accessing your Linux virtual machine using SSH and an SSH key pair

The next chapter picks up from here and starts a new journey: the Linux operating system.

Questions

- What are the advantages of using the command line to access Microsoft Azure?

- What is the purpose of a storage account?

- Can you think of a reason why you would get the following error message?

Code=StorageAccountAlreadyTaken Message=The storage account named mystorage is already taken.

- What is the difference between an offer and an image?

- What is the difference between a stopped and a deallocated virtual machine?

- What is the advantage of using the private SSH key for authentication to your Linux virtual machine?

- The az vm create command has a --generate-ssh-keys parameter. Which keys are created, and where are they stored?

Further Reading

By no means is this chapter a tutorial on using PowerShell. But if you want a better understanding of the examples, or want to learn more about PowerShell, we recommend Mastering Windows PowerShell Scripting – Second Edition (ISBN: 9781787126305), published by Packt Publishing. We suggest that you start with the second chapter, Working with PowerShell, and continue until at least the fourth chapter, Working with Objects in PowerShell.

You can find plenty of online documentation about using SSH. A very good place to start is this wikibook: https://en.wikibooks.org/wiki/OpenSSH.

If you wish to explore more about Linux administration, Linux Administration Cookbook by Packt Publishing is a good resource, especially for system engineers.

To dive deep into security and admin tasks, this is a good read: Mastering Linux Security and Hardening, written by Donald A. Tevault and published by Packt Publishing.
