The driverless car is popularly known as a self-driving car (SDC), an autonomous vehicle, or a robot car. The purpose of an autonomous car is to drive without a human driver. The SDC is a sleeping giant that might improve everything from road safety to universal mobility, while dramatically reducing the costs of driving. According to McKinsey & Company, the widespread use of robotic cars in the US could save up to $180 billion annually in healthcare and automotive maintenance alone, based on a realistic estimate of a 90% reduction in crash rates.

Although self-driving automotive technologies have been in development for many decades, it is only in recent years that breakthroughs have been achieved. SDCs have shown the potential to be much safer than human drivers, and automotive firms as well as other tech firms are investing billions in bringing this technology into the real world. These firms struggle to find great engineers to contribute to the field. This book will teach you what you need to know to kick-start a career in the autonomous driving industry. Whether you're coming from academia or from within the industry, this book will provide you with the foundational knowledge and practical skills you will need to help build this future as an Advanced Driver-Assistance Systems (ADAS) engineer or an SDC engineer. Throughout this book, you will study real-world data and scenarios from recent research in autonomous cars.

This book can also help you learn and implement the state-of-the-art technologies for computer vision that are currently used in the automotive industry in the real world. By the end of this book, you will be familiar with different deep learning and computer vision techniques for SDCs. We'll finish this book off with six projects that will give you a detailed insight into various real-world issues that are important to SDC engineers.

In this chapter, we will cover the following topics:

- Introduction to SDCs

- Advancements in SDCs

- Levels of autonomy

- Deep learning and computer vision approaches for SDCs

Let's get started!

Introduction to SDCs

The following is an image of an SDC by Waymo undergoing testing in Los Altos, California:

You can check out the image at https://en.wikipedia.org/wiki/File:Waymo_Chrysler_Pacifica_in_Los_Altos,_2017.jpg.

The idea of the autonomous car has existed for decades, but we saw enormous improvement from 2002 onward, when the Defense Advanced Research Projects Agency (DARPA) announced the first of its grand challenges, the DARPA Grand Challenge, which would forever change the world's perception of what autonomous robots can do. The first event was held in 2004, and DARPA offered a one-million-dollar prize to any team that could build an autonomous vehicle able to navigate 142 miles through the Mojave Desert. Although the first event saw only a few teams get off the start line (Carnegie Mellon's Red Team went the farthest, having driven only 7 miles), it was clear that the task of driving without any human aid was indeed possible. In the second DARPA Grand Challenge in 2005, five of the 23 teams smashed expectations and successfully completed the track without any human intervention at all. Stanford's vehicle, Stanley, won the challenge, followed by Carnegie Mellon's vehicle, Sandstorm. With this, the era of driverless cars had arrived.

Later, the 2007 installment, called the DARPA Urban Challenge, invited universities to show off their autonomous vehicles on roads made busy by professional stunt drivers. This time, after a harrowing 30-minute delay that occurred due to a jumbotron screen blocking their vehicle from receiving GPS signals, the Carnegie Mellon team came out on top, while Stanford's vehicle, Junior, came second.

Collectively, these three grand challenges were truly a watershed moment in the development of SDCs, changing the way the public (and more importantly, the technology and automotive industries) thought about the feasibility of full vehicular autonomy. It was now clear that a massive new market was opening up, and the race was on. Google immediately brought in the team leads from both Carnegie Mellon and Stanford (Chris Urmson and Mike Montemerlo, respectively) to push their designs onto public roads. By 2010, Google's SDC had logged over 140,000 miles in California, and the company wrote in a blog post that it was confident about cutting the number of traffic deaths by half using SDCs (see What we're driving at, by Sebastian Thrun, on the Google blog: https://googleblog.blogspot.com/2010/10/what-were-driving-at.html).

As per a study released by the Virginia Tech Transportation Institute (VTTI) and the National Highway Traffic Safety Administration (NHTSA), 80% of car accidents involve human distraction (https://seriousaccidents.com/legal-advice/top-causes-of-car-accidents/driver-distractions/). An SDC can, therefore, become a useful and safe solution for the whole of society to reduce these accidents. In order to propose a path that an intelligent car should follow, we require several software applications to process data using artificial intelligence (AI).

Google succeeded in creating the world's first autonomous car 2 years ago (at the time of writing). The problem with Google's car was its expensive 3D LIDAR, which cost about $75,000.

The solution to this cost is to use multiple, cheaper cameras mounted on the car. Their images can be used to recognize the lane lines on the road, as well as the real-time position of the car.

In addition, driverless cars can reduce the distance between cars, thereby reducing the load on roads and the number of traffic jams. Furthermore, they greatly reduce the scope for human error while driving and allow people with disabilities to travel long distances.

A machine driver does not get tired or distracted, and it can calculate the distance between cars very accurately. Parking will be more efficiently spaced, and the fuel consumption of cars will be optimized.

The driverless car is a vehicle equipped with sensors and cameras for detecting the environment, and it can navigate (almost) without any real-time input from a human. Many companies are investing billions of dollars in order to advance this toward an accessible reality. Now, a world where AI takes control of driving has never been closer.

Nowadays, self-driving car engineers are exploring several different approaches in order to develop an autonomous system. The most successful and popularly used among them are as follows:

- The robotics approach

- The deep learning approach

In reality, in the development of SDCs, both robotics and deep learning methods are being actively pursued by developers and engineers.

The robotic approach works by fusing output from a set of sensors to analyze a vehicle's environment directly and prompt it to navigate accordingly. For many years, self-driving automotive engineers have been working on and improving robotic approaches. However, more recently, engineering teams have started developing autonomous vehicles using a deep learning approach.

Deep neural networks enable SDCs to learn how to drive by imitating the behavior of human driving.
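The idea of learning to drive by imitation can be illustrated with a deliberately tiny sketch. It is not how a production SDC is trained: instead of camera images and a deep network, it assumes a single hypothetical observation (the car's lateral offset from the lane center) and fits a one-parameter linear policy to made-up human demonstrations. The function names and data are illustrative assumptions only.

```python
# Minimal behavioral-cloning sketch (illustrative only).
# Assumption: the observation is one number, the lateral offset from the
# lane center in meters, and the expert action is the steering angle a
# human chose for that offset. Real systems learn from images with deep
# neural networks rather than this one-parameter linear policy.

def fit_linear_policy(offsets, steering_angles):
    """Least-squares fit of steering = gain * offset (no intercept)."""
    numerator = sum(o * s for o, s in zip(offsets, steering_angles))
    denominator = sum(o * o for o in offsets)
    if denominator == 0:
        raise ValueError("need at least one non-zero offset")
    return numerator / denominator


def policy(gain, offset):
    """Predict a steering angle for a new observation."""
    return gain * offset


# Hypothetical demonstrations: the human steers against the offset.
demo_offsets = [-1.0, -0.5, 0.0, 0.5, 1.0]
demo_steering = [0.20, 0.10, 0.0, -0.10, -0.20]

gain = fit_linear_policy(demo_offsets, demo_steering)
predicted = policy(gain, 0.25)  # steering for an offset not in the demos
```

The learned policy reproduces the human's behavior on the demonstrations and interpolates to offsets it has not seen, which is the essence of imitation learning; a deep network plays the role of `fit_linear_policy` when the observations are images.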

The five core components of SDCs are computer vision, sensor fusion, localization, path planning, and control of the vehicle.

In the following diagram, we can see the five core components of SDCs:

Let's take a brief look at these core components:

- Computer vision can be considered the eyes of the SDC, and it helps the car figure out what the world around it looks like.

- Sensor fusion is a way of incorporating the data from various sensors such as RADAR, LIDAR, and laser sensors to gain a deeper understanding of the car's surroundings.

- Once we have a deeper understanding of what the world around the car looks like, we want to know where the car is in that world, and localization helps with this.

- After understanding what the world looks like and where the car is in it, we want to know where we would like to go, so we use path planning to chart the course of our travel. Path planning produces the trajectory that the vehicle then executes.

- Finally, control helps with turning the steering wheel, changing the car's gears, and applying the brakes.

Getting the car to autonomously follow the path you want requires a lot of effort, but researchers have made this possible with the help of advanced systems engineering. Details regarding systems engineering will be provided later in this chapter.
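To make the sensor fusion component more concrete, the following sketch combines two independent range readings of the same object into one estimate using inverse-variance weighting, a standard way of trusting the less noisy sensor more. The sensor values and variances are invented for illustration, and the function name `fuse_estimates` is our own; a real SDC would typically use a Kalman filter or a similar method over many sensors and time steps.

```python
# Inverse-variance weighted fusion of independent measurements.
# Assumption: each sensor reports (value, variance) for the same quantity,
# here the distance in meters to the vehicle ahead, and the errors of the
# sensors are independent of each other.

def fuse_estimates(measurements):
    """Return (fused_value, fused_variance) for a list of (value, variance)."""
    if not measurements:
        raise ValueError("at least one measurement is required")
    if any(variance <= 0 for _, variance in measurements):
        raise ValueError("variances must be positive")
    weights = [1.0 / variance for _, variance in measurements]
    total_weight = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, measurements))
    fused_value /= total_weight
    return fused_value, 1.0 / total_weight


# Hypothetical readings: a noisier sensor and a more precise one.
radar_reading = (30.0, 1.0)    # meters, variance in square meters
lidar_reading = (29.0, 0.25)

distance, variance = fuse_estimates([radar_reading, lidar_reading])
```

The fused distance lands closer to the more precise reading, and the fused variance is smaller than either input variance, which is why combining sensors gives a better picture than any single sensor.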

Benefits of SDCs

Indeed, some people may be afraid of autonomous driving, but it is hard to deny its benefits. Let's explore a few of the benefits of autonomous vehicles:

- Greater safety on roads: Government data identifies driver error as the cause of 94% of crashes (https://crashstats.nhtsa.dot.gov/Api/Public/ViewPublication/812115). Higher levels of autonomy can reduce accidents by eliminating driver errors. The most significant outcome of autonomous driving could be to reduce the devastation caused by unsafe driving, and in particular, driving under the influence of drugs or alcohol. Furthermore, it could reduce the heightened risks posed by unbelted occupants, by vehicles traveling at higher speeds, and by the distractions that affect human drivers. SDCs will address these issues and increase safety, which we will see in more detail in the Levels of autonomy section of this chapter.

- Greater independence for those with mobility problems: Full automation generally offers us more personal freedom. People with special needs, particularly those with mobility limitations, will be more self-reliant. People with limited vision who may be unable to drive themselves will be able to access the freedom afforded by motorized transport. These vehicles can also play an essential role in enhancing the independence of senior citizens. Furthermore, mobility will also become more affordable for people who cannot afford it as ride-sharing will reduce personal transportation costs.

- Reduced congestion: Using SDCs could address several causes of traffic congestion. Fewer accidents will mean fewer backups on the highway. More efficient, safer distances between vehicles and a reduction in the number of stop-and-go waves will reduce the overall congestion on the road.

- Reduced environmental impact: Most autonomous vehicles are designed to be fully electric, which gives them the potential to reduce fuel consumption and carbon emissions. Automation can also cut the fuel wasted and the greenhouse gases emitted through unnecessary engine idling.

There are, however, potential disadvantages to SDCs:

- The loss of vehicle-driving jobs in the transport industry as a direct impact of the widespread adoption of automated vehicles.

- Loss of privacy, because the location and position of an SDC are shared through a connected interface. If other people can access this information, it can be misused to commit crimes.

- A risk of automotive hacking, particularly when vehicles communicate with each other.

- The risk of terrorist attacks also exists; there is a real possibility of SDCs, charged with explosives, being used as remote car bombs.

Given these disadvantages, automobile companies, along with governments, need to come up with solutions to the aforementioned issues before we can have fully automated cars on the roads.

Advancements in SDCs

The idea of SDCs on our streets seemed like a crazy sci-fi fantasy until just a few years ago. However, the rapid progress made in recent years in both AI and autonomous technology shows that the SDC is becoming a reality. But while this technology appears to have emerged virtually overnight, it has been a long and winding path to achieving the self-driving vehicles of today. In reality, it wasn't long after the invention of the motor car that inventors started thinking about autonomous vehicles.

In 1925, former US army electrical engineer and founder of The Houdina Radio Control Co., Francis P Houdina, developed a radio-operated automobile. He equipped a Chandler motor car with a transmitting antenna and operated it from a second car that followed it using a transmitter.

In the early 1990s, Dean Pomerleau, then a PhD researcher at Carnegie Mellon University, did something interesting in the field of SDCs. Firstly, he described how neural networks could allow an SDC to take images of the road and predict steering control in real time. Then, in 1995, along with his fellow researcher Todd Jochem, he took an SDC that they had created out on the road. Although their SDC required driver control of the speed and brakes, it traveled around 2,797 miles.

Then came the grand challenge announced by DARPA in 2002, which we discussed previously. This competition offered a $1 million prize to any researcher who could build a driverless vehicle. It was stipulated that the vehicle should be able to navigate 142 miles through the Mojave Desert. The challenge kicked off in 2004, but none of the competitors were able to complete the course. The team that went the farthest traveled less than 8 miles in a couple of hours.

In the early 2000s, when the autonomous car was still futuristic, self-parking systems began to evolve. Toyota's Japanese Prius hybrid vehicle started offering automatic parking assistance in 2003. This was later followed by BMW and Ford in 2009.

Google secretly started an SDC project in 2009. The project was initially led by Sebastian Thrun, the former director of the Stanford Artificial Intelligence Laboratory and co-inventor of Google Street View. The project is now called Waymo. In August 2012, Google revealed that its driverless car had driven 300,000 miles without a single accident.

Since the 1980s, various companies such as General Motors, Ford, Mercedes-Benz, Volvo, Toyota, and BMW have started working on their own autonomous vehicles. As of 2019, 29 US states have passed legislation enabling autonomous vehicles.

Nvidia Xavier is an SDC chip that has incorporated AI capabilities. Nvidia also announced a collaboration with Volkswagen to transform this dream into a reality, by developing AI for SDCs.

On this journey to becoming a reality, the driverless car has launched into a frenzied race that includes tech companies and start-ups, as well as traditional automakers.

The market for self-driving vehicles, including cars and trucks, is categorized by application into transport and defense. The transportation segment, which is expected to lead in the future, is further divided into industrial, commercial, and consumer applications.

The SDC and self-driving truck market size is estimated at 6.7 thousand units globally in 2020 and is expected to increase at a compound annual growth rate (CAGR) of 63.1% from 2021 to 2030.
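To get a feel for what a 63.1% CAGR implies, the compounding can be worked through in a few lines. This is only a sketch under stated assumptions: it treats the 2020 estimate of 6.7 as the base value, assumes growth compounds once per year, and counts 2021 to 2030 as ten compounding periods. The function name `project` is our own.

```python
# Compound annual growth: value_n = base * (1 + rate) ** n
# Assumptions: 6.7 is the 2020 base value, the rate is applied once per
# year, and 2021 through 2030 gives ten compounding periods.

def project(base_value, annual_growth_rate, years):
    """Project a value forward by a whole number of years."""
    return base_value * (1.0 + annual_growth_rate) ** years


base_2020 = 6.7
cagr = 0.631

# Year-by-year projection, in the same unit as the base value.
projection = {2020 + n: project(base_2020, cagr, n) for n in range(11)}

for year, value in projection.items():
    print(f"{year}: {value:,.1f}")
```

Because the rate compounds, the projected figure grows more than a hundredfold over the decade, which shows how aggressive this forecast is.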

It is expected that the highest adoption of driverless vehicles will be in the US, due to increasing government support for bringing autonomous vehicles to market. The US transportation secretary, Elaine Chao, signaled strong support for SDCs on January 7, 2020, at the CES tech conference in Las Vegas, which is organized by the Consumer Technology Association.

Additionally, it is expected that Europe will also emerge as a potentially lucrative market for technological advancements in self-driving vehicles with increasing consumer preference.

In the next section, we will learn about the challenges in current deployments of autonomous driving.

Challenges in current deployments

Companies have started public testing of autonomous taxi services in the US. These vehicles are often driven at low speeds and nearly always with a safety driver.

A few of these autonomous taxi services are listed in the following table:

| Company | Testing location |
| --- | --- |
| Voyage | The Villages, Florida |
| Drive.ai | Arlington, Texas |
| Waymo One | Phoenix, Arizona |
| Uber | Pittsburgh, PA |
| Aurora | San Francisco and Pittsburgh |
| Optimus Ride | Union Point, MA |
| May Mobility | Detroit, Michigan |
| Nuro | Scottsdale, Arizona |
| Aptiv | Las Vegas, Boston, Pittsburgh, and Singapore |
| Cruise | San Francisco, Arizona, and Michigan |

The fully autonomous vehicle announcements (including testing and beyond) are listed in the following table:

| Automaker | Announced timeline |
| --- | --- |
| Tesla | Expected in 2019/2020 |
| Honda | Expected in 2020 |
| Renault-Nissan | Expected in 2020 (for urban areas) |
| Volvo | Expected in 2021 (for highways) |
| Ford | Expected in 2021 |
| Nissan | Expected in 2020 |
| Daimler | Expected between 2021 and 2025 |
| Hyundai | Expected in 2021 (for highways) |
| Toyota | Expected in 2020 (for highways) |
| BMW | Expected in 2021 |
| Fiat-Chrysler | Expected in 2021 |

However, despite these advances, there is one question we must ask: SDC development has existed for decades, but why is it taking so long to become a reality? The reason is that there are lots of components to SDCs, and the dream can only become a reality with the proper integration of these components. So, what we have today is multiple prototypes of SDCs from multiple companies to showcase their promising technologies.

The key ingredients or differentiators of SDCs are the sensors, hardware, software, and algorithms that are used. Lots of system and software engineering is required to bring all these four differentiators together. Even the choice of these differentiators plays an important role in SDC development.

In this section, we will cover existing deployments and their associated challenges in SDCs. Tesla has recently revealed its advancements and the research it has conducted on SDCs. Currently, most Tesla vehicles are capable of supplementing the driver's abilities. They can take over the tedious task of maintaining lanes on highways, monitor and match the speeds of surrounding vehicles, and can even be summoned to you while you are not in the vehicle. These capabilities are impressive and, in some cases, even life-saving, but they are still far from a full SDC. Tesla's current system still requires regular input from the driver to ensure they are paying attention and capable of taking over when needed.

There are four primary challenges that automakers such as Tesla need to overcome in order to succeed in replacing the human driver. We'll go over these now.

Building safe systems

The first one is building a safe system. In order to replace human drivers, the SDC needs to be safer than a human driver. So, how do we quantify that? It is impossible to guarantee that accidents will not occur without real-world testing, and that testing itself carries an innate risk.

We can start by quantifying how good human drivers are. In the US, the current fatality rate is about one death per one million hours of driving. This includes human error and irresponsible driving, so we can probably hold the vehicles to a higher standard, but that's the benchmark nonetheless. Therefore, the SDC needs to have fewer fatalities than one per million hours of driving, and currently, that is not the case. We do not have enough data to calculate accurate statistics here, but we do know that Uber's SDC required a human to intervene approximately every 19 kilometers (km). The first pedestrian fatality was reported in 2018, when a pedestrian was hit by Uber's autonomous test vehicle.

The car was in self-driving mode, with a human backup driver sitting in the driving seat. Uber halted testing of SDCs in Arizona, where such testing had been approved since August 2016, and opted not to extend its California self-driving trial permit when it expired at the end of March 2018. The vehicle that hit the pedestrian was using LIDAR sensors, which, unlike camera sensors, do not depend on ambient light. However, the test vehicle made no effort to slow down, and the human backup driver in the vehicle was not paying attention.

According to the data obtained by Uber, the vehicle first observed the pedestrian with its RADAR and LIDAR sensors 6 seconds before the impact. At that time, the vehicle was traveling at 70 kilometers per hour. The vehicle continued at the same speed, and as the paths of the pedestrian and the car converged, the classification algorithm kept trying to classify the object in its view. The system switched its identification from an unidentified object, to a car, to a cyclist, without ever predicting the path of the pedestrian. Only 1.3 seconds before the crash did the vehicle recognize the pedestrian. An emergency braking maneuver was required, but the vehicle did not perform one because it was programmed not to brake in this situation.

As per the algorithm's prediction, the emergency maneuver called for a deceleration of more than 6.5 meters per second squared. The human operator was expected to intervene, but the vehicle was not designed to alert the driver. The driver did intervene at the last moment by engaging the steering wheel and braking, bringing the vehicle's speed down to 62 kilometers per hour, but it was too late to save the pedestrian. Nothing malfunctioned in the car and everything worked as planned, but it was clearly a case of bad programming. In this case, the internal computer was clearly not programmed to deal with this uncertainty, whereas a human would normally slow down when confronted with an unknown hazard. Even with high-resolution LIDAR, the vehicle failed to recognize the pedestrian in time.
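The figures quoted above (70 kilometers per hour, 6 seconds, 1.3 seconds, and 6.5 meters per second squared) can be put side by side with some simple kinematics. The following is a back-of-the-envelope sketch, not a reconstruction of the incident: it assumes constant speed until braking starts, a constant deceleration of exactly 6.5 meters per second squared, and no actuation or reaction delay. The function names are our own.

```python
# Back-of-the-envelope braking arithmetic using the figures from the text.
# Assumptions: constant speed before braking, constant deceleration while
# braking, and no delay between the decision to brake and braking itself.

KMH_TO_MS = 1000.0 / 3600.0  # kilometers per hour to meters per second


def stopping_distance(speed_kmh, deceleration_ms2):
    """Distance in meters needed to stop: v^2 / (2 * a)."""
    speed_ms = speed_kmh * KMH_TO_MS
    return speed_ms ** 2 / (2.0 * deceleration_ms2)


def distance_travelled(speed_kmh, seconds):
    """Distance in meters covered at constant speed."""
    return speed_kmh * KMH_TO_MS * seconds


speed_kmh = 70.0
deceleration = 6.5  # meters per second squared

needed_to_stop = stopping_distance(speed_kmh, deceleration)
gap_at_first_detection = distance_travelled(speed_kmh, 6.0)
gap_at_recognition = distance_travelled(speed_kmh, 1.3)
```

Under these assumptions, a full stop needs about 29 meters. The car was roughly 117 meters away at first detection, which leaves ample room, but only about 25 meters away when the pedestrian was recognized, which is slightly less than the stopping distance. This is why the early seconds spent reclassifying the object mattered so much.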

The cheapest computer and hardware

Computer and hardware architecture plays a significant role in SDCs. As we know, a large part of an SDC's capability lies in the hardware itself and the programming that goes into it. Tesla unveiled its new, purpose-built computer: a chip specifically optimized for running a neural network. It has been designed to be retrofitted in existing vehicles and is of a similar size and power to the existing self-driving computers. It can process 2,300 frames per second, 2,190 more than the previous iteration's 110, which makes it roughly 21 times (2,100%) as capable. This is a massive performance jump, and that processing power will be needed to analyze footage from the suite of sensors Tesla has.

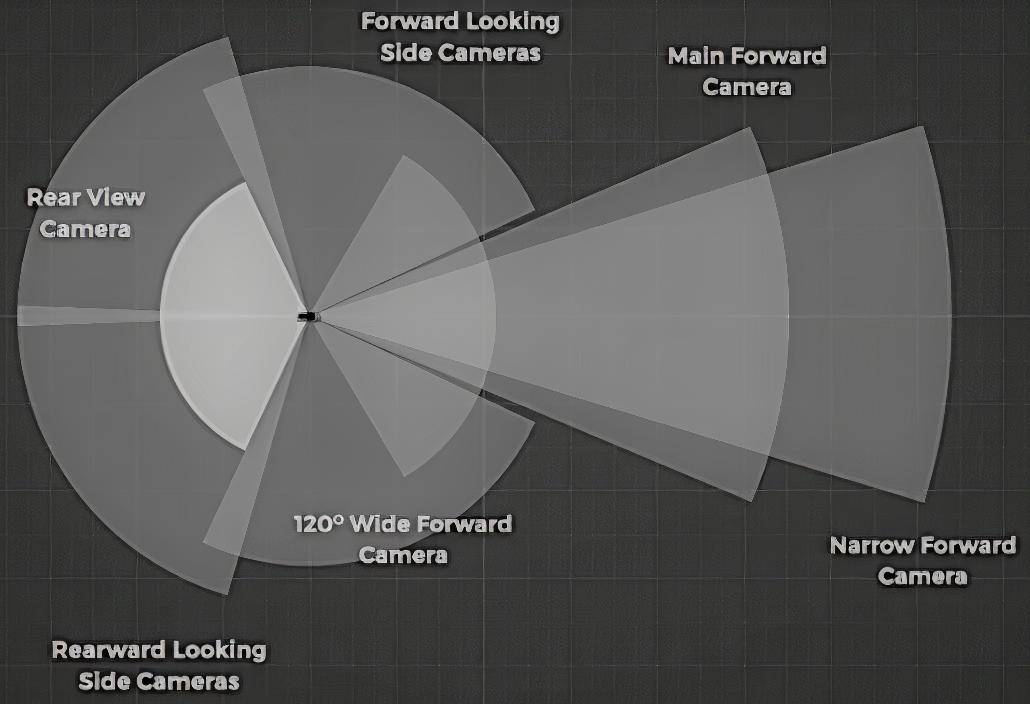

The Tesla autopilot model currently consists of three forward-facing cameras, all mounted behind the windshield. One is a 120-degree wide-angle fish-eye lens, which gives situational awareness by capturing traffic lights and objects moving into the path of travel. The second camera is a narrow-angle lens that provides the longer-range information needed for high-speed driving. The third is the main camera, which sits in the middle of these two cameras. There are four additional cameras on the sides of the vehicle that check for vehicles unexpectedly entering any lane, and provide the information needed to safely enter intersections and change lanes. The eighth and final camera is located at the rear. It doubles as a parking camera, but is also used to avoid crashes from rear hazards.

The vehicle does not completely rely on visual cameras. It also makes use of 12 ultrasonic sensors, which provide a 360-degree picture of the immediate area around the vehicle, and one forward-facing RADAR:

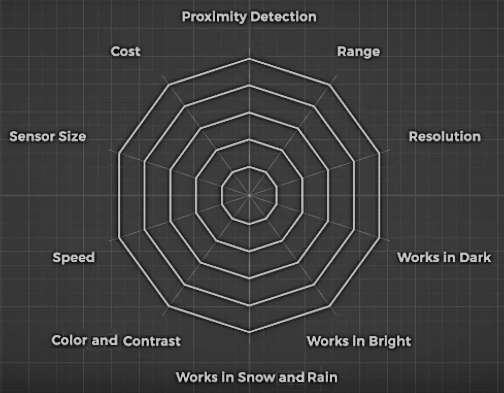

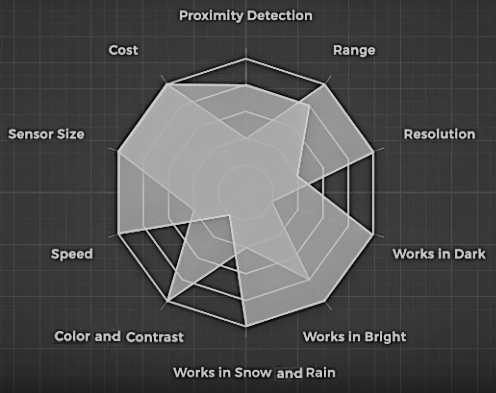

Finding the correct sensor fusion has been a subject of debate among competing SDC companies. Elon Musk recently stated that anyone relying on LIDAR sensors (which work similarly to RADAR but utilize light instead of radio waves) is doomed. To understand why he said this, we will plot the strengths of each sensor on a radar chart, as follows:

LIDAR has great resolution; it provides highly detailed information about what it's detecting. It works in low and high light situations and is also capable of measuring speed. It has a good range and works moderately well in poor weather conditions. Its biggest weakness is that these sensors are expensive and bulky. This is where the second challenge of building an SDC comes into play: building an affordable system that the average person can buy.

Let's look at the following radar chart:

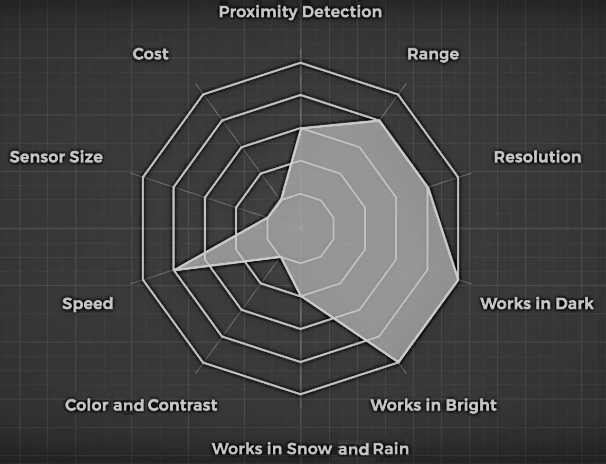

LIDAR sensors are the big sensors we see on the vehicles of Waymo, Uber, and most competing SDC companies. Elon Musk became more aware of LIDAR's potential after SpaceX utilized it in its DragonEye navigation sensor. For now, though, the size and cost of LIDAR are drawbacks for Tesla, which has focused on building not just a cost-effective vehicle, but a good-looking vehicle. Fortunately, LIDAR technology is gradually becoming smaller and cheaper.

Waymo, a subsidiary of Google's parent company Alphabet, sells its LIDAR sensors to any company that does not intend to compete with its plans for a self-driving taxi service. When Waymo started in 2009, the per-unit cost of a LIDAR sensor was around $75,000, but it has managed to reduce this to $7,500 as of 2019 by manufacturing the units itself. Waymo vehicles use four LIDAR sensors, one on each side of the vehicle, placing the total cost of these sensors for a third party at $30,000. This sort of pricing does not line up with Tesla's mission, which is to accelerate the world's move toward sustainable transport. This issue has pushed Tesla toward a cheaper sensor fusion setup.

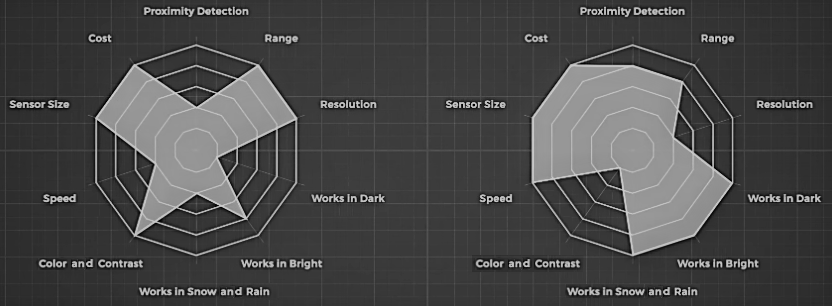

Let's look at the strengths and weaknesses of the three other sensor types (RADAR, camera sensors, and ultrasonic sensors) to see how Tesla is moving forward without LIDAR.

First, let's look at RADAR. It works well in all conditions. RADAR sensors are small and cheap, capable of detecting speed, and their range is good for short- and long-distance detection. Where they fall short is in the low-resolution data they provide, but this weakness can easily be offset by combining RADAR with cameras. The plots for RADAR and for cameras can be seen in the following image:

When we combine the two, this yields the following plot:

This combination has excellent range and resolution, provides color and contrast information for reading street signs, and is extremely small and cheap. Combining RADAR and cameras allows each to cover the weaknesses of the other. They are still weak in terms of proximity detection, but using two cameras in stereo can allow the camera to work like our eyes to estimate distance. When fine-tuned distance measurements are needed, we can use the ultrasonic sensor. An example of an ultrasonic sensor can be seen in the following photo:

These are circular sensors dotted around the car. In Tesla cars, eight surround cameras provide 360 degrees of coverage around the car at a range of up to 250 meters. This vision is complemented by 12 upgraded ultrasonic sensors, which allow the detection of both hard and soft objects at nearly twice the distance of the prior system. A forward-facing RADAR with improved processing offers additional data about the world at a redundant wavelength and can see through heavy rain, fog, dust, and even the car ahead. This is a cost-effective solution. According to Tesla, its hardware is already capable of allowing its vehicles to self-drive. Now, the company just needs to continue improving the software algorithms. Tesla is in a fantastic position to make it work.
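The stereo distance estimate mentioned earlier, where two cameras work like our eyes, rests on a simple relationship: for two parallel, calibrated cameras, depth equals focal length times baseline divided by disparity. The sketch below applies that formula with made-up camera parameters; it assumes an idealized, rectified stereo pair and says nothing about how Tesla actually computes distance.

```python
# Depth from stereo disparity for an idealized, rectified camera pair:
#     depth = focal_length * baseline / disparity
# Assumptions: both cameras are parallel and calibrated, the focal length is
# given in pixels, the baseline (distance between cameras) in meters, and
# the disparity (horizontal shift of the same point between images) in pixels.

def stereo_depth(focal_length_px, baseline_m, disparity_px):
    """Return the distance in meters to a point seen by both cameras."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a visible point")
    return focal_length_px * baseline_m / disparity_px


# Hypothetical camera parameters, chosen only for illustration.
FOCAL_LENGTH_PX = 1000.0
BASELINE_M = 0.3

near_object = stereo_depth(FOCAL_LENGTH_PX, BASELINE_M, disparity_px=30.0)
far_object = stereo_depth(FOCAL_LENGTH_PX, BASELINE_M, disparity_px=15.0)
```

Halving the disparity doubles the estimated depth, so distant objects produce very small disparities and their depth becomes sensitive to pixel-level errors. This is one reason cameras are paired with RADAR for range and with ultrasonic sensors for close-proximity measurements.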

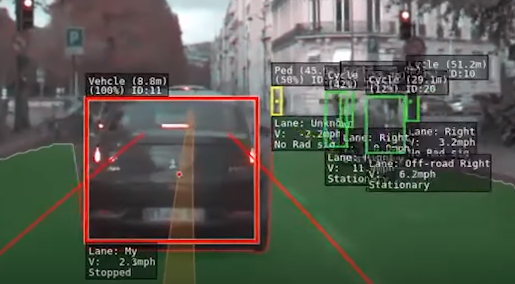

In the following screenshot, we can see the object detection camera sensor used by Tesla cars:

When training a neural network, data is key. Waymo has logged millions of kilometers driven in order to gain data, while Tesla has logged over a billion. Autopilot is engaged for 33% of all driving with Tesla cars, and data collection extends beyond autopilot engagement: Tesla cars also collect data while being driven manually in areas where autopilot is not allowed, such as city streets. Accounting for all the unpredictability of driving requires an immense amount of training for a machine learning algorithm, and this is where Tesla's data gives it an advantage. We will read about neural networks in later chapters. One key point to note is that the more data you have to train a neural network, the better it is going to be. Tesla's machine vision does a decent job, but there are still plenty of gaps there.

The Tesla software places bounding boxes around the objects it detects while categorizing them as cars, trucks, bicycles, and pedestrians. It labels each with its velocity relative to the vehicle and the lane it occupies. It highlights drivable areas, marks the lane dividers, and sets a projected path between them. The software frequently struggles in complicated scenarios, and Tesla is working on improving the accuracy of the models by adding new functionality. Its latest SDC computer is going to radically increase its processing power, which will allow Tesla to continue adding functionality without sacrificing how frequently information is refreshed. However, even if Tesla manages to develop the perfect computer vision application, programming the vehicle to handle every scenario is another hurdle. This is a vital part of building not only a safe vehicle but a practical self-driving vehicle.
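The perception output described above (a bounding box, a category, a relative velocity, and a lane for each object) maps naturally onto a small record type. The following sketch is purely hypothetical: the field names, the lane numbering, and the helper function are our own assumptions and do not reflect Tesla's actual software.

```python
# Hypothetical record for one detected object, mirroring the attributes
# described in the text. Field names and conventions are illustrative only.
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Detection:
    category: str                      # "car", "truck", "bicycle", "pedestrian"
    box: Tuple[int, int, int, int]     # (x_min, y_min, x_max, y_max) in pixels
    relative_velocity_ms: float        # negative means the gap is closing
    lane: int                          # 0 = our lane, -1 = left, +1 = right


def closing_in_our_lane(detections: List[Detection]) -> List[Detection]:
    """Return objects in our own lane whose distance to us is shrinking."""
    return [d for d in detections if d.lane == 0 and d.relative_velocity_ms < 0]


# Made-up frame with three detected objects.
frame = [
    Detection("car", (400, 300, 520, 380), -2.5, 0),
    Detection("truck", (100, 280, 300, 400), 1.0, -1),
    Detection("bicycle", (600, 320, 640, 390), -0.5, 1),
]

hazards = closing_in_our_lane(frame)
```

Downstream components such as path planning would consume a list like `frame` many times per second, which is part of why the processing throughput discussed earlier matters.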

Software programming

Another challenge is programming for safety and practicality, which are often at odds with each other. If we program a vehicle purely for safety, its safest option is not to drive. Driving is an inherently dangerous operation, and programming for the multiple scenarios that arise while driving is an insanely difficult task. It is easy to say follow the rules of the road, but the problem is, humans don't follow the rules of the road perfectly. Therefore, programmers need to enable SDCs to react to this. Sometimes, the computer will need to make difficult decisions and may need to make a decision that involves endangering the life of its occupants or people outside the vehicle. This is a dangerous task, but if we continue improving on the technology, we could start to see reduced road deaths, all while making taxi services drastically cheaper and freeing many people from the financial burden of purchasing a vehicle.

Tesla is in a fantastic position to gradually update their software as they master each scenario. They don't need to create the perfect SDC out of the gate, and with this latest computer, they are going to be able to continue their technological growth.

Fast internet

Finally, for many processes in SDCs, fast internet is required. The 4G network is good for online streaming and playing smartphone games, but when it comes to SDCs, next-generation technology such as 5G is required. Companies such as Nokia, Ericsson, and Huawei are researching how to bring out efficient internet technology to specifically meet the needs of SDCs.

In the next section, we will read about the levels of autonomy of autonomous vehicles, which are defined by the Society of Automotive Engineers (SAE).

Levels of autonomy

Although the terms self-driving and autonomous are often used, not all vehicles have the same capabilities. The automotive industry uses the Society of Automotive Engineers' (SAE's) autonomy scale to determine various levels of autonomous capacity. The levels of autonomy help us understand where we stand in terms of this fast-moving technology.

In the following sections, we will provide simple explanations of each autonomy level.

Level 0 – manual cars

In level 0 autonomy, both the steering and the speed of the car are controlled by the driver. Level 0 autonomy may include issuing a warning to the driver, but the vehicle itself does not take any sustained control action.

Level 1 – driver support

In level 1 autonomy, the driver of the car takes care of controlling most of the features of the car. They monitor the surrounding environment and handle acceleration, braking, and steering. An example of level 1 is that if the vehicle gets too close to another vehicle, it will apply the brake automatically.

Level 2 – partial automation

In level 2 autonomy, the vehicle will be partially automated. The vehicle can take over both the steering and the acceleration, relieving the driver of a few basic tasks. However, the driver is still required in the car for monitoring critical safety functions and environmental factors.

Level 3 – conditional automation

With level 3 autonomy and higher, the vehicle itself performs all environmental monitoring (using sensors such as LIDARs). At this level, the vehicle can drive in autonomous mode in certain situations, but the driver must be ready to take over whenever a situation exceeds the vehicle's capabilities.

Level 4 – high automation

Level 4 autonomy is just one level below full automation. At this level, the vehicle controls the steering, brakes, and acceleration of the car. It can even monitor the vehicle itself, as well as pedestrians and the highway as a whole. Here, the vehicle can drive in autonomous mode for the majority of the time; however, a human is still required to take over in situations the vehicle cannot handle, such as crowded city streets.

Level 5 – complete automation

In level 5 autonomy, the vehicle is completely autonomous. Here, no human driver is required; the vehicle controls all the critical tasks, such as steering, braking, and acceleration. It monitors the environment and identifies and reacts to all unique driving conditions, such as traffic jams.
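The six levels described above can be summarized in a small data structure. This is only a sketch of the descriptions in this section, not an official SAE artifact; the class and function names are hypothetical and chosen for illustration.

```python
from enum import IntEnum


class AutonomyLevel(IntEnum):
    """Autonomy levels as summarized in this section (names are illustrative)."""
    MANUAL = 0
    DRIVER_SUPPORT = 1
    PARTIAL_AUTOMATION = 2
    CONDITIONAL_AUTOMATION = 3
    HIGH_AUTOMATION = 4
    COMPLETE_AUTOMATION = 5


def vehicle_monitors_environment(level: AutonomyLevel) -> bool:
    # From level 3 upward, the vehicle performs the environmental monitoring.
    return level >= AutonomyLevel.CONDITIONAL_AUTOMATION


def human_fallback_needed(level: AutonomyLevel) -> bool:
    # As described in this section, only level 5 removes the human driver entirely.
    return level < AutonomyLevel.COMPLETE_AUTOMATION


for level in AutonomyLevel:
    print(
        level.value,
        level.name,
        "vehicle monitors:", vehicle_monitors_environment(level),
        "human fallback:", human_fallback_needed(level),
    )
```

Using an ordered enumeration makes the key boundaries explicit: monitoring shifts from the driver to the vehicle at level 3, and the human fallback disappears only at level 5.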

Now, let's move on and look at the approaches of deep learning and computer vision for SDCs.

Deep learning and computer vision approaches for SDCs

Perhaps the most exciting new technology in the world today is deep neural networks, especially convolutional neural networks, known collectively as deep learning. These networks are solving some of the most common problems in AI and pattern recognition. Owing to the rise in computational power, milestones in AI have been reached with increasing frequency in recent years, and the results have often exceeded human capabilities. Deep learning offers some exciting features, such as the ability to learn complex mapping functions automatically and to scale up automatically. Such properties are essential in many real-world applications, such as large-scale image classification and recognition tasks. Most machine learning algorithms reach a plateau after a certain point, while deep neural networks continue to perform better with more and more data. The deep neural network is probably the only machine learning algorithm that can leverage the enormous amounts of training data from autonomous car sensors.

With the use of various sensor fusion algorithms, many autonomous car manufacturers are developing their own solutions, such as Google's LIDAR and Tesla's purpose-built computer, a chip specifically optimized for running neural networks.

Over the past several years, neural network systems have improved considerably at image recognition problems, and on some tasks they have exceeded human capabilities.

SDCs can use these networks to process sensory data and make informed decisions, such as the following:

- Lane detection: This is useful for driving correctly, as the car needs to know which side of the road it is on. Lane detection also makes it easy to follow a curved road.

- Road sign recognition: The system must recognize road signs and be able to act accordingly.

- Pedestrian detection: The system must detect pedestrians as it drives through a scene. It needs to know whether or not an object is a pedestrian so that it can put more emphasis on not hitting pedestrians, and drive more carefully around them than around less important objects, such as litter.

- Traffic light detection: The vehicle needs to detect and recognize traffic lights so that, just like human drivers, it can comply with road rules.

- Car detection: The presence of other cars in the environment must also be detected.

- Face recognition: There is a need for an SDC to identify and recognize the driver's face, other people inside the car, and perhaps even those who are outside it. If the vehicle is connected to a specific network, it can match those faces against a database to recognize car thieves.

- Obstacle detection: Obstacles can be detected using other means, such as ultrasound, but the car also needs to use its camera systems to identify any obstacles.

- Vehicle action recognition: The vehicle should know how to interact with other drivers since autonomous cars will drive alongside non-autonomous cars for many years to come.

The list of requirements goes on. Indeed, deep learning systems are compelling tools, but there are certain properties that can affect their practicality, particularly when it comes to autonomous cars. We will implement solutions for all of these problems in later chapters.

LIDAR and computer vision for SDC vision

Some people may be surprised to know that early-generation cars from Google barely used their cameras. The LIDAR sensor was useful, but it could not see light and color, so the camera was mostly used to recognize things such as red and green lights.

Google has since become one of the world's leading players in neural network technology. It has made a substantial effort to fuse data from LIDARs, cameras, and other sensors, and neural networks are likely to be very good at assisting this sensor fusion in Google's vehicles. Other firms, such as Daimler, have also demonstrated an excellent ability to fuse camera and LIDAR information. LIDARs work today and are expected to become cheaper. However, we have not yet crossed the threshold that would let us make the leap to relying on new neural network technology.

One of the shortcomings of LIDAR is that it usually has a low resolution. So, while it is very unlikely to miss an object in front of the car, it may not be able to figure out exactly what the obstacle is. In the section The cheapest computer and hardware, we saw an example of how fusing the camera and convolutional neural networks with LIDAR makes these systems much better in this area. Knowing and recognizing what things are also means making better predictions about where they are going to be in the future.
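The idea of combining LIDAR, which knows that something is there and how far away it is, with a camera classifier, which knows what it is, can be sketched as follows. This is a deliberately simplified illustration, not how any production system works: the data classes, the `fuse` function, and the bearing-matching rule with its `max_gap_deg` threshold are all assumptions made for this example.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class LidarReturn:
    bearing_deg: float  # direction of the obstacle relative to the car's heading
    range_m: float      # measured distance to the obstacle


@dataclass
class CameraDetection:
    bearing_deg: float  # direction of the detected object
    label: str          # class assigned by a (hypothetical) CNN classifier


@dataclass
class FusedObject:
    bearing_deg: float
    range_m: float
    label: str


def fuse(lidar: List[LidarReturn], camera: List[CameraDetection],
         max_gap_deg: float = 3.0) -> List[FusedObject]:
    """Attach the nearest camera label (by bearing) to each LIDAR return."""
    fused = []
    for hit in lidar:
        # Camera detections that point in roughly the same direction as this return.
        candidates = [d for d in camera
                      if abs(d.bearing_deg - hit.bearing_deg) <= max_gap_deg]
        if candidates:
            best = min(candidates, key=lambda d: abs(d.bearing_deg - hit.bearing_deg))
            label = best.label
        else:
            label = "unknown"  # LIDAR sees an obstacle, but nothing identifies it
        fused.append(FusedObject(hit.bearing_deg, hit.range_m, label))
    return fused


lidar_hits = [LidarReturn(-10.0, 12.5), LidarReturn(2.0, 30.0)]
camera_hits = [CameraDetection(1.0, "pedestrian")]
for obj in fuse(lidar_hits, camera_hits):
    print(obj)
```

In this example, the return at 2 degrees is matched with the camera's pedestrian detection, while the return at -10 degrees remains an unidentified obstacle: the car knows something is there, but not what it is.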

Many people claim that computer vision systems will be good enough to allow a car to drive on any road without a map, in the same manner as a human being. This approach applies mostly to very basic roads, such as highways, which are uniform in direction and easy to understand. Autonomous systems are not inherently intended to function as human beings do. The vision system plays an important role because it can classify objects well, but maps are important too, and we cannot neglect them; without map data, the car might end up driving down unknown roads.

Summary

This chapter addressed the question of how SDCs are becoming a reality. We discovered that SDC technology has existed for decades. We learned how it has evolved, and how research has advanced with the arrival of computational power such as GPUs. We also learned about the advantages, disadvantages, challenges, and levels of autonomy of SDCs.

Don't worry if you feel a bit confused or overwhelmed after reading this chapter. The purpose of this chapter was to provide you with an extremely high-level overview of SDCs.

In the next chapter, we are going to study the concept of deep learning more closely. This is the most interesting and important topic of this book, and after reading Chapter 2, Dive Deep into Deep Neural Networks, you will be well versed in deep learning.

Download code from GitHub
