The last chapter focused on solving board games. In this chapter, we will look at the more complex problem of training AI to play computer games. Unlike in board games, the rules of a computer game are not known to the AI ahead of time. The AI cannot tell what will happen if it takes an action: it can't simulate a range of button presses and their effect on the state of the game to see which lead to the best scores. It must instead learn the rules and constraints of the game purely from watching, playing, and experimenting.

In this chapter, we will cover the following topics:

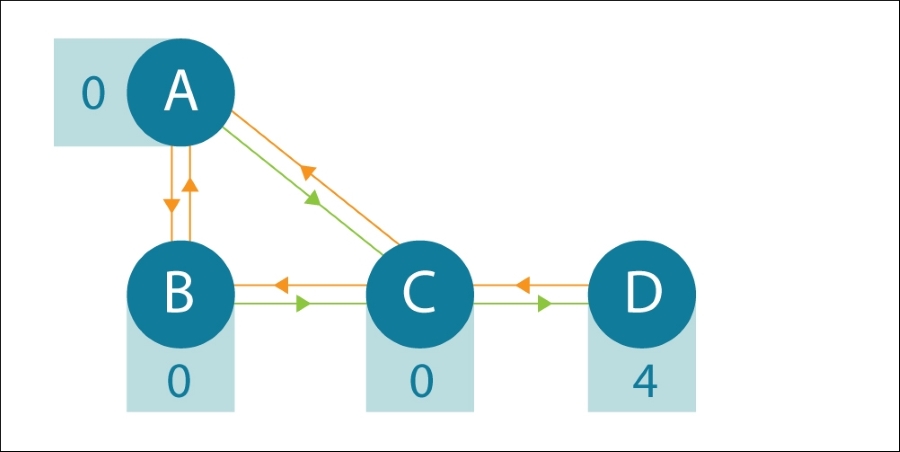

- Q-learning
- Experience replay
- Actor-critic
- Model-based approaches