Each new release of a product includes new features and capabilities, and Microsoft Dynamics 365 Business Central is no exception. It is our responsibility to understand how to apply these new features, to use them intelligently in our designs, and to develop with both their strengths and their limitations in mind. In the end, our goal is not only to deliver workmanlike results but, where possible, to delight our users with the quality of the experience and the ease of use of our products.

In this chapter, we will cover the following topics:

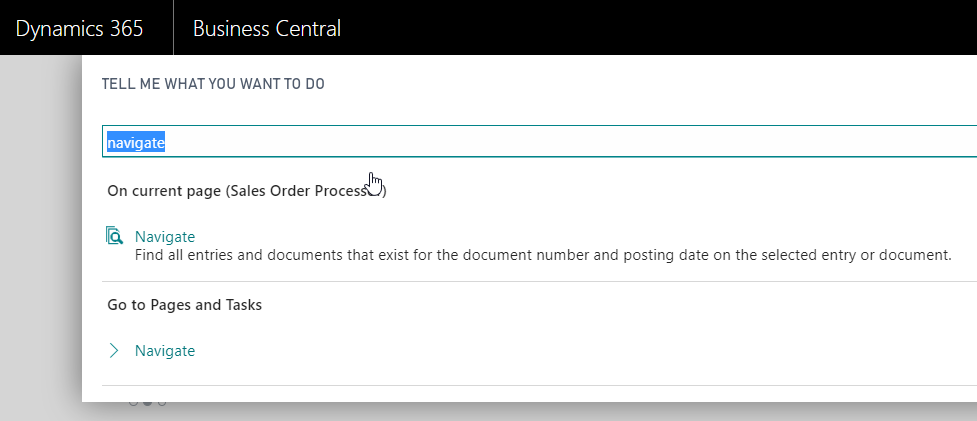

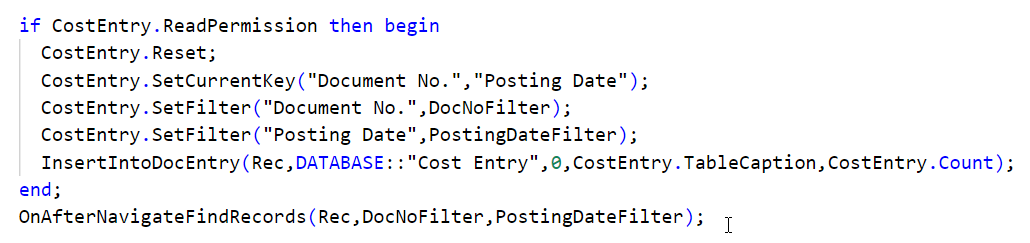

- Reviewing Business Central objects that contain functions we can use directly or as templates...