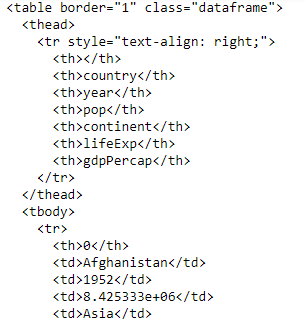

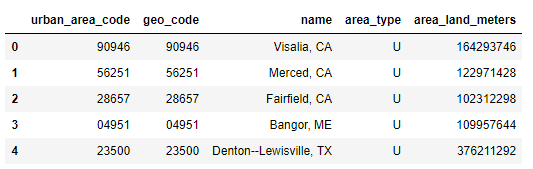

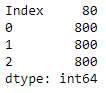

A data scientist works with data that comes from a variety of sources and therefore arrives in a variety of formats. The most common are spreadsheets (such as Excel workbooks), CSV files, and plain-text files. There are many other formats, such as JSON, XML, HDF, and Feather, and data may also be fetched from a URL or an API rather than read from a local file, depending on where it is stored. In this chapter, we will cover the following topics, among others:

- Data sources and pandas methods

- CSV and TXT

- URL and S3

- JSON

- Reading HDF formats

Let's get started!
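As a first taste of the idea that one library can read several formats, here is a minimal sketch that loads the same small table from CSV text and from JSON text. The sample data and the variable names are made up for illustration, and in-memory strings stand in for real files so that the snippet runs without any external resources.

```python
import io

import pandas as pd

# Hypothetical sample data: the same two records in CSV and JSON form.
csv_text = "name,score\nana,90\nben,85\n"
json_text = '[{"name": "ana", "score": 90}, {"name": "ben", "score": 85}]'

# read_csv and read_json accept file paths as well as file-like objects;
# StringIO wraps each string so it behaves like an open text file.
df_csv = pd.read_csv(io.StringIO(csv_text))
df_json = pd.read_json(io.StringIO(json_text))

print(df_csv)
print(df_json)
```

Both calls return a `DataFrame` with the same columns, which is the pattern the rest of the chapter relies on: the reader function changes with the format, while the resulting object stays the same.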