In the last chapter, we saw how to perform several signal-processing tasks by leveraging the predictive power of feedforward neural networks. This foundational architecture allowed us to introduce many of the basic features that make up the learning mechanisms of Artificial Neural Networks (ANNs).

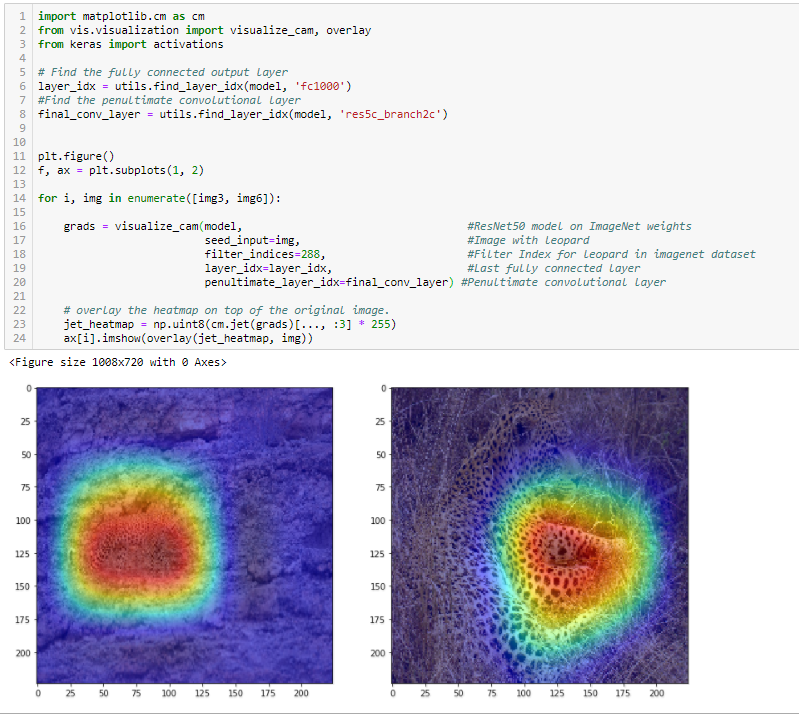

In this chapter, we dive deeper to explore another type of ANN, the Convolutional Neural Network (CNN), known for its adeptness at visual tasks such as image recognition, object detection, and semantic segmentation. The inspiration for this architecture also traces back to our own biology. Soon, we will go over the experiments and discoveries that inspired these complex systems, which perform so well on visual tasks. The latest iterations of this idea can be traced back to the ImageNet classification...