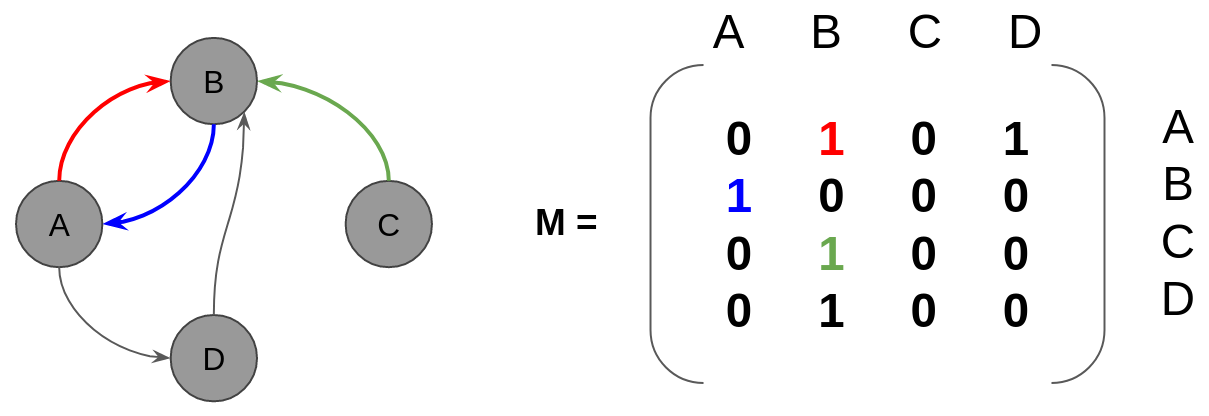

In this chapter, we will continue to explore graph analytics and address the last piece of the puzzle: feature learning on graphs via embedding. Embedding became popular thanks to the word embeddings used in Natural Language Processing (NLP). We will first address why embedding is important and learn about the different types of analyses covered by the term graph embedding. Following that, we will begin our study of embedding algorithms with a family of methods that start from the graph adjacency matrix and try to reduce its dimensionality.

Later on, we will continue our journey by discovering how neural networks can help with embedding. Starting with the example of word embedding, we will learn about the skip-gram model and draw a parallel with graphs through the DeepWalk algorithm. Finally, in the last section, we will...