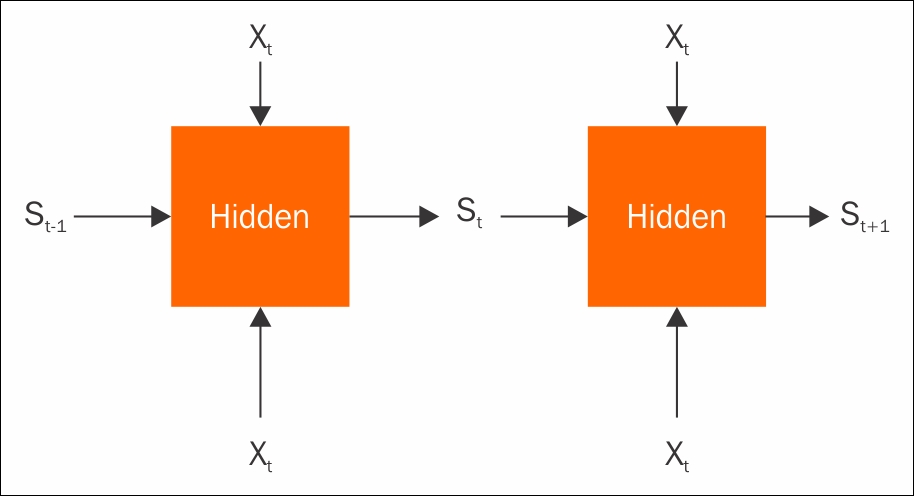

In the previous chapter, you learned about convolutional networks. Now it's time to move on to a new type of model and problem: Recurrent Neural Networks (RNNs). In this chapter, we'll explain how RNNs work and implement one in TensorFlow. Our example problem will be a simple predictor that guesses the season from weather information. We will also take a look at skflow, a simplified interface to TensorFlow, which will let us quickly re-implement both our earlier image classification models and the new RNN. By the end of this chapter, you will have a good understanding of the following concepts:

- Exploring RNNs
- TensorFlow Learn
- Dense Neural Network (DNN)