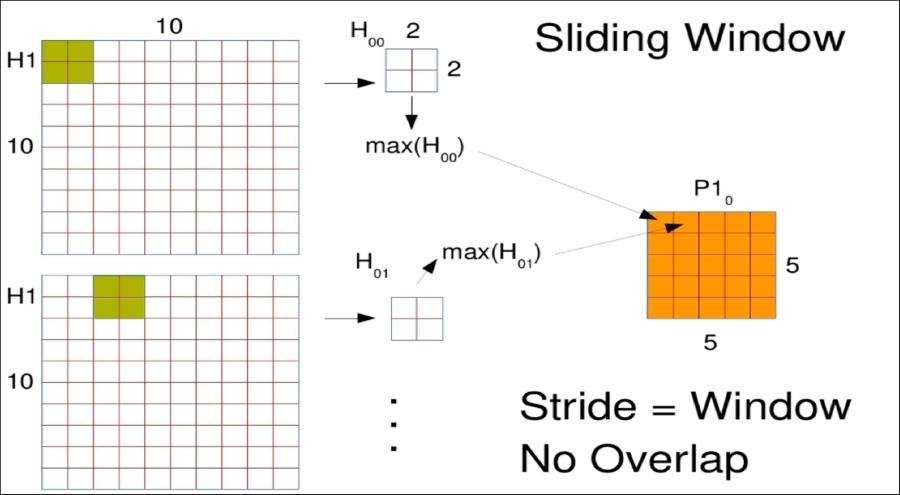

In the previous chapter we explored deep neural networks, which required ever more parameters to fit. This chapter guides you through one of the most powerful developments in deep learning, one that lets us use some of our knowledge about the problem space to improve the model. First, we'll explain what a convolutional layer is in a neural network and follow that with a TensorFlow example. Then we'll do the same for what's called a pooling layer. Finally, we'll adapt our font classification model into a Convolutional Neural Network (CNN) and see how it performs.

In this chapter, we will look at the background of convolutional neural nets and implement a convolutional layer in TensorFlow. We will then learn about max pooling layers and put them into practice by implementing a single pooling layer as an example.

By the end of this chapter, you will have a firm grasp of the following concepts:

- Convolutional layer motivation
- Convolutional layer application
- Pooling layer...