Well, now things are getting fun! Our models can learn more complex functions, and we are ready for a wonderful tour of more contemporary and surprisingly effective models.

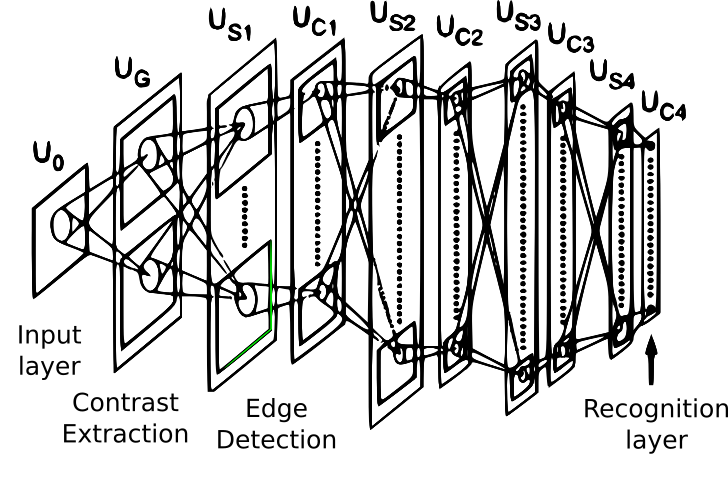

After stacking layers of neurons became the most popular way to improve models, new ideas for richer nodes appeared, starting with models inspired by human vision. These models began as little more than research topics, but once large image datasets and more processing power became available, they allowed researchers to reach near-human accuracy in image classification challenges. We are now ready to leverage that power in our own projects.

The topics we will cover in this chapter are as follows:

- Origins of convolutional neural networks

- Simple implementation of discrete convolution

- Other operation types: pooling, dropout

- Transfer learning