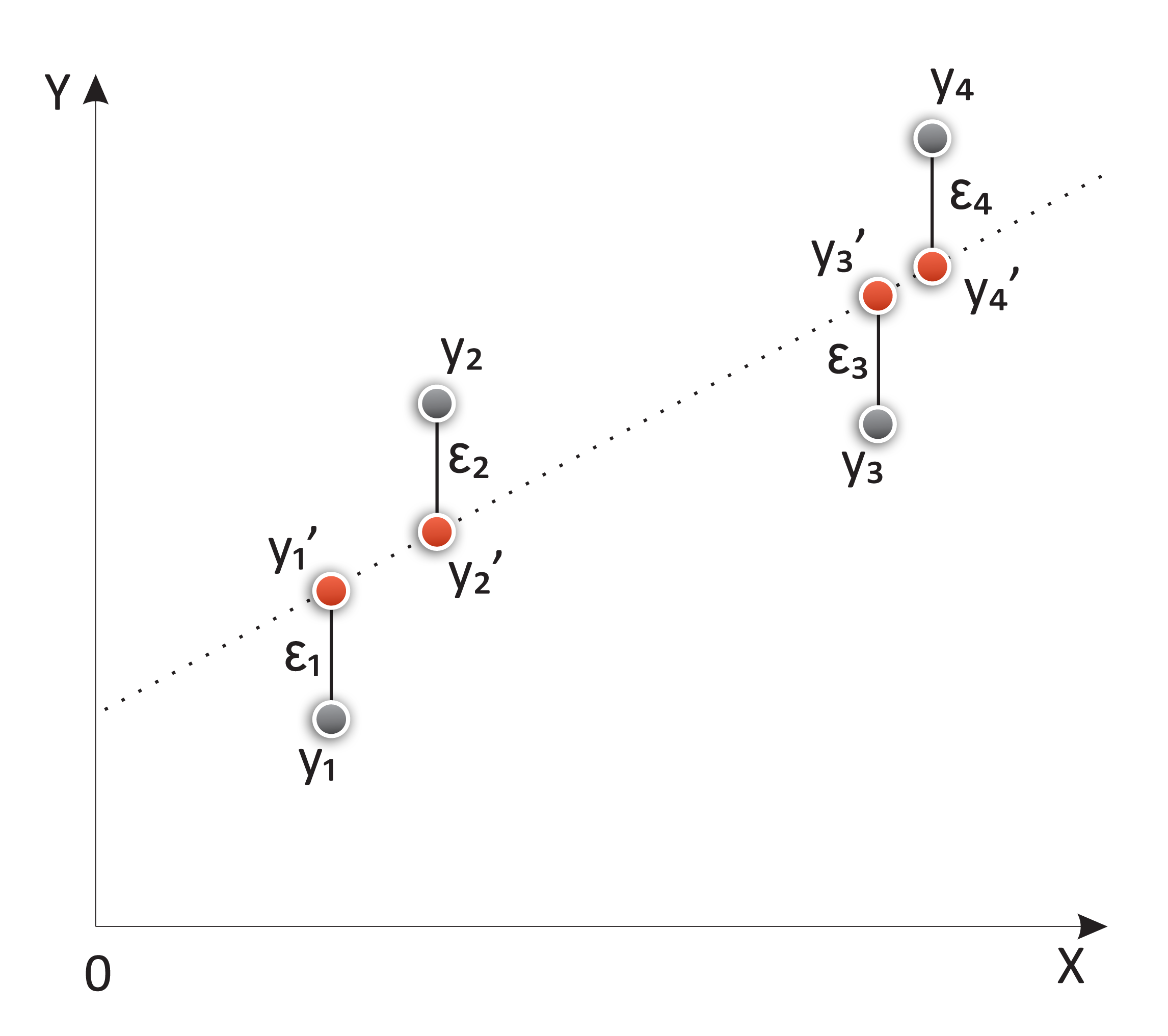

After the insights we gained by grouping similar information using common features, it's time to get a bit more mathematical. We will now search for a function that describes the data, condensing a large amount of information into a compact form. Such a function will also allow us to predict future outcomes, assuming that new data samples keep the properties of the ones we have already observed.

In this chapter, we will cover the following topics:

- Linear regression with a step-by-step implementation
- Polynomial regression
- Logistic regression and its implementation
- Softmax regression