In this chapter, we will discuss and illustrate the parts of TensorFlow 2 that are needed to construct, train, and evaluate artificial neural networks, and to use them for inference. Initially, we will not present a complete application. Instead, we will focus on individual concepts and techniques before putting them all together and presenting full models in subsequent chapters.

In this chapter, we will cover the following topics:

- Presenting data to an Artificial Neural Network (ANN)

- ANN layers

- Gradient calculations for gradient descent algorithms

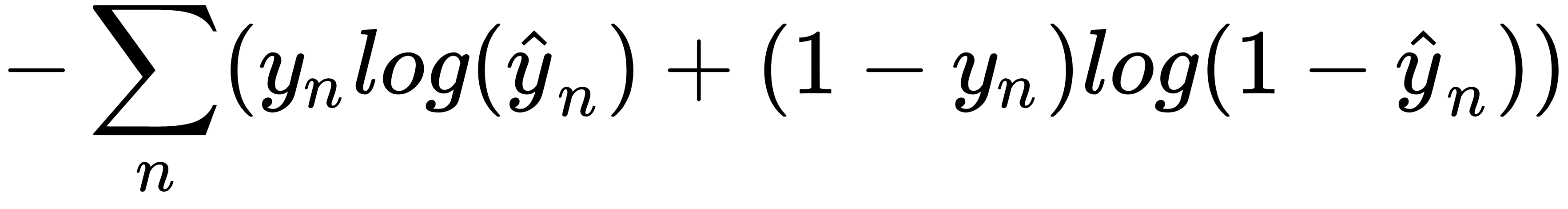

- Loss functions
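As a preview of how these four topics fit together, here is a minimal sketch, assuming TensorFlow 2 and NumPy are installed. The toy data (samples of y = 2x + 1), the single `Dense` unit, the SGD learning rate, and the epoch count are illustrative assumptions, not the models developed in later chapters.

```python
import numpy as np
import tensorflow as tf

# Hypothetical toy data: 64 noise-free samples of y = 2x + 1.
x = np.linspace(-1.0, 1.0, 64, dtype=np.float32).reshape(-1, 1)
y = 2.0 * x + 1.0

# Presenting data: a tf.data pipeline that shuffles and batches the samples.
dataset = tf.data.Dataset.from_tensor_slices((x, y)).shuffle(64, seed=0).batch(16)

# ANN layer: a single Dense unit computes w * x + b.
layer = tf.keras.layers.Dense(units=1)

# Loss function: mean squared error between true and predicted labels.
loss_fn = tf.keras.losses.MeanSquaredError()
optimizer = tf.keras.optimizers.SGD(learning_rate=0.1)

# Gradient calculation: GradientTape records the forward pass so that the
# gradients of the loss with respect to the layer's weights can be computed
# and applied by plain gradient descent.
for epoch in range(100):
    for x_batch, y_batch in dataset:
        with tf.GradientTape() as tape:
            y_pred = layer(x_batch)
            loss = loss_fn(y_batch, y_pred)
        grads = tape.gradient(loss, layer.trainable_variables)
        optimizer.apply_gradients(zip(grads, layer.trainable_variables))

w, b = layer.get_weights()
print(f"w = {w[0, 0]:.3f}, b = {b[0]:.3f}, final batch loss = {float(loss):.6f}")
```

After training, the learned weight and bias should be close to 2 and 1. Writing the loop by hand with `tf.GradientTape`, rather than calling `model.fit`, keeps each of the four pieces visible.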
