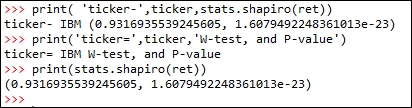

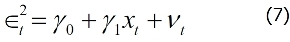

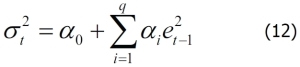

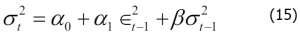

In finance, risk is defined as uncertainty, since we cannot predict the future with accuracy. Under the assumption that prices follow a lognormal distribution and returns follow a normal distribution, we can define risk as the standard deviation or variance of the returns of a security. We call this our conventional definition of volatility (uncertainty). Because a normal distribution is symmetric, this measure treats a positive deviation from the mean in the same way as a negative deviation. This runs against conventional wisdom, since we treat gains and losses differently. To overcome this, Sortino (1983) suggests a lower partial standard deviation. Up to now, we have assumed that the volatility of a time series is constant. Obviously this is not true. Another observation is volatility clustering, which means that a high-volatility period is usually followed by another high-volatility period, and the same is true for low volatility, which is usually followed...
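The contrast between the two risk measures can be sketched in a few lines of Python. This is a minimal illustration and not the book's own code: the function names, the `target` threshold (set to 0 here, though a risk-free rate is a common choice), the sample returns, and the convention of dividing by the number of below-target observations minus one are all assumptions. Other sources divide by the total number of observations instead.

```python
import math

def std_dev(returns):
    """Sample standard deviation: the conventional volatility measure."""
    n = len(returns)
    mean = sum(returns) / n
    return math.sqrt(sum((r - mean) ** 2 for r in returns) / (n - 1))

def lower_partial_std(returns, target=0.0):
    """Lower partial standard deviation: only returns below `target` count.

    Assumed convention: divide by (m - 1), where m is the number of
    returns that fall below the target.
    """
    downside = [r - target for r in returns if r < target]
    if len(downside) < 2:
        raise ValueError("need at least two returns below the target")
    return math.sqrt(sum(d ** 2 for d in downside) / (len(downside) - 1))

# Hypothetical daily returns, for illustration only.
returns = [0.02, -0.01, 0.03, -0.04, 0.01]
flipped = [-r for r in returns]  # every gain becomes a loss and vice versa

print(std_dev(returns), std_dev(flipped))                      # same value
print(lower_partial_std(returns), lower_partial_std(flipped))  # values differ
```

Flipping the sign of every return leaves the standard deviation unchanged, which is the symmetry described above, while the lower partial standard deviation changes because it looks only at the downside.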
