The Autoregressive Integrated Moving Average (ARIMA) model is the generic name for a family of forecasting models based on the Autoregressive (AR) and Moving Average (MA) processes. Among the traditional forecasting models (for example, linear regression and exponential smoothing), the ARIMA model is often considered one of the most advanced and robust approaches. In this chapter, we will introduce the model's components: the AR and MA processes and the differencing component. Furthermore, we will focus on methods for tuning the model's parameters using differencing, the autocorrelation function (ACF), and the partial autocorrelation function (PACF).
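The three tuning tools mentioned above are differencing, the ACF, and the PACF. Each can be sketched in a few lines of standard-library Python. This is a minimal illustration under stated assumptions, not the chapter's own implementation:

- `difference`, `acf`, and `pacf` are hypothetical helper names.
- The ACF uses the common biased estimator, which divides each lag's sum of products by the total sum of squares.
- The PACF is obtained through the Durbin-Levinson recursion, one of several standard ways to compute it.

```python
import random


def difference(series, lag=1, order=1):
    """Apply `order` rounds of differencing at the given lag: y'_t = y_t - y_{t-lag}."""
    out = list(series)
    for _ in range(order):
        out = [out[t] - out[t - lag] for t in range(lag, len(out))]
    return out


def acf(series, max_lag):
    """Sample autocorrelation for lags 0..max_lag (biased estimator)."""
    n = len(series)
    mean = sum(series) / n
    dev = [x - mean for x in series]
    denom = sum(d * d for d in dev)
    return [sum(dev[t] * dev[t - k] for t in range(k, n)) / denom
            for k in range(max_lag + 1)]


def pacf(series, max_lag):
    """Sample partial autocorrelation for lags 1..max_lag (Durbin-Levinson recursion)."""
    rho = acf(series, max_lag)
    phi_prev = []  # coefficients phi_{k-1,1..k-1} from the previous order
    result = []
    for k in range(1, max_lag + 1):
        num = rho[k] - sum(phi_prev[j - 1] * rho[k - j] for j in range(1, k))
        den = 1.0 - sum(phi_prev[j - 1] * rho[j] for j in range(1, k))
        phi_kk = num / den
        # Update the lower-order coefficients, then append the new lag-k coefficient.
        phi_prev = [phi_prev[j - 1] - phi_kk * phi_prev[k - j - 1]
                    for j in range(1, k)] + [phi_kk]
        result.append(phi_kk)
    return result


if __name__ == "__main__":
    rng = random.Random(1)
    # A random walk: the cumulative sum of Gaussian white noise.
    walk, level = [], 0.0
    for _ in range(500):
        level += rng.gauss(0, 1)
        walk.append(level)
    print("ACF of the walk:", [round(r, 2) for r in acf(walk, 5)])
    print("ACF after differencing:", [round(r, 2) for r in acf(difference(walk), 5)])
```

On a simulated random walk, this typically shows an ACF that decays very slowly. The ACF of the differenced series, by contrast, drops to near zero after lag 0, the pattern suggesting that one order of differencing is enough.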

In this chapter, we will cover the following topics:

- The stationary state of time series data

- The random walk process

- The AR and MA processes

- The ARMA and ARIMA...

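In the AR process, $\phi_i$ is the coefficient of the $i$-th lag of the series. For an AR(1) process, $\phi_1$ must be between -1 and 1; otherwise, the series is not stationary. For higher orders, stationarity depends on the roots of the characteristic polynomial $1 - \phi_1 z - \dots - \phi_p z^p$ lying outside the unit circle, rather than on each coefficient separately.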
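Simulating an AR process shows how the coefficient affects stationarity. The sketch below is illustrative only:

- `simulate_ar` is a hypothetical helper.
- The noise is assumed to be Gaussian with unit variance.
- Values before the start of the series are set to zero.

The same noise sequence is passed through three coefficients: $\phi_1 = 0.5$, $\phi_1 = 1$ (which turns the AR(1) into a random walk), and $\phi_1 = 1.1$.

```python
import random


def simulate_ar(phi, noise, c=0.0):
    """Return Y_t = c + phi_1*Y_{t-1} + ... + phi_p*Y_{t-p} + eps_t for each eps_t in noise.

    Values before the start of the series are treated as 0.
    """
    y = []
    for t, eps in enumerate(noise):
        ar_part = sum(p * y[t - i] for i, p in enumerate(phi, start=1) if t - i >= 0)
        y.append(c + ar_part + eps)
    return y


if __name__ == "__main__":
    rng = random.Random(123)
    noise = [rng.gauss(0, 1) for _ in range(200)]
    for coef in (0.5, 1.0, 1.1):
        path = simulate_ar([coef], noise)
        # Stationary paths stay bounded; phi = 1 wanders; phi > 1 explodes.
        print(f"phi={coef}: max |Y_t| = {max(abs(v) for v in path):.1f}")
```

With $|\phi_1| < 1$, each shock decays geometrically, so the path stays within a stable range. With $\phi_1 = 1$, shocks never decay. With $\phi_1 > 1$, they grow without bound.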

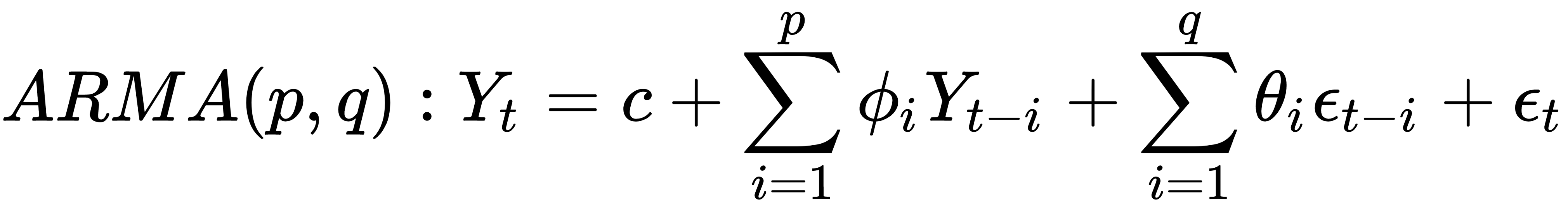

The goal of the moving average process is to capture patterns in the residuals, if they exist. It does so by modeling the relationship between $Y_t$, the error term $\epsilon_t$, and the past $q$ error terms of the model ($\epsilon_{t-1}, \epsilon_{t-2}, \dots, \epsilon_{t-q}$). The structure of the MA process is similar to that of the AR process. The following equation defines an MA process of order $q$:

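$$Y_t = \mu + \epsilon_t + \theta_1 \epsilon_{t-1} + \theta_2 \epsilon_{t-2} + \dots + \theta_q \epsilon_{t-q}$$

Here, the following applies:

- $\mu$ represents the mean of the series

- $\epsilon_t, \epsilon_{t-1}, \dots, \epsilon_{t-q}$ are white noise error terms

- $\theta_i$ is the corresponding coefficient of $\epsilon_{t-i}$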
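The MA equation can be sketched the same way. The hypothetical `simulate_ma` helper below is an illustration, not the book's code, and it treats errors before the first observation as 0.

Feeding in a single unit shock shows the defining property of an MA($q$) process: a shock affects the series for only $q + 1$ periods. This is why the theoretical ACF of an MA($q$) process is zero beyond lag $q$.

```python
import random


def simulate_ma(theta, noise, mu=0.0):
    """Return Y_t = mu + eps_t + theta_1*eps_{t-1} + ... + theta_q*eps_{t-q}.

    Errors before the start of the series are treated as 0.
    """
    y = []
    for t, eps in enumerate(noise):
        ma_part = sum(th * noise[t - i] for i, th in enumerate(theta, start=1) if t - i >= 0)
        y.append(mu + eps + ma_part)
    return y


if __name__ == "__main__":
    # A single unit shock at t = 0: with q = 2 the response is nonzero only for t = 0, 1, 2.
    print(simulate_ma([0.6, 0.3], [1.0, 0.0, 0.0, 0.0, 0.0]))
    rng = random.Random(0)
    sample = simulate_ma([0.6, 0.3], [rng.gauss(0, 1) for _ in range(1000)], mu=5.0)
    print(f"sample mean: {sum(sample) / len(sample):.2f}  (mu = 5.0)")
```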

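Combining the two processes gives the ARMA model. An ARMA process of orders $p$ and $q$ is defined by the following equation:

$$Y_t = c + \sum_{i=1}^{p} \phi_i Y_{t-i} + \sum_{i=1}^{q} \theta_i \epsilon_{t-i} + \epsilon_t$$

Here, the following applies:

- $\phi_i$ is the coefficient of the $i$-th lag of the series

- $\epsilon_{t-1}, \dots, \epsilon_{t-q}$ are white noise error terms, with corresponding coefficients $\theta_i$

- $\epsilon_t$ represents the error term, which is white noise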
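An ARMA process simply adds the AR and MA parts together. The sketch below assumes Gaussian noise and zero pre-sample values, and `simulate_arma` is a hypothetical helper, not the book's code. It reduces to a pure AR or pure MA simulation when `theta` or `phi` is empty.

```python
import random


def simulate_arma(phi, theta, noise, c=0.0):
    """Return Y_t = c + sum_i phi_i*Y_{t-i} + sum_i theta_i*eps_{t-i} + eps_t.

    Pre-sample values of both Y and eps are treated as 0.
    """
    y = []
    for t, eps in enumerate(noise):
        ar_part = sum(p * y[t - i] for i, p in enumerate(phi, start=1) if t - i >= 0)
        ma_part = sum(th * noise[t - i] for i, th in enumerate(theta, start=1) if t - i >= 0)
        y.append(c + ar_part + ma_part + eps)
    return y


if __name__ == "__main__":
    # Impulse response of an ARMA(1, 1) with phi_1 = 0.5 and theta_1 = 0.3.
    print(simulate_arma([0.5], [0.3], [1.0, 0.0, 0.0, 0.0]))
    rng = random.Random(11)
    # With c = 1 and phi_1 = 0.5, the process mean is c / (1 - phi_1) = 2.
    series = simulate_arma([0.5], [0.3], [rng.gauss(0, 1) for _ in range(500)], c=1.0)
    print(f"sample mean: {sum(series) / len(series):.2f}")
```

Note that the constant $c$ is not the mean of an ARMA process. The mean is $c / (1 - \phi_1 - \dots - \phi_p)$, which is why the sample mean above settles near 2 rather than 1.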

A seasonal AR process of order 1, SAR(1), can be written as $Y_t = c + \Phi Y_{t-f} + \epsilon_t$. Here, $\Phi$ represents the seasonal coefficient of the SAR process, and $f$ represents the series frequency (for example, $f = 12$ for a monthly series).
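The seasonal term works like an ordinary AR term, except that it reaches back $f$ periods instead of one. In the hypothetical `simulate_sar1` helper below, pre-sample values are set to zero. The choice of $f = 4$ (a quarterly series) is arbitrary and only for illustration.

```python
def simulate_sar1(big_phi, freq, noise, c=0.0):
    """Return Y_t = c + Phi*Y_{t-f} + eps_t, a seasonal AR process of order 1.

    Values before the start of the series are treated as 0.
    """
    y = []
    for t, eps in enumerate(noise):
        seasonal = big_phi * y[t - freq] if t >= freq else 0.0
        y.append(c + seasonal + eps)
    return y


if __name__ == "__main__":
    # Quarterly example (f = 4): a single shock echoes every 4 periods, shrinking by Phi each time.
    print(simulate_sar1(0.5, 4, [1.0] + [0.0] * 12))
```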

Here, $\epsilon_t$ is the white noise series.
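A quick way to check whether a series behaves like white noise is to compare its sample autocorrelations with the approximate 95% bounds $\pm 1.96/\sqrt{n}$. For white noise, roughly 1 lag in 20 is expected to fall outside these bounds by chance.

The sketch below makes a few assumptions:

- The white noise is Gaussian.
- `acf` and `lags_outside_bounds` are hypothetical helpers, and `acf` uses the usual biased estimator.

This is a rough screening check, not a formal test such as Ljung-Box.

```python
import math
import random


def acf(series, max_lag):
    """Sample autocorrelation for lags 0..max_lag (biased estimator)."""
    n = len(series)
    mean = sum(series) / n
    dev = [x - mean for x in series]
    denom = sum(d * d for d in dev)
    return [sum(dev[t] * dev[t - k] for t in range(k, n)) / denom
            for k in range(max_lag + 1)]


def lags_outside_bounds(series, max_lag=20):
    """Return the lags (1..max_lag) whose sample ACF falls outside +/-1.96/sqrt(n)."""
    bound = 1.96 / math.sqrt(len(series))
    rho = acf(series, max_lag)
    return [k for k in range(1, max_lag + 1) if abs(rho[k]) > bound]


if __name__ == "__main__":
    rng = random.Random(2024)
    white_noise = [rng.gauss(0, 1) for _ in range(1000)]
    print("white noise, lags outside bounds:", lags_outside_bounds(white_noise))
    # A running sum of the same noise (a random walk) is strongly autocorrelated.
    walk = []
    total = 0.0
    for e in white_noise:
        total += e
        walk.append(total)
    print("random walk, lags outside bounds:", lags_outside_bounds(walk))
```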