Generative models have become an important application in computer vision. Unlike the applications discussed in previous chapters that made predictions from images, generative models can create an image for specific objectives. In this chapter, we will understand:

- The applications of generative models

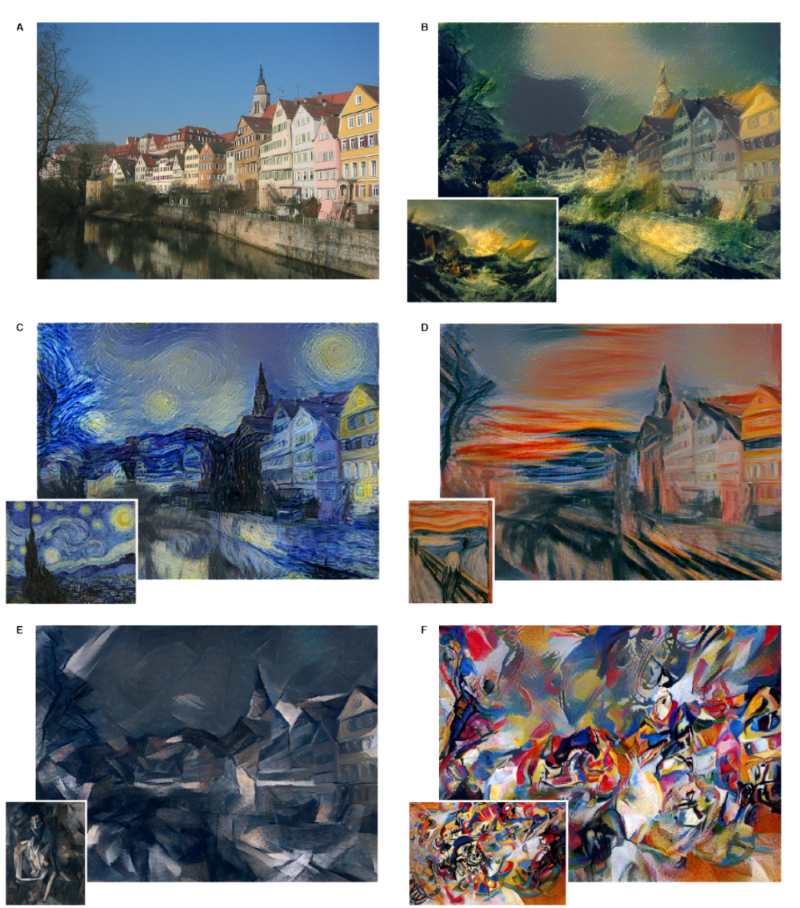

- Algorithms for style transfer

- Training a model for super-resolution of images

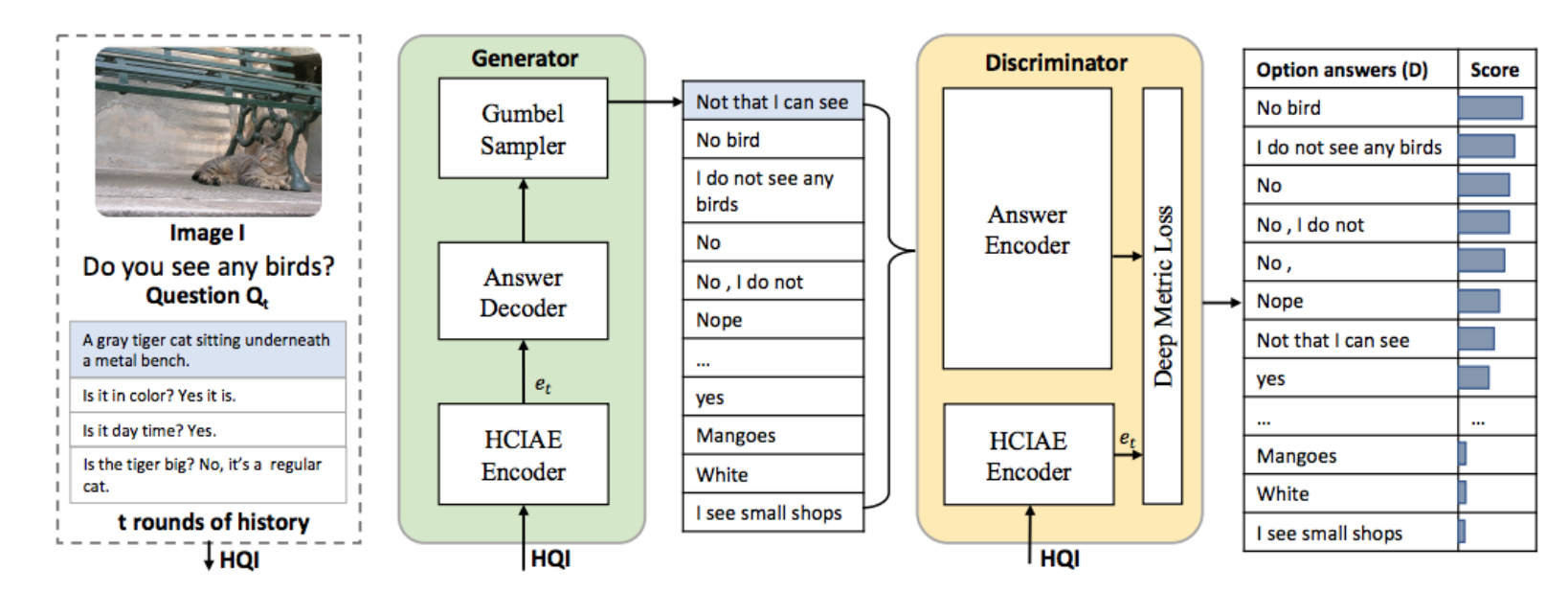

- Implementation and training of generative models

- Drawbacks of current models

By the end of the chapter, you will be able to implement some great applications for transferring style and understand the possibilities, as well as difficulties, associated with generative models.