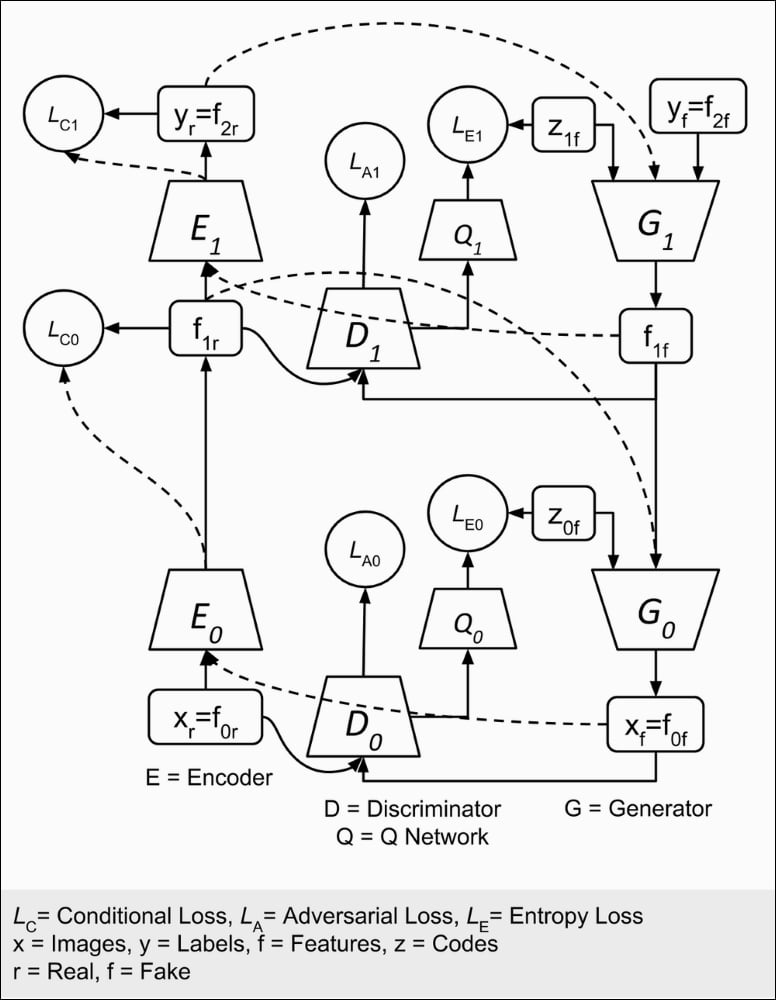

To implement InfoGAN on the MNIST dataset, a few changes need to be made to the ACGAN base code. As highlighted in the following listing, the generator concatenates both the entangled code (z noise) and the disentangled codes (one-hot label and continuous codes) to form its input. The builder functions for the generator and discriminator are also implemented in gan.py in the lib folder.
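The concatenation described above can be sketched without any deep learning framework. This is a minimal illustration, not the book's code. The sizes (a 62-dimensional z and two scalar continuous codes) and the priors (uniform noise in [-1, 1], Gaussian codes with standard deviation 0.5) are assumptions chosen for illustration, and `sample_generator_input` and `one_hot` are hypothetical helpers.

```python
import random

# Assumed sizes, for illustration only (hypothetical, not taken from the listing)
LATENT_SIZE = 62   # entangled code: noise vector z
NUM_LABELS = 10    # MNIST digit classes -> one-hot discrete code
NUM_CODES = 2      # disentangled continuous codes c1, c2 (one scalar each)


def one_hot(label, num_classes=NUM_LABELS):
    """Return a one-hot list with 1.0 at position `label`."""
    vec = [0.0] * num_classes
    vec[label] = 1.0
    return vec


def sample_generator_input(label, rng=random):
    """Build one generator input vector as the concatenation
    [z noise | one-hot label | continuous codes]."""
    # Entangled code: uniform noise in [-1, 1] (assumed prior)
    z = [rng.uniform(-1.0, 1.0) for _ in range(LATENT_SIZE)]
    # Disentangled discrete code: the digit label as a one-hot vector
    label_code = one_hot(label)
    # Disentangled continuous codes: Gaussian, std 0.5 (assumed prior)
    codes = [rng.gauss(0.0, 0.5) for _ in range(NUM_CODES)]
    # Concatenate along the feature axis into a single input vector
    return z + label_code + codes


if __name__ == "__main__":
    rng = random.Random(0)
    x = sample_generator_input(label=3, rng=rng)
    print(len(x))  # 62 + 10 + 2 = 74
```

In a Keras functional model, the same step would typically be expressed by passing the z, label, and code input tensors to a concatenate layer before the first Dense layer of the generator.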

Note

The complete code is available on GitHub:

https://github.com/PacktPublishing/Advanced-Deep-Learning-with-Keras

Listing 6.1.1, infogan-mnist-6.1.1.py, shows how the InfoGAN generator concatenates both entangled and disentangled codes to serve as its input:

def generator(inputs,
              image_size,
              activation='sigmoid',
              labels=None,
              codes=None):
    """Build a Generator Model

    Stack of BN-ReLU-Conv2DTranspose to generate fake images.
    Output activation is sigmoid instead of tanh in [1].
    Sigmoid converges easily.

    ...