We've had a roller coaster ride so far. In the last chapter, we looked at performing ELT with Spark and, most importantly, at loading and saving data from and to various data sources. We looked at structured data streams and NoSQL databases, and throughout we kept our attention on using RDDs to work with such data sources. We touched briefly on the DataFrame and Dataset APIs but refrained from going into too much detail, as we wanted to cover them fully in this chapter.

If you have a database background and are still trying to come to terms with the RDD API, this is the chapter you'll love most, as it explains how you can use SQL to exploit the capabilities of the Spark framework.

In this chapter we will be covering the following key topics:

- DataFrame API

- Dataset API

- Catalyst Optimizer

- Spark Session

- Manipulating Spark DataFrames

- Working with Hive, Parquet files, and other databases

Let's get cracking!

SQL has been the de facto language of business analysts for over two decades. The evolution and rise of big data brought a new way of building business applications: APIs. People writing MapReduce jobs soon realized, however, that while MapReduce is an extremely powerful paradigm, its complex programming model limits its reach and effectively sidelines the business analysts who had previously used SQL to solve their business problems. Business analysts have deep business knowledge but limited experience building applications through APIs, so it was a huge ask to have them code their business problems in the new and shiny frameworks that promised so much. This led the open source community to develop projects such as Hive and Impala, which made working with big data easier.

Similarly, in the case of Spark, while RDDs are the most powerful API, they are perhaps too low-level for business users. Spark SQL comes to the rescue...

I believe that before looking at what the DataFrame API is, we should review what an RDD is and identify what could be improved in the RDD interface. The RDD has been the user-facing API in Apache Spark since its inception and, as discussed earlier, it can represent unstructured data, is compile-time safe, tracks its dependencies (lineage), is evaluated lazily, and represents a distributed collection of data across a Spark cluster. An RDD is split into partitions, which can carry locality information; this lets the Spark scheduler run each computation on the machines where the data already resides, reducing costly network overhead.
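The following is a minimal sketch, assuming a local Spark installation (so locality information is not really exercised), that shows three of the RDD properties listed above: partitions, lazy evaluation, and the recorded lineage. The application name and the numbers are purely illustrative.

```scala
import org.apache.spark.sql.SparkSession

// Local session for experimentation; in spark-shell `spark` and `sc` already exist.
val spark = SparkSession.builder().appName("rdd-properties").master("local[*]").getOrCreate()
val sc = spark.sparkContext

// A distributed collection explicitly split into 4 partitions.
val numbers = sc.parallelize(1 to 100, numSlices = 4)

// Transformations are lazy: nothing runs yet, Spark only records the lineage.
val evens = numbers.filter(_ % 2 == 0)
val squares = evens.map(n => n.toLong * n)

// The lineage (dependencies) Spark would replay to recompute a lost partition.
println(squares.toDebugString)
println(s"Partitions: ${squares.getNumPartitions}")

// An action finally triggers the distributed computation.
val total = squares.reduce(_ + _)
println(s"Sum of even squares: $total")
```

Note that the lambdas passed to `filter` and `map` are plain Scala functions: Spark can run them, but it cannot look inside them, which is the limitation discussed next.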

From a programming perspective, however, the computation itself is opaque: Spark does not know what you are doing inside your functions, for example whether they perform joins or filters. RDD code expresses how to compute a result rather than what the result should be. The data itself is also opaque to the optimizer, which means Spark gets an object either in Scala, Java...
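To make the "how versus what" distinction concrete, here is a small sketch contrasting the same filter written against an RDD and against a DataFrame. The column names and rows are hypothetical toy values. In the RDD version the predicate is a compiled closure; in the DataFrame version it is an expression tree that Catalyst can inspect, which `explain(true)` makes visible.

```scala
import org.apache.spark.sql.SparkSession

val spark = SparkSession.builder().appName("opaque-vs-declarative").master("local[*]").getOrCreate()
import spark.implicits._

// Hypothetical toy call records: (originCity, durationSecs)
val calls = Seq(("London", 120), ("Manchester", 45), ("London", 300))

// RDD version: the predicate is an opaque Scala closure that Spark can only execute.
val rddResult = spark.sparkContext.parallelize(calls).filter { case (city, _) => city == "London" }

// DataFrame version: the predicate is a Column expression that Catalyst can analyze.
val df = calls.toDF("originCity", "durationSecs")
val dfResult = df.filter($"originCity" === "London").select($"durationSecs")

// Prints the parsed, analyzed, optimized and physical plans.
dfResult.explain(true)
```

With such a tiny in-memory input, the optimizer may fold the whole query into a local relation, so the analyzed logical plan is where the `originCity = London` predicate is easiest to see.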

Spark introduced the Dataset API in Spark 1.6 as an extension of the DataFrame API, representing a strongly typed, immutable collection of objects mapped to a relational schema. The Dataset API was developed to take advantage of the Catalyst optimizer by exposing expressions and data fields to the query planner. Datasets bring compile-time type safety, which means many errors in your production applications are caught before they are run, whereas with the DataFrame API such errors (a misspelled column name, for example) surface only at runtime.
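A short sketch of the difference in when errors are caught, using a hypothetical `CallRecord` case class and made-up values. A typo in a typed lambda is rejected by the Scala compiler, so it cannot be shown running; the untyped typo compiles and fails only when Spark analyzes the query.

```scala
import org.apache.spark.sql.{AnalysisException, SparkSession}

// Hypothetical record type, for illustration only.
case class CallRecord(originCity: String, revenue: Double)

val spark = SparkSession.builder().appName("typed-vs-untyped").master("local[*]").getOrCreate()
import spark.implicits._

val ds = Seq(CallRecord("London", 2.5), CallRecord("Leeds", 1.0)).toDS()

// Typed API: field access is checked by the compiler.
// Writing `_.revnue` here would be a compile-time error.
val highValue = ds.filter(_.revenue > 2.0)

// Untyped API: the column name is just a string, so this typo compiles
// and is only reported at runtime, when Spark analyzes the plan.
val untypedError: Option[String] =
  try { ds.toDF().select("revnue"); None }
  catch { case e: AnalysisException => Some(e.getMessage) }

println(untypedError.getOrElse("no error"))
```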

One of the major benefits of the Dataset API is a reduction in memory usage: because the Spark framework understands the structure of the data in a Dataset, it can create an optimal in-memory layout when caching it. Tests have shown that the Dataset API can use 4.5x less memory than the same data represented as an RDD.

Figure 4.1 shows the analysis errors Spark reports with the various APIs for a distributed job, with SQL at one end of the spectrum and Datasets...

Those of you who have followed the Spark 2.0 announcements closely might have heard that the DataFrame API has been merged with the Dataset API, which means developers now have fewer concepts to learn and can work with a single high-level, type-safe API called Dataset.

The Dataset API takes on two distinct characteristics:

- A strongly typed API

- An untyped API

In Apache Spark 2.0, a DataFrame is just a Dataset of generic Row objects (in Scala, DataFrame is a type alias for Dataset[Row]). Generic rows are especially useful when you do not know the fields ahead of time: if you don't yet know the class that will eventually wrap the data, you can stay with a generic object and convert it to a specific class later, once you know what that class is. To switch to a particular class, you can ask Spark SQL to enforce types on the previously generated generic Row objects using the DataFrame's as[T] method.
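The sketch below illustrates the relationship described above: a DataFrame can be assigned to a `Dataset[Row]` without conversion, its rows are read generically by field name, and `as[T]` turns it into a typed Dataset. `ProductRecord` and its rows are hypothetical; the class is deliberately not called `Product` to avoid shadowing Scala's built-in `scala.Product` trait.

```scala
import org.apache.spark.sql.{DataFrame, Dataset, Row, SparkSession}

// Hypothetical class that the generic rows will eventually be mapped to.
case class ProductRecord(id: Long, name: String, price: Double)

val spark = SparkSession.builder().appName("dataframe-as-dataset").master("local[*]").getOrCreate()
import spark.implicits._

// Untyped: a DataFrame of generic Row objects.
val productsDF: DataFrame = Seq((1L, "Laptop", 899.0), (2L, "Mouse", 19.5)).toDF("id", "name", "price")

// Compiles without any conversion, because DataFrame is an alias for Dataset[Row].
val sameThing: Dataset[Row] = productsDF

// Generic access: fields are looked up by name and cast at runtime.
val firstName: String = productsDF.first().getAs[String]("name")

// Typed: as[T] maps columns onto the case class fields by name.
val productsDS: Dataset[ProductRecord] = productsDF.as[ProductRecord]
val cheap = productsDS.filter(_.price < 100.0).map(_.name).collect()
```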

Let us consider a simple example of loading a Product available...

In computer science, a session is a semi-permanent interactive information interchange between two communicating devices or between a computer and a user. SparkSession is similar: it provides a time-bounded interaction between a user and the Spark framework and lets you program with DataFrames and Datasets. We used SparkContext in the previous chapters while working with RDDs, but SparkSession should be your go-to starting point when working with DataFrames or Datasets.
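As a minimal sketch of the point above, the builder below creates (or reuses) a session, and the SparkContext from earlier chapters remains reachable through it. The application name and the `spark.sql.shuffle.partitions` value are illustrative choices, not recommendations.

```scala
import org.apache.spark.sql.SparkSession

// Builder pattern; getOrCreate() reuses an existing session (e.g. in spark-shell).
val spark = SparkSession.builder()
  .appName("spark-session-demo")                 // hypothetical application name
  .master("local[*]")                            // local mode; omit when using spark-submit on a cluster
  .config("spark.sql.shuffle.partitions", "8")   // illustrative runtime SQL setting
  .getOrCreate()

// The SparkContext used for RDDs in earlier chapters is still available.
val sc = spark.sparkContext

// A single entry point for DataFrames, Datasets and SQL.
val df = spark.range(5).toDF("n")
val viaSql = spark.sql("SELECT 1 + 1 AS two")
viaSql.show()
```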

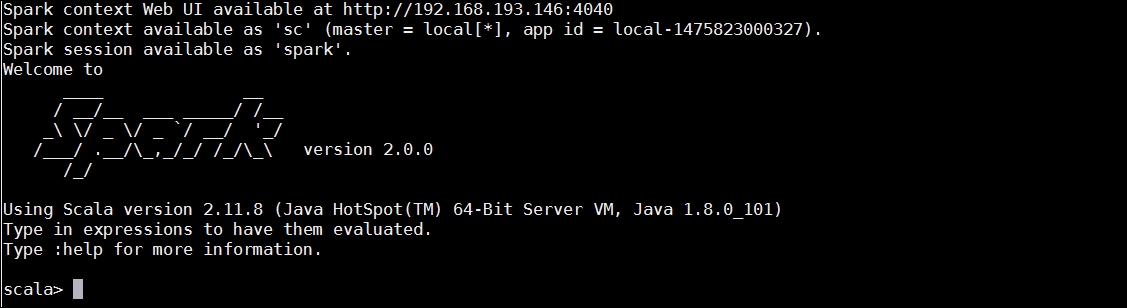

In Scala, Java, and Python you use the Builder pattern to create a SparkSession. Note that when you use spark-shell or pyspark, a SparkSession is already available as the spark object:

Figure 4.6: Spark session in Scala shell

The following image shows SparkSession in the Python shell:

Figure 4.7: SparkSession in Python shell

Example 4.1: Scala - Programmatically creating a Spark Session:

import org.apache.spark.sql...

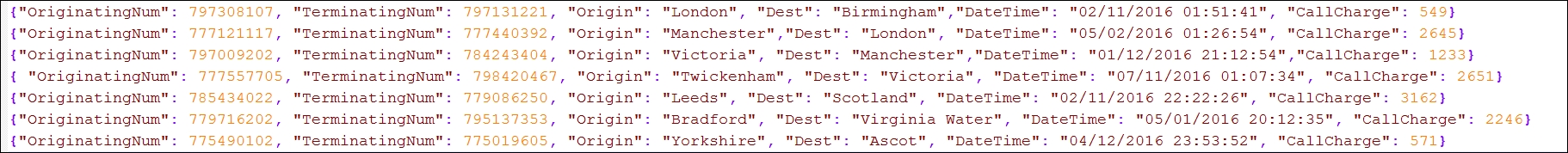

With a SparkSession object, applications can create DataFrames from an existing RDD, a Hive table, or any of the data sources we mentioned in Chapter 3, ELT with Spark. In the previous chapter we created DataFrames from text files and JSON documents. We are now going to use a Call Detail Records (CDR) dataset for some basic data manipulation with DataFrames. The dataset is available from this book's website if you want to use it for your own practice.
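Since an existing RDD is the first source mentioned above, here is a sketch of the two common ways to turn an RDD into a DataFrame (schema inferred from tuple types, or an explicit schema applied to an `RDD[Row]`) and of going back again via `.rdd`. The city and revenue values are hypothetical.

```scala
import org.apache.spark.sql.{Row, SparkSession}
import org.apache.spark.sql.types.{DoubleType, StringType, StructField, StructType}

val spark = SparkSession.builder().appName("rdd-to-dataframe").master("local[*]").getOrCreate()
import spark.implicits._

// Hypothetical (city, revenue) pairs held in an RDD.
val rdd = spark.sparkContext.parallelize(Seq(("London", 2.5), ("Manchester", 1.2)))

// 1) Schema inferred from the tuple types through spark.implicits.
val df1 = rdd.toDF("city", "revenue")

// 2) Explicit schema applied to an RDD[Row].
val schema = StructType(Seq(
  StructField("city", StringType, nullable = false),
  StructField("revenue", DoubleType, nullable = false)))
val df2 = spark.createDataFrame(rdd.map { case (c, r) => Row(c, r) }, schema)

// And back again: every DataFrame exposes its rows as an RDD[Row].
val backToRdd = df2.rdd.map(row => row.getAs[String]("city"))
```

The explicit-schema route is useful when the structure is only known at runtime, for example when it is read from a configuration file.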

A sample of the data set looks like the following screenshot:

Figure 4.8: Sample CDRs data set

We are going to perform the following actions on this data set:

- Load the dataset as a DataFrame.

- Print the top 20 records from the DataFrame.

- Display the schema.

- Count the total number of calls originating from London.

- Calculate the total revenue from calls originating in London and terminating in Manchester.

- Register the dataset as a table to be operated on using SQL.
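The steps above can be sketched as follows. The real CDR schema is only shown in Figure 4.8, so the field names here (`callId`, `originCity`, `destCity`, `revenue`) and the three rows are assumptions; the sketch writes them to a temporary JSON Lines file so it runs without the book's dataset. The fifth step is interpreted as calls from London to Manchester.

```scala
import java.nio.file.Files
import org.apache.spark.sql.SparkSession
import org.apache.spark.sql.functions.sum

val spark = SparkSession.builder().appName("cdr-dataframes").master("local[*]").getOrCreate()
import spark.implicits._

// Hypothetical CDR sample, one JSON object per line; field names are assumed.
val cdrJson = Seq(
  """{"callId": 1, "originCity": "London", "destCity": "Manchester", "revenue": 2.5}""",
  """{"callId": 2, "originCity": "London", "destCity": "Leeds", "revenue": 1.0}""",
  """{"callId": 3, "originCity": "Bristol", "destCity": "Manchester", "revenue": 0.75}""")
val path = Files.createTempFile("cdrs", ".json")
Files.write(path, cdrJson.mkString("\n").getBytes("UTF-8"))

// 1. Load the dataset as a DataFrame.
val cdrDF = spark.read.json(path.toString)

// 2. Print the top 20 records (show() displays 20 rows by default).
cdrDF.show()

// 3. Display the inferred schema.
cdrDF.printSchema()

// 4. Count calls originating from London.
val londonCalls = cdrDF.filter($"originCity" === "London").count()

// 5. Total revenue from calls originating in London and terminating in Manchester.
val londonToManchester = cdrDF
  .filter($"originCity" === "London" && $"destCity" === "Manchester")
  .agg(sum($"revenue"))
  .first()
  .getDouble(0)

// 6. Register a temporary view and query it with SQL.
cdrDF.createOrReplaceTempView("cdrs")
val bySql = spark.sql("SELECT COUNT(*) AS calls FROM cdrs WHERE originCity = 'London'")
bySql.show()
```

Steps 4 and 6 compute the same answer through the DataFrame API and through SQL, and both are planned by Catalyst.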

Apache Parquet is a columnar storage format available to any project in the Hadoop ecosystem, regardless of the choice of data processing framework, data model, or programming language. Parquet's design is based on Google's Dremel paper, and it is considered one of the best-performing data formats in a number of scenarios. We won't go into much detail about Parquet, but if you are interested you might want to read https://parquet.apache.org/. To show how Spark works with Parquet files, we will write the CDR JSON file as a Parquet file and then load it back before doing some basic data manipulation.

Example: Scala - Reading/Writing Parquet Files

// Reading a JSON file as a DataFrame

val callDetailsDF = spark.read.json("/home/spark/sampledata/json/cdrs.json")

// Write the DataFrame out as a Parquet file

callDetailsDF.write.parquet("/home/spark/sampledata/cdrs.parquet")

// Loading the Parquet file as a DataFrame

val callDetailsParquetDF...
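Because the listing above depends on the book's data files, here is a self-contained sketch of the same round trip under stated assumptions: a few hypothetical CDR rows stand in for the JSON file, and the output goes to a temporary directory rather than the book's path.

```scala
import java.nio.file.Files
import org.apache.spark.sql.{SaveMode, SparkSession}

val spark = SparkSession.builder().appName("parquet-roundtrip").master("local[*]").getOrCreate()
import spark.implicits._

// Hypothetical CDR rows standing in for the JSON file.
val cdrDF = Seq(("London", "Manchester", 2.5), ("Leeds", "London", 1.0))
  .toDF("originCity", "destCity", "revenue")

// Parquet output is a directory of part files.
val parquetDir = Files.createTempDirectory("cdrs-parquet").resolve("cdrs.parquet").toString

// Overwrite makes reruns idempotent instead of failing because the path exists.
cdrDF.write.mode(SaveMode.Overwrite).parquet(parquetDir)

// The schema is stored with the data, so nothing has to be inferred on read.
val parquetDF = spark.read.parquet(parquetDir)
parquetDF.printSchema()

// With a columnar format, Spark can read only the columns the query needs.
val fromLondon = parquetDF.filter($"originCity" === "London").select("destCity")
fromLondon.show()
```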

Hive is a data warehousing infrastructure built on Hadoop that provides SQL-like capabilities for working with data on Hadoop. In its infancy, Hadoop was limited to MapReduce as a compute platform, which was a very engineer-centric programming paradigm. In 2008, engineers at Facebook were writing fairly complex MapReduce jobs but realized that this approach would not scale and that it would be difficult to get the best value from the available talent. Relying on a team that could write MapReduce jobs and be called upon whenever needed was considered a poor strategy, so the team decided to bring SQL to Hadoop (Hive) for two major reasons:

- An SQL-based declarative language that still allowed engineers to plug in their own scripts and programs when SQL did not suffice.

- Centralized metadata about all data (Hadoop based datasets) in the organization, to create a data-driven organization.

Spark supports reading and writing data stored in Apache Hive. You would need to configure Hive with Apache Spark...
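As a hedged sketch of what Hive access looks like once configured: `enableHiveSupport()` requires the `spark-hive` module on the classpath, and without a `hive-site.xml` Spark falls back to a local Derby metastore (`metastore_db`) and a local warehouse directory. The table name `cdrs_hive` and its columns are hypothetical. If a session without Hive support already exists in the JVM, `getOrCreate()` returns it and Hive support is not enabled.

```scala
import org.apache.spark.sql.SparkSession

// Hive-enabled session; fails at startup if the Hive classes are not on the classpath.
val spark = SparkSession.builder()
  .appName("hive-demo")
  .master("local[*]")
  .enableHiveSupport()
  .getOrCreate()
import spark.implicits._

// HiveQL DDL runs through spark.sql; the table name and columns are hypothetical.
spark.sql(
  "CREATE TABLE IF NOT EXISTS cdrs_hive (originCity STRING, destCity STRING, revenue DOUBLE) STORED AS PARQUET")

// Append a DataFrame into the table; columns are matched by position.
Seq(("London", "Manchester", 2.5)).toDF("originCity", "destCity", "revenue")
  .write.insertInto("cdrs_hive")

// Query the Hive table with ordinary SQL.
val totals = spark.sql("SELECT originCity, SUM(revenue) AS total FROM cdrs_hive GROUP BY originCity")
totals.show()
```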

Spark provides the Spark SQL CLI to work with the Hive metastore service in local mode and execute queries entered from the command line.

You can start the Spark SQL CLI as follows:

./bin/spark-sql

Configuration of Hive is done by placing your hive-site.xml, core-site.xml, and hdfs-site.xml files in conf/. You may run ./bin/spark-sql --help for a complete list of all available options.

Working with other databases

We have seen how you can work with Hive, which is fast becoming a de facto data warehouse option in the open source community. However, most of the data in enterprises beginning their Hadoop or Spark journey is stored in traditional databases, including Oracle, Teradata, Greenplum, and Netezza. Spark lets you access these data sources over JDBC and returns the results as DataFrames. For the sake of brevity, we'll only share the Scala example of connecting to a Teradata database. Please remember to copy your database's JDBC driver class to all nodes...
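The following is a minimal sketch of a JDBC read through Spark's generic `jdbc` data source, not the chapter's own Teradata example. The URL format `jdbc:teradata://host/DATABASE=name` and the driver class `com.teradata.jdbc.TeraDriver` are assumptions based on the Teradata JDBC driver and should be checked against your driver's documentation; host, database, table and credentials are placeholders.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}

// Builds the JDBC options; every argument value is a placeholder supplied by the caller.
def teradataOptions(host: String, database: String, table: String,
                    user: String, password: String): Map[String, String] = Map(
  "url"       -> s"jdbc:teradata://$host/DATABASE=$database",  // assumed Teradata URL format
  "driver"    -> "com.teradata.jdbc.TeraDriver",               // assumed driver class name
  "dbtable"   -> table,          // a table name, or a subquery such as "(SELECT ...) t"
  "user"      -> user,
  "password"  -> password,
  "fetchsize" -> "10000"         // rows fetched per round trip
)

// Loads the table as a DataFrame; the driver JAR must be on the driver and executor classpath.
def readTeradata(spark: SparkSession, opts: Map[String, String]): DataFrame =
  spark.read.format("jdbc").options(opts).load()
```

Usage would be along the lines of `readTeradata(spark, teradataOptions("tdhost", "sales", "cdrs", user, password))`, with all names hypothetical. For large tables, the `partitionColumn`, `lowerBound`, `upperBound` and `numPartitions` options let Spark split the read across several parallel queries.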

The following references were used for the various topics in this chapter. You might want to go through the specific sections to get a more detailed understanding of individual topics.

In this chapter, we covered Spark SQL, the DataFrame API, the Dataset API, the Catalyst optimizer, the nuances of SparkSession, creating and manipulating DataFrames, and converting a DataFrame to an RDD and vice versa, before providing examples of working with DataFrames. This is by no means a complete reference for Spark SQL; it is perhaps just a good starting point for people planning to embark on their Spark journey via the SQL route. We have also seen that you can largely use your favorite API without worrying about performance, as Spark's Catalyst optimizer chooses an optimized execution plan.

The next chapter covers one of my favorite topics, Spark MLlib. Spark provides a rich API for predictive modeling, and the use of Spark MLlib is increasing every day. We'll look at the basics of machine learning before giving you an insight into how the Spark framework supports predictive analytics. We'll cover topics from building a machine learning pipeline, feature...