If you have picked up this book, you probably already know about PowerShell and PowerCLI, in all probability use them in your day-to-day routine, and now want to learn about them in more depth. You want to master the art of writing production-grade scripts for your own environment or for others and, in the process, master the mysteries of this technology.

In all my years of experience, I have seen that what separates an ordinary technical person from a sought-after one is advanced knowledge. To master a technology, you need to know its background: how it works, how it behaves, and so on. Anyone working with a tool knows how to run it; knowing the logic behind the tool and exactly how it works at the back end is what sets you apart and makes you its master. So, if you want to master PowerCLI, you need to expand your horizons beyond the normal cmdlets, go deeper, and get to know the intricacies of PowerShell, because PowerCLI is built on it.

My work experience varies widely, but it has all been related to the data center environment. Throughout my career, my programming has consisted of writing scripts to automate daily tasks in the data center or writing small tools for the DC environment. When I was learning the advanced topics of PowerShell and PowerCLI, most of the books I read were written from a developer's perspective, making them 'developer-ish' in nature (sounds familiar?). I also had to look through many different books to find different topics; there was no single place that covered all the advanced topics of both PowerShell and PowerCLI.

This book tries to cover all or most of the advanced topics of PowerShell and PowerCLI to enable you to master the subject and become a master scripter/tool maker, but at the same time, it is written from the perspective of a system admin. To achieve this, I have tried to avoid developer jargon and replace it with normal, simple examples. Note that this is a 'mastering PowerCLI' book, not a 'mastering PowerShell' book, so the examples given in this book are from the PowerCLI perspective. You can say that I am looking at PowerShell through the eyes of PowerCLI, and we will cover those PowerShell topics that will enable us to write production-grade scripts for managing VMware environments.

In this chapter, we will cover the following topics:

The essence of PowerShell and PowerCLI

Programming constructs and ways in which they are implemented in PowerShell

Automation through PowerShell scripts

How to run scripts from Command Prompt and as scheduled tasks

Using GitHub

Testing your scripts using Pester

How to connect to a vCenter environment and other VMware environments using PowerCLI cmdlets

In the following sections, we will briefly cover the background of PowerShell and PowerCLI and the latest changes in both. First, I will talk about PowerShell and then PowerCLI.

Computers came into existence for two purposes: the first was to crunch numbers quickly and accurately, and the second, and most important, was to automate tasks. In this age of cloud computing, automation is one of the most used words and one of the basic characteristics of the cloud. Since the beginning of the data center age, system administrators have automated their daily repetitive tasks so that they do not have to do the same tedious work again and again. Automation also helps to avoid the human errors that may creep in while typing long instructions or repetitive commands.

Now, the question that comes to mind is: how can we automate? There are many ways to automate a task (ask any developer). A general system administrator, who is not a developer, has a few basic weapons to choose from, but the most basic and widely used one is the shell script. For any operating system, a shell is the interface through which you interact with the operating system. Traditionally, we have used shell scripts to automate the mundane work of daily life and tasks that do not require extensive programming. Unix and Linux operating systems provide many shells, such as Bash, the C shell, the Korn shell, and so on. For the Windows environment, we have COMMAND.COM (in MS-DOS-based installations) and cmd.exe (in Windows NT-based installations). Before we talk about the more advanced shells, let's take a look at scripting and its history.

In general, a scripting language is a high-level programming language whose code is interpreted by another program at runtime, rather than being compiled ahead of time into machine code that the computer's processor executes directly, as is the case with other programming languages. The first interactive shells were developed in the 1960s, and shell scripts were used to control the running of computer programs from within another program, the shell. Scripting started with Job Control Language (JCL) and moved on to Unix shells, REXX, Perl, Tcl, Visual Basic, Python, JavaScript, and so on. For more details, refer to https://en.wikipedia.org/wiki/Scripting_language.

Traditional shell commands and scripts are best suited for command-line tasks or console-based environments, but with the advent of GUI-based servers and operating systems, there was a greater need for a tool that could work with the more sophisticated GUI environment. This requirement became more prominent in the Windows world once Windows shifted from its MS-DOS-based implementations to the NT-based core. Also, Windows traditionally provided basic scripting functionality only in the form of batch scripts, which was not enough for its GUI-based environment.

To address this and keep up with the changed environment, Microsoft came up with a novel solution in the form of PowerShell. It is a natural progression of the traditional shell for an advanced operating system environment, and it is one of the best and most powerful shell environments I have worked with. More and more serious development is going on around this tool. Today, it has become so important and mainstream that most of the major virtualization platforms support automating their general operations through it.

The major difference between traditional shells and PowerShell is that traditional shells are inherently text-based; that is, their inputs and outputs are text, whereas PowerShell inherently works on objects. This makes PowerShell far more modern and powerful than other shells: because it works on objects rather than text, it lets us perform tasks that were not possible with earlier shell scripts.
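To make the difference concrete, here is a minimal sketch that filters and sorts a small inventory of VM records. The `$inventory` data and its property names are hypothetical and exist only for illustration; the point is that `Where-Object` and `Sort-Object` work directly on typed properties, so there is no text parsing (cutting columns, matching with regular expressions) involved.

```powershell
# Hypothetical inventory: each entry is an object with typed properties,
# not a line of text that has to be split into columns.
$inventory = @(
    [PSCustomObject]@{ Name = 'web01';  MemoryGB = 8;  PowerState = 'PoweredOn'  }
    [PSCustomObject]@{ Name = 'db01';   MemoryGB = 32; PowerState = 'PoweredOn'  }
    [PSCustomObject]@{ Name = 'test01'; MemoryGB = 4;  PowerState = 'PoweredOff' }
)

# Filter on a numeric property and a string property, sort numerically,
# and return only the names.
$bigRunningVms = $inventory |
    Where-Object { $_.MemoryGB -ge 8 -and $_.PowerState -eq 'PoweredOn' } |
    Sort-Object -Property MemoryGB -Descending |
    Select-Object -ExpandProperty Name

$bigRunningVms
```

The same filter-and-sort pattern applies to the objects that PowerCLI cmdlets return, since they are also .NET objects with properties.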

Windows PowerShell supports running four types of commands:

Cmdlets, which are native PowerShell commands (basically, .NET classes designed to interact with PowerShell)

PowerShell functions

PowerShell scripts

Other standalone executable programs
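You can ask PowerShell which of these types a given command name resolves to. The following sketch uses `Get-Command` and its `CommandType` property; `Get-Greeting` is a hypothetical function defined only for the demonstration.

```powershell
# A function defined in the current session (hypothetical name).
function Get-Greeting {
    param([string] $Name = 'admin')
    "Hello, $Name"
}

# Get-Command reports what kind of command each name resolves to.
$cmdletType   = (Get-Command -Name Get-ChildItem).CommandType
$functionType = (Get-Command -Name Get-Greeting).CommandType

"Get-ChildItem is a $cmdletType"
"Get-Greeting is a $functionType"

# A .ps1 file resolves to the ExternalScript type,
# and a standalone executable resolves to Application.
```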

At the time of writing this book, the latest stable version of PowerShell is 4.0, and Microsoft has released a preview version of PowerShell 5.0 (Windows Management Framework 5.0 Preview November 2014). A few of the new features in this preview version are as follows:

The most important addition from the programming perspective is support for classes. As in any other object-oriented programming language, you can now use typical keywords such as Class, Enum, and so on.

A new ConvertFrom-String cmdlet has been added that extracts and parses structured objects from the content of text strings.

A new Microsoft.PowerShell.Archive module has been added that allows you to work with ZIP files from the command line.

A new OneGet module has been added that allows you to discover and install software packages from the Internet.
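To give a feel for the new class support, here is a small sketch written against the class and enum syntax of the released PowerShell 5.0 (the November 2014 preview discussed above may differ slightly). The `VmPowerState` enum and `VmRecord` class are hypothetical names used only for illustration; they are not PowerCLI types.

```powershell
# Requires PowerShell 5.0 or later (class and enum keywords).
enum VmPowerState {
    PoweredOff
    PoweredOn
    Suspended
}

class VmRecord {
    [string] $Name
    [int] $NumCpu
    [VmPowerState] $PowerState

    # Constructor: a new record starts in the PoweredOff state.
    VmRecord([string] $name, [int] $numCpu) {
        $this.Name = $name
        $this.NumCpu = $numCpu
        $this.PowerState = [VmPowerState]::PoweredOff
    }

    # Methods must declare their return type; [void] means no output.
    [void] PowerOn() {
        $this.PowerState = [VmPowerState]::PoweredOn
    }

    [string] ToString() {
        return '{0} ({1} vCPU, {2})' -f $this.Name, $this.NumCpu, $this.PowerState
    }
}

$vm = [VmRecord]::new('web01', 2)
$vm.PowerOn()
$vm.ToString()   # web01 (2 vCPU, PoweredOn)
```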

Note

For a list of the enhancements, check the documentation from Microsoft at https://technet.microsoft.com/en-us/library/hh857339.aspx.

VMware PowerCLI is a tool from VMware that is used to automate and manage vSphere, vCloud Director, vCloud Air, and Site Recovery Manager environments. It is based on PowerShell and provides modules and snap-ins of cmdlets for PowerShell.

Since it is based on PowerShell, it provides all the benefits of PowerShell scripting, along with the capability to manage and automate the entire VMware environment. It also provides C# and PowerShell interfaces to VMware vSphere, vCloud, and vCenter Site Recovery Manager APIs.

Generally, PowerCLI has the following features:

It is aimed toward system administrators.

It is installed on a Windows machine (client or server) as PowerShell snap-ins and modules.

It provides both low-level cmdlets (1:1 mappings of the API) and high-level cmdlets (API abstracted).

It is free; no license is required to use it.

At the time of writing this book, VMware has released PowerCLI 6.0 R1. The major new features of this release, among others, are as follows:

PowerCLI 6.0 R1 is backward compatible with vSphere 5.0 and supports all versions up to vSphere 6.0.

Support for vCloud Air has been added, so we can now manage the vCloud Air environment from the same single console.

User guides and 'getting started' PDFs are included as part of the PowerCLI installation.

PowerShell V3 and V4 are supported.

Virtual SAN (VSAN) support has been added.

Access to the vCloud Suite SDK has been added.

I/O filter management is supported.

Management of virtual hardware version 11 has been added.

Traditionally, PowerCLI provided two different components: one for managing the vSphere environment and another for the vCloud Director environment. With this release, there is no longer a separate package for tenants. Instead, a single installation package is provided, and at installation time you have the option to install both components (vSphere PowerCLI and vCloud PowerCLI) or only vSphere PowerCLI (vCloud PowerCLI is an optional component).

Earlier, PowerCLI had two main snap-ins that provided the major functionality, namely VMware.VimAutomation.Core and VMware.VimAutomation.Cloud. These two provided the core cmdlets to manage the vSphere environment and the vCloud Director environment, respectively. In this release, to keep up with PowerShell best practices, most of the cmdlets are available as "modules" instead of "snap-ins". So, in order to use the cmdlets, you now need to import the modules into your script or into the shell. For example, run the following code:

Import-Module 'C:\Program Files (x86)\VMware\Infrastructure\vSphere PowerCLI\Modules\VMware.VimAutomation.Cloud'

This code will import the module into the current running scope and these cmdlets will be available for you to use.
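As an alternative to hard-coding the full path, you can import a module by name, which works when its folder is listed in `$env:PSModulePath`. The sketch below assumes that the PowerCLI Modules folder has been added there (check your own system with `$env:PSModulePath -split ';'`). `Import-ModuleIfAvailable` is a hypothetical helper, not a PowerCLI or PowerShell cmdlet.

```powershell
# Hypothetical helper: import a module by name only if it is installed.
# Importing by name relies on the module folder being listed in $env:PSModulePath.
function Import-ModuleIfAvailable {
    param(
        [Parameter(Mandatory)]
        [string] $Name
    )

    if (Get-Module -ListAvailable -Name $Name) {
        Import-Module -Name $Name -ErrorAction Stop
        return $true
    }

    Write-Warning "Module '$Name' was not found in `$env:PSModulePath."
    return $false
}

# Usage with one of the PowerCLI 6.0 module names mentioned above:
# Import-ModuleIfAvailable -Name VMware.VimAutomation.Cloud
```

Returning `$true` or `$false` lets a script decide up front whether it can continue, instead of failing later on the first missing cmdlet.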

Another big change, especially if you work with both the vCloud Director and vSphere environments, is the RelatedObject parameter of the vSphere PowerCLI cmdlets. With it, you can directly retrieve vSphere inventory objects from cloud resources. This interoperability between the vSphere PowerCLI and vCloud Director PowerCLI components makes life easier for system admins. Because every VM created in vCloud Director has a UUID appended to its name in the vCenter Server, extra steps used to be necessary to correlate a VM in the vCenter environment with its equivalent vApp VM in vCloud Director. With this parameter, these extra steps are no longer required.

Here is a list of snap-ins and modules that are part of the vSphere PowerCLI package:

Since this is a book on mastering PowerCLI, I will assume that you already know the basics of the language, for example, how variables are declared in PowerShell and the various restrictions on them. So, in this section, I am going to provide a short refresher on the different programming constructs and how they are implemented in PowerShell. I will deliberately not go into much detail. For details, check out https://technet.microsoft.com/en-us/magazine/2007.03.powershell.aspx.

In any programming language, the first thing you need to learn about is variables. Declaring a variable in PowerShell is easy and straightforward: simply start the variable name with a $ sign. For example, run the following code:

PS C:\> $newVariable = 10

PS C:\> $dirList = Dir | Select Name

Note that at the time of variable creation, there is no need to mention the variable type.

You can also use the following cmdlets to create and manage variables:

New-Variable

Get-Variable

Set-Variable

Clear-Variable

Remove-Variable
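The following sketch walks a single variable through its life cycle with these cmdlets. The variable names (`vmCount`, `maxRetries`) are illustrative only. Note that the cmdlets take the variable name without the `$` sign.

```powershell
# Create a variable explicitly (equivalent to $vmCount = 0).
New-Variable -Name vmCount -Value 0

# Change its value.
Set-Variable -Name vmCount -Value 5

# Read the value back; -ValueOnly returns just the value, not the PSVariable object.
$current = Get-Variable -Name vmCount -ValueOnly

# Clear-Variable keeps the variable but sets its value to $null.
Clear-Variable -Name vmCount
$afterClear = $vmCount

# Remove-Variable deletes the variable from the current scope.
Remove-Variable -Name vmCount
$exists = Test-Path -Path Variable:\vmCount

# New-Variable can also create a read-only variable for settings that should not change;
# assigning a new value to $maxRetries afterward raises an error.
New-Variable -Name maxRetries -Value 3 -Option ReadOnly
```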

Best practice for variables is to initialize them properly. If they are not initialized properly, you can get unexpected results in unexpected places, leading to many errors. So, you can use Set-StrictMode in your script so that it catches any uninitialized variables and prevents errors from creeping in this way. For details, check out https://technet.microsoft.com/en-us/library/hh849692.aspx.
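The following sketch shows the kind of bug that strict mode catches: a misspelled variable name. Both functions are hypothetical examples. Without strict mode, the typo silently evaluates to `$null`; with `Set-StrictMode -Version Latest`, referencing the uninitialized variable raises an error that a `try`/`catch` block can handle.

```powershell
function Get-TotalWithoutStrictMode {
    $vmCount = 10
    # Typo: $vmCuont was never set, so it silently evaluates to $null and the result is 1.
    return $vmCuont + 1
}

function Get-TotalWithStrictMode {
    # Strict mode set inside a function applies to that function's scope and its child scopes.
    Set-StrictMode -Version Latest
    $vmCount = 10
    try {
        # Same typo: under strict mode, reading an uninitialized variable raises an error.
        return $vmCuont + 1
    }
    catch {
        return "Caught: $($_.Exception.Message)"
    }
}

Get-TotalWithoutStrictMode
Get-TotalWithStrictMode
```

In a real script, you would usually place `Set-StrictMode -Version Latest` at the top of the script file rather than inside each function.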

When we started programming, we began with flowcharts, then moved on to pseudo code, and finally implemented the pseudo code in the programming language of our choice. Throughout, the basic building blocks were the same. In fact, whatever programming language we write code in, the basic logic remains the same; only the implementation of those basic building blocks differs from language to language. For example, when we greet someone in English, we say "Hello", but in Hindi we say "Namaste". The purpose of the greeting and its effect are the same; only the words change, depending on the language.

Similarly, the building blocks of any logic can be categorized as follows:

Conditional logic

Conditional logic using loops

Now, let's take a look at how these two kinds of logic are implemented in PowerShell.

In PowerShell, we have if, elseif, else, and switch for conditional logic. To use these properly, we also need comparison and logical operators. The comparison and logical operators available in PowerShell are as follows:

Comparison operators:

| Operator | Description |
|---|---|
| -eq | Equal to |
| -ne | Not equal to |
| -lt | Less than |
| -gt | Greater than |
| -le | Less than or equal to |
| -ge | Greater than or equal to |

Logical operators:

| Operator | Description |
|---|---|
| -not | Logical NOT (negate) |
| ! | Logical NOT |
| -and | Logical AND |
| -or | Logical OR |
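A quick way to get comfortable with these operators is to evaluate them directly at the prompt, as in the following sketch (the variable values are arbitrary examples). Each expression returns `$true` or `$false`. String comparisons are case-insensitive by default; prefixing an operator with `c` (for example, `-ceq`) makes it case-sensitive.

```powershell
$numCpu   = 4
$memoryGB = 16
$name     = 'WEB01'

# Comparison operators return $true or $false.
$numCpu -ge 4                              # True
$memoryGB -lt 8                            # False

# String comparisons ignore case unless you use the case-sensitive form.
$name -eq 'web01'                          # True
$name -ceq 'web01'                         # False

# Combine conditions with logical operators.
($numCpu -ge 4) -and ($memoryGB -ge 16)    # True
-not ($memoryGB -gt 8)                     # False
!($numCpu -eq 2)                           # True
```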

The syntax for the if statement is as follows:

if (condition) { Script Block }

elseif (condition) { Script Block }

else { Script Block }

In the preceding statement, both elseif and else are optional. The "condition" is the logic that decides whether the "script block" will be executed. If the condition is true, the script block is executed; otherwise, it is not. A simple example is as follows:

if ($a -gt $b) { Write-Host "$a is bigger than $b" }

elseif ($a -lt $b) { Write-Host "$a is less than $b" }

else { Write-Host "Both $a and $b are equal" }

The preceding example compares the two variables $a and $b and, depending on their values, reports whether $a is greater than, less than, or equal to $b.

The syntax for the switch statement in PowerShell is as follows:

switch (value) {

Pattern 1 {Script Block}

Pattern 2 {Script Block}

Pattern n {Script Block}

default {Script Block}

}

If any one of the patterns matches the value, the respective script block is executed. If none of them matches, the script block for default is executed.

The switch statement is very useful for replacing long if {}, elseif {}, elseif {}, else {} chains. It is also very useful for providing a selection of menu items.

One important point to note is that even if a match is found, the remaining patterns are still checked, and if any other pattern matches, its script block is also executed. For examples of the switch statement and more details, check out https://technet.microsoft.com/en-us/library/ff730937.aspx.
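The following sketch demonstrates that behavior and how to stop it. The conditions are illustrative: a pattern written as a script block (`{ $_ -gt 0 }`) is evaluated against the current value `$_`. Without `break`, every matching clause runs; adding `break` to a clause stops the switch at the first match.

```powershell
$value = 5

# Without break, every matching clause runs (default runs only if nothing matched).
$matched = switch ($value) {
    { $_ -gt 0 }  { 'positive' }
    { $_ -lt 10 } { 'less than ten' }
    5             { 'exactly five' }
    default       { 'no match' }
}
# $matched holds: positive, less than ten, exactly five

# With break, evaluation stops after the first matching clause.
$firstMatch = switch ($value) {
    { $_ -gt 0 }  { 'positive'; break }
    { $_ -lt 10 } { 'less than ten'; break }
    default       { 'no match' }
}
# $firstMatch holds: positive
```

For menu-style scripts, where only one choice should ever run, ending each clause with `break` avoids surprises.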

In PowerShell, you have the following loops for conditional logic:

do while

while

do until

for

foreach

ForEach-Object

The syntax for do while is as follows:

do {

Script Block

} while (condition)

The syntax for the while loop is as follows:

while (condition) { Script Block }

The following examples show both loops. Say we want to add the numbers 1 through 10.

The do while implementation is as follows:

$sum = 0

$i = 1

do {

$sum = $sum + $i

$i++

} while ($i -le 10)

$sum

The while implementation is as follows:

$sum = 0

$i = 1

while ($i -le 10)

{

$sum = $sum + $i

$i++

}

$sum

In both the preceding cases, the script block is executed as long as the condition is true. The main difference is that, in the case of do while, the script block is executed at least once, whether the condition is true or false, because the script block runs first and the condition is checked afterward. In the case of the while loop, the condition is checked first, and the script block is executed only if the condition is true.

The syntax for the do until loop is as follows:

do {

Script Block

} until (condition)

Logically, do until is the opposite of do while: the script block runs as long as the condition is false, and the moment the condition becomes true, the loop terminates. Like do while, it always runs the script block at least once.
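For comparison with the do while and while examples, here is the same sum of 1 through 10 written as a do until loop. Note that the condition is inverted: instead of continuing while `$i -le 10`, the loop stops once `$i -gt 10`.

```powershell
$sum = 0
$i = 1

do {
    $sum = $sum + $i
    $i++
} until ($i -gt 10)   # stop as soon as $i exceeds 10

$sum   # 55
```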

The syntax for the for loop is as follows:

for (initialization; condition; repeat)

{code block}

The typical use case of a for loop is when you want to run a loop a specified number of times. To write the preceding example as a for loop, we write it in the following manner:

$sum = 0

for ($i = 1; $i -le 10; $i++)

{

$sum = $sum + $i

}

$sum

The syntax for the foreach loop is as follows:

foreach ($<item> in $<collection>)

{code block}

The purpose of the foreach statement is to step through (iterate over) a series of values in a collection of items. In the following example, we add each number from 1 to 10 using the foreach loop:

# Initialize the variable $sum

$sum = 0

# foreach statement starts

foreach ($i in 1..10)

{

# Adding value of variable $i to the total $sum

$sum = $sum + $i

} # foreach loop ends

# showing the value of variable $sum

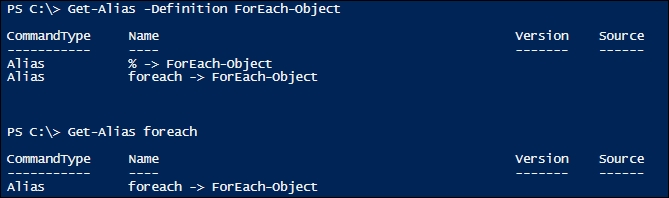

$sumLogically, foreach and Foreach-Object do similar tasks, and sometimes this can create confusion between the two. Both of them are used to iterate through collections and perform an action against each item in the collection. In fact, foreach is an alias for Foreach-Object.

When we use Foreach in the middle of a pipeline, that is, when we pipe into Foreach, it is used as an alias for Foreach-Object, but when used at the beginning of the line, it is used as a PowerShell statement.

Also, the main difference between the foreach statement and ForEach-Object is that ForEach-Object processes each object as soon as it comes through the pipeline, whereas with the foreach statement, all the objects are collected first and then the statement body is executed for each of them.

So, when we use the foreach statement, we need to make sure that there is enough memory available to hold all the objects.

That said, thanks to its optimizations, the foreach statement usually runs much faster than ForEach-Object, so the decision again boils down to the age-old question of performance versus space.

So, depending on the program that you are writing, you need to choose wisely between the two.
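The following sketch puts the three forms side by side: the foreach statement, the ForEach-Object cmdlet, and foreach used as an alias after a pipe. All three produce the same total; the Measure-Command lines let you compare the speed of the statement and the cmdlet on your own system (timings vary from machine to machine, so no figures are shown).

```powershell
# foreach statement: the value after 'in' is evaluated up front
# (for command output, all objects are collected first), then the body runs once per item.
$statementTotal = 0
foreach ($n in 1..5) {
    $statementTotal += $n
}

# ForEach-Object cmdlet: each item is processed as it arrives from the pipeline.
$pipelineTotal = 0
1..5 | ForEach-Object { $pipelineTotal += $_ }

# 'foreach' after a pipe is treated as the alias for ForEach-Object.
$aliasTotal = 0
1..5 | foreach { $aliasTotal += $_ }

# Compare speed on your own system.
$statementMs = (Measure-Command { foreach ($n in 1..10000) { $null = $n } }).TotalMilliseconds
$pipelineMs  = (Measure-Command { 1..10000 | ForEach-Object { $null = $_ } }).TotalMilliseconds
"foreach statement: $statementMs ms; ForEach-Object: $pipelineMs ms"
```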

From a programming perspective, there is not much difference between a scripting language and a more traditional programming language. You can make pretty complex programs with a scripting language.

The main difference between a traditional programming language and a scripting language is that, with a programming language, you can build compiled binary code that runs standalone. A script cannot run standalone; it depends on another program to execute, or interpret, it at runtime. Also, the code of a script is normally available for you to read: it is in plain text form and, at runtime, it is executed line by line. With a traditional programming language, you get binary code that cannot easily be converted back to source code.

To summarize, if the runtime works from the readable source code, it is a scripting language; if it runs precompiled binary code, the program was produced with a more traditional programming language.

Another more generic difference is that in typical scripting languages such as shell and PowerShell scripts, we use commands, which can be run directly from the command line and can give you the same result. So, in a script, we use those full high-level commands and bind them using basic programming structures (conditional logic, loops, and so on) to get a more sophisticated and complex result, whereas in traditional programming you use basic constructs and create your own program to solve a problem.

In PowerShell, the commands are called cmdlets, and we use these cmdlets and the basic constructs to get what we want to achieve. PowerShell cmdlets typically follow a verb-noun naming convention, where each cmdlet starts with a standard verb, hyphenated with a specific noun. For example, Get-Service, Stop-Service, and so on.
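Because of this naming convention, you can discover commands by verb or by noun. The following sketch uses the built-in `Get-Verb` and `Get-Command` cmdlets; the `Item` noun is only an example.

```powershell
# List the approved verbs (Get, Set, New, Remove, Start, Stop, ...).
$approvedVerbs = Get-Verb | Select-Object -ExpandProperty Verb

# Find every command that works on the "Item" noun.
Get-Command -Noun Item | Select-Object -ExpandProperty Name

# Find a specific command by verb and noun.
$getItem = Get-Command -Verb Get -Noun Item
$getItem.Name
```

Once a PowerCLI module has been imported, the same approach works for its cmdlets, for example `Get-Command -Module VMware.VimAutomation.Core -Verb Get`.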

To write a PowerShell script, you can use any text editor, write the required code, and then save the file with a .ps1 extension. Next, from the PowerShell command line, run the script to get the desired result.

There are many ways to run a PowerShell script. Most of the time, the PowerShell console or the ISE is used. There will be instances (and in the daily life of a system administrator, these occur frequently) when you would like to schedule a script so that it runs at a particular time every day or just once. These processes are described as follows:

Let's see how we use the PowerShell console/ISE.

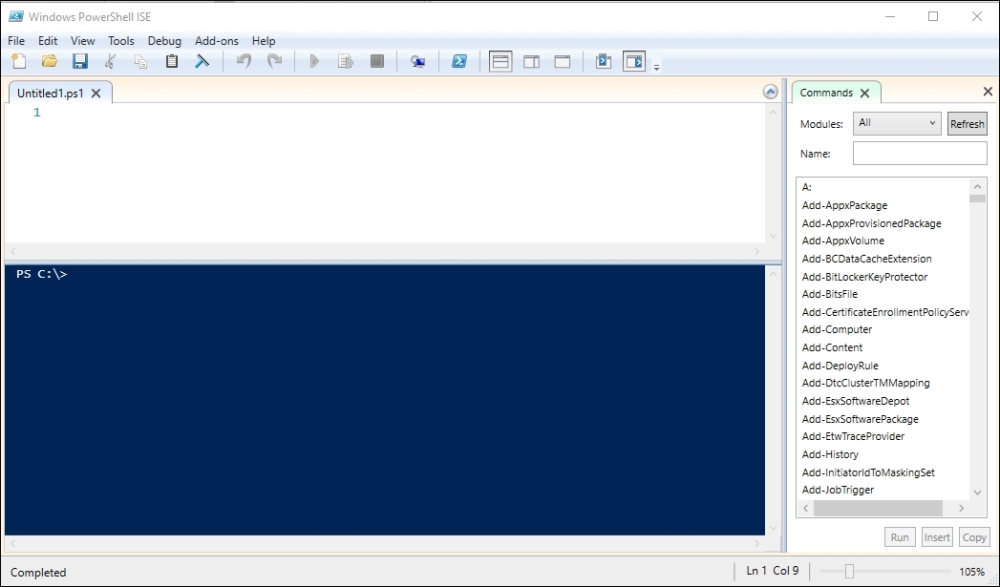

In the PowerShell console, you get only a command-line console, similar to the CMD shell, whereas the ISE provides a more graphical interface with multiple views. As can be seen from the screenshot, the PowerShell ISE provides three main views. On the left-hand side of the screen, you have an editor and a console, and on the right-hand side, you have the command window. Using the editor, you can write your scripts quickly and then run them from the same window. The output is shown in the console below the editor. From the command window, you can search for the command that you are looking for and then choose to run, insert, or copy it. It also gives you multiple options for filling in the command's parameters.

Due to the flexibility of the ISE and the ease with which we can work with it, it is my preferred way of working, and it will be used for the examples in the rest of the book. Although there are many specialized editors available for PowerShell, I am not going to cover them in this chapter; I will touch on this topic in the last chapter and talk about my favorite editor.

So, we've started the ISE. Now let's write our first script that consists of a single line:

Write-Host "Welcome $args !!! Congratulations, you have run your first script!!!"

Now, let's save the preceding line in a file named Welcome.ps1. From the ISE command line, go to the location where the file is saved and run the file with the following command:

PS C:\Scripts> .\Welcome.ps1 Sajal Debnath

What happened? Were you able to run the command? In all probability, you will get an error message, as shown in the following code snippet (in case you are running the script for the first time):

PS C:\Scripts> .\Welcome.ps1 Sajal Debnath

.\Welcome.ps1 : File C:\Scripts\Welcome.ps1 cannot be loaded because running scripts is disabled on this system. For more information, see about_Execution_Policies at http://go.microsoft.com/fwlink/?LinkID=135170.

At line:1 char:1 + .\Welcome.ps1 Sajal Debnath + ~~~~~~~~~~~~~ + CategoryInfo : SecurityError: (:) [], PSSecurityException + FullyQualifiedErrorId : UnauthorizedAccess

So, what does it say and what does it mean? It says that running scripts is disabled on the system.

Whether you are allowed to run a script is determined by the execution policy set on the system. You can check the policy by running the following command:

PS C:\Scripts> Get-ExecutionPolicy

Restricted

So, you can see that the execution policy is set to Restricted (which is the default). Now, let's check what other options are available:

PS C:\Scripts> Get-Help ExecutionPolicy

| Name | Category | Module | Synopsis |
|---|---|---|---|
| Get-ExecutionPolicy | Cmdlet | Microsoft.PowerShell.S... | Gets the execution policies for the current session. |
| Set-ExecutionPolicy | Cmdlet | Microsoft.PowerShell.S... | Changes the user preference for the Windows PowerSh... |

Note that we have Set-ExecutionPolicy as well, so we can set the policy using this cmdlet. Now, let's check the different policies that can be set:

PS C:\Scripts> Get-Help Set-ExecutionPolicy -Detailed

Tip

Perhaps your most useful friend among PowerShell cmdlets is Get-Help. As the name suggests, it provides help for the cmdlet in question. To find help for a cmdlet, just type Get-Help <cmdlet>. This cmdlet has many useful parameters, especially -Full and -Examples. I strongly suggest that you type Get-Help in PowerShell and read the output.

Part of the output shown is as follows:

Specifies the new execution policy. Valid values are:

-- Restricted: Does not load configuration files or run scripts. "Restricted" is the default execution policy.

-- AllSigned: Requires that all scripts and configuration files be signed by a trusted publisher, including scripts that you write on the local computer.

-- RemoteSigned: Requires that all scripts and configuration files downloaded from the Internet be signed by a trusted publisher.

-- Unrestricted: Loads all configuration files and runs all scripts. If you run an unsigned script that was downloaded from the Internet, you are prompted for permission before it runs.

-- Bypass: Nothing is blocked and there are no warnings or prompts.

-- Undefined: Removes the currently assigned execution policy from the current scope. This parameter will not remove an execution policy that is set in a Group Policy scope.

For the purpose of running our script and for the rest of the examples, we will set the policy to Unrestricted. We can do this by running the following command:

PS C:\> Set-ExecutionPolicy Unrestricted

Tip

Although I have set the policy to Unrestricted here, it is not at all secure. From the security perspective, and for all other practical purposes, I suggest that you set the policy to RemoteSigned.

Now, if we try to run the earlier script, it runs successfully and gives the desired result:

PS C:\Scripts> .\Welcome.ps1 Sajal Debnath

Welcome Sajal Debnath !!! Congratulations, you have run your first script!!!

So, we are all set to run our scripts.

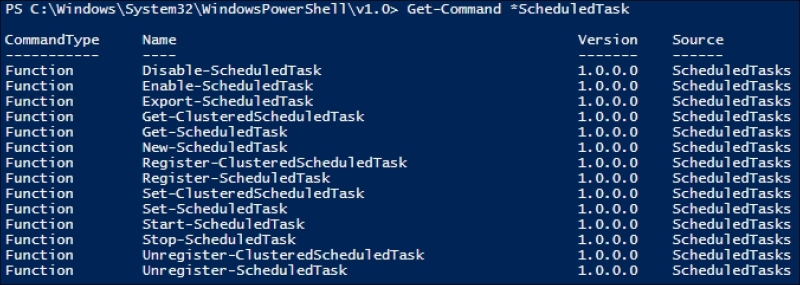

Now, let's take a look at how we can schedule a PowerShell script using Windows Task Scheduler.

Before we go ahead and schedule a task, we need to finalize the command that, when run from Task Scheduler, will run the script and give the desired result. The best way to check this is to run the same command from Start → Run.

For example, if I have a script in C:\ by the name Report.ps1, I can run the script from the command line by running the following command:

powershell -File "C:\Report.ps1"

Another point to note here is that once the preceding command completes, the PowerShell window closes. So, if you want the PowerShell window to stay open so that you can see any error messages, add the -NoExit switch. The command then becomes:

powershell -NoExit -File "C:\Report.ps1"

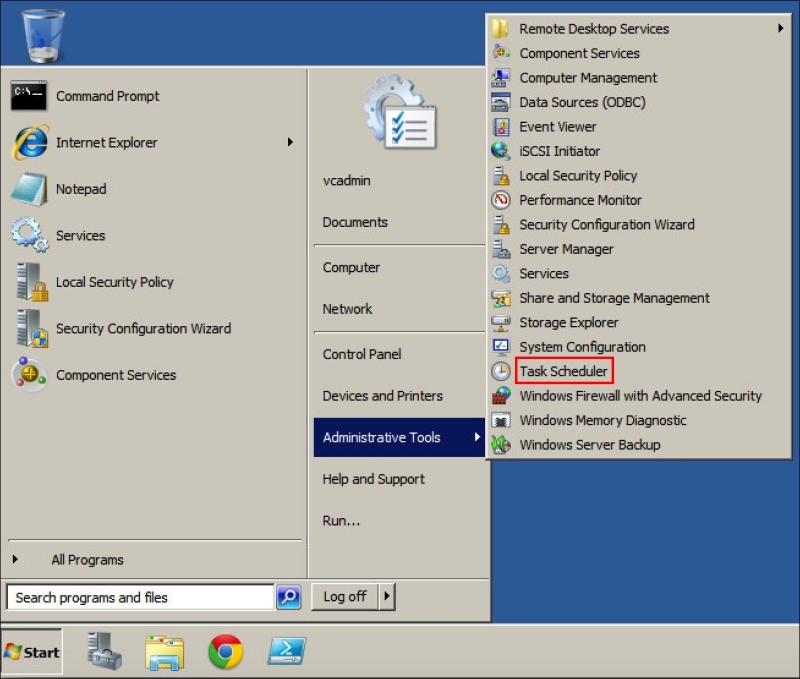

Depending on the version of Windows installed, Windows Task Scheduler is generally found in either Control Panel → System and Security → Administrative Tools → Task Scheduler or Control Panel → Administrative Tools → Task Scheduler, or you can go to Task Scheduler from the Start menu, as shown in the following screenshot:

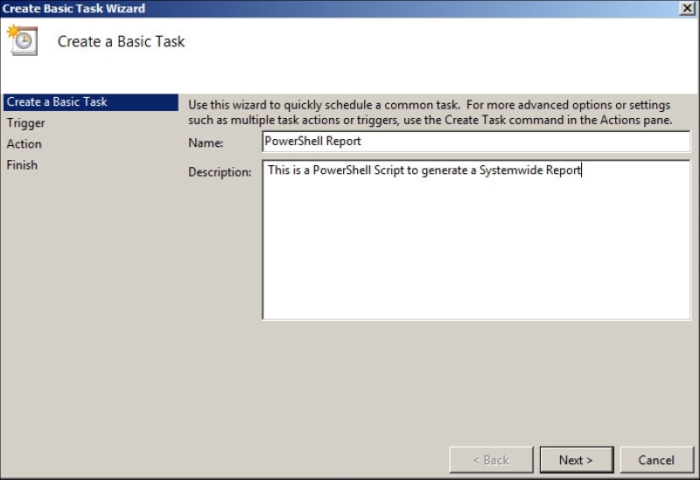

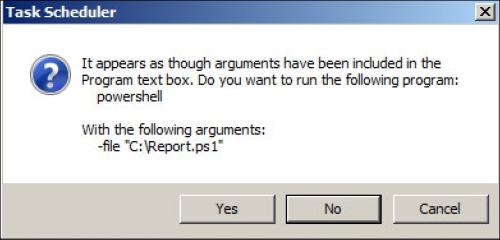

On the right-hand side pane, under Actions, click on Create Basic Task. A new window opens. In this window, provide a task name and description:

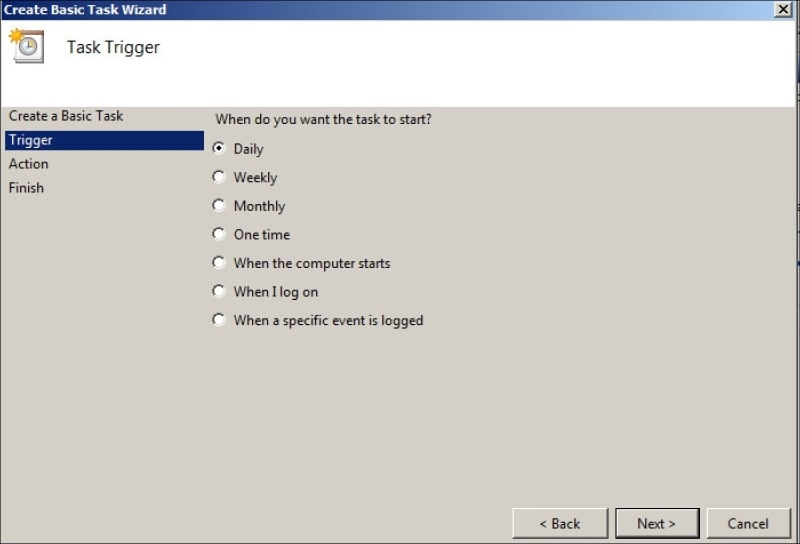

The next window asks for the trigger, that is, the event that starts the action. Select the trigger according to your requirements (how frequently you want to run the script).

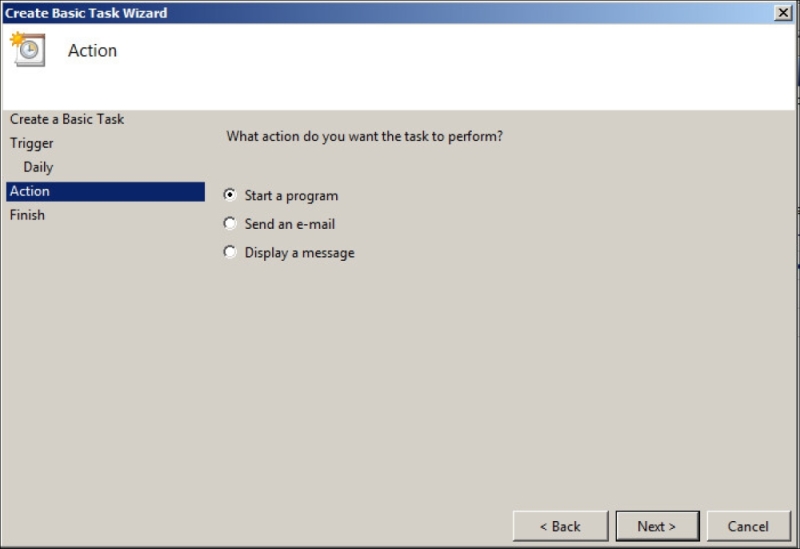

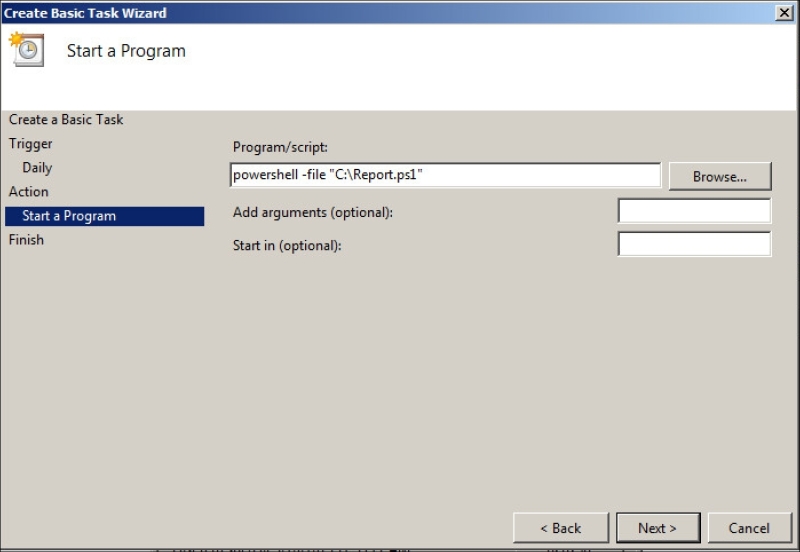

Select a type of action that you want to perform. For our purpose, we will choose Start a program.

In the next window, provide the command that you want to execute (the command that we checked by running it in Start → Run). In our example, it is as follows:

powershell -File "C:\Report.ps1"

In the next confirmatory window, select Yes.

The last window will provide you an overview of all the options. Select Finish to complete the creation of the scheduled task. Now, the script will run automatically at your predefined time and interval.

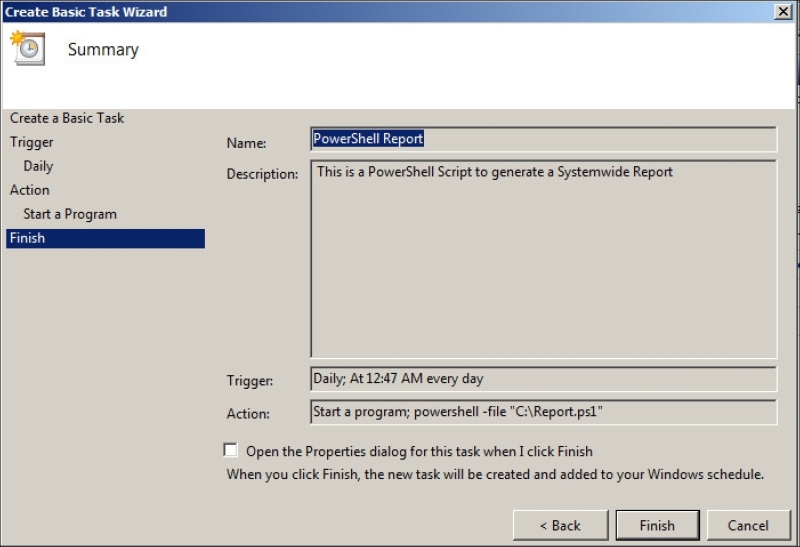

Instead of using the GUI, you can create, modify, and control scheduled tasks from the PowerShell command line as well. For a list of the relevant cmdlets, run the following command:

PS C:\> Get-Command *ScheduledTask

From the list of the available cmdlets, you can select the one you need and run it. For more details on the command, you can use the Get-Help cmdlet.
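As an example of the command-line approach, the following sketch builds the same daily task with cmdlets from the ScheduledTasks module, which ships with Windows 8 / Windows Server 2012 and later. The script path, time, and task name are example values only, and the Register-ScheduledTask call is left commented out so that running the sketch does not create a task on your system.

```powershell
# Requires the ScheduledTasks module (Windows 8 / Windows Server 2012 and later).
if (Get-Command -Name New-ScheduledTaskAction -ErrorAction SilentlyContinue) {
    # Example values only: adjust the script path, schedule, and task name.
    $action = New-ScheduledTaskAction -Execute 'powershell.exe' `
        -Argument '-NoProfile -File "C:\Report.ps1"'

    # Run the script every day at 6:00 AM.
    $trigger = New-ScheduledTaskTrigger -Daily -At '6:00 AM'

    # Uncomment to actually create the task (may require an elevated session).
    # Register-ScheduledTask -TaskName 'Daily Report' -Action $action -Trigger $trigger `
    #     -Description 'Runs C:\Report.ps1 every day'
}
else {
    Write-Warning 'The ScheduledTasks cmdlets are not available on this system.'
}
```

The -Argument string is the same command line that you tested from Start → Run, minus the executable name.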

Version control is a process by which you track and record changes made to a particular file or set of files so that you can get back specific versions later when needed. Although versioning can be, and is, applied to any kind of file on a computer, for the purpose of this book we will talk about version control for your scripts. Traditionally, every one of us keeps some kind of versions of our work, either by creating multiple copies of it or by copying it to another location. But this process is very error-prone. So, for a more reliable and error-free solution, special version control systems were devised. In today's world, especially in software development projects where many people work on the same project, a version control system for keeping track of who did what is extremely important, and these tools are a must for maintaining and managing projects.

To solve the preceding problem, many centralized version control systems came into existence, such as CVS, Subversion, and Perforce. These centralized version control systems were easy to maintain, but they had the serious issue of putting all the eggs in one basket. If the central version control server went down, no one could collaborate or work during that time. Also, if the hard disk crashed or the data got corrupted, all the data was lost.

Due to the preceding problem, distributed version control systems came into existence. In a distributed version control system, clients do not just check out snapshots of the files kept on the central server; they also maintain a full replica of those files on their local system. So, if something happens to the main server, a local copy is always available, and the server can be restored simply by copying the data from any of the local systems. Thus, in a distributed version control system, every local client acts as a full backup of the server's data. A few such tools are Git, Mercurial, Darcs, and so on.

Among all the distributed version control systems, Git is the most widely used because of the following advantages:

The following are a few of the differences between Git and other version control systems:

In a traditional version control system, only the differences (deltas) between versions of a file are saved, whereas in Git, versions are saved as snapshots. So, while traditional tools treat the data as a set of files and the changes made to each file over time, Git treats the data as a series of snapshots.

Most operations in Git are local. To check something in the history or retrieve an old version, you do not need to be connected to a remote server. You also don't need a connection to change and commit files; the changes are saved locally, and you can push them to the remote server at a later stage. This is what makes Git fast.

Everything saved in Git is checksummed (with SHA-1), and Git refers to the saved content by that checksum. So, it is practically impossible to change saved information without Git knowing about it.

Nearly every action in Git only adds data to the repository, so it is very difficult to lose data in Git. And because the data is usually pushed to other repositories as well, you can almost always recover from unexpected corruption.
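To make the checksum idea concrete, here is a small sketch of how Git derives the ID of a stored file (a blob), assuming a default SHA-1 repository: it hashes a short header (the word blob, a space, the content length in bytes, and a NUL character) followed by the content itself. Get-GitBlobHash is a hypothetical helper written only for this illustration; it is not part of Git or PowerShell.

```powershell
function Get-GitBlobHash {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory)]
        [AllowEmptyString()]
        [string]$Content
    )
    # Git hashes the raw bytes of the file; UTF-8 is assumed here for text content
    $body = [System.Text.Encoding]::UTF8.GetBytes($Content)
    # Header: "blob <size in bytes>" followed by a NUL character (`0)
    $header = [System.Text.Encoding]::UTF8.GetBytes("blob $($body.Length)`0")
    $sha1 = [System.Security.Cryptography.SHA1]::Create()
    try {
        # Hash header + content as one byte array
        $digest = $sha1.ComputeHash([byte[]]($header + $body))
    }
    finally {
        $sha1.Dispose()
    }
    # Render as the familiar 40-character lowercase hexadecimal ID
    -join ($digest | ForEach-Object { $_.ToString('x2') })
}
```

For example, passing the text hello world followed by a newline should return 3b18e512dba79e4c8300dd08aeb37f8e728b8dad, the same ID that echo 'hello world' | git hash-object --stdin prints in a Unix shell. Change a single character and the ID changes completely, which is why Git notices any modification to stored content.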

This was a very short discussion of Git, which we needed because GitHub is built entirely on Git. In this book, we will focus on GitHub and see how it is used, for the following reasons:

Git is a command-line tool and can be intimidating for someone who does not program every day. For a scripter, GitHub, with its web interface and GUI application, is a far friendlier solution.

Bringing your scripts to GitHub connects you to the social network of collaboration, and you can work with others in a more collaborative way.

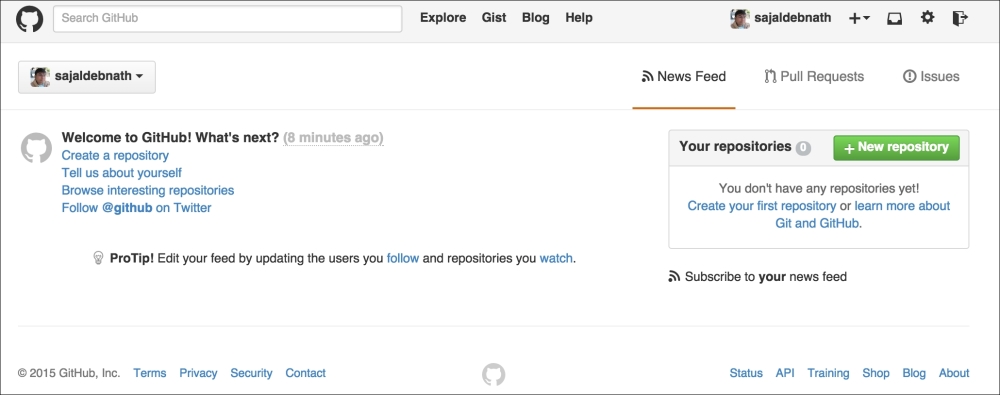

So, without further ado, let's dive into GitHub. GitHub is the largest host for Git repositories; millions of developers work on projects hosted there. To use GitHub, the first thing you need to do is create an account by visiting https://github.com/ and filling in the sign-up section. Note the e-mail ID that you use to create the account, as you will use the same e-mail ID to connect your local repository to this account at a later stage.

Once you log in to GitHub, you can create an SSH key pair so that your local repository can work with the GitHub repository. For security reasons, you should also enable two-factor authentication for your account. To do so, perform the following steps:

Log in to your account and go to Settings (top-right corner).

On the left-hand side, under the Personal settings category, choose Security.

Next, click on Set up two-factor authentication.

Then, choose whether to receive authentication codes through an app or by SMS.

So, you have created an account and set up two-factor authentication. Since we also want to work on our local systems, we need to install Git locally. Go ahead and download the version for your operating system from http://git-scm.com/downloads.

There are two ways to configure Git: with the command-line tool, or with GitHub's GUI application, which either includes Git or can install it as part of its setup. For my examples, I have used the GUI application, GitHub Desktop, which can be downloaded from https://desktop.github.com/. First, let's start with the command-line tool.

Open the command-line tool and run the following commands to configure the environment:

git config --global user.name "Your Name"
git config --global user.email "email@email.com"

You need to replace Your Name with your name and email@email.com with the e-mail with which you created your account in GitHub.

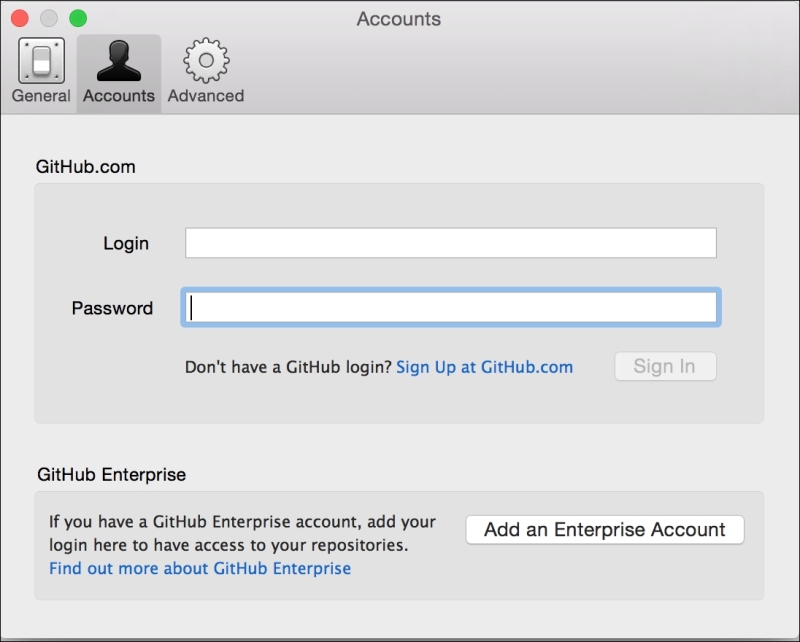

You can set the same details using the GitHub application as well. Once you have installed it, go to Preferences and then Accounts. Log in with the account that you created on the GitHub site. This will connect the application to your account on GitHub.

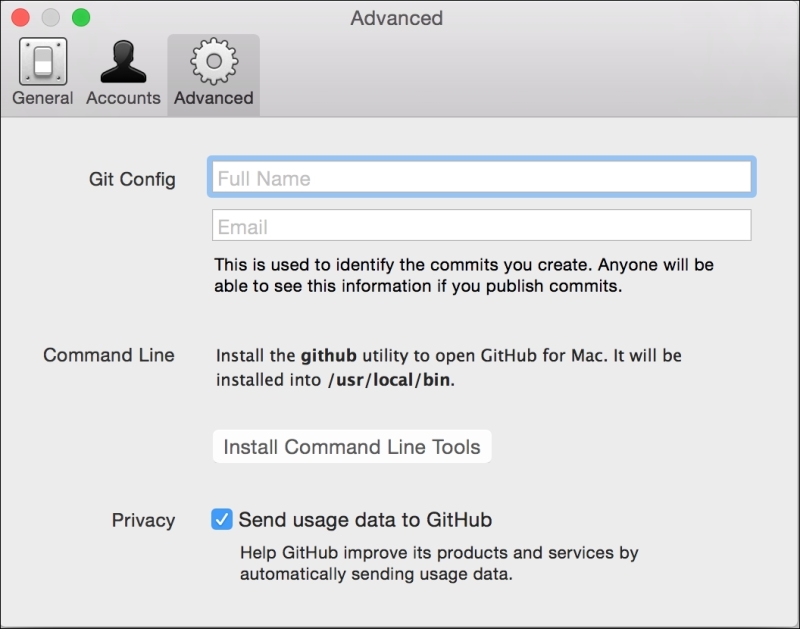

Next, go to the Advanced tab and fill in the details that you provided in the previous configuration under the Git Config section. Also, under the Command Line section, click on Install Command Line Tools. This will install the GitHub command-line utility on the system.

Okay, so now we have installed everything that we require, so let's go ahead and create our first repository.

To create a repository, log in to your account in GitHub, and then click on the +New Repository tab:

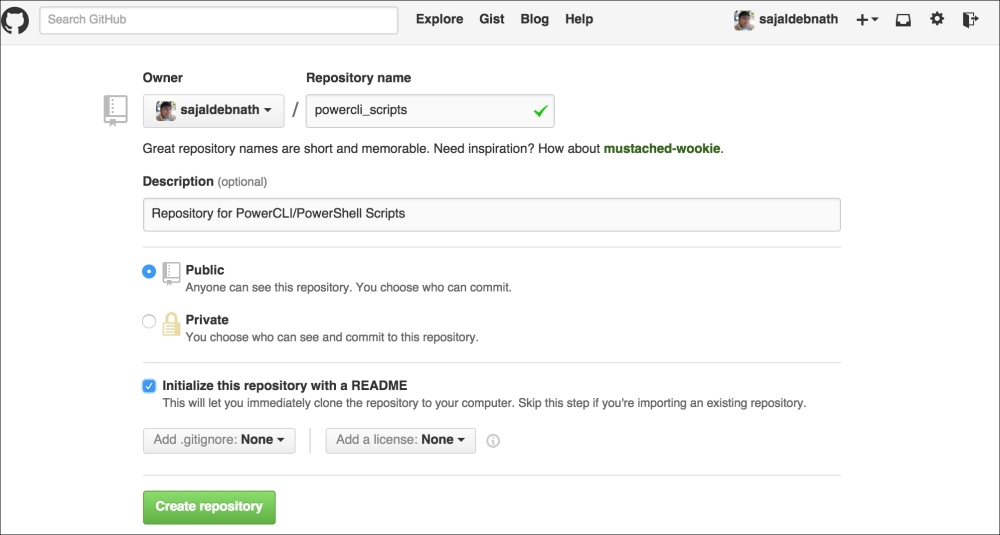

Next, provide a name for the repository, provide a description, and select whether you want to make it Private or Public. You can also select Initialize this repository with a README.

Once the preceding information is provided, click on Create Repository. This will create a new repository under your name, and you will be the owner of the repository.

Before we go ahead and talk more about using GitHub, let's talk about a few concepts and how they work in GitHub.

There are two collaborative models in which GitHub works.

In the fork & pull model, anyone can fork an existing repository and push changes to their personal fork. They do not need to be granted any permissions on the source repository to do this. When the changes in the fork are ready to go into the original repository, the project owner must pull them in. This reduces the up-front coordination needed between team members and collaborators, who can work more independently. This model is popular among open source collaborators.

In the shared repository model, everyone working on the project is granted push access to the original repository, and thus, anyone working on this project can update the original project. This is mainly used in small teams or private projects where organizations collaborate to work on a project.

Pull requests are especially useful in the fork & pull model, as they notify the project maintainer about changes that are ready to be merged. They also start a code review and discussion of the changes before they are merged into the original project.

When you create a repository, it has a single branch, named master by default. So, how does another person work on the same project without disturbing master? They create a branch for themselves. A branch is a separate line of development that starts as a copy of the current state of master. You can make all your changes on the branch, and when you are ready, merge them back into master.

To summarize, a typical GitHub workflow is as follows:

Create a branch from the master branch.

Make some changes of your own.

Push this branch to your GitHub project.

Open a pull request in GitHub.

Discuss the changes, and if required, continue working on the changes.

The project owner merges or closes the pull request.

You can carry out the preceding workflow from the command line using Git or through the GitHub GUI. For those who prefer the CLI, here are the main commands:

git init: This command initializes a directory as a new Git repository on your local system. Until this is done, there's no difference between a normal directory and a Git repository.

git help: This command shows a list of the commands available in Git.

git status: This command checks the status of the repository.

git commit: This command asks Git to record the changes you have staged (with git add) as a snapshot of the repository.

git branch: This command lets you create (or list) branches of the repository.

git checkout: This command switches your working directory to another branch (it can also restore files from a commit).

git merge: As the name suggests, this command merges the changes from another branch into the current branch, for example, from your branch into master.

git push: This command pushes the changes you made on your local computer to the GitHub online repository so that other collaborators can see them.

git pull: If you are working on your local computer and want the latest changes from the GitHub repository, this command pulls them down to your local system.
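To see how these commands fit together, the following sketch replays the local part of the workflow (init, status, add, commit, branch, checkout, merge) from PowerShell in a throwaway folder. It assumes Git is installed and on your PATH; the folder name, the Demo User identity, and the file contents are placeholders chosen for illustration. Pushing and pull requests are left out because they need a GitHub repository, and git checkout -b is used as a shortcut that creates a branch and switches to it in one step.

```powershell
# Assumes git is installed and on PATH. Everything happens in a temporary folder.
$repo = Join-Path ([System.IO.Path]::GetTempPath()) ("git-demo-" + [guid]::NewGuid())
New-Item -ItemType Directory -Path $repo | Out-Null
Push-Location -Path $repo
try {
    git init --quiet                                   # turn the folder into a repository
    git config user.name  "Demo User"                  # identity for this repository only
    git config user.email "demo@example.com"

    Set-Content -Path README.txt -Value "Hello there, first document in the repository"
    git status --short                                 # lists README.txt as untracked (??)
    git add README.txt                                 # stage the file
    git commit --quiet -m "README.txt added"           # take the first snapshot
    $mainBranch = git rev-parse --abbrev-ref HEAD      # master or main, depending on Git defaults

    git checkout --quiet -b my-changes                 # create the branch and switch to it
    Set-Content -Path changes.txt -Value "Changes I made"
    git add changes.txt
    git commit --quiet -m "changes.txt added"

    git checkout --quiet $mainBranch                   # back to the main branch
    git merge --quiet my-changes                       # bring in the branch's commit
    git branch -d my-changes | Out-Null                # the merged branch can be deleted

    $commitCount  = [int](git rev-list --count HEAD)
    $trackedFiles = @(git ls-files)
}
finally {
    Pop-Location
    Remove-Item -Path $repo -Recurse -Force            # clean up the demo repository
}
"Commits on ${mainBranch}: $commitCount; tracked files: $($trackedFiles -join ', ')"
```

Because my-changes only adds a commit on top of the main branch, git merge simply fast-forwards it; when both branches have new commits, Git creates a merge commit instead.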

Since we have already created an online repository named powercli_scripts, let's create a local repository and sync them.

To create a local repository, all you need to do is create a local directory, and then from inside the directory, run the git init command:

sdebnath:~ sdebnath$ mkdir git
sdebnath:~ sdebnath$ cd git
sdebnath:git sdebnath$ git init
Initialized empty Git repository in /Users/sdebnath/git/.git/

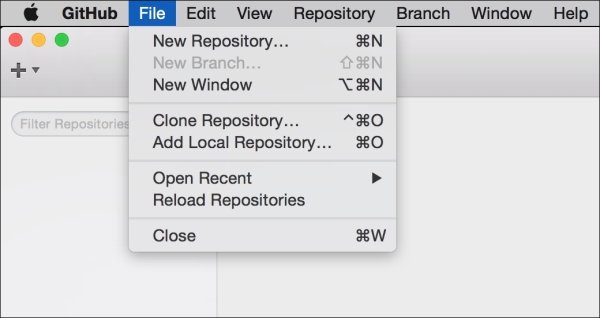

To use the GUI tool instead, open the GitHub application, and then, from the File menu, select Add Local Repository.

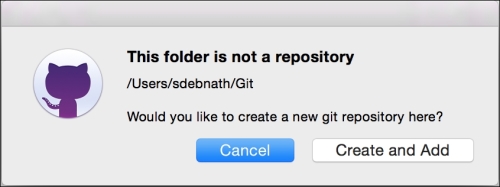

This will bring up a pop-up window saying that This folder is not a repository and asking if you want to create and add the repository. Click on Create and Add. This will create a local repository for you.

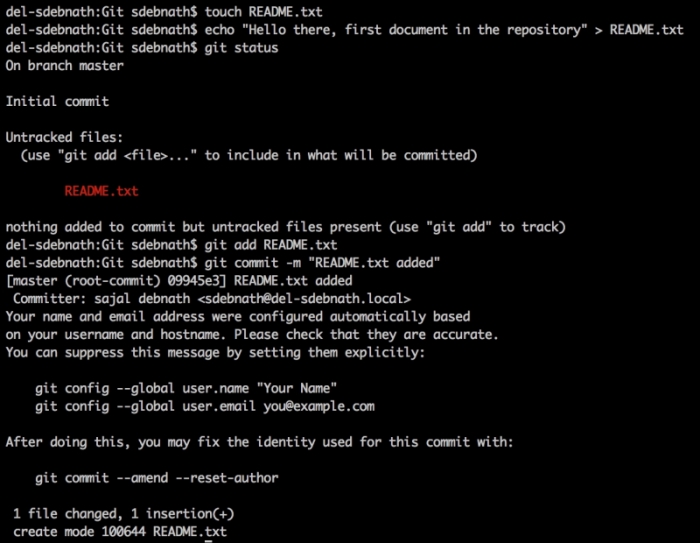

Now, let's go to the directory, create a file, and put some text into it. Once the file is created, we will check the status of the repository, which will tell us that there are untracked files in it. Then, we will tell Git to track the file by staging it with git add, and finally commit the change so that Git takes a snapshot. Here is the list of commands:

$ cd git
$ touch README.txt
$ echo "Hello there, first document in the repository" > README.txt
$ git status
$ git add README.txt
$ git commit -m "README.txt added"

The following is a screenshot of the above commands and the output that we get for a successful run.

Now, we need to add the remote repository. We do this by running the following command:

$ git remote add origin https://github.com/yourname/repository.git

Replace yourname with your username and repository with your repository name.

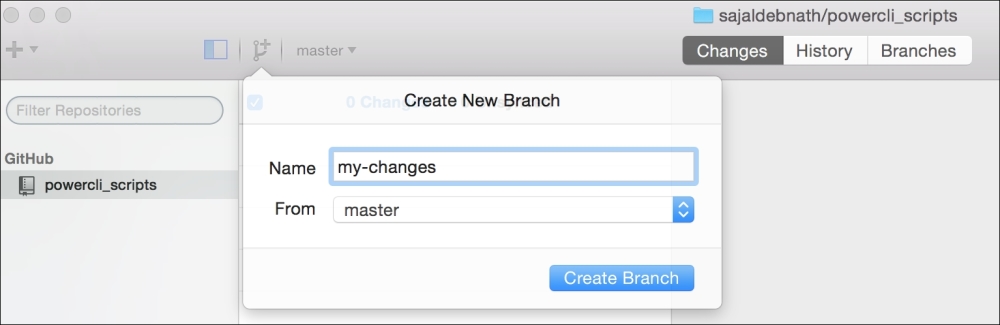
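If you script repository setup, you may want to run the remote step more than once without it failing because origin already exists. The following hypothetical helper, Set-GitHubRemote, is a sketch that adds origin the first time, repoints it with git remote set-url on later runs, and then echoes the stored URL with git remote get-url (available in Git 2.7 and later). It assumes Git is on your PATH and that the target folder is already a Git repository.

```powershell
function Set-GitHubRemote {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory)][string]$RepositoryPath,
        [Parameter(Mandatory)][string]$UserName,
        [Parameter(Mandatory)][string]$RepositoryName
    )
    # Same URL pattern as the git remote add command in the text
    $url = "https://github.com/$UserName/$RepositoryName.git"
    Push-Location -Path $RepositoryPath
    try {
        if (@(git remote) -contains 'origin') {
            git remote set-url origin $url    # origin exists: just repoint it
        }
        else {
            git remote add origin $url        # first run: create origin
        }
        git remote get-url origin             # echo back what Git now has stored
    }
    finally {
        Pop-Location
    }
}
```

Once origin is set and you have committed something, git push -u origin master publishes your local master branch to GitHub and records origin/master as its upstream, so later pushes only need git push.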

You can do the same work through GitHub's GUI application as well. Open the application, go to Preferences, and then, under Accounts, log in with your GitHub account details. Once done, you can create a branch of the repository.

Let's create a my-changes branch from master. Click on the Branch icon next to master (as shown in the following screenshot):

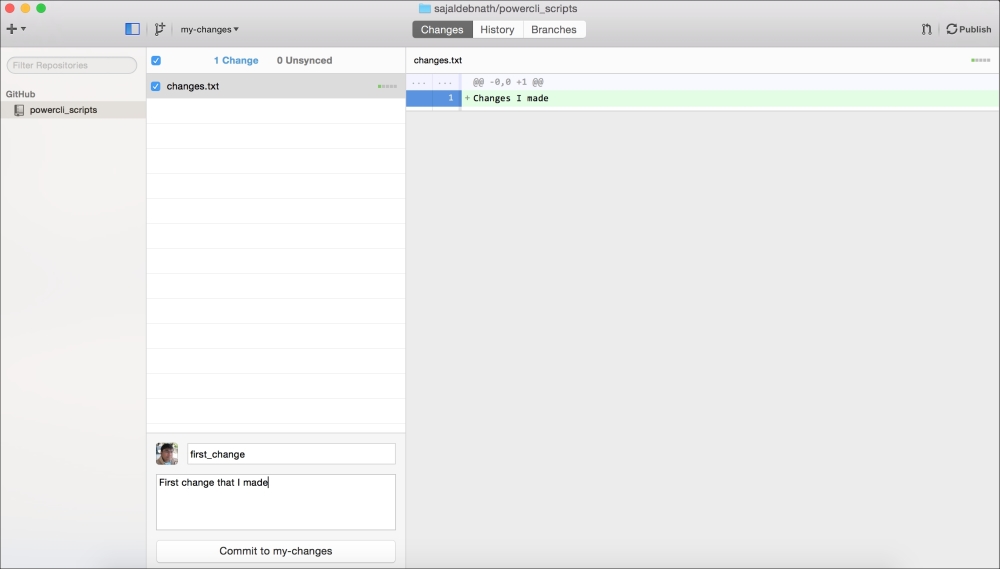

Once you do this, your working branch changes to my-changes. Now, add a file to your local repository, say, changes.txt, and add some text to it:

$ touch changes.txt
$ echo "Changes I made" > changes.txt

The changes that you made will immediately be visible in the GitHub application. Commit them to the my-changes branch.

Then, from the Repository menu, select Push to push the changes to GitHub.

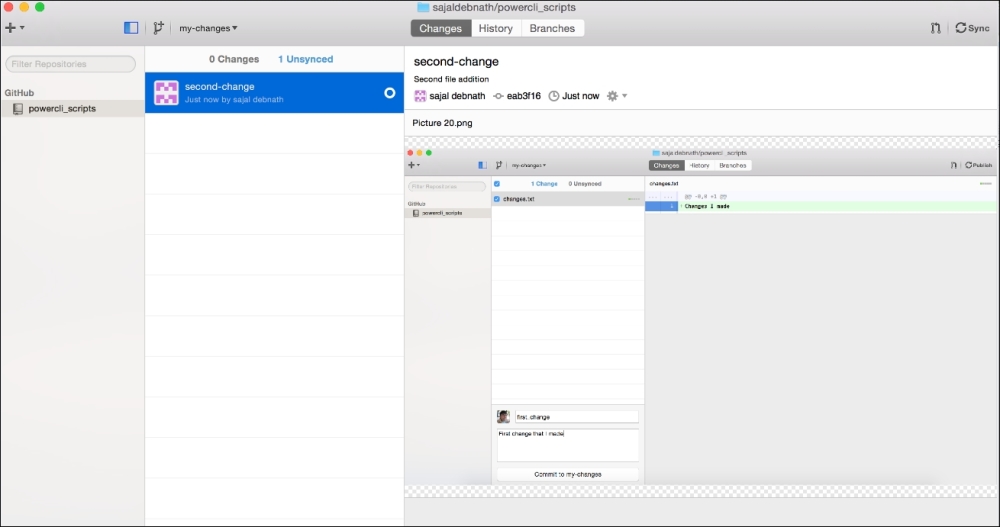

Next, I added another file and committed it to my-changes as well.

The local and remote repositories now show as Unsynced. Click on the Sync button on the right-hand side to sync them.

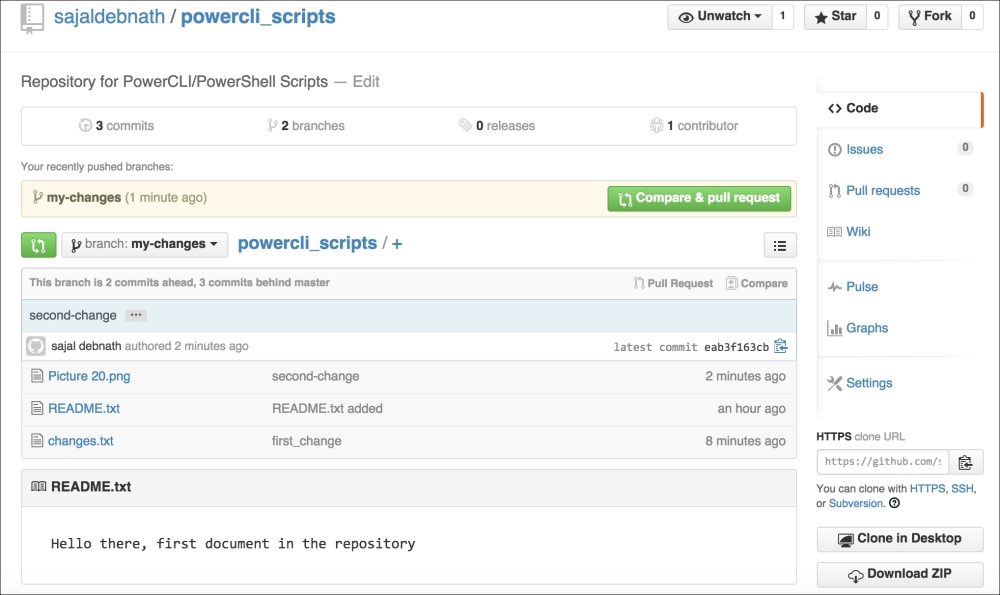

Now, if I go back to the GitHub site, I can see the changes that I made to the my-changes branch.

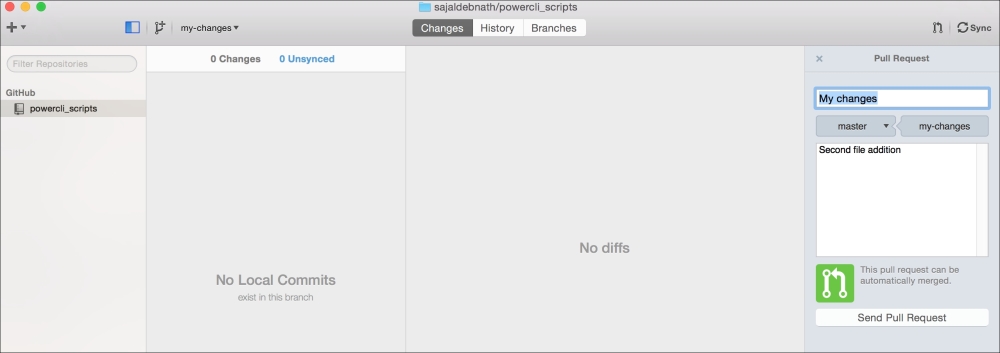

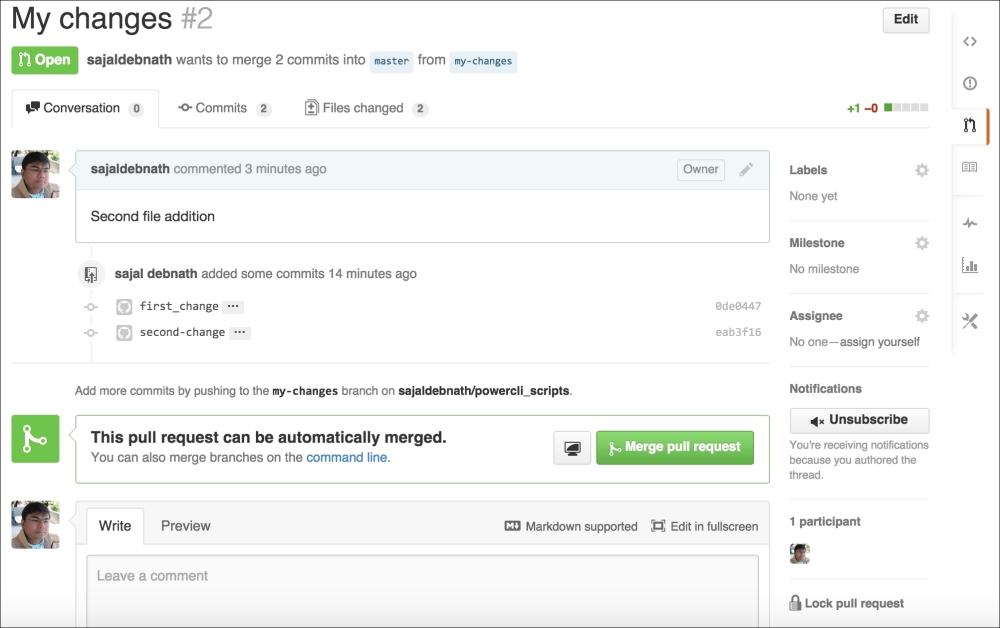

Next, I want to merge the my-changes branch into master, so I create a pull request. I can do this directly from the GitHub web page or from the GitHub application. In the GitHub application on the local system, go to Repository, and then click on Create Pull Request. Provide a name and description, and click on Create. This will create a pull request against the master branch.

Now, go back to the GitHub page, and you will be able to see the details of the pull request.

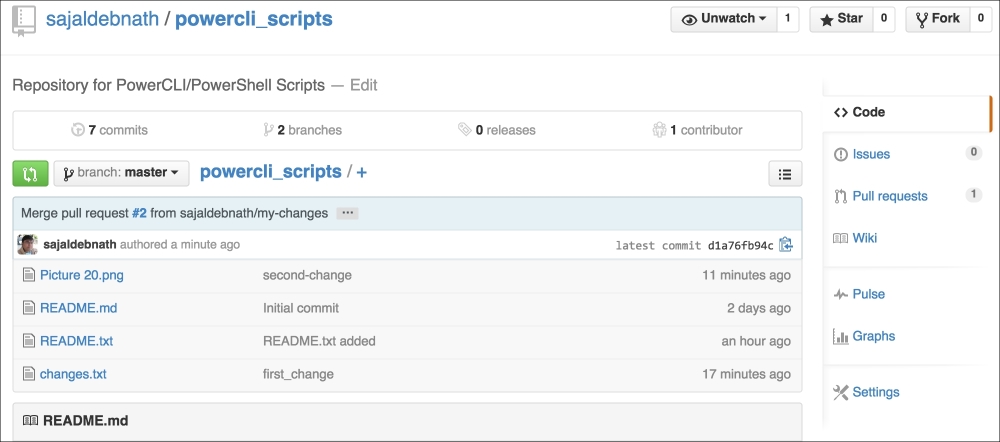

Click on Merge pull request, provide your comment, and click on Confirm merge to merge the change. You can then click on Delete branch to remove the my-changes branch.

Also, if you go back to the master branch of your repository (powercli_scripts in my case), you will be able to see the merged changes.

This concludes this section. Now, you should be able to create your own project or fork and work on existing projects.

In the previous topic, we discussed how to maintain and version your code, and how to collaborate with others on the same project using GitHub. In this topic, we will discuss how to test your code with Pester. But before we jump into the topic, let me ask you some questions: How do you code? How long are your scripts? You might think, "Testing my code? But I am not a developer; I am a system administrator. Whenever I have a task at hand, I write some lines of code (well, to be honest, it can be a few hundred as well), do some testing, and then leave it as it is." Well, since the beginning of this book, I have been using the word "code" more and more, so let's accept the fact that we write programs and start writing amazing new ones to make our lives easier. If you have started reading this book, then you are definitely going to write complex programs, and you should know how to test them in order to perfect them. So, what is software testing?

Software testing is an investigative process conducted on written code to find out the quality of the code. It cannot find every problem or bug in the code, but it gives confidence that the code works as expected under the conditions that were tested.

Pester is a unit testing framework for PowerShell, which works well for both white box and black box testing.

Tip

White box testing (also known as clear box testing, glass box testing, transparent box testing, and structural testing) is a method of testing software that tests the internal structures or workings of an application, as opposed to its functionality (that is, black box testing). In white box testing, an internal perspective of the system, as well as programming skills, are used to design test cases. The tester chooses inputs to exercise paths through the code and determine the appropriate outputs. This is analogous to testing nodes in a circuit, for example, in-circuit testing (ICT).

Black box testing is a method of software testing that examines the functionality of an application without peering into its internal structures or workings. This method of testing can be applied to virtually every level of software testing: unit, integration, system, and acceptance. It typically makes up most, if not all, higher-level testing, but it can dominate unit testing as well.

You can refer to https://en.wikipedia.org/wiki/White-box_testing and https://en.wikipedia.org/wiki/Black-box_testing.

Pester is a framework based on behavior-driven development (BDD), which is in turn based on the test-driven development (TDD) methodology. Well, I have used a lot of development jargon. Let me clarify the terms one by one.

Traditionally, we wrote the entire code first, then defined the test cases, and ran the tests based on those definitions. More recently, with philosophies such as "Extreme Programming", came a new approach to testing. Instead of writing code straight away to solve a problem, we first define what we want to achieve in the form of test cases. We run the tests and make sure that they all fail. Then, we write the minimum amount of code needed to make the tests pass, and iterate through this process until all the tests pass. Once this is done, we refactor the code to an acceptable level and get the final code. It's the opposite of the traditional way of development. This is called test-driven development, or TDD for short.

Behavior-driven development is a software development process that builds on TDD and is based on behavioral specifications of the software units. TDD by itself is very nonspecific: tests can be written in the form of high-level requirements or low-level technical requirements. BDD brings more structure and makes more specific choices than TDD.

Well, now that you understand what testing is and the methodologies used, let's dive into Pester.

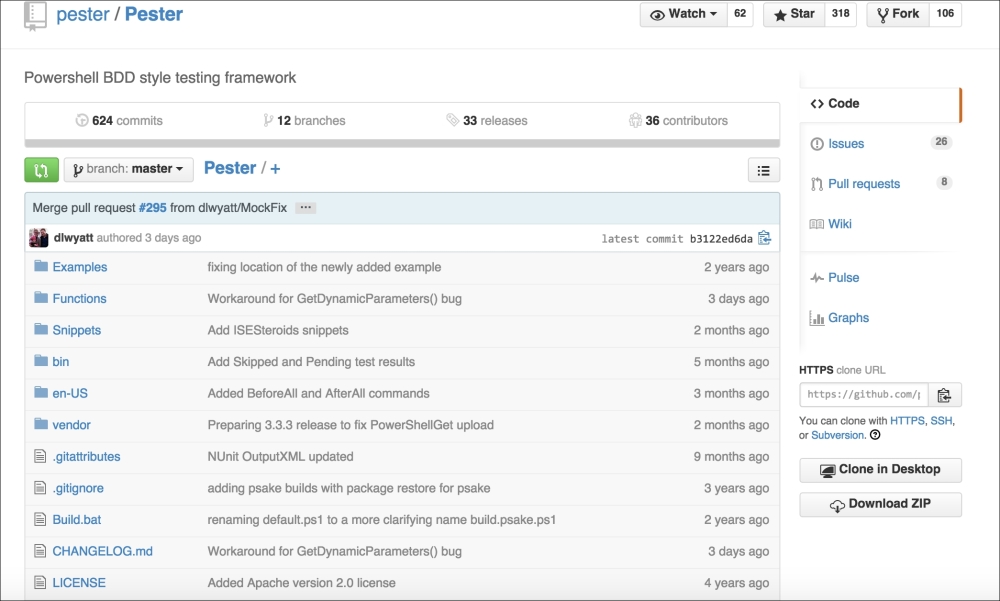

Pester is a PowerShell module developed by Scott Muc and is improved by the community. It is available for free on GitHub. So, all that you need to do is download it, extract it, put it into the Modules folder, and then import it to the PowerShell session to use it (since it is a module, you need to import it just like any other module).

To download it, go to https://github.com/pester/Pester.

In the lower right-hand corner, click on Download Zip.

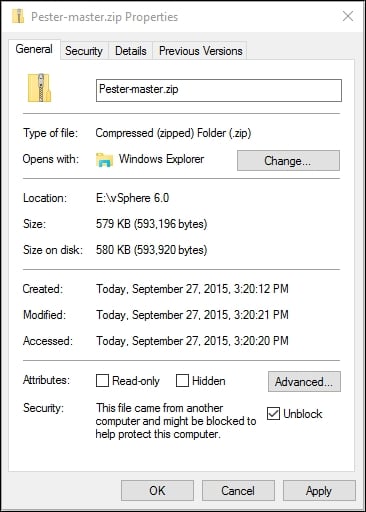

Once you download the ZIP file, you need to unblock it. To do so, right-click on the file and select Properties. In the Properties dialog box, select Unblock and click OK.

You can unblock the file from the PowerShell command line as well. Since I have downloaded the file into the C:\PowerShell Scripts folder, I will run the command as follows. Change the location according to your download location:

PS C:\> Unblock-File -Path 'C:\PowerShell Scripts\Pester-master.zip' -Verbose
VERBOSE: Performing the operation "Unblock-File" on target "C:\PowerShell Scripts\Pester-master.zip".

Now, extract the ZIP file and copy the resulting Pester-master folder to C:\Program Files\WindowsPowerShell\Modules or C:\Windows\System32\WindowsPowerShell\v1.0\Modules.

For simplicity, rename the folder to Pester from Pester-master.

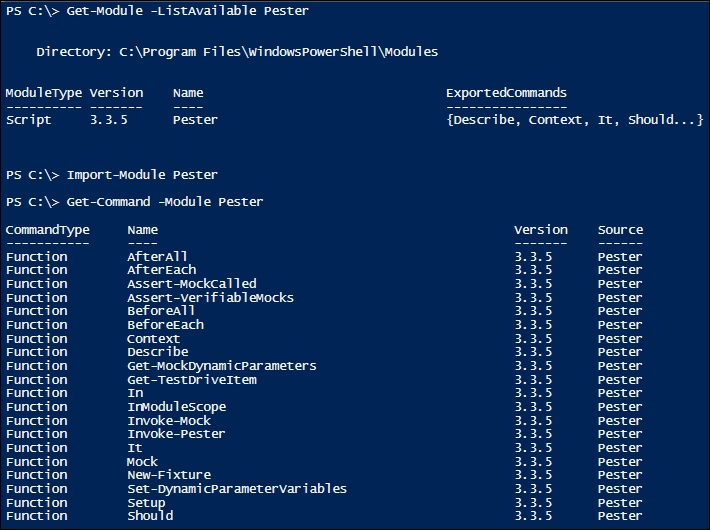
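The manual steps (unblock, extract, copy, and rename) can also be scripted. The following is a minimal sketch; Install-ModuleFromZip is a hypothetical helper, not a built-in cmdlet. It assumes PowerShell 5.0 or later (for Expand-Archive) and a ZIP file that contains a single top-level folder, as GitHub's Pester-master.zip does. Writing to C:\Program Files requires an elevated session.

```powershell
function Install-ModuleFromZip {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory)][string]$ZipPath,
        [Parameter(Mandatory)][string]$ModuleName,
        # Default: the per-machine Modules folder mentioned in the text (Windows only)
        [string]$Destination = (Join-Path $env:ProgramFiles 'WindowsPowerShell\Modules')
    )
    $target = Join-Path $Destination $ModuleName
    if (Test-Path -Path $target) {
        throw "$target already exists; remove it first or choose another destination."
    }
    # Same effect as Properties > Unblock; downloaded-file marking is a Windows concept
    if ($IsWindows -or $PSVersionTable.PSEdition -eq 'Desktop') {
        Unblock-File -Path $ZipPath
    }
    # Extract into a scratch folder first; the ZIP holds one folder such as Pester-master
    $scratch = Join-Path ([System.IO.Path]::GetTempPath()) ([guid]::NewGuid().ToString())
    Expand-Archive -Path $ZipPath -DestinationPath $scratch
    try {
        $topFolder = Get-ChildItem -Path $scratch -Directory | Select-Object -First 1
        # Copying to a folder named after the module replaces the manual rename step
        Copy-Item -Path $topFolder.FullName -Destination $target -Recurse
        Get-Item -Path $target
    }
    finally {
        Remove-Item -Path $scratch -Recurse -Force
    }
}
```

For example, Install-ModuleFromZip -ZipPath 'C:\PowerShell Scripts\Pester-master.zip' -ModuleName Pester would install the module into C:\Program Files\WindowsPowerShell\Modules\Pester. The folder name matters: for Import-Module Pester to find the module through the module path, its folder must be named after the module.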

Now open a PowerShell session by opening the PowerShell ISE. In the console, run the following commands:

PS C:\> Get-Module -ListAvailable -Name Pester
PS C:\> Import-Module Pester
PS C:\> (Get-Module -Name Pester).ExportedCommands

The last command will give you a list of all the commands imported from the Pester module.

You can get a list of cmdlets available in the module by running the following command as well:

PS C:\>Get-Command -Module Pester

Now, let's start writing our code and testing it. First, let's decide what we want to achieve: a small script that accepts a name as a parameter and outputs a greeting for that name. So, let's first create a fixture with New-Fixture:

PS C:\PowerShell Scripts> New-Fixture -Path .\HelloExample -Name Say-Hello

Directory: C:\PowerShell Scripts\HelloExample

Mode          LastWriteTime     Length Name
----          -------------     ------ ----
-a----    3/23/2015 12:39 AM        30 Say-Hello.ps1
-a----    3/23/2015 12:39 AM       252 Say-Hello.Tests.ps1

Notice that a folder named HelloExample has been created, containing two files. Say-Hello.ps1 is the file for the actual code, and the second file, Say-Hello.Tests.ps1, is the test file.

Now, make that directory the current location (cd is an alias of Set-Location, so either works):

PS C:\PowerShell Scripts> Set-Location -Path '.\HelloExample'

Now, let's examine the contents of these two files.

We can see that Say-Hello.ps1 is a file with the following lines:

function Say-Hello {

}

The contents of the file Say-Hello.Tests.ps1 are far more informative, as shown here:

$here = Split-Path -Parent $MyInvocation.MyCommand.Path
$sut = (Split-Path -Leaf $MyInvocation.MyCommand.Path).Replace(".Tests.", ".")
. "$here\$sut"

Describe "Say-Hello" {
    It "does something useful" {
        $true | Should Be $false
    }
}

The first three lines work out the name of the main script file from the name of the test file (Say-Hello.Tests.ps1 becomes Say-Hello.ps1) and then dot-source it into the current running environment, so that the functions defined in the script are available in the current scope.
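The path arithmetic in those first three lines is easier to see in isolation. The sketch below wraps the first two lines in a hypothetical function, Get-SystemUnderTestPath, that returns the path the third line would dot-source. $MyInvocation.MyCommand.Path, which the template uses, holds the full path of the running test file (PowerShell 3.0 and later also expose it as $PSCommandPath).

```powershell
function Get-SystemUnderTestPath {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory)][string]$TestFilePath
    )
    # Line 1 of the template: the folder that holds the test file
    $here = Split-Path -Parent $TestFilePath
    # Line 2: the test file's name with ".Tests." replaced by "."
    $sut = (Split-Path -Leaf $TestFilePath).Replace('.Tests.', '.')
    # Line 3 dot-sources this path; here we only return it
    Join-Path -Path $here -ChildPath $sut
}
```

On Windows, passing C:\PowerShell Scripts\HelloExample\Say-Hello.Tests.ps1 returns C:\PowerShell Scripts\HelloExample\Say-Hello.ps1. This naming convention (script and test side by side, with .Tests. in the test file's name) is what lets the template find the code under test without a hardcoded path.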

Now, we need to define what the test should do. So, we define our test cases. I have made the necessary modifications:

$here = Split-Path -Parent $MyInvocation.MyCommand.Path
$sut = (Split-Path -Leaf $MyInvocation.MyCommand.Path).Replace(".Tests.", ".")
. "$here\$sut"

Describe "Say-Hello" {
    It "Outputs Hello Sajal, Welcome to Pester" {
        Say-Hello -name Sajal | Should Be 'Hello Sajal, Welcome to Pester'
    }
}

What I am expecting here is that when the Say-Hello function is called with a name as a parameter, it returns Hello <name>, Welcome to Pester.

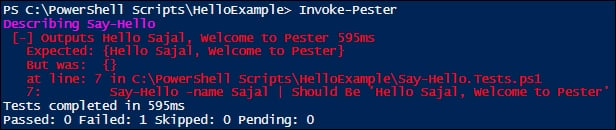

Let's run the first test. As expected, we get a failed test.

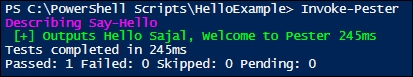

Now, let's correct the code with the following code snippet:

function Say-Hello {
    param (
        [Parameter(Mandatory)]
        $name
    )
    "Hello $name, Welcome to Pester"
}

Let's run the same test again. Now, it passes the test successfully.

Since Pester is based on BDD, which is modeled around a domain-specific language that reads like natural language, Pester uses a set of defined words to specify the test cases.

We first use a Describe block to define what we are testing. Then, Context defines the context in which a group of tests is run. Within it, we use It and Should to define the individual tests:

Describe "<function name>" {
    Context "<context in which it is run>" {
        It "<what the function does>" {
            <function> | Should <do what>
        }
    }
    Context "<context in which it is run>" {
        It "<what the function does>" {
            <function> | Should <do what>
        }
    }
}

Remember that although we call Describe, Context, and It keywords, they are actually functions, and the test code is passed to them as a script block parameter. So, the opening brace of that script block must be on the same line as the function call. The following, for example, is incorrect:

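Context "defines script block incorrectly"
{
    #some tests
}

Here, PowerShell ends the Context command at the line break, so the script block on the next line is never passed to Context, and Pester reports that no script block was provided. Keep the opening brace on the same line instead, as in Context "defines script block correctly" {.

The checkpoints or assertions are as follows: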

Should Be

Should BeExactly

Should BeNullOrEmpty

Should Match

Should MatchExactly

Should Exist

Should Contain

Should ContainExactly

Should Throw

Also, all the assertions have an opposite, negative meaning, which we get by adding a 'Not' in between. For example, Should Not Be, Should Not Match, and so on.
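Putting the keywords and assertions together, here is a sketch of a fuller test for Say-Hello with two Context blocks and the Be, Match, Not BeNullOrEmpty, and Throw assertions, run from a script that reports the counts. It is written for Pester 4 or later: Pester 4 introduced the dash syntax (Should -Be) alongside the Should Be form used in this chapter, and Pester 5 accepts only the dash syntax. The function is defined inside a BeforeAll block, which is where Pester 5 expects setup code. Two assumptions differ from the chapter's version: $name is typed as [string] so that an empty string is rejected at parameter binding, and the temporary test file location is an arbitrary choice.

```powershell
# Sketch assuming Pester 4 or later is installed (dash syntax: Should -Be).
$testFile = Join-Path ([System.IO.Path]::GetTempPath()) 'Say-Hello.Tests.ps1'

Set-Content -Path $testFile -Value @'
Describe "Say-Hello" {
    BeforeAll {
        # Function under test, defined inline to keep the sketch self-contained
        function Say-Hello {
            param (
                [Parameter(Mandatory)]
                [string]$name
            )
            "Hello $name, Welcome to Pester"
        }
    }

    Context "when a name is supplied" {
        It "greets the name" {
            Say-Hello -name Sajal | Should -Be 'Hello Sajal, Welcome to Pester'
        }
        It "starts with Hello" {
            Say-Hello -name Sajal | Should -Match '^Hello '
        }
        It "never returns an empty result" {
            Say-Hello -name Sajal | Should -Not -BeNullOrEmpty
        }
    }

    Context "when the name is empty" {
        It "refuses the empty string" {
            { Say-Hello -name '' } | Should -Throw
        }
    }
}
'@

# -PassThru returns a result object with PassedCount and FailedCount
$result = Invoke-Pester -Path $testFile -PassThru
Remove-Item -Path $testFile
"Passed: $($result.PassedCount)  Failed: $($result.FailedCount)"
```

Checking FailedCount on the returned object makes it easy to fail a build or a scheduled job when a test breaks; with the function as written, all four tests are expected to pass.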

Now, you should be able to go ahead and start testing your scripts with Pester. For more details, check out the Pester Wiki at https://github.com/pester/Pester/wiki/Pester.

The last topic of this chapter is how to connect to vCenter and other VMware environments using PowerCLI cmdlets.

To use the PowerCLI cmdlets, you need to add the snap-ins and import the modules they ship in. As mentioned previously in the sections The vSphere PowerCLI package and The vCloud PowerCLI package, to connect to a vCenter environment, you first need to add the VMware.VimAutomation.Core snap-in, and to connect to a vCloud Director environment, you need to import the VMware.VimAutomation.Cloud module. If you installed the package in its default location on a 64-bit OS, the module is in the folder used in the following commands:

PS C:\> Add-PSSnapin VMware.VimAutomation.Core
PS C:\> Import-Module 'C:\Program Files (x86)\VMware\Infrastructure\vSphere PowerCLI\Modules\VMware.VimAutomation.Cloud'

Once the snap-in is added and the module is imported, you will have access to all the PowerCLI commands from the PowerShell ISE console.

Now, let's connect to the vCenter environment by running the following command:

PS C:\> Connect-VIServer -Server <server name> -User <user name> -Password <Password>

Similarly, to connect to the vCloud Director server, run the following command:

PS C:\> Connect-CIServer -Server <server name> -User <user name> -Password <Password>

There are many other options. You can see the details using the Get-Help cmdlet or the online help for these commands.
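Typing the password on the command line leaves it in your console history and in any script that contains it. As a sketch of a safer pattern, the hypothetical function below, Connect-VCenterWithCredential, accepts a PSCredential instead (Connect-VIServer and Connect-CIServer both have a -Credential parameter) and loads the vSphere cmdlets only if they are not already available. The module-versus-snap-in check is an assumption meant to cover both PowerCLI 6.0, which registers VMware.VimAutomation.Core as a snap-in, and later releases, which ship it as a module.

```powershell
function Connect-VCenterWithCredential {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory)][string]$Server,
        [Parameter(Mandatory)][System.Management.Automation.PSCredential]$Credential
    )
    # Load the vSphere cmdlets only if Connect-VIServer is not available yet
    if (-not (Get-Command -Name Connect-VIServer -ErrorAction SilentlyContinue)) {
        if (Get-Module -ListAvailable -Name VMware.VimAutomation.Core) {
            # Newer PowerCLI releases: VMware.VimAutomation.Core is a module
            Import-Module -Name VMware.VimAutomation.Core -ErrorAction Stop
        }
        else {
            # PowerCLI 6.0: VMware.VimAutomation.Core is a snap-in
            Add-PSSnapin -Name VMware.VimAutomation.Core -ErrorAction Stop
        }
    }
    # -Credential keeps the password out of the command line and your history
    Connect-VIServer -Server $Server -Credential $Credential -ErrorAction Stop
}
```

For example, run $cred = Get-Credential, which prompts for the user name and password and keeps the password in a SecureString, and then Connect-VCenterWithCredential -Server <server name> -Credential $cred. The same -Credential approach works with Connect-CIServer for vCloud Director.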

In this chapter, we touched on the basics of PowerShell, covering the main added advantages of the PowerShell 5.0 preview and basic programming constructs, and how they are implemented in PowerShell. We also discussed PowerCLI, what's new in Version 6.0, and how to run PowerCLI scripts from Command Prompt or as a scheduled task. Next, we discussed version control and how to use GitHub to fully utilize the concepts. At the end of this chapter, we saw how to use Pester to test PowerShell scripts and how to connect to vCenter and vCloud Director environments.

In the next chapter, we are going to cover advanced functions and parameters in PowerShell. We will also cover how to write your own help files and error handling in PowerShell.