In this highly competitive and rapidly changing world, enterprises need a highly reliable, cost-effective, and highly scalable IT infrastructure to stay at the leading edge. They must adapt to changing customer needs, fail fast, learn, and reinvent themselves. As hardware costs have come down, the emphasis has shifted to making the most of the capital already invested in physical infrastructure, or to reducing the investment needed to build or rent new infrastructure. This fundamentally means running more applications and services on the existing IT landscape.

Virtualized infrastructure addresses these problems and caters to the IT needs of a modern enterprise. Virtualization provides an abstraction over compute, storage, or networking resources and a unified platform for managing the infrastructure. It facilitates resource optimization, governance over costs, effective utilization of physical space, high availability of line-of-business (LOB) applications, resilient systems, near-unlimited scalability, fault-tolerant environments, and hybrid computing.

The following are a few more features of virtualization:

- Virtualization software, when installed on your IT infrastructure, allows you to run more virtual machines (VMs) on a single physical server, thereby increasing the density of machines per square foot of floor space

- Virtualization is not just for enabling more computers; it also allows storage devices to be combined into a single large virtual storage space, which can be pooled across machines and provisioned on demand

- It also provides the benefits of hybrid computing by enabling you to run different types of operating systems (OSes) in parallel, thereby catering to a large and varied customer base

- It centralizes the IT infrastructure and provides one place to manage machines and cost, execute patch updates, or reallocate resources on demand

- It reduces carbon footprint, cooling needs, and power consumption

Cloud computing is also an implementation of virtualization. Apart from virtualizing the hardware resources, the cloud also promises to offer rich services such as reliability, self-service, and Internet level scalability on a pay-per-use basis.

Due to reduced costs, the VMs offered today by public or private cloud vendors are highly powerful. But are our applications or services utilizing the server capacity effectively? What percentage of compute and storage are the applications actually using? The answer is: very little. Traditional applications are not especially resource heavy (except for a few batch processing systems, big data systems with heavy scientific calculations, and gaming engines that fully utilize the machine's power). To provide high scalability and isolation to customers, we end up running many instances of the application in VMs that each see only 10%-30% utilization. It also takes a substantial amount of time to procure a machine, configure it for the application and its dependencies, and make it ready to use. And, of course, the number of VMs that you can run in your private data center is limited by the physical space you own.

Is it really possible to further optimize resource utilization but still have the same isolation and scalability benefits? Can we get more throughput out of our IT infrastructure than we get today? Can we reduce the amount of preparation work required to onboard an application and make it ready to use? Can we run more services using the same physical infrastructure? Yes, all of this is possible, and containerization is our magic wand.
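As a rough illustration of the utilization question raised above, the following sketch estimates how many hosts are needed when lightly loaded workloads are packed together instead of each getting its own machine. The function name, the figure of 20 workloads, and the 70% packing target are assumptions made for this example; only the 10%-30% utilization range comes from the text. It looks at CPU only and ignores memory, I/O, and load peaks.

```python
import math


def hosts_needed(workloads: int, per_workload_pct: int, target_pct: int) -> int:
    """Estimate hosts required when workloads are packed up to target_pct CPU.

    per_workload_pct: share of one host's CPU a single workload uses (in %).
    target_pct: how full we are willing to run each host (in %).
    """
    if per_workload_pct <= 0 or target_pct < per_workload_pct:
        raise ValueError("target must be at least one workload's share")
    per_host = target_pct // per_workload_pct  # whole workloads that fit on one host
    return math.ceil(workloads / per_host)


workloads = 20                                  # hypothetical number of small applications
one_per_machine = workloads                     # baseline: one machine per application
packed_light = hosts_needed(workloads, 10, 70)  # each workload uses 10% of a host
packed_heavy = hosts_needed(workloads, 30, 70)  # each workload uses 30% of a host

print(one_per_machine, packed_light, packed_heavy)
```

Even under the heavier assumption, packing halves the number of machines in this toy model, which is the kind of gain the rest of the chapter attributes to containerization.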

Containerization is an alternative to VM virtualization that lets enterprises run multiple software components on a single physical or virtualized machine with the same isolation, security, reliability, and scalability benefits. Apart from effective utilization, containerization also promotes rapid application deployment, with options to package, ship, and deploy software components as independent deployment units called containers.

In this chapter, we are going to learn:

- Levels of virtualization

- Virtualization challenges

- Containerization and its benefits

- Windows Server Containers

- Hyper-V Containers

- Cluster management

- Terminology and tooling support

Microsoft's journey with VM/hardware virtualization began with its first hypervisor, called Hyper-V. In 2008, Microsoft released Windows Server 2008 with the Hyper-V role, which is capable of hosting multiple VMs inside a physical machine; Windows Server 2008 R2 followed in 2009. Windows Server 2008 was available in different flavors such as Standard, Enterprise, and Datacenter. They differ in the number of VMs or guest OSes that can be hosted for free per server. For example, in Windows Server 2008 Standard edition you can run one guest OS for free, and new guest OS licenses have to be purchased for running more VMs. Windows Server 2008 Datacenter edition comes with unlimited Windows guest OS licenses.

Note

Often when talking about virtualization we use words such as host OS and guest OS. Host OS is the OS running on a physical machine (or VM if the OS allows nested virtualization) that provides the virtualization environment. Host OS provides the platform for running multiple VMs. Guest OS refers to the OS running inside each VM.

At about the same time, Microsoft also shipped a standalone hypervisor product called Hyper-V Server 2008 with a limited set of features: Windows Server Core, a CLI, and the Hyper-V role. The basic difference between Windows Server with the Hyper-V role and Hyper-V Server is licensing. Microsoft Hyper-V Server is a free edition that allows you to run a virtualized environment using existing Windows Server licenses. The trade-off is that you miss the other features of full Windows Server as the host OS, such as managing the OS through a GUI. Hyper-V Server can only be managed through remote interfaces and a CLI. It is a trimmed-down version meant for running a virtualized environment.

In the year 2008, Microsoft announced its cloud platform called Windows Azure (now Microsoft Azure), which uses a customized Hyper-V to run a multitenant environment of compute, storage, and network resources using Windows Server machines. Azure provides a rich set of services categorized as Platform as a Service (PaaS) and Infrastructure as a Service (IaaS) using the virtualized infrastructure spread across varied geographical locations.

Windows Server 2012, released in 2012, and Windows Server 2012 R2, which followed in 2013, brought significant improvements to the server technology, such as improved multitenancy, private virtual LAN, increased security, multiple live migrations, live storage migrations, less expensive business recovery options, and so on.

Windows Server 2016 marks a significant change in the server OSes from the core. Launched in the second half of the year 2016, Windows Server 2016 has noteworthy benefits, especially for new trends such as containerization and thin OS:

- Windows Server 2016 with Windows Server Containers and Hyper-V Containers: Windows Server 2016 comes with a containers role that provides support for containerization. With the containers role enabled, applications can be easily packaged and deployed as independent containers with a high degree of isolation inside a single VM. Windows Server 2016 comes with two flavors of containers: Windows Server Containers and Hyper-V Containers. Windows Server Containers run directly on the Windows Server OS, while Hyper-V Containers are new thin VMs that run on Hyper-V. Windows Server 2016 also comes with enhanced Hyper-V capabilities such as nested virtualization.

- Windows Server 2016 Nano Server: Nano Server is a scaled down version of Windows Server OS, which is around 93% smaller than the traditional server. Nano Servers are designed primarily for hosting modern cloud applications called microservices in both private and public clouds.

Other virtualization platforms from Microsoft:

- Microsoft also offers a hosted virtualization platform called Virtual PC acquired from Connectix in 2003. Hosted virtualization is different from regular hypervisor platforms. Hosted virtualization can run on a 32/64-bit system such as traditional desktop PCs from Windows 7 OS and above, whereas the traditional hypervisors run on special hardware and 64-bit systems only.

- A few more virtualization solutions offered by Microsoft are hosted virtualizations called Microsoft Virtual Server 2005 for Windows Server 2003, Application Virtualization (App-V), MED-V for legacy application compatibility, terminal services, and virtual desktop infrastructure (VDI).

Depending on how the underlying infrastructure is abstracted away from the users and the isolation level, various virtualization technologies have evolved. The following sections discuss a few virtualization levels in brief, which eventually lead to containerization.

During the pre-virtualization era, a physical machine was considered a singleton entity that could host one operating system and could contain more than one application. Enterprises that run highly critical businesses or multitenant environments need isolation between applications, which prevents them from using one server for many applications. Hardware virtualization or VM virtualization made better use of single physical servers by hosting multiple VMs within a single server, where each VM can run in complete isolation. Each VM's CPU and memory can be configured as per the application's demand.

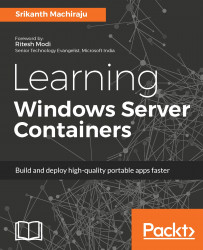

A discrete software layer called a hypervisor or Virtual Machine Manager (VMM) runs on top of the hardware and facilitates server virtualization. Modern cloud platforms, both public and private, are the best examples of hardware virtualization. Each physical server runs an operating system called the host OS, which runs multiple VMs, each with its own OS called the guest OS. The underlying memory and CPU of the host are shared across the VMs depending on how the VMs were configured when they were created. Server virtualization also enables hybrid computing, which means the guest OS can be of any type. For example, a machine running Windows with the Hyper-V role enabled can host VMs running Linux and Windows OSes (for example, Windows 10 and Windows 8.1) or even another Windows Server OS. Some examples of server virtualization are VMware, Citrix XenServer, and MS Hyper-V.

In a nutshell, this is what platform virtualization looks like:

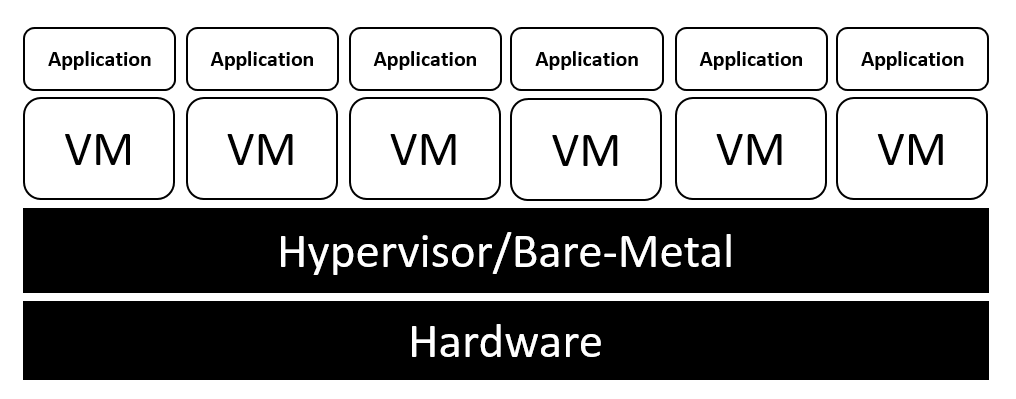

Storage virtualization refers to pooling of storage resources to provide a single large storage space, which can be managed from a single console. Storage virtualization offers administrative benefits such as managing backups, archiving, on demand storage allocation, and so on.

For example, Windows Azure VMs by default contain two disk drives for storage, but on demand we can add more disk drives to the VM within minutes (up to the limit of the VM tier). This allows instant scalability and better utilization, since we pay only for what we use and can expand or shrink as per demand.
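The pooling idea described above can be pictured with a small model: several physical devices are summed into one logical capacity, and volumes are carved out of that capacity on demand. This is only a conceptual sketch; the class name `StoragePool`, the volume names, and the device sizes are hypothetical and do not correspond to any Azure or Windows API.

```python
class StoragePool:
    """Toy model of storage virtualization: many devices, one logical pool."""

    def __init__(self, device_sizes_gb):
        # The pool hides individual devices and exposes a single capacity.
        self.capacity_gb = sum(device_sizes_gb)
        self.allocations = {}  # volume name -> size in GB

    @property
    def free_gb(self):
        return self.capacity_gb - sum(self.allocations.values())

    def provision(self, name, size_gb):
        """Allocate a volume on demand if the pool has enough free space."""
        if name in self.allocations:
            raise ValueError(f"volume {name!r} already exists")
        if size_gb > self.free_gb:
            raise ValueError("not enough free space in the pool")
        self.allocations[name] = size_gb

    def release(self, name):
        """Return a volume's space to the pool and report its size."""
        return self.allocations.pop(name)


pool = StoragePool([500, 1000, 2000])  # three hypothetical disks
pool.provision("vm1-data", 1200)       # larger than either of the two smaller disks
print(pool.free_gb)
pool.release("vm1-data")
print(pool.free_gb)
```

The point of the sketch is that a consumer asks the pool for space, not a particular disk, so allocation and release become bookkeeping operations.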

This is what storage virtualization looks like:

Network virtualization is the ability to create and manage a logical network of compute, storage, or other network resources. The components of a virtual network can be remotely located in the same or different physical networks across different geographical locations. Virtual networks help us create custom address spaces, logical subnets, custom network security groups for configuring restricted access to a group of nodes, custom IP configuration (few applications demand static IPs or IPs within a specific range), domain defined traffic routing, and so on.

Most LOB applications demand logical separation between business components for enhanced security, isolation, and scalability. Network virtualization helps build this isolation by configuring subnet-level security policies, restricting access to logical subnets or nodes using access control lists (ACLs), and restricting inbound/outbound traffic using custom routing, all without running a physical network. Public cloud vendors provide network virtualization on a pay-per-use basis for small to medium-scale businesses that cannot afford to run a private IT infrastructure. For example, Microsoft Azure allows you to create a virtual network with network security boundaries, secure VPN tunnels to connect to your personal laptops or on-premises infrastructure, high-bandwidth private channels, and so on using pay-per-use pricing. You can run your applications in the cloud with tight security among nodes using logical separation, without investing in any network devices.
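The notions of a custom address space, logical subnets, and ACL-style access rules can be sketched with Python's standard `ipaddress` module. The address range, the subnet roles (`web_subnet`, `db_subnet`), and the first-match rule format are assumptions chosen for illustration; real cloud platforms express these rules through their own configuration models.

```python
import ipaddress

# A custom address space for the virtual network (hypothetical range).
vnet = ipaddress.ip_network("10.0.0.0/16")

# Carve the address space into logical /24 subnets.
subnets = list(vnet.subnets(new_prefix=24))
web_subnet, db_subnet = subnets[0], subnets[1]

# ACL entries: (source network, destination network, allow?). First match wins.
acl = [
    (web_subnet, db_subnet, True),   # web tier may reach the database tier
    (vnet, db_subnet, False),        # everything else in the network may not
]


def is_allowed(src, dst, rules, default=False):
    """Return the decision of the first rule matching the src/dst pair."""
    src_ip = ipaddress.ip_address(src)
    dst_ip = ipaddress.ip_address(dst)
    for source_net, dest_net, allow in rules:
        if src_ip in source_net and dst_ip in dest_net:
            return allow
    return default  # deny when no rule matches


print(web_subnet, db_subnet)
print(is_allowed("10.0.0.5", "10.0.1.10", acl))  # web -> db
print(is_allowed("10.0.2.7", "10.0.1.10", acl))  # other subnet -> db
```

Because the subnets and rules are plain data, the separation between tiers exists without any dedicated physical network device, which is the essence of the paragraph above.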

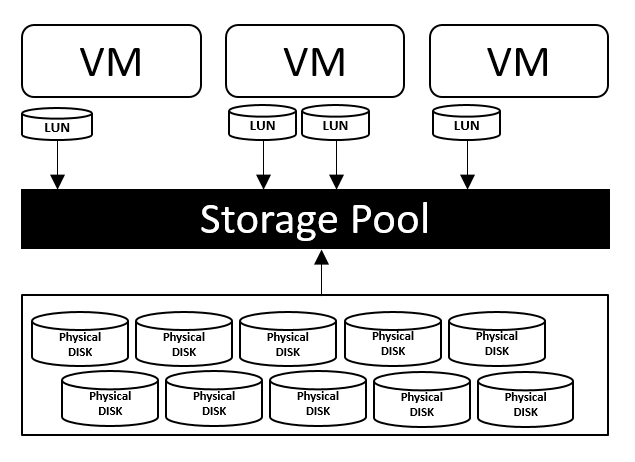

The topic of this book is associated with OS virtualization. OS virtualization enables the kernel to be shared across multiple processes inside a single VM with isolation. OS virtualization is also called user-mode or user-space virtualization as it is one level up from the kernel. Individual user-space instances are called containers. The kernel provides all the features for resource management across containers.

This is highly helpful when consolidating a set of services spread across multiple servers into a single server. A few benefits of OS virtualization are high security due to a reduced attack surface for breaches or viruses, better resource management, easy migration of applications or services across hosts, and also instant and dynamic load balancing. OS virtualization does not require any hardware support, so it is easier to implement than other technologies. The most recent implementations of OS virtualization are Linux LXC, Docker, and Windows Server Containers. This is what OS virtualization looks like:

There are a few limitations with hardware or VM virtualization, which lead to containerization. Let's look at a few of them.

VMs run a fully-fledged OS. Every time a machine needs to be started, restarted, or shut down, it involves running the full OS life cycle and booting procedure. A few enterprises employ rigid policies for procuring new IT resources. All of this increases the time required by the team to deliver a VM or to upgrade an existing one, because each new request has to be fulfilled by a whole set of steps. For example, provisioning a machine involves gathering the requirements, provisioning a new VM, procuring a license and installing the OS, allocating storage, configuring the network, and setting up redundancy and security policies.

Every time you wish to deploy your application you also have to ensure application specific software requirements such as web servers, database servers, runtimes, and any support software such as plugin drivers are installed on the machine. With teams obliged to deliver at light speed, the current VM virtualization will create more friction and latency.

The preceding problem can be partially solved by using cloud platforms, which offer on-demand resource provisioning, but public cloud vendors offer a predefined set of VM configurations and not every application utilizes all of the allocated compute and memory.

In a common enterprise scenario, every small application is deployed in a separate VM for isolation and security benefits. Further, to ensure scalability and availability, identical VMs are created and traffic is balanced among them. If the application utilizes only 5-10% of the CPU's capacity, the IT infrastructure is heavily underutilized. Power and cooling needs for such systems are also high, which adds to the costs. A few applications are used seasonally or by a limited set of users, but the servers still have to be up and running. Another important drawback of VMs is that, inside a VM, the OS and supporting services occupy more space than the application itself.

Every IT organization needs an operations team to manage the infrastructure's regular maintenance activities. The team's responsibility is to ensure that activities such as procuring machines, maintaining SOX Compliance, executing regular updates, and security patches are done in a timely manner. The following are a few drawbacks that add up to operational costs due to VM virtualization:

- The size of the operations team is proportional to the size of the IT infrastructure. Large infrastructures require larger teams and therefore cost more to maintain.

- Every enterprise is obliged to provide continuous business to its customers for which it has to employ redundant and recovery systems. Recovery systems often take the same amount of resources and configuration as original ones, which means twice the original costs.

- Enterprises also have to pay for licenses for each guest OS no matter how little the usage may be.

VMs are not easily shippable. Every application has to be tested on developer machines, and proper instruction sets have to be documented for operations or deployment teams to prepare the machine and deploy the application. No matter how well you document and take precautions, in many instances the deployments fail, because at the end of the day the application runs in a completely different environment from the one it was tested in, which makes deployment riskier.

Even if you have successfully installed the application on a VM, VMs are still not easily sharable as application packages due to their extremely large sizes, which makes them a poor fit for DevOps-style work cultures. Imagine your applications need to go through rigorous testing cycles to ensure high quality. Every time you want to deploy and test a developed feature, a new environment needs to be created and configured. The application has to be deployed on the machine and then the test cases have to be executed. In agile teams, releases happen quite often, so the turnaround time for the testing phase to begin and for results to be available will be quite high because of the machine provisioning and preparation work.

Choosing between VM virtualization and containerization is purely a matter of scope and need. It might not always be feasible to use containers. One advantage of VM virtualization, for example, is that the guest OS of the VM and the host OS need not be the same: a Linux VM and a Windows VM can run in parallel on Hyper-V. This is possible because in VM virtualization only the hardware layer is virtualized. Since containers share the kernel of the host OS, a Linux container cannot be shipped to a Windows machine. Having said that, the future holds good things for both containers and VMs in both private and public clouds. There might be cases where an enterprise opts to use a hybrid model depending on scope and need.

Containerization is the ability to build and package applications as shippable containers. Containers run in isolation in user mode on a shared kernel. The kernel is the heart of the operating system; it accepts requests from user programs and translates them into processing instructions for the CPU. On a shared kernel, containers do for the OS what VMs do for physical machines: they isolate applications from the underlying OS. Let's see a few key implementations of this technology.

Some of the key implementations of containers are as follows:

- The idea behind containers goes back to chroot, which was introduced in Version 7 Unix in 1979 and added to BSD in 1982, and which brought a form of process isolation. Chroot creates a virtual root directory for a process and its child processes; a process running under chroot cannot access anything outside that environment. Such modified environments are also called chroot jails.

- In 2000, a new isolation mechanism named jails was introduced for FreeBSD (a free Unix-like OS), prompted by the needs of R&D Associates, Inc.'s owner, Derrick T. Woolworth. Jails are isolated virtual instances of FreeBSD under a single kernel. Each jail has its own files, processes, users, and superuser accounts. Each jail is sealed from other jails.

- Solaris introduced its OS virtualization platform called zones in the year 2004 with Solaris 10. One or more applications can run within a zone in isolation. Inter-zone communication was also possible using network APIs.

- In 2006, Google launched process containers, a technology designed for limiting, accounting, and isolating resource usage. It was later renamed to control groups (cgroups) and merged into the Linux kernel 2.6.24.

- In 2008, Linux gained its first out-of-the-box implementation of containers, called Linux containers (LXC), which followed OpenVZ (OpenVZ had earlier developed an extension to Linux with the same features). It was implemented using cgroups and namespaces. The cgroups allow management and prioritization of CPU, memory, block I/O, and network. Namespaces provide isolation.

Solomon Hykes, CTO of dotCloud, a Platform as a Service (PaaS) company, launched Docker in 2013, which reintroduced containerization. Before Docker, containers were just isolated processes, and application portability as containers across discrete environments was never guaranteed. Docker introduced application packaging and shipping with containers. Docker isolated applications from infrastructure, which allowed developers to write and test applications on a traditional desktop OS and then easily package and ship them to production servers with less trouble.

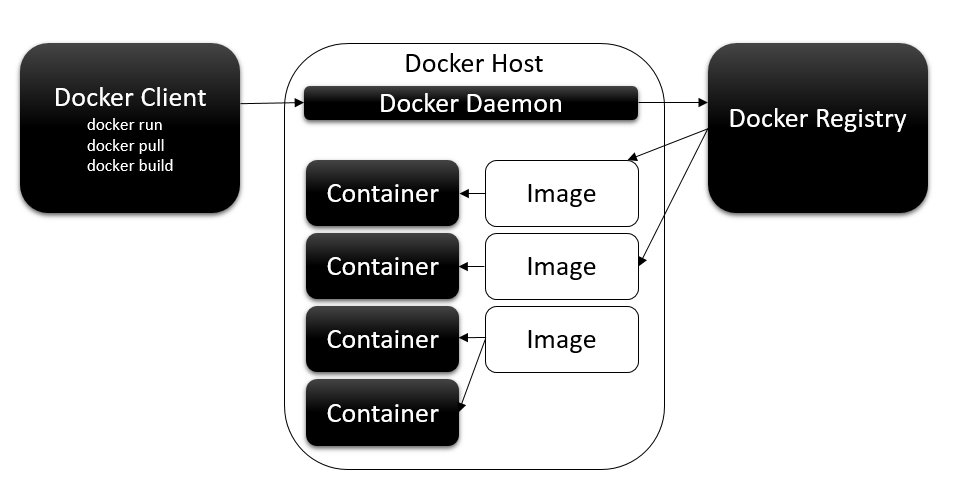

Docker uses a client-server architecture. The Docker daemon is the heart of the Docker platform, and it should be present on every host, as it acts as the server. The Docker daemon is responsible for creating containers, managing their life cycle, creating and storing images, and many other key things around containers. Applications are designed and developed as containers on a developer's desktop and packaged as Docker images. Docker images are read-only templates that encapsulate the application and its dependent components. Docker also provides a set of base images that contain a thin OS to start application development. Docker containers are instances of Docker images. Any number of containers can be created from an image within a host. Containers run directly on the Linux kernel in an isolated environment.
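One way to picture read-only images and the containers created from them is as layered lookups: image layers are immutable, and every container gets its own writable layer on top. The sketch below models this with `collections.ChainMap`; it is an analogy only, since real image layers are filesystem trees rather than dictionaries, and the file paths, contents, and the helper `start_container` are hypothetical.

```python
from collections import ChainMap
from types import MappingProxyType

# Read-only image layers (MappingProxyType rejects writes).
base_layer = MappingProxyType({
    "/etc/os-release": "thin base OS",
    "/bin/sh": "shell",
})
app_layer = MappingProxyType({
    "/app/server.py": "v1",
    "/etc/os-release": "thin base OS + app tweaks",  # shadows the base layer
})


def start_container(*image_layers):
    """Create a container view: a fresh writable layer over shared image layers.

    image_layers are given base first; they are reversed so that upper
    layers are searched before lower ones.
    """
    return ChainMap({}, *reversed(image_layers))


c1 = start_container(base_layer, app_layer)
c2 = start_container(base_layer, app_layer)

# Writes land only in the container's own top layer.
c1["/app/server.py"] = "patched in c1"

print(c1["/app/server.py"])       # container 1 sees its own change
print(c2["/app/server.py"])       # container 2 still sees the image content
print(app_layer["/app/server.py"])  # the image itself is unchanged
```

Since both containers share the same underlying layers, creating another container costs little more than an empty top layer, which hints at why many containers can be started from one image.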

The Docker repository is the storage for Docker images. Docker provides both public and private repositories. Docker's public image repository is called Docker Hub; anyone can search the images in the public repository from the Docker CLI or a web browser, download an image, and customize it as per the application's needs. Since public repositories are not well suited to enterprise scenarios, which demand more security, Docker also provides private repositories. Private repositories restrict access to your images to users within your enterprise; unlike Docker Hub, private repositories are not free. A Docker registry is a repository for custom user images; users can pull any publicly available image, or push their own images to store and share them with other users. The Docker daemon also manages a local registry on each host: when asked for an image, the daemon first searches the local registry and then the public repository, Docker Hub. This mechanism avoids downloading images from the public repository each time.
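The local-first lookup described above behaves like a cache in front of a remote store. The following sketch models that behavior under stated assumptions: `DaemonSketch` and `ImageNotFound` are hypothetical names, a dictionary stands in for Docker Hub, and no real Docker API is involved.

```python
class ImageNotFound(Exception):
    """Raised when an image exists neither locally nor remotely."""


class DaemonSketch:
    """Toy model of a daemon that checks its local store before the hub."""

    def __init__(self, remote_images):
        self.remote = dict(remote_images)  # stand-in for Docker Hub
        self.local = {}                    # per-host local image store
        self.remote_pulls = 0              # how often we had to go remote

    def resolve(self, name):
        # 1. Look in the local store first.
        if name in self.local:
            return self.local[name]
        # 2. Fall back to the remote repository.
        if name not in self.remote:
            raise ImageNotFound(name)
        self.remote_pulls += 1
        # 3. Keep a local copy so the next request avoids the download.
        self.local[name] = self.remote[name]
        return self.local[name]


daemon = DaemonSketch({"ubuntu": "ubuntu image content"})
daemon.resolve("ubuntu")   # first request: pulled from the remote store
daemon.resolve("ubuntu")   # second request: served from the local store
print(daemon.remote_pulls)
```

Only the first request touches the remote store; subsequent requests for the same image are answered locally.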

Docker uses several Linux features to deliver its functionality. For example, Docker uses namespaces for providing isolation, cgroups for resource management, and a union filesystem for making the containers extremely lightweight and fast. The Docker client is the command-line interface for interacting with the Docker daemon. The Docker client and daemon can run on a single system that serves as both client and server; when on the server, users can use the client to communicate with the local daemon. Docker also provides an API that can be used to interact with remote Docker hosts. This can be seen in the following image:

The Docker development life cycle can be explained with the help of the following steps:

- Docker container development starts with downloading a base image from Docker Hub. Ubuntu and Fedora are a few images available from Docker Hub. An application can be containerized post application development too. It is not necessary to always start with Dockerizing the application.

- The image is customized as per the application's requirements using a few Docker-specific instructions. This set of instructions is stored in a file called a Dockerfile. When the image is built, the Docker daemon reads the Dockerfile and prepares the final image.

- The image can then be published to a public/private repository.

- When users run the following command on any Docker host, the Docker daemon searches for the image on the local machine first and then on Docker Hub if it is not found locally. A Docker container is created if the image is found. Once the container is up and running, `[command]` is called on the running container:

$ docker run -i -t [imagename] [command]
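The command shown above can also be assembled programmatically, for example when a deployment script needs to start containers. The sketch below only builds the argument list for `docker run` with the same `-i` and `-t` flags; the helper name `docker_run_command` and the example image and command are assumptions, and nothing is executed unless the list is handed to something like `subprocess.run` on a host where Docker is installed.

```python
import shlex


def docker_run_command(image, command=(), interactive=True, tty=True):
    """Build the argv for `docker run [-i] [-t] IMAGE [COMMAND...]`."""
    argv = ["docker", "run"]
    if interactive:
        argv.append("-i")   # keep STDIN open
    if tty:
        argv.append("-t")   # allocate a pseudo-terminal
    argv.append(image)      # image name, resolved locally first, then on Docker Hub
    argv.extend(command)    # command to run inside the container
    return argv


argv = docker_run_command("ubuntu", ["/bin/bash"])
print(shlex.join(argv))
```

Keeping the arguments as a list rather than a single string avoids shell quoting problems if the list is later passed to a process launcher.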

Docker has made life easier for enterprises: applications can now be contained, easily shipped across hosts, and distributed with less friction between teams. Docker Hub today offers 450,000 publicly available images for reuse. A few of the famous ones are the nginx web server, Ubuntu, Redis, swarm, MySQL, and so on. Each one has been downloaded more than 10 million times. Docker Engine has been downloaded 4 billion times so far, and the number is still growing. Docker has enormous community support, with 2,900 community contributors and 250 meetup groups. Docker is now available on Windows and Macintosh. Microsoft officially supports Docker through its public cloud platform, Azure.

eBay, a major e-commerce company, uses Docker to run its same-day delivery business. eBay uses Docker in its continuous integration (CI) process. Containerized applications can be moved seamlessly from a developer's laptop to test and then production machines. Docker also ensures that the application runs the same way on developer machines and on production instances.

ING, a global finance services organization, faced nightmares with constant changes required to its monolithic applications built using legacy technologies. Implementing each change involved a laborious process of going through 68 documents to move a change to production. ING integrated Docker into its continuous delivery platform, which provided more automation capabilities for test and deployment, optimized utilization, reduced hardware costs, and saved time.

Up to Docker release 0.9, containers were built using LXC, which is a Linux-centric technology. Docker 0.9 introduced a new driver called libcontainer alongside LXC. The libcontainer library is now a growing open source, openly governed, non-profit library. It is written in the Go language and creates containers using the Linux kernel API directly, without relying on tightly coupled user-space components such as LXC. This means a lot to companies trending towards containerization. Docker is no longer tied to a single Linux-centric technology, and in the future we might also see Docker adopting other platforms discussed previously, such as Solaris Zones, BSD jails, and so on. Libcontainer is openly available with contributions from major tech giants such as Google, Microsoft, Amazon, Red Hat, VMware, and so on. Docker, at its core, is responsible for developing the core runtime and container format. Public cloud vendors such as Azure and AWS support Docker on their cloud platforms as a first-class citizen.

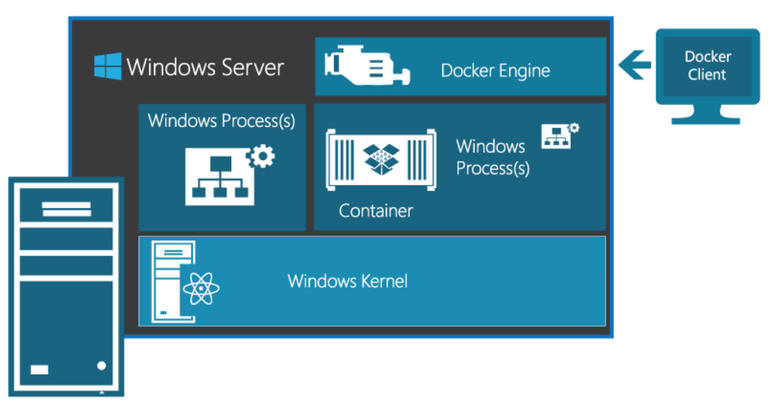

Windows Server Containers is an OS virtualization technology from Microsoft that is available from Windows Server 2016 onwards. Windows Server Containers are lightweight processes that are isolated from each other and have a similar view of the OS, processes, filesystem, and network. Technically speaking, Windows Server Containers are similar to the Linux containers we discussed earlier; the only differences are the underlying kernel and the workloads that run on these containers. The following image describes Windows Server Containers:

Here's a little background about Windows Server Containers:

- Microsoft's research team worked on a program called Drawbridge for bringing the famous container technologies to Windows Server ecosystem

- In the year 2014, Microsoft started working with Docker to bring the Docker technology to Windows

- In July 2015, Microsoft joined Open Container Initiative (OCI) extending its support for universal container standards, formats, and runtime

- In 2015, Mark Russinovich, CTO at Microsoft Azure, demonstrated the integration of containers with Windows

- In October 2015, Microsoft announced the availability of Windows Server with containers and Docker support in its new Windows Server 2016 Technical Preview 3 and Windows 10

Microsoft adopted Docker in its Windows Server ecosystem, so developers can now develop, package, and deploy containers to Windows or Linux machines using the same toolset. Apart from support for the Docker toolset on Windows Server, Microsoft is also launching native support for working with containers using PowerShell and the Windows CLI. Since OS virtualization is a kernel-level feature, there is no cross-platform portability yet, which means a Linux container cannot be ported to Windows or vice versa.

Windows Server Containers are available on the following OS versions:

- Windows Server 2016 Core (no GUI): A typical Windows Server 2016 server with minimal installation. The Server Core version reduces the space required on disk and the attack surface. The CLI is the only tool available for interacting with Server Core, either locally or remotely. You can still launch PowerShell using the `start powershell` command from the CLI. You can also call `get-windowsfeature` to see what features are available on Windows Server Core.

- Windows Server 2016 (full GUI): This is the full version of Windows Server 2016 with the standard Desktop Experience. The server roles can be installed using Server Manager. It is not possible to convert Windows Server Core to the full Desktop Experience; this decision has to be made during installation.

- Windows 10: Windows Server Containers are available for Windows 10 Pro and Enterprise versions (insider builds 14372 and up). The containers and Hyper-V/virtualization features should be enabled before working with containers. Creating containers and images with Windows 10 will be discussed in the following chapters.

- Nano Server: Nano Server is a headless Windows Server that is roughly 30 times (about 93%) smaller than Windows Server Core. It takes far less space, requires fewer updates, offers higher security, and boots much faster than the other versions. There is a dedicated chapter in this book on working with Nano Servers and Windows Containers.

The most important part is that Windows Containers are available in two flavors, Windows Server Containers and Hyper-V Containers. All of the previously mentioned OS types can run Windows Server Containers and Hyper-V Containers. Both container types can be managed using the Docker API, the Docker CLI, and PowerShell.

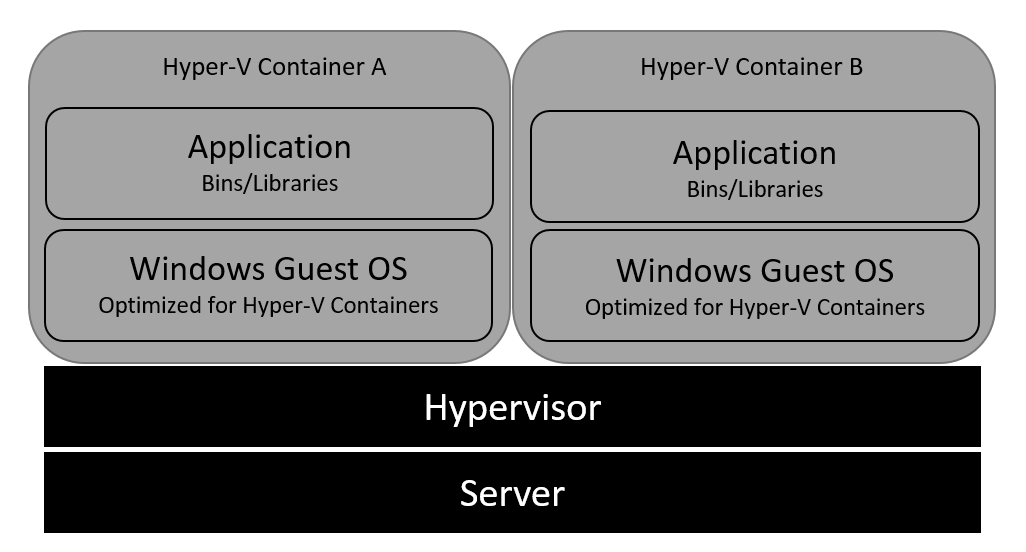

Hyper-V Containers are a special type of container with a higher degree of isolation and security. Unlike Windows Server Containers, which share the kernel, Hyper-V Containers do not share kernels and instead each container runs its own kernel, which makes them special VMs. The following image represents Hyper-V Containers:

The following are some limitations of Windows Server Containers that motivate a second container type:

- Windows Server Containers run as isolated containers on a shared kernel. In a single-tenant environment or in private clouds this is not a problem, since the containers run in a trusted environment. But Windows Server Containers are not ideal for a multitenant environment. There could be security or performance-related issues such as noisy neighbors or intentional attacks on neighboring containers.

- Since Windows Server Containers share the host OS, patching the host OS disrupts the normal functioning of the applications hosted on it.

This is where Hyper-V Containers make perfect sense. The Windows OS consists of two layers, kernel mode and user mode. Windows Server Containers share the same kernel mode but virtualize the user mode to create multiple container user modes, one for each container. Hyper-V Containers run their own kernel mode, user mode, and container user mode. This provides an isolation layer among Hyper-V Containers. Hyper-V Containers are very similar to VMs, but they run a stripped-down version of an OS with a non-sharable kernel. When the container host is itself a VM, this amounts to nested virtualization: a Hyper-V Container running within a virtual container host that in turn runs on a physical host.

The good news is that Windows Server Containers and Hyper-V Containers are compatible. In fact, which container type to use is a deployment-time decision, and we can easily switch the container type without changing the application. Hyper-V Containers also boot quickly, faster than Nano Server. Hyper-V Containers can be created using the same Docker CLI/PowerShell commands, with an additional switch that determines the type of the container. Hyper-V Containers also run on Windows 10 Enterprise (insider builds), which enables developers to develop and test applications on their own machines, either as Windows Server Containers or Hyper-V Containers, and then ship the containers to Windows Server without making any changes. Hyper-V Containers are slower than Windows Server Containers because each one runs its own thin OS. Windows Server Containers are suitable for general-purpose workloads in private clouds or single-tenant infrastructure. Hyper-V Containers are more suitable for highly secure workloads.
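In the Docker CLI, the switch that selects the container type is the `--isolation` option of `docker run`, which on Windows accepts `process` (a Windows Server Container) or `hyperv` (a Hyper-V Container). The following Python sketch only assembles the two command lines so they can be compared side by side; the helper name `docker_run_command` is hypothetical, the image name `nanoserver` is taken from the base images named in this chapter and may differ in your registry, and nothing is executed.

```python
def docker_run_command(image, isolation="process", command=None):
    """Build the argument list for `docker run` with a chosen isolation mode."""
    if isolation not in ("process", "hyperv"):
        raise ValueError("isolation must be 'process' or 'hyperv'")
    argv = ["docker", "run", "--isolation=" + isolation, image]
    if command:
        argv.extend(command)
    return argv


# Same image, same command; only the isolation switch differs.
windows_server_container = docker_run_command("nanoserver", command=["cmd"])
hyperv_container = docker_run_command("nanoserver", isolation="hyperv", command=["cmd"])

print(" ".join(windows_server_container))
print(" ".join(hyperv_container))
```

Because the image and the command are identical in both cases, the choice of container type stays a deployment-time decision rather than a build-time one.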

Windows Container terminology is very similar to Docker's. It is important to understand the terminology before teams start working on containers, as it makes communication between developer and operations teams easier. The core terms are as follows.

A container host is a machine running an OS that supports Windows Containers, such as Windows Server 2016 (full/Core), Windows 10, or Nano Server. If the container host is itself a VM, it must have nested virtualization enabled to run Hyper-V Containers, since those containers then run inside a VM.

Container OS images or base images are the very first layer that makes up every container; these images are provided by Microsoft. Two of the images available today are windowsservercore and nanoserver.

The OS image provides the OS libraries for the container. Developers can create Windows Server Containers by downloading the base image and adding more layers as per the application's needs. OS images are nothing but .wim files that get downloaded from OneGet, a PowerShell package manager for Windows. OneGet is included in Windows 10 and Windows Server 2016. Base OS images are sometimes huge, but Microsoft allows us to download the file once and save it offline, so that it can be reused without an Internet connection or within an enterprise network.

Container images are created by users, deriving from a base OS image. Container images are read-only templates that can be used to create containers. For example, a user can download the Windows Server Core image, install IIS and the application components, and then create an image. Every container made from this image comes with IIS and the application preinstalled. You can also reuse prebuilt images that come preinstalled with MySQL, nginx, node, or Redis by searching the remote repository, and customize them as per your needs. Once customized, a container can be converted to an image again.
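The layering idea can be sketched in a few lines of Python. This is a conceptual model only, not how Windows stores images on disk: each layer is a read-only mapping from file path to content, an image is a stack of such layers, and a container adds one private writable layer on top. The function names and file paths are made up for illustration.

```python
from collections import ChainMap
from types import MappingProxyType


def make_image(parent_layers, new_layer):
    """Return a new image: the parent's layers plus one more read-only layer."""
    return parent_layers + (MappingProxyType(dict(new_layer)),)


def create_container(image_layers):
    """A container sees a private writable layer stacked over the image layers."""
    writable = {}
    # ChainMap searches left to right, so the newest layer wins on lookup,
    # and every write lands in the first (writable) mapping only.
    return ChainMap(writable, *reversed(image_layers))


base_os = make_image((), {"C:/Windows/System32/kernel32.dll": "os library"})
iis_image = make_image(base_os, {"C:/inetpub/wwwroot/index.html": "hello"})

c1 = create_container(iis_image)
c2 = create_container(iis_image)
c1["C:/inetpub/wwwroot/index.html"] = "changed in c1"

print(c1["C:/inetpub/wwwroot/index.html"])  # the change is visible in c1
print(c2["C:/inetpub/wwwroot/index.html"])  # c2 still sees the image content
```

Both containers start from the same image, and a change made inside one of them never reaches the image or the other container.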

A container registry is a repository for prebuilt images. Windows Server 2016 maintains a local repository as well. Every time a user tries to install an image, the local repository is searched first and then the public repository. Images can also be stored in a public repository (Docker Hub) or a private repository. Base OS images have to be installed first before using any prebuilt images such as MySQL, nginx, and so on. Use docker search to search the repository.
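The lookup order described above (local repository first, then the remote one) can be sketched as follows. This is an illustrative model, not the Docker implementation; `resolve_image` and the dictionary-based repositories are assumptions made for the example.

```python
def resolve_image(name, local_repo, remote_repo):
    """Return (source, image), preferring the local repository over the remote."""
    if name in local_repo:
        return "local", local_repo[name]
    if name in remote_repo:
        image = remote_repo[name]
        local_repo[name] = image  # keep a local copy for the next lookup
        return "remote", image
    raise LookupError("image not found: " + name)


local_repo = {"windowsservercore": "base OS image"}
remote_repo = {"nginx": "prebuilt nginx image", "redis": "prebuilt redis image"}

first = resolve_image("nginx", local_repo, remote_repo)
second = resolve_image("nginx", local_repo, remote_repo)
print(first[0], second[0])
```

The first request for an image is served from the remote repository; once it has been downloaded, later requests are served locally.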

A Dockerfile is a text file containing instruction sets that are executed in sequential order to prepare a new container image. Docker instruction sets are divided into three categories: instructions for downloading a base OS image, instructions for creating the new image, and instructions on what to run when new containers are created from the new image. The Dockerfile is the input to the Docker build step, which creates the image. Users can also use PowerShell scripts within the instruction sets.
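To make the three categories concrete, the following Python sketch renders a small Dockerfile from them, using the `FROM`, `RUN`, and `CMD` instructions. The helper `render_dockerfile` is hypothetical; the base image name `windowsservercore` comes from this chapter and may need a registry prefix in practice, and the IIS installation step is just one example of a build instruction.

```python
def render_dockerfile(base_image, build_steps, startup_command):
    """Render Dockerfile text from the three instruction categories."""
    lines = ["FROM " + base_image]                      # 1. base OS image
    lines += ["RUN " + step for step in build_steps]    # 2. steps that build the image
    lines.append("CMD " + startup_command)              # 3. what new containers run
    return "\n".join(lines) + "\n"


dockerfile_text = render_dockerfile(
    base_image="windowsservercore",
    build_steps=["powershell -Command Install-WindowsFeature Web-Server"],
    startup_command='["cmd"]',
)
print(dockerfile_text)
```

Saved as a file named Dockerfile, text like this is what `docker build` takes as input to produce the image.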

The following are a few benefits of containerization:

- Monolithic apps to microservices: Microservices is an architecture pattern in which a single monolithic application is split into thin manageable components. This promotes focused development teams, smoother operations, and instant scalability for each module. Containers are ideal deployment targets for microservices. For example, you can have a frontend web app run in an individual container and other background services, such as an e-mail sending process, a thumbnail generator, and so on, run in separate containers. This allows each of them to be updated individually and scaled as per load, and it enables better resource management.

- Configuration as code: Containers can be created, started, stopped, and configured using clean instruction sets. Integrating these instructions into the application build system enables a lot of automation options. For example, you can create and configure containers automatically as part of your CI and CD pipelines so that development teams can ship increments at a faster pace.

- Favors DevOps: DevOps is a cultural shift in the way operations and developer teams work together seamlessly to validate and push increments to production systems. Containers help with faster provisioning of dev/test environments for running various intermediate steps such as unit testing, security testing, integration testing, and so on. Without containers, many of these steps might need preplanning for infrastructure procurement, provisioning, and environment setup. Containers can be quickly packaged and deployed using the existing infrastructure.

- Modern app development with containers: Many new open source technologies are designed with containers or microservices in mind, so that applications built using these technologies are inherently container aware. For example, ASP.NET Core/ASP.NET 5 applications can be deployed to Linux and Windows alike because ASP.NET Core is technically isolated from web servers and the runtime engine. It can run on Linux with .NET Core as the runtime engine and Kestrel as the web server.

Azure has grown by leaps and bounds to become a top class public cloud platform for on demand provisioning of VMs or pay-per-use services. With the focus shifting towards resource optimization and microservices, Azure also provides a plethora of options for running both LXC and Windows Containers on Azure.

The Windows Server 2016 Core with containers image is readily available on Azure. Developers can log in to the portal, create a Windows Server 2016 Core machine, and run containers within minutes. It comes preinstalled with the Docker runtime environment. Users can download the Remote Desktop Client from the portal and run native Docker commands using the Windows CLI or PowerShell. Windows Server Containers are the only option on Azure; it does not support Hyper-V Containers. In order to use Hyper-V Containers on premise, a container host is required.

Azure Container Service (ACS) is a PaaS offering from Microsoft, which helps you create and manage a cluster of containers using orchestration services such as Swarm or DC/OS. ACS can be used as a hosted cluster environment, managed using your favorite open source tools or APIs. For example, you can log in to the portal and create a Docker Swarm by filling a few parameters such as Agent count, Agent virtual machine size, Master count, and DNS prefix for container service. Once the cluster is created it can be managed using your favorite tool set such as Docker CLI or API in this case.

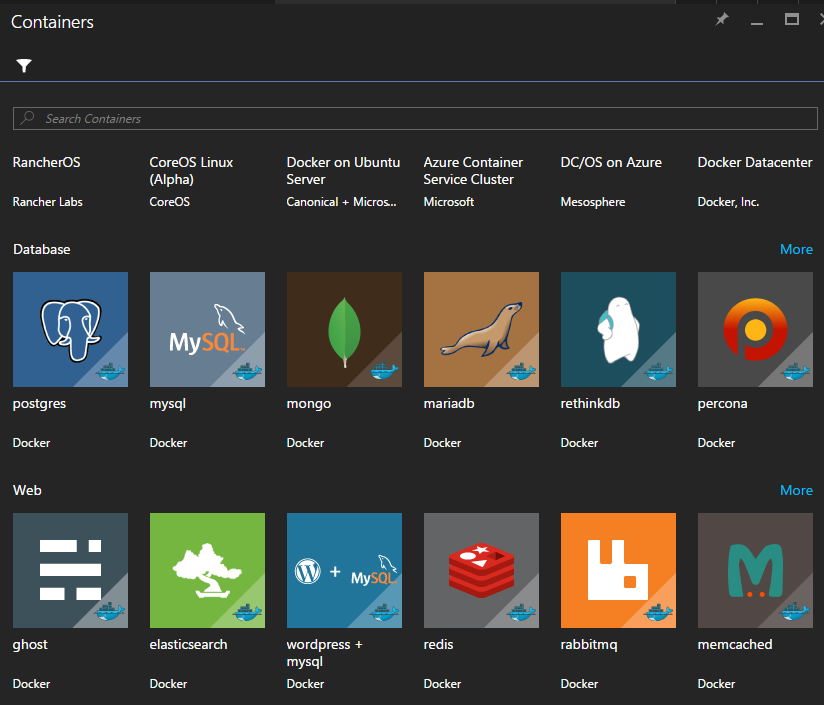

Azure also provides ready-to-deploy Azure Resource Manager (ARM) templates to automate the provisioning of Windows Server Core with containers. ARM templates can be used for deploying a dev/test Docker Swarm on Azure within minutes. ARM (JSON) templates are great tools to integrate with your continuous build and deployment process. Azure also provides prebuilt Docker images such as MySQL, MongoDB, Redis, and so on, as shown in the following screenshot:
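For readers who have not seen an ARM template, the following Python sketch prints the top-level shape of one as JSON. It is a skeleton only: the `resources` list is deliberately left empty, and the parameter names (`agentCount`, `masterCount`, `dnsPrefix`) are hypothetical names chosen to mirror the portal fields mentioned earlier, not the parameters of any published template.

```python
import json


def arm_template_skeleton():
    """Return the top-level structure of an ARM template as a dictionary."""
    return {
        "$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentTemplate.json#",
        "contentVersion": "1.0.0.0",
        # Values supplied at deployment time (hypothetical names).
        "parameters": {
            "agentCount": {"type": "int", "defaultValue": 2},
            "masterCount": {"type": "int", "defaultValue": 1},
            "dnsPrefix": {"type": "string"},
        },
        # The resources to provision would be declared here.
        "resources": [],
    }


template_json = json.dumps(arm_template_skeleton(), indent=2)
print(template_json)
```

Because the template is plain JSON, it can be kept in source control next to the application and deployed from a build pipeline with different parameter values per environment.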

Azure provides a free trial account with $200 of credit for a 30-day period, which you can use to try out the services described here.

Both containers and VMs aim to solve the common problems of resource wastage, manual effort in resource procurement, and the high cost of running monolithic applications. It is worth comparing VMs with containers, since each has its own set of pros and cons. A few points of comparison are:

- Virtualization layer: Containers are very different from VMs by design. VM virtualization happens at the hardware level, which allows multiple VMs to run in parallel on a single physical machine, whereas containers run on a single host OS as if each container were running in its own OS. This design has the disadvantage that containers on one host cannot run different OSes. But this can be easily overcome by using a hybrid computing model that combines VM and container virtualization.

- Size: VMs are heavyweight, whereas containers are extremely light. VMs contain the complete OS, kernel, system libraries, system configuration files, and all the directory structure required by the OS. Containers only contain application-specific files, which makes them extremely lightweight and easily sharable. Also, a VM consumes memory even when it is idle or only running some background process, which restricts the number of VMs that can run on the host. Containers occupy very little space, and they can be easily suspended/restarted due to their extremely low boot times.

- Portability: The same size constraints are a huge disadvantage for VMs. For example, developers writing code cannot test the applications as if they were running in production instances. This can be partially overcome by using a modern thin server OS such as Nano Server or Windows Server Core, which we will discuss in the following chapters. With containers it is possible, since containers run alike on developer machines and production servers, and since containers are lightweight they can easily be shared by uploading them to any shared storage.

- Security: Undoubtedly VMs have the upper hand here due to isolation at the very bottom level. Containers are more vulnerable to OS-level attacks; if the OS is compromised, all the containers running on it will also be compromised. That being said, it is possible to make a container highly secure by implementing proper configuration. In a shared or multitenant environment, noisy neighbors could also cause problems by demanding more resources, affecting the other containers running on the machine.

The microservices architecture encourages packaging applications and moving them across hosts/environments at a faster pace. As a result, enterprises adopting containerization face problems with an increasing number of containers. Containers have created new administration challenges for enterprises, such as managing a group of containers or a cluster of container hosts. A container cluster is a group of nodes; each node is a container host with containers running inside it. It is important for enterprises/teams to manage the container hosts and to facilitate communication channels across them.

Cluster management tools help operations teams or administrators manage containers and container hosts from a single management console. They assist in moving containers from one host to another, controlling resource management (CPU, memory, and network allocation), executing workflows, scheduling jobs/tasks (a job/task is a set of steps to be executed on one or more clusters), monitoring, reliability, scalability, and so on. Now that you know what clusters are, let's see the variety of offerings available on the market today and the core values offered by each one. Cluster management will be discussed in more detail in the following chapters.

Swarm is a native cluster management solution from Docker. Swarm helps you manage a pool of container hosts as a single unit. Swarm is delivered as an image called the Docker Swarm image. The Docker Swarm image should be installed on a node, and the container hosts should be configured with TCP ports and TLS certificates to connect to the swarm. Swarm provides an API layer to access the container or cluster services so that developers can build their own management interface. Swarm follows a Plug and Play (PnP) architecture, so most of the components of Swarm can be replaced as per needs. A few of the cluster management capabilities provided by Swarm are:

- Discovery services for discovering nodes using public/private hosts or even a list of IPs

- Docker Compose, which can be used for orchestrating multicontainer deployment in a single file

- Advanced scheduling for strategically placing containers on selective nodes depending on the ranking/priority and filtering nodes
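The filter-then-rank idea behind this kind of scheduling can be sketched in Python. This is a simplified illustration and not Swarm's actual scheduler: the `schedule` function, the node dictionaries, and the label names are assumptions, and real schedulers also account for CPU and memory capacity. Nodes are first filtered by label constraints, then ranked either to spread containers evenly or to pack them onto the busiest eligible node.

```python
def schedule(nodes, constraints, strategy="spread"):
    """Pick a node: filter by label constraints, then rank by strategy."""
    candidates = [
        node for node in nodes
        if all(node["labels"].get(key) == value for key, value in constraints.items())
    ]
    if not candidates:
        raise RuntimeError("no node satisfies the constraints")
    if strategy == "spread":
        # Prefer the node running the fewest containers.
        return min(candidates, key=lambda node: node["containers"])
    if strategy == "binpack":
        # Prefer the node that is already running the most containers.
        return max(candidates, key=lambda node: node["containers"])
    raise ValueError("unknown strategy: " + strategy)


nodes = [
    {"name": "node-1", "labels": {"storage": "ssd"}, "containers": 4},
    {"name": "node-2", "labels": {"storage": "ssd"}, "containers": 1},
    {"name": "node-3", "labels": {"storage": "hdd"}, "containers": 0},
]

spread_choice = schedule(nodes, {"storage": "ssd"})["name"]
binpack_choice = schedule(nodes, {"storage": "ssd"}, strategy="binpack")["name"]
print(spread_choice, binpack_choice)
```

Here node-3 is the least loaded node overall, but it is filtered out before ranking because it does not carry the requested label.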

Kubernetes is a cluster manager from Google. Google was the first company to introduce the concept of container clusters. Kubernetes has many amazing features for cluster management. A few of them are:

- Pods: Kubernetes pods are used for logically grouping containers. Pods are scheduled and managed as independent units. Pods can also share data and communication channels. On the down side if one container in a pod dies, the whole pod dies. This might be valid in the cases of these containers being interdependent or closely coupled.

- Replication controllers: Replication controllers ensure reliability across hosts. For example, let's say you always want three pods of the backend service running; replication controllers ensure that three pods are running by checking their health on a regular basis. If any pod doesn't respond, the replication controller immediately spins up another instance of the pod and therefore ensures reliability and availability.

- Labels: Labels are used to collectively name a set of pods so that teams can operate them as collective units. Naming can be done using environments such as dev, staging and production, or using geographical locations. Replication controllers can be used to collectively migrate collection of pods across nodes, grouping them by labels.

- Service proxy: Within a huge container cluster, you would need a neat and clean mechanism to resolve pods/container hosts using labels or name queries. Service proxy helps you resolve requests to a single logical set of pods using label-driven selectors. In the future you might see custom proxies that resolve to a pod based on custom configuration. For example, if you want to serve your premium customers using one set of frontend pods that are configured for quick response times and basic customers using another set of frontend pods, you can configure the environment accordingly and route traffic based on smart domain driven decisions.
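The interplay of labels and replication controllers can be illustrated with a small Python model. It is a conceptual sketch, not the Kubernetes API: pods are plain dictionaries, `matches` plays the role of a label selector, and `reconcile` is one pass of a control loop that replaces unhealthy pods until the desired count is reached. All names are hypothetical.

```python
import itertools

_next_id = itertools.count(1)


def matches(pod, selector):
    """True if the pod carries every label named in the selector."""
    return all(pod["labels"].get(key) == value for key, value in selector.items())


def reconcile(pods, selector, desired_replicas):
    """One control-loop pass over the pods selected by the label selector."""
    untouched = [pod for pod in pods if not matches(pod, selector)]
    healthy = [pod for pod in pods if matches(pod, selector) and pod["healthy"]]
    # Too few healthy pods: start replacements that carry the selector's labels.
    while len(healthy) < desired_replicas:
        healthy.append({
            "name": "pod-%d" % next(_next_id),
            "labels": dict(selector),
            "healthy": True,
        })
    # Too many: keep only the desired number.
    return untouched + healthy[:desired_replicas]


pods = [
    {"name": "backend-a", "labels": {"app": "backend", "env": "dev"}, "healthy": True},
    {"name": "backend-b", "labels": {"app": "backend", "env": "dev"}, "healthy": False},
    {"name": "backend-c", "labels": {"app": "backend", "env": "dev"}, "healthy": True},
    {"name": "frontend-a", "labels": {"app": "frontend", "env": "dev"}, "healthy": True},
]

pods = reconcile(pods, {"app": "backend"}, desired_replicas=3)
backend = [pod for pod in pods if matches(pod, {"app": "backend"})]
print([pod["name"] for pod in backend])
```

The unhealthy backend pod is dropped and replaced, while the frontend pod is left alone because the selector does not match its labels.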

DC/OS is another distributed kernel OS built using Apache Mesos for cluster management. Apache Mesos is a cluster manager that integrates seamlessly with container technologies such as Docker for scheduling and fault tolerance. Apache Mesos is in fact a generic cluster management tool that is also used in big data environments such as Hadoop and Cassandra. It also gels well with several batch scheduling, PaaS platforms, long-running applications, and mass data storage systems. It provides a web dashboard for cluster management.

Apache Mesos's complex architecture, configuration, and management make it difficult to adopt directly. DC/OS makes this significantly more straightforward. DC/OS runs on top of Mesos and does the job that a kernel does on your laptop OS, but over a cluster of machines. It provides services such as scheduling, DNS, service discovery, package management, and fault tolerance over a collection of CPUs, RAM, and network resources. DC/OS is supported by a large developer community, rich diagnostics support, and management tools using GUI, CLI, and API.

ACS, which was discussed previously, has a reference implementation for DC/OS. Within a few clicks Azure makes it easy to build a DC/OS cluster on the cloud and makes it ready to deploy applications. The same sets of services are provided for on premise data centers or private clouds using Azure Stack (Azure Stack is an OS provided by Microsoft for managing private clouds). You also get an additional benefit of integrating with other rich sets of services provided by Azure for increasing the agility and scalability.

Two other cluster managers that are not discussed here are Amazon EC2 Container Service, which is built on top of Amazon EC2 instances and uses shared state scheduling services, and CoreOS Tectonic, which is Kubernetes as a service on AWS (Amazon's cloud offering).

Microsoft has released an amazing set of tools for working with Windows Containers for both application developers and IT administrators. Let's look at a few of them.

Visual Studio Tools for Docker comes with Visual Studio 2015, which helps you deploy ASP.NET Core apps as containers to Windows Container/LXC hosts. It comes with context options for Dockerizing an existing application. These tools also provide rich debugging functionalities to debug code from containers. By just pressing F5 you can run and debug applications using local Docker containers and then push to production Windows Server machines with containers with a single click.

Visual Studio Code is another source code editor by Microsoft that is available for free. Visual Studio Code also runs on non-Windows OSes such as Linux and OS X. It includes support for debugging, Git, IntelliSense, and code refactoring. A Docker Toolbox extension is also available for Visual Studio Code. Auto code completion makes it easy to author Docker and Docker Compose files.

Not just for application deployment, Microsoft also provides automation capabilities through its cloud repository, Visual Studio Online (VSO) or Visual Studio Team Services (VSTS). VSO provides lots of features to automate, build, and deploy to container hosts or a cluster. VSO provides predefined build steps to convert an application into a Docker image during build. It also provides ARM templates for one-click deployment and service endpoints to private Docker repositories for accessing existing images from VSTS as part of your build. Pretty cool, isn't it?

For simulating a Docker environment on developer systems we can use Docker for Windows. Docker for Windows uses Windows Hyper-V features to provide the Docker Engine on Windows and Linux kernel-specific features for the Docker daemon. Since Docker for Windows relies on Hyper-V and the containers feature, it runs only on 64-bit Windows 10 Pro, Enterprise, and Education, Build 10586 or later. VMware, VirtualBox, or any other hosted virtualization software cannot run in parallel with Docker for Windows. Docker for Windows can be used to run containers, build custom images, share drives with containers, configure networks, and allocate container-specific CPU and RAM for performance or load testing.

For PCs that do not meet the requirements of Docker for Windows, there is an alternative solution called Docker Toolbox for Windows. Unlike Docker for Windows, Docker Toolbox does not run natively; instead it runs on a customized Linux VM called a Docker Machine. Docker Toolbox for Windows runs on 64-bit Windows 7, 8, and 8.1 only. Docker Machine is specially customized to run as a Docker host. Once it is installed, the host can be accessed from Windows using the Docker client, CLI, or PowerShell. Docker Toolbox for Windows comes with a plethora of options to run and manage Docker containers and images, along with Docker Compose and Kitematic, a GUI for running Docker on Windows and Macintosh. Docker Toolbox for Windows also needs virtualization to be enabled. Boot2Docker, an emulator for Windows, is now deprecated.

The heat is on! It is not just Docker, Linux, and Microsoft in the race anymore. With enterprises witnessing the benefits of containerization and the pace at which adoption is growing, more companies have started putting effort into building new products or services around containers. A few of them are listed in the following sections.

Unlike Windows Containers, which, as we have learned so far, run on a kernel modified to support containers, Turbo allows you to package applications and their dependencies into lightweight, isolated virtual environments called containers. These containers can then be run on any Windows machine that has Turbo installed, which makes Turbo extremely easy to adopt on Windows.

Turbo is built on top of Spoon VMs. Spoon is an application virtualization engine that provides lightweight namespace isolation of Windows Core OS features such as the filesystem, registry, process, network, and threading. These containers are portable, which means no client is required to run them. Turbo can containerize anything from simple desktop applications to complex server objects such as Microsoft SQL Server. Turbo VMs are extremely light and also possess streaming capabilities. Teams can share Spoon VMs using a shared repository called Turbo Hub.

Docker is no longer the only container available on Linux. CoreOS developed a new container technology called Rocket, which is quite different from Docker in architecture. Rocket does not have a daemon process; Rocket containers (called App Containers) are created as child processes to the host process, which are then used to launch the container. Each running container has a unique identity. Docker images are also convertible to App Container Image (a naming convention used for Rocket images). Rocket runs on a container runtime called App Container Runtime.

In this chapter we covered the following points:

- VMs help create isolated environments, each with guest OS. Software applications or services can run inside a VM with complete isolation from another VM.

- Hypervisors can run discrete VMs such as Linux and Windows together.

- VMs suffer from portability and packaging problems due to their huge size and intense orchestration needs.

- Containerization helps run software systems as isolated processes inside a machine. Containers increase the density of applications per machine, and also provide application packaging and shipping capabilities.

- Windows Server 2016 supports containerization using kernel features such as filesystems, namespaces, and registry.

- Windows Server 2016 runs two types of containers, Windows Server Containers and Hyper-V Containers. Windows Server Containers share OS kernels, whereas Hyper-V Containers run their own OS.

- Nano Server is a deeply refactored version of Windows Server, which is 93% smaller, remotely-administered, and ideal for microservices.

- Microsoft Azure supports cluster management solutions such as DC/OS and Swarm.

Download code from GitHub
