Foundation of VMware Cloud on AWS

This chapter provides an introduction to VMware Cloud on AWS, hybrid cloud challenges, and how VMware Cloud on AWS solves them. In addition, you will learn about the different use cases that VMware Cloud on AWS addresses and its architecture principles.

This chapter covers the following topics:

- Introduction to VMware Cloud on AWS

- Introducing hybrid and public cloud challenges

- Understanding VMware Cloud on AWS use cases

- Understanding the VMware Cloud on AWS high-level architecture

- Discovering vCenter, the Cloud Services Platform (CSP), and CSP console

- Demystifying VMware vSAN, the primary storage technology for VMware Cloud on AWS

Introduction to VMware Cloud on AWS

VMware Cloud on AWS is a product jointly engineered by VMware and AWS that enables customers to run proven, enterprise-grade VMware software-defined data centers (SDDCs) on bare-metal AWS hardware. It enables enterprise IT and operations teams to continue adding value to their business in the AWS cloud while maximizing their VMware investments, without the need to buy new hardware. Customers can quickly and confidently scale capacity up or down, without change or friction, for any workload, with access to native cloud services.

Understanding VMware Cloud on AWS is not possible without knowing the broad range of capabilities of the AWS cloud (https://aws.amazon.com/resources/analyst-reports/gartner-mq-cips-2021/). VMware Cloud on AWS helps customers design their environments using different cloud models, facilitating connections between on-premises deployments and public clouds.

AWS was officially launched in 2006 and has grown rapidly to become one of the world’s largest cloud providers. AWS operates in over 25 geographic regions worldwide, with plans to expand to more regions. This means that users can deploy their applications and services in locations closest to their customers, improving performance and reducing latency. AWS provides over 175 fully featured services for computing, storage, databases, analytics, machine learning, Internet of Things (IoT), security, and more.

AWS is used by millions of customers worldwide, including start-ups, large enterprises, and government organizations.

One of the key benefits of the AWS cloud is its flexibility and scalability. Users can quickly and easily provision the needed resources and only pay for what they use.

The AWS cloud also offers a range of deployment options, including private, public, and hybrid cloud models. This allows users to tailor their cloud environment to their specific needs, depending on security requirements, compliance regulations, and performance goals.

VMware Cloud on AWS is a jointly engineered and fully managed service that brings VMware's enterprise-grade SDDC software to Amazon Elastic Compute Cloud (EC2) bare-metal instances running on the AWS Global Infrastructure. This integration enables customers to seamlessly migrate their workloads to VMware Cloud on AWS without re-platforming their virtual machines (https://aws.amazon.com/resources/analyst-reports/gartner-mq-cips-2021/).

Introduction to cloud deployment models

Companies can choose from different models to deploy cloud services. The deployment model will be driven by the application requirements, business use cases, and existing IT investments. The following section describes the different approaches.

Public cloud

Cloud computing delivers IT services with flexible pay-as-you-go pricing and consumption models. Customers can access as many resources as they need, often immediately. Charges are only applicable to resources that have been used or reserved. Customers can consume fully managed services that encompass computing, storage, networks, databases, containers, application platforms, functions and much more.

An application can be created directly in the cloud, in which case it is known as a cloud-native application. Alternatively, it can be migrated from existing on-premises infrastructure and take advantage of public cloud benefits through a modernization process. In this process, known as refactoring, customers break the application's monolithic architecture into microservices.

Private cloud or on-premises

The private cloud approach deploys resources on-premises. It uses physical facilities, hardware, virtualization infrastructure, and automation software that, in most cases, are dedicated to a single organization.

Usually, customers will own the facilities and physical IT hardware in their on-premises environment. Private clouds are often used to meet compliance with data governance regulations or to leverage investment into existing IT infrastructure.

Customers who were not born in the cloud have a significant part of their workloads running in their on-premises infrastructure. VMware is a leader in the on-premises SDDC with its VMware Cloud Foundation (VCF) software stack and vSphere’s virtualization solution.

Hybrid cloud

A hybrid cloud is an IT architecture and an operational deployment model that enables customers to leverage public and private clouds. A hybrid cloud enables delivering applications and connecting infrastructure with common orchestration and management tools between on-premises and public cloud providers.

Processes and workloads established for on-premises need to be integrated with the workloads and processes in the public cloud to ensure unified management of data, applications, and their associated governance, life cycle management, and security policies.

Hybrid cloud solution core principles

The following are the hybrid cloud solution core principles:

- Seamless workload mobility between private cloud and public cloud environments

- Provision and scale resources through an API or a self-service portal of the public cloud provider

- Network connectivity between environments through a high-speed, reliable, and secure solution

- Automate processes across environments with a common automation process, toolset, and APIs

- Manage and monitor environments with unified tools between environments

Different approaches to the hybrid cloud

Public cloud providers have solutions that bring their native centralized data center services to run in their customers' on-premises environments. Examples include Google with its Anthos solution for Kubernetes workloads, AWS with Outposts and Amazon EKS Anywhere for Kubernetes workloads, and Microsoft with Azure Stack and Azure Arc.

VMware's approach is to extend existing VMware on-premises infrastructure to the cloud. It does not build new infrastructure in customer data centers to host each hyperscaler's point solutions.

This approach can help organizations benefit from hybridity with public clouds without rethinking their application delivery and security model, governance, policies, or procedures.

Multi-cloud

Multi-cloud is an operational model that combines more than one public cloud and, potentially, a private cloud. Many customers rely on multiple public cloud providers. Multi-cloud adoption often develops bottom-up in organizations, with business units and development teams procuring their own cloud services without IT knowledge or guidance. It can also result from a merger or acquisition, where the operations teams of two organizations that adopted different cloud strategies need to converge.

For instance, an organization might use Google App Engine for Platform as a Service (PaaS) and AWS EC2 and Lambda for Infrastructure as a Service (IaaS) and Function as a Service (FaaS). At the same time, it might run a third, private cloud with VMware on-premises.

Note

Workloads are not portable between public cloud providers by default, and vendor lock-in is a concern for customers.

The VMware hybrid cloud stack can run on major public cloud providers, not only AWS. It enables customers to migrate their IaaS workloads between different public clouds and their private cloud without public cloud vendor lock-in.

Hybrid cloud challenges

Customers who naively implement a hybrid cloud strategy encounter challenges in five pillars:

- Operational inconsistencies
- Different skill sets and tools
- Disparate management tools and security controls
- Inconsistent application service-level agreements (SLAs)
- Incompatible machine formats

Without proper adjustments in those pillars, customers may see decreased agility and increased cost and risk.

The following figure summarizes those pillars:

Figure 1.1 – Five challenges of implementing a hybrid cloud strategy

Now, let’s explore those challenges in further detail in the following section.

Describing the challenges of the hybrid cloud

Cloud infrastructures have become more attractive to organizations driven by business transformation initiatives. The cloud improves agility with faster testing and development cycles and reduces costs and risks. Organizations are migrating to the cloud for those reasons.

While the cloud provides business value, many challenges arise when moving from on-premises to the public cloud. Many customers don't realize the changes they need to make to take full advantage of the public cloud's benefits. A cloud strategy that addresses the hybrid cloud challenges needs to consider people, processes, and technology.

Operational inconsistency

The tools and procedures that operation teams are leveraging to manage the life cycle of their applications and workloads on-premises are different from the public cloud.

For example, several kinds of tooling need to be repurposed from vSphere-based APIs/SDKs to AWS APIs and native services such as AWS CloudTrail and Amazon CloudWatch:

- Application and infrastructure monitoring and observability tools
- Automation and management tools
- CI/CD tools for deploying applications

This often goes hand in hand with adopting infrastructure as code using tools such as HashiCorp Terraform.

Disparate security controls

Closely related to operational inconsistency, customers achieve security and compliance through existing security procedures and tools, and these must be adapted for the public cloud. The adaptation spans the following areas:

- User authentication
- Identity and access management
- Network security controls, such as firewalls, intrusion prevention systems (IPSs), and web application firewalls (WAFs)
- Application-level protection
- Monitoring and logging for Security Operations Center (SOC) environments

Skill sets and certifications

IT personnel managing VMware-based infrastructure require an investment in recertification and retraining to operate workloads in the public cloud. Skilled IT and DevOps personnel are in short supply in the market.

Inconsistent application SLAs

Migrating workloads in a high-availability architecture while providing production-grade SLAs requires application-level architecture adjustments to enjoy the resiliency of public cloud services. For instance, migrating a virtual machine to an EC2 service in the cloud doesn’t make it highly available. On-premises resiliency mechanisms such as vSphere High Availability (HA) and Distributed Resource Scheduler (DRS) are unavailable on an EC2 service without making architecture adjustments.

Incompatible machine formats

Migration requires a manual conversion of each virtual machine, including the hypervisor format, operating system disks, and network IP address configuration. The process must also account for configurations that are unsupported in the cloud, especially legacy end-of-life and 32-bit operating systems. Additionally, the format conversion problem creates a vendor lock-in challenge.

Customers who don't consider these challenges in advance may see developer agility decrease instead of increase. They may also see project risk and costs rise instead of fall.

VMware Cloud on AWS was designed to address all of those challenges of the hybrid cloud deployment model.

Understanding VMware Cloud on AWS use cases

This section will describe the most common use cases of hybrid cloud with VMware Cloud on AWS.

The use cases that we’ll explore in this section are data center extension, next-generation application modernization, cloud migrations, and disaster recovery.

The following figure summarizes the four use cases:

Figure 1.2 – The use cases of VMware Cloud on AWS

Let’s describe each of the use cases in further detail in the following section.

Data center extension

Customers look to integrate their existing data center infrastructure with the public cloud. They want the benefits offered by the public cloud without being affected by the hybrid cloud challenges described in the earlier sections. A typical trigger is an on-premises environment that fails to deliver IT capacity in time to meet business needs. Capacity can be limited by a lack of physical space, by supply chain issues, or by a need for temporary capacity.

With VMware Cloud on AWS, a consistent infrastructure between vSphere environments in the data center and the vSphere SDDC that VMware manages in the AWS cloud enables customers to move applications to the AWS cloud or back seamlessly.

VMware Aria is a cloud management platform that allows customers to manage VMware Cloud on AWS as an extension of an existing customer data center. Quick-win workload types are test/development and virtual desktop infrastructure (VDI) environments. Kubernetes workloads running on-premises can migrate to VMware Cloud on AWS through the Tanzu portfolio integration.

Cloud migration

Cloud migration is known as rehosting or lift-and-shift in AWS terms and as relocating in VMware Cloud on AWS terms. It involves migrating existing brownfield applications from on-premises to the public cloud with minimal to no adjustments to the application code or VM format.

Common business drivers include the following:

- An expiring lease on a data center colocation facility
- A management decision to evacuate an existing data center as part of a cloud-first approach
- A hardware refresh triggered by end-of-life equipment

Mergers and acquisitions are an additional driver. One company needs to absorb the IT infrastructure of the acquired company, and branch sites and data centers can be consolidated by migrating applications from on-premises data centers to the public cloud to reduce the total cost of ownership.

VMware Cloud on AWS is the fastest way for customers to migrate VMware vSphere-based workloads to the cloud. The same VMware stack, with vSphere as the hypervisor, runs both on-premises and in the cloud. As a result, customers can relocate workloads without the conversion work of a standard lift-and-shift and without refactoring their applications.

Next-generation apps

Some customers go through a refactoring process, such as breaking up a monolithic application into a microservice architecture. They can do so on the same platform by leveraging the Tanzu portfolio, which is included in VMware Cloud on AWS.

VMware Cloud on AWS provides high bandwidth and low latency connectivity to native services that AWS offers. This integration provides a consistent and easy way for virtual machines and containers to access AWS services. These innovative AWS services can be seamlessly integrated with customers’ applications to enable incremental refactoring and modernization enhancements.

Disaster recovery

The VMware Disaster Recovery as a Service (DRaaS) offering is available with VMware Cloud on AWS. It enables customers to protect and recover applications without maintaining a secondary on-premises site or a third DR site. VMware delivers and manages it as a service. IT teams manage their cloud-based resources using familiar VMware tools, without learning new skills or performing a lift-and-shift migration.

Customers using traditional on-premises DR build a secondary site that replicates the production site. Because of the cost, they must prioritize which workloads are protected. Operating the secondary DR site is complex because of manual processes and siloed IT solutions.

DRaaS with VMware Cloud on AWS can reduce secondary site costs, simplify DR operations, and help customers meet or improve their recovery time objective (RTO) and recovery point objective (RPO). VMware Cloud Disaster Recovery (VCDR) and VMware Site Recovery Service (VSR), powered by VMware Site Recovery Manager (SRM), are offered as part of the DRaaS service with VMware Cloud on AWS.

Understanding the VMware Cloud on AWS high-level architecture

This section will describe the high-level architecture of the main components that comprise VMware Cloud on AWS.

VMware Cloud on AWS is integrated into VMware's Cloud Services Platform (CSP). The VMware CSP console allows customers to manage their organization's billing and identity and to grant access to VMware Cloud services. You can use the VMware Cloud Tech Zone Getting Started resource (https://vmc.techzone.vmware.com/getting-started-vmware-cloud-aws) to become familiar with setting up an organization and configuring access in the CSP console.

You also use the VMware CSP console to deploy VMware Cloud on AWS. Once the service is deployed, you use the same console to manage the SDDC.

The following figure shows the high-level design of the VMware Cloud on AWS architecture, showing both a VMware Cloud customer organization running the VMware Cloud services alongside an AWS-native organization running AWS services:

Figure 1.3 – High-level architecture of VMware Cloud on AWS

Now, let us switch to the Tanzu Kubernetes service available with VMware Cloud on AWS.

Tanzu Kubernetes with VMware Cloud on AWS

VMware Cloud on AWS includes VMware Tanzu Kubernetes Grid as a service. VMware currently offers several Tanzu Kubernetes Grid (TKG) flavors for running Kubernetes:

- vSphere with Tanzu, or the TKG service: This solution makes vSphere a platform that can run Kubernetes workloads directly on the hypervisor layer. It can be enabled on a vSphere cluster and allows Kubernetes workloads to run directly on ESXi hosts. Additionally, it can create upstream Kubernetes clusters in dedicated resource pools. This flavor is integrated into the VMware Cloud on AWS platform, provides Container as a Service (CaaS), and is included in the base service price.

- Tanzu Kubernetes Grid Multi-Cloud (TKGm): An installer-driven wizard that sets up Kubernetes environments for use across public cloud environments and on-premises SDDCs. This flavor is supported on VMware Cloud on AWS but is not included in the base service price. It can be consumed with a separate license.

- Tanzu Kubernetes Grid Integrated Edition: VMware Tanzu Kubernetes Grid Integrated Edition (TKGI, previously known as VMware Enterprise PKS) is a Kubernetes-based container solution that includes advanced networking, a private registry, and life cycle management. It is beyond the scope of this book.

VMware Tanzu Mission Control (TMC) is a SaaS offering for multi-cloud Kubernetes cluster management and can be accessed through the VMware CSP console. It provides the following:

- Kubernetes cluster deployment and management on a centralized platform across multiple clouds

- The ability to centralize operations and management

- A policy engine that automates access control policies across multiple clusters

- The ability to centralize authorization and authentication with federated identity

The following figure presents a high-level architecture of services available between the on-premises and the VMware Cloud solution in order to provide hybrid operations:

Figure 1.4 – Hybrid operation components connecting on-premises to VMware Cloud

SDDC cluster design

A VMware Cloud on AWS SDDC includes compute (vSphere), storage (vSAN), and networking (NSX) resources grouped together into one or more clusters managed by a single VMware vCenter Server instance.

Host types

VMware Cloud on AWS runs on dedicated bare-metal Amazon EC2 instances. When an SDDC is deployed, VMware ESXi is installed directly on the physical host, without nested virtualization. Other Amazon EC2 instances running on the AWS Nitro System generally follow a pay-per-use model for each running instance. VMware Cloud on AWS, in contrast, is priced per bare-metal host, regardless of the number of virtual machines running on it.
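The following Python sketch makes the per-host pricing model concrete. The hourly rate is a made-up placeholder, not a published VMware Cloud on AWS price. The `sddc_cost` function is also hypothetical. It shows only that the VM count does not enter the calculation when pricing is per host.

```python
# HYPOTHETICAL placeholder rate, not a real VMware Cloud on AWS price
HYPOTHETICAL_HOURLY_RATE_PER_HOST = 10.0


def sddc_cost(hosts: int, hours: float, vms_running: int,
              rate: float = HYPOTHETICAL_HOURLY_RATE_PER_HOST) -> float:
    """Per-host pricing: the bill depends on hosts and hours, not on VM count."""
    _ = vms_running  # deliberately unused: VM density does not change the bill
    return hosts * hours * rate


# The same 3-host cluster over a 730-hour month, lightly vs. densely packed
light = sddc_cost(hosts=3, hours=730, vms_running=20)
dense = sddc_cost(hosts=3, hours=730, vms_running=200)
print(light, dense)  # 21900.0 21900.0
```

In this model, packing more VMs onto the same hosts lowers the effective cost per VM. This is why sizing and consolidation matter more here than with per-instance pricing.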

Multiple host types are available when you design an SDDC, each with different storage and performance specifications. Depending on the workload and use case, customers can use different host types in different clusters of an SDDC to achieve better performance and economics, as depicted in the following figure:

Figure 1.5 – VMware Cloud SDDC with two clusters, one each of i3.metal and i3en.metal host types

At the time of writing this book (2023), three different host types can be used to provision an SDDC.

i3.metal

The i3.metal type is VMware Cloud on AWS's first host type. i3 hosts are ideal for general-purpose workloads. This host instance type may be used in any cluster, including single-, two-, or three-node clusters and stretched cluster deployments. The i3.metal host specification can be found in the following table:

Figure 1.6 – i3.metal host specification

This instance type has a dual-socket Intel Broadwell CPU, with 36 cores per host.

As with all hosts in the VMware Cloud on AWS service, it boots from an attached 12 GB EBS volume.

The host's vSAN configuration consists of eight 1.74 TB disks arranged in two disk groups. One disk in each disk group (two per host) is allocated to the caching tier and is not counted as part of the raw capacity pool.

Hyperthreading is disabled on this instance type, and both deduplication and compression are enabled on the vSAN storage side. As VMware adopts newer host types, use cases for i3.metal are expected to become rare.

i3en.metal

The i3en hosts are designed to support data-intensive workloads. They can be used for storage-heavy or general-purpose workload requirements that cannot be met by the standard i3.metal instance. It makes economic sense in storage-heavy clusters because of the significantly higher storage capacity as compared to the i3.metal host: it has four times as much raw storage space at a lower price per GB.

This host instance type may be used in stretched cluster deployments and regular cluster deployments (two-node and above).

The i3en.metal host specification can be found in the following table:

Figure 1.7 – i3en.metal host specification

The i3en.metal type has hyperthreading enabled by default, providing 96 logical cores (48 physical cores) and 768 GB of memory.

The host's vSAN configuration consists of eight 7.5 TB physical disks that use NVMe namespaces. Each physical disk is divided into four namespaces, for a total of 32 NVMe namespaces per host. Four namespaces per host are allocated to the caching tier and are not counted as raw capacity.

This host type offers significantly larger disks, more RAM, and more CPU cores. Additionally, network traffic is encrypted at the NIC level. On the vSAN storage side, only compression is enabled; deduplication is disabled.
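The namespace arithmetic for i3en.metal can be checked with a small Python sketch. The `HostStorageLayout` class is hypothetical and uses only the nominal figures quoted in this section. Actual usable capacity also depends on vSAN overheads, storage policies, and unit conventions. Treat the result as a rough raw figure, not a sizing answer.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HostStorageLayout:
    """Nominal vSAN device layout of one host (hypothetical helper, not a VMware API)."""
    physical_disks: int
    disk_size_tb: float
    namespaces_per_disk: int  # 1 means the disks are not split into NVMe namespaces
    cache_devices: int        # devices or namespaces reserved for the caching tier

    @property
    def total_devices(self) -> int:
        return self.physical_disks * self.namespaces_per_disk

    @property
    def capacity_devices(self) -> int:
        # Cache-tier devices are not counted as raw capacity
        return self.total_devices - self.cache_devices

    @property
    def device_size_tb(self) -> float:
        return self.disk_size_tb / self.namespaces_per_disk

    @property
    def raw_capacity_tb(self) -> float:
        return self.capacity_devices * self.device_size_tb


# i3en.metal figures from the text: eight 7.5 TB disks, four namespaces each,
# four namespaces per host reserved for the cache tier
I3EN = HostStorageLayout(physical_disks=8, disk_size_tb=7.5,
                         namespaces_per_disk=4, cache_devices=4)
print(I3EN.total_devices, I3EN.capacity_devices, I3EN.device_size_tb, I3EN.raw_capacity_tb)
# 32 28 1.875 52.5
```

The result is in decimal TB. The console reports vSAN capacity in TiB, so this figure will not match console values directly.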

Note

VMware Cloud on AWS customer-facing vSAN storage information is provided in TiB units, not TB units. This may cause confusion when performing storage sizing.
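The two units differ by about 9%, and the gap adds up quickly across a cluster. Here is a minimal Python sketch of the conversion, assuming the standard definitions of 1 TB = 10^12 bytes and 1 TiB = 2^40 bytes:

```python
TB_BYTES = 10**12   # decimal terabyte, as used on drive specifications
TIB_BYTES = 2**40   # binary tebibyte, the unit used for vSAN figures in the console


def tb_to_tib(tb: float) -> float:
    return tb * TB_BYTES / TIB_BYTES


def tib_to_tb(tib: float) -> float:
    return tib * TIB_BYTES / TB_BYTES


print(round(tb_to_tib(7.5), 2))   # one 7.5 TB i3en.metal disk: 6.82
print(round(tib_to_tb(1.0), 4))   # 1.0995
```

When comparing sizing-tool output, vendor disk sizes, and console values, convert everything to one unit first.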

i4i.metal

VMware and AWS announced the availability of a brand new instance type in September 2022 – i4i.metal. With this new hardware platform, customers can now benefit from the latest Intel CPU architecture (Ice Lake), increased memory size and speed, and twice as much storage capacity compared to i3. The host specification can be found in the following table:

Figure 1.8 – i4i.metal host specification

According to a recent performance study (https://blogs.vmware.com/performance/2022/11/sql-performance-vmware-cloud-on-aws-i3-i3en-i4i.html) using a Microsoft SQL Server workload, i4i.metal outperforms i3.metal by a factor of about three.

In the next section, we will evaluate how the VMware Cloud on AWS SDDC is mapped to AWS Availability Zones.

AWS Availability Zones

The following figure describes the relationship between a Region and Availability Zones in AWS:

Figure 1.9 – Architecture of a Region and Availability Zones

Each AWS Region is made up of multiple Availability Zones, each consisting of one or more physically isolated data centers. Availability Zones within the same Region are linked by high-speed, low-latency connections. They are situated in different floodplains and are equipped with uninterruptible power supplies and on-site backup generators.

Where possible, they are connected to different power grids or utility companies. Each Availability Zone has redundant connections to multiple ISPs. By default, an SDDC is deployed in a single Availability Zone.

The following figure describes the essential building blocks of VMware Cloud on AWS SDDC clusters, which are, in turn, built from compute hosts:

Figure 1.10 – Architecture of a VMware Cloud on AWS SDDC, with clusters and hosts

A cluster is built from a minimum of two hosts. Hosts can be added or removed at will from the VMware Cloud on AWS SDDC console.

Cluster types and sizes

VMware Cloud on AWS supports many different types of clusters. They can accommodate various use cases from Proof of Concept (PoC) to business-critical applications. There are three types of standard clusters (single availability zone) in an SDDC.

Single-host SDDC

A cluster refers to a compute pool of multiple hosts; a single-host SDDC is an exception to that rule, as it provides a fully functional SDDC with VMware vSAN, NSX, and vSphere on a single host instead of multiple hosts. This option allows customers to experiment with VMware Cloud on AWS for a low price.

Information

Single-host SDDCs are not patched or updated during their 60-day lifespan. They are deleted automatically after 60 days, along with all of their virtual machines and data. VMware doesn't back up the data, and a host failure results in data loss.

An SLA does not cover single-host SDDCs, and they should not be used for production purposes. Customers can choose to convert a single-host SDDC into a 2-host SDDC cluster at any time during their 60-day operational period. Once converted, the 2-host SDDC cluster will be ready for production workloads. All the data will be migrated to both hosts on the 2-host SDDC cluster.

VMware will manage the multi-host production cluster and keep it up to date with the latest software updates and security patches. This can be the path from PoC to production.

Two-host SDDC clusters

The 2-host SDDC cluster allows for a fully redundant data replica suitable for entry-level production use cases. This deployment is good for customers beginning their public cloud journey. It is also suitable for DR pilot light deployments that are part of VCDR services, which will be covered later in the book.

The 2-host SDDC cluster has no time restrictions and is SLA-eligible. VMware patches and upgrades all the hosts in the cluster with zero downtime. This is the same process used to patch or update a multi-host SDDC cluster running production workloads.

The two-host cluster uses an EC2 m5.2xlarge instance as a vSAN witness to store and update the witness metadata. The witness provides resiliency if either host suffers a hardware failure. When the cluster scales up to three hosts, the witness instance is terminated. Conversely, the witness is recreated when the cluster scales down from three hosts to two.

Note

At launch, a three-host cluster couldn't be scaled down to two hosts; this limitation was addressed in early 2022. Two limitations remain:

- The number of virtual machines that can be powered on concurrently is capped at 36.
- Support for large-VM vMotion through HCX is limited on i3 host types.

More information can be found at https://vmc.techzone.vmware.com/resource/entry-level-clusters-vmware-cloud-aws.

Three-host SDDC clusters

A three-host cluster can scale the number of hosts up and down in a cluster from 3 to 16, without the previously described limitations, and is recommended for larger production environments.

Multi-cluster SDDC

There can be up to 20 clusters in an SDDC. The management appliances always reside in cluster 1. All hosts within a cluster must be of the same type, but different clusters can use different host types.
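The cluster rules in this section can be collected into a small layout check. The following Python sketch is hypothetical; it is not a VMware API or tool. It encodes only the limits described here:

- At most 20 clusters per SDDC
- One host type per cluster
- 2 to 16 hosts per cluster
- An EC2 witness for two-host clusters
- A one-host cluster only as a standalone single-host SDDC (an assumption of this sketch)

Other constraints, such as restrictions tied to specific host types, are not modeled.

```python
from dataclasses import dataclass
from typing import List

MAX_CLUSTERS_PER_SDDC = 20   # from the text
MAX_HOSTS_PER_CLUSTER = 16   # from the text (clusters scale from 3 up to 16 hosts)


@dataclass
class Cluster:
    host_types: List[str]  # one entry per host, e.g. "i3en.metal"

    @property
    def size(self) -> int:
        return len(self.host_types)

    @property
    def needs_witness(self) -> bool:
        # Two-host clusters rely on an EC2-based vSAN witness; it is removed at 3+ hosts
        return self.size == 2


def validate_sddc(clusters: List[Cluster]) -> List[str]:
    """Return rule violations; an empty list means the layout passes this sketch's checks."""
    if not clusters:
        return ["an SDDC needs at least one cluster (cluster 1 hosts the management appliances)"]
    problems: List[str] = []
    if len(clusters) > MAX_CLUSTERS_PER_SDDC:
        problems.append(f"{len(clusters)} clusters exceeds the limit of {MAX_CLUSTERS_PER_SDDC}")
    for number, cluster in enumerate(clusters, start=1):
        types = sorted(set(cluster.host_types))
        if len(types) > 1:
            problems.append(f"cluster {number} mixes host types {types}")
        if cluster.size == 1:
            # Assumption: one host is only valid as a standalone single-host SDDC
            if len(clusters) > 1:
                problems.append(f"cluster {number} has one host inside a multi-cluster SDDC")
        elif not 2 <= cluster.size <= MAX_HOSTS_PER_CLUSTER:
            problems.append(f"cluster {number} has {cluster.size} hosts; "
                            f"expected 2 to {MAX_HOSTS_PER_CLUSTER}")
    return problems


layout = [Cluster(["i3en.metal"] * 3), Cluster(["i3.metal"] * 2)]
print(validate_sddc(layout))        # []
print(layout[1].needs_witness)      # True
```

The checks are returned as a list rather than raised as exceptions. This lets a planning script report every problem in a proposed layout at once.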

The following figure describes at a high level how, in a single customer organization, there can be multiple SDDCs, and within the SDDC, multiple clusters, with each SDDC having its own management appliances residing in cluster 1:

Figure 1.11 – Detailed view of multiple SDDCs and clusters in an organization

So far, we have covered single-AZ cluster types. Next, let's explore how customers can architect their SDDC to withstand a full Availability Zone (AZ) failure by leveraging stretched clusters.

Stretched clusters

When you design application resiliency across AZs with native AWS services, resiliency must be achieved through application-level availability or services such as Amazon RDS. Traditional vSphere-based applications need to be refactored to benefit from those resiliency capabilities.

In contrast, VMware Cloud on AWS offers AZ failure resilience via vSAN stretched clusters.

Cross-AZ availability is achieved by extending the vSphere cluster across two AWS AZs. The stretched vSAN cluster uses vSAN's synchronous writes across AZs. This gives a zero RPO and a near-zero RTO, depending on the vSphere HA restart time. This functionality is transparent to applications running inside a VM.

Figure 1.12 – High-level architecture of VMware Cloud on AWS standard cluster

Customers select the two AZs across which their SDDC will be stretched. VMware automation then picks the correct third AZ in which to deploy the witness node. The VMware Cloud on AWS service covers the cost of provisioning the witness node, which runs on an Amazon EC2 instance created from an Amazon Machine Image (AMI) converted from a VMware OVA. Once the initial parameters have been set, the stretched cluster and witness deployments are fully automated, just like a single-AZ SDDC installation.

Stretched clusters can span AZs within the same AWS Region and require a minimum of four hosts. vSphere HA is turned on, and the host isolation response is set to shut down and restart virtual machines. An AZ failure triggers a vSphere HA event, and vSAN fault domains are used to maintain vSAN data integrity.
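The following Python sketch illustrates the placement rules. Several details are assumptions made for illustration: the function name, the even split of hosts across the two AZs, and the rule for choosing the witness AZ. In the real service, VMware's automation selects the witness AZ. The AZ names follow the AWS naming convention but are examples only.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass(frozen=True)
class StretchedPlan:
    data_azs: List[str]
    witness_az: str
    total_hosts: int


def plan_stretched_cluster(region_azs: Sequence[str], data_azs: Sequence[str],
                           hosts_per_az: int) -> StretchedPlan:
    """Hypothetical check of the stretched-cluster placement rules described in the text."""
    if len(data_azs) != 2 or len(set(data_azs)) != 2:
        raise ValueError("a stretched cluster spans exactly two distinct AZs")
    unknown = [az for az in data_azs if az not in region_azs]
    if unknown:
        raise ValueError(f"AZs not in this Region: {unknown}")
    if hosts_per_az < 2:
        raise ValueError("a stretched cluster needs at least four hosts (2+2)")
    # The witness must live in a third AZ of the same Region
    candidates = [az for az in region_azs if az not in data_azs]
    if not candidates:
        raise ValueError("the Region needs a third AZ for the witness node")
    # Assumption: take the first remaining AZ; the real service makes this choice itself
    return StretchedPlan(list(data_azs), candidates[0], 2 * hosts_per_az)


plan = plan_stretched_cluster(["us-west-2a", "us-west-2b", "us-west-2c"],
                              ["us-west-2a", "us-west-2b"], hosts_per_az=3)
print(plan.witness_az, plan.total_hosts)  # us-west-2c 6
```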

A standard cluster has an availability commitment of 99.9% uptime. A stretched cluster with three or more hosts in each AZ (3+3 or larger) has an availability commitment of 99.99% uptime. A 2+2 stretched cluster has a commitment of 99.9%. Stretched clusters enable continuous operations if an AWS AZ fails.
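Converting these percentages into a downtime budget shows why the difference matters. The following Python sketch does plain arithmetic over a 30-day month and maps cluster layouts to the commitments described above. It does not reproduce how the SLA document measures availability or calculates service credits. Check the SLA itself for those rules.

```python
MINUTES_PER_30_DAY_MONTH = 30 * 24 * 60  # 43,200 minutes


def sla_commitment_pct(stretched: bool, hosts_per_az: int) -> float:
    """Availability commitment as described in the text (verify against the current SLA)."""
    if stretched and hosts_per_az >= 3:
        return 99.99
    return 99.9


def allowed_downtime_minutes(availability_pct: float,
                             period_minutes: float = MINUTES_PER_30_DAY_MONTH) -> float:
    """Downtime implied by an availability percentage over the given period."""
    return period_minutes * (100 - availability_pct) / 100


for label, stretched, per_az in [("standard", False, 3),
                                 ("stretched 2+2", True, 2),
                                 ("stretched 3+3", True, 3)]:
    pct = sla_commitment_pct(stretched, per_az)
    print(f"{label}: {pct}% -> {allowed_downtime_minutes(pct):.2f} min per 30 days")
```

A 99.9% commitment corresponds to about 43.2 minutes of downtime in a 30-day month. A 99.99% commitment corresponds to about 4.3 minutes.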

The VMware SLA for VMware Cloud on AWS is available here: https://www.vmware.com/content/dam/digitalmarketing/vmware/en/pdf/support/vmw-cloud-aws-service-level-agreement.pdf.

Elastic Distributed Resource Scheduler

The Elastic DRS system employs an algorithm designed to uphold an optimal count of provisioned hosts, ensuring high cluster utilization while meeting specified CPU, memory, and storage performance criteria. Elastic DRS continually assesses the current demand within your SDDC and utilizes its algorithm to propose either scaling in or scaling out of the cluster. When a scale-out recommendation is received, a decision engine acts by provisioning a new host into the cluster. Conversely, when a scale-in recommendation is generated, the least-utilized host is removed from the cluster.

It’s important to note that Elastic DRS is not compatible with single-host starter SDDCs. To implement Elastic DRS, a minimum of three hosts is required for a single-AZ SDDC and six hosts for a multi-AZ SDDC. Upon the initiation of a scale-out by the Elastic DRS algorithm, all users within the organization receive notifications both in the VMware Cloud Console and via email.
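The decision flow described above can be sketched as follows. This is a simplified illustration, not VMware's actual algorithm: the real Elastic DRS engine evaluates CPU, memory, and storage over time and applies policy-specific thresholds, whereas this sketch uses a single utilization figure per host and a hypothetical pair of thresholds. The `recommend` function, the host names, and the rule that scale-in never goes below the minimum host count are assumptions for illustration.

```python
from typing import Optional


def recommend(host_util: dict[str, float], multi_az: bool,
              scale_out_at: float = 0.80, scale_in_at: float = 0.40) -> Optional[str]:
    """Return a simplified, Elastic DRS-style recommendation for a cluster.

    host_util maps a (hypothetical) host name to its utilization (0.0-1.0).
    The thresholds are illustrative defaults, not VMware's actual values.
    """
    # Elastic DRS minimums from the text: 3 hosts single-AZ, 6 hosts multi-AZ
    minimum_hosts = 6 if multi_az else 3
    if len(host_util) < minimum_hosts:
        return None  # too small for Elastic DRS (e.g., a single-host starter SDDC)

    cluster_util = sum(host_util.values()) / len(host_util)
    if cluster_util >= scale_out_at:
        return "scale-out: add one host"
    # Assumption: never scale in below the minimum host count
    if cluster_util <= scale_in_at and len(host_util) > minimum_hosts:
        # Scale-in removes the least-utilized host
        least_used = min(host_util, key=host_util.get)
        return f"scale-in: remove {least_used}"
    return None


print(recommend({"esx-01": 0.85, "esx-02": 0.90, "esx-03": 0.82}, multi_az=False))
print(recommend({"esx-01": 0.20, "esx-02": 0.35, "esx-03": 0.30, "esx-04": 0.25},
                multi_az=False))
```

The first call returns a scale-out recommendation because average utilization is about 86%; the second returns a scale-in recommendation that removes `esx-01`, the least-utilized host.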

Figure 1.13 – Elastic DRS cluster threshold monitoring and adjustments

You can control the Elastic DRS configuration through Elastic DRS policies. The default Elastic DRS baseline policy is always active and is configured to monitor the utilization of the vSAN datastore exclusively. Once the utilization reaches 80%, Elastic DRS will initiate the host addition process. Customers can opt to use different Elastic DRS policies depending on the use cases and requirements. The following policies are available:

- Optimize for best performance (recommended when hosting mission-critical applications): When using this policy, Elastic DRS monitors CPU, memory, and storage resources. When generating recommendations, the policy uses aggressive thresholds for scale-out and moderate thresholds for scale-in.

- Optimize for the lowest cost (recommended when running general-purpose workloads where cost takes priority over performance): In contrast to the previous policy, this policy uses more aggressive scale-in thresholds and is configured to tolerate longer spikes of high utilization. Using this policy might lead to overcommitted compute resources and performance drops, but it helps keep the number of hosts in a cluster to a minimum.

- Optimize for rapid scaling (recommended for DR, VDI, or any workloads with predictable spikes): With this policy, you can define how many hosts are added to the cluster in parallel. The default is 2 hosts per batch, and you can select up to 16 hosts per batch. This policy addresses the demand of workloads with sharp spikes in resource utilization, for example, VDI desktops starting up on Monday morning. You can also use this policy with VMware Cloud Disaster Recovery (VCDR) to keep costs low while keeping the environment ready for a DR event.
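Because rapid scaling adds hosts in parallel batches, the batch size determines how many scaling rounds are needed to reach a target capacity. The back-of-the-envelope sketch below assumes each batch completes before the next one starts, which is a simplification; the function name and the example host counts are hypothetical, and only the default of 2 and the maximum of 16 hosts per batch come from the text.

```python
import math

MAX_BATCH = 16  # maximum hosts per batch for the rapid scaling policy (from the text)


def batches_needed(current_hosts: int, target_hosts: int, batch_size: int = 2) -> int:
    """Number of parallel add-host batches needed to reach target_hosts.

    Assumes batches run one after another; the real service may behave differently.
    """
    if not 1 <= batch_size <= MAX_BATCH:
        raise ValueError(f"batch_size must be between 1 and {MAX_BATCH}")
    missing = max(0, target_hosts - current_hosts)
    return math.ceil(missing / batch_size)


# Hypothetical example: a VDI cluster that must grow from 4 to 20 hosts
for size in (2, 8, 16):
    print(f"batch size {size}: {batches_needed(4, 20, size)} batch(es)")
```

Growing from 4 to 20 hosts takes 8 batches at the default size of 2, but a single batch at the maximum of 16, which is why larger batches suit predictable spikes such as Monday-morning VDI logons.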

The default resource (storage, CPU, and memory) thresholds vary by policy, as shown in the following table:

| Elastic DRS Policy | Storage Thresholds | CPU Thresholds | Memory Thresholds |
|---|---|---|---|
| Baseline Policy | Scale-Out Threshold: 80% (storage only) | Not monitored | Not monitored |
| Optimize for Best Performance | Scale-Out Threshold: 80% | Scale-Out Threshold: 90% | Scale-Out Threshold: 80% |
| Optimize for the Lowest Cost | Scale-Out Threshold: 80%, Scale-In Threshold: 40% | Scale-Out Threshold: 90%, Scale-In Threshold: 60% | Scale-Out Threshold: 80%, Scale-In Threshold: 60% |
| Rapid Scaling | Scale-Out Threshold: 80%, Scale-In Threshold: 40% | Scale-Out Threshold: 80%, Scale-In Threshold: 50% | Scale-Out Threshold: 80%, Scale-In Threshold: 50% |

Table 1.1 – Elastic DRS policy default thresholds
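Table 1.1 can be expressed as data, which makes it easy to see which resource would cross a threshold for a given utilization snapshot. The structure and the `breaches` function are hypothetical; the values are copied from the table, and `None` marks a cell where the table lists no threshold. This per-resource check is a simplification: the real service also considers how long utilization stays above or below a threshold.

```python
from typing import Optional

# (scale_out, scale_in) thresholds in percent, copied from Table 1.1.
# None means the table lists no threshold for that resource.
THRESHOLDS: dict[str, dict[str, tuple[Optional[int], Optional[int]]]] = {
    "baseline": {"storage": (80, None), "cpu": (None, None), "memory": (None, None)},
    "best_performance": {"storage": (80, None), "cpu": (90, None), "memory": (80, None)},
    "lowest_cost": {"storage": (80, 40), "cpu": (90, 60), "memory": (80, 60)},
    "rapid_scaling": {"storage": (80, 40), "cpu": (80, 50), "memory": (80, 50)},
}


def breaches(policy: str, usage: dict[str, float]) -> dict[str, str]:
    """Return the resources whose utilization (percent) crosses a policy threshold."""
    result = {}
    for resource, pct in usage.items():
        out_at, in_at = THRESHOLDS[policy][resource]
        if out_at is not None and pct >= out_at:
            result[resource] = "scale-out"
        elif in_at is not None and pct <= in_at:
            result[resource] = "scale-in"
    return result


snapshot = {"storage": 55.0, "cpu": 85.0, "memory": 45.0}
for policy in THRESHOLDS:
    print(policy, breaches(policy, snapshot))
```

For the same snapshot, only the rapid scaling policy flags CPU at 85% for scale-out (its CPU scale-out threshold is 80% rather than 90%), while the baseline policy, which monitors storage only, flags nothing.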

Note

VMware will automatically add hosts to the cluster if storage utilization exceeds 80%. This is because the baseline Elastic DRS policy is, by default, enabled on all SDDC clusters; it cannot be disabled. This is a preventative measure to ensure that vSAN has enough “slack” storage to support applications and workloads.

Automatic cluster remediation

One of the key benefits of running a VMware SDDC in the AWS public cloud is access to elastic resource capacity. This elasticity helps not only to address resource demands (see the preceding Elastic DRS section) but also to recover quickly from hardware failures.

The auto-remediation service monitors ESXi hosts for different types of hardware failures. Once a failure is detected, the auto-remediation service triggers the autoscaler mechanism to add a host to the cluster and, if possible, places the failed host into maintenance mode. Depending on the severity of the failure, vSphere DRS automatically migrates the virtual machines or vSphere HA restarts them. vSAN then resynchronizes the Virtual Machine Disk (VMDK) files. Once this process is complete, the auto-remediation service initiates the removal of the failed host.

The following diagram describes how the autoscaler service monitors for alerts in the SDDC and makes remediation actions accordingly:

Figure 1.14 – Autoscaler service high-level architecture

All these operations are transparent to customers and do not incur additional costs: the newly added host is not billed for the duration of the remediation.
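The remediation sequence described in this section can be summarized as an ordered workflow. The sketch below models it as a list of steps; it illustrates the order of operations from the text and is not VMware's autoscaler implementation. The `Severity` values and the mapping of a degraded host to maintenance mode plus DRS migration, and of a failed host to an HA restart, are assumptions, as are all names.

```python
from enum import Enum


class Severity(Enum):
    DEGRADED = "degraded"  # assumption: host still runs, VMs can be live-migrated
    FAILED = "failed"      # assumption: host is down, VMs must be restarted by HA


def remediation_plan(host: str, severity: Severity) -> list[str]:
    """Return the ordered remediation steps for a failed host (hypothetical model)."""
    steps = [
        f"detect hardware failure on {host}",
        "autoscaler adds a replacement host (not billed during remediation)",
    ]
    if severity is Severity.DEGRADED:
        steps.append(f"place {host} into maintenance mode")
        steps.append("vSphere DRS migrates VMs off the failing host")
    else:
        steps.append("vSphere HA restarts affected VMs on healthy hosts")
    steps.append("vSAN resynchronizes VMDK objects")
    steps.append(f"remove {host} from the cluster")
    return steps


for number, step in enumerate(remediation_plan("esx-07", Severity.DEGRADED), start=1):
    print(number, step)
```

In both branches the replacement host is added before the failed host is removed, so cluster capacity never drops below its original size during remediation.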

Understanding Cloud Service Platform and VMware Cloud Console

In this section, we will describe how customers operate the VMware Cloud on AWS environment.

Cloud Service Platform and VMware Cloud Console

A VMware Cloud Services account gives customers access to the VMware Cloud Console and other VMware Cloud services, such as VMware vRealize Log Insight Cloud, HCX, and Tanzu Mission Control (TMC). The VMware Cloud Services Console allows customers to manage their organization's billing, identity, access, and other aspects of VMware Cloud on AWS. VMware Cloud on AWS uses the organization as its basic management object, which contains cloud services and users. Customers can subscribe to different cloud services through service roles inside the organization.

The first user needs a valid MyVMware account to create a Cloud Services Platform account and a VMware Cloud organization. There are three types of organization roles:

- Fund Owner: Prior to onboarding, your VMware representative will ask you to identify a fund owner during the deal process. Once the deal is completed, a welcome email is sent to the fund owner. This email contains the link that you need to sign up for the Cloud Services Platform; the link can only be used once. The link redirects to the VMware Cloud Services Portal site, where you can log in to the VMware Cloud on AWS service with your MyVMware credentials. Your MyVMware account is used to create an organization, and you become its organization owner.

- Organization Owner: This user can add, remove, and modify users. This user can also access VMware Cloud services. An organization can have multiple owners.

- Organization Members: These users can access cloud services. However, they cannot add, remove, or modify users. The Cloud Services Console allows customers to assign service roles to specific organization members. The VMware Cloud on AWS service lets customers assign Administrator, Administrator (Delete Restricted), NSX Cloud Auditor, and NSX CloudAdmin roles.

As mentioned, the Cloud Services Platform (CSP) console handles identity and access management (IAM), with MyVMware accounts as the identity source. However, federation with an external identity provider (IdP) such as Okta or Azure AD is supported and is configured from the CSP console. CSP authentication and federation are separate from the VMware Cloud on AWS SDDC authentication path, which uses a different mechanism. Support tickets can be managed either from the CSP console or through the online chat inside the VMware Cloud service.

VMware Cloud console

The VMware Cloud console is a service within an organization, accessed from the CSP described in the preceding section. The VMware Cloud console manages SDDCs, each consisting of one or more clusters of bare-metal hosts running VMware ESXi, together with vSphere, vCenter Server (vCenter), VMware NSX, and VMware vSAN.

The VMware Cloud console provides access to the standalone NSX Manager UI through a reverse proxy. To access the vSphere Web Client, which is used to manage the VMware Cloud on AWS SDDC, you use the default CloudAdmin user. The CloudAdmin user is initially provisioned with a randomly generated password, which can be viewed in the SDDC view of the VMware Cloud console.

Note

The VMware Cloud console does not reflect the current CloudAdmin password if the password has been changed using the Web Client or API.

The VMware Cloud console can manage multiple SDDCs. Each SDDC is created within one AWS Region, and different SDDCs can reside in different Regions. By default, an organization can create up to 2 SDDCs; this is a soft limit that can be raised to 20.

The SDDC console offers quick summary information about each SDDC and its clusters. It includes the Region, AZ, host count, and cluster resources available (CPU, RAM, and storage). Once an SDDC has been created, the customer can control the SDDC’s capacity by adding/removing hosts and clusters and modifying the Elastic DRS policy. The VMware Cloud console also provides access to the SDDC groups.

SDDC groups are where the VMware Transit Connect service is managed. VMware Transit Connect is based on the AWS Transit Gateway (TGW) service: it is a VMware-managed TGW (vTGW) that can connect multiple SDDCs and VPCs through high-bandwidth VPC and TGW attachments. The vTGW can also connect to a native AWS TGW and VPCs to form hybrid architectures; more on this later in the NSX section.

The SDDC Console provides additional operational functionality:

- Activation of complementary services such as HCX, NSX Advanced Firewall, VMware Site Recovery (VSR), and Aria Automation

- Activation of Microsoft Services Provider License Agreement (SPLA) licenses

- Opening support tickets and live chat with technical support

- Support-related environment information such as SDDC and organization ID

- Network connectivity troubleshooting

- VMware maintenance notifications

- Subscription management for hosts, site recovery, and NSX advanced firewall

- Activity logs for SDDC-related activities, alerts, audit trails, and notifications

- API Explorer for the different services and access to SDKs

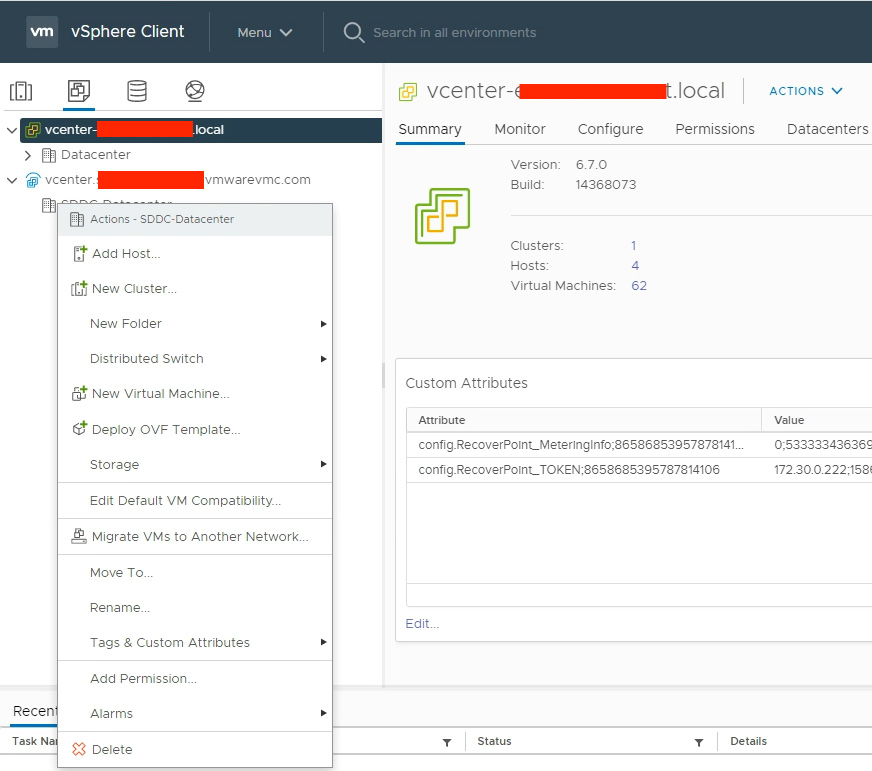

VMware vCenter Server

vCenter Server manages the VMware Cloud on AWS SDDC. The vCenter running in VMware Cloud on AWS is the same product that customers use in their on-premises environment to manage VMware vSphere. The Cloud Gateway Appliance (CGA) links the cloud vCenter to the on-premises environment through Hybrid Linked Mode, enabling hybrid operations from a single pane of glass.

The following screenshot shows how two vCenter Servers are managed with a single pane of glass through the cloud gateway from the vSphere Web Client:

Figure 1.15 – Cloud gateway managing two vCenters in a single management pane of glass

You use vSphere Web Client to access VMware Cloud on AWS vCenter Server and operate the VMware Cloud on AWS SDDC. It’s vital to understand the access model used within the VMware Cloud on AWS SDDC.

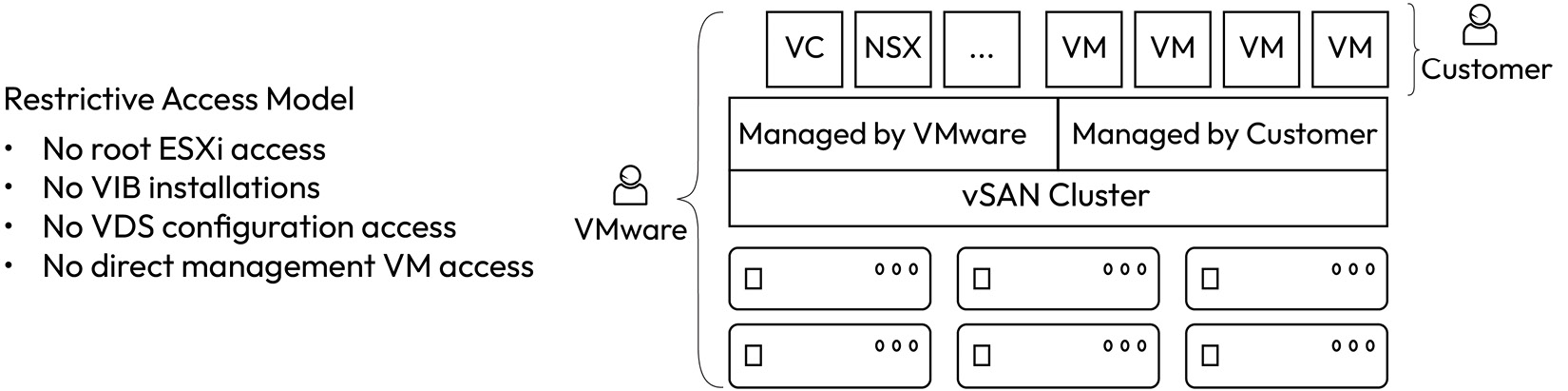

Restrictive access model

The VMware Cloud on AWS SDDC permissions model allows VMware's Site Reliability Engineering (SRE) team and customers to manage the vCenter deployment jointly, according to the shared responsibility model. The permissions model has the following high-level goals:

- Enable access to VMware operators and VMware Cloud on AWS customers

- Permit customers to manage their workloads and users/groups using Active Directory (AD) / Lightweight Directory Access Protocol (LDAP), tags, permissions (on their inventory items), roles (from subsets of their permissions), and so on

- Protect administrator-managed objects (management appliances, users/groups, global policies, roles, permissions, hosts, storage, and so on)

- Enable SRE teams to manage vSphere and underlying AWS infrastructure life cycle management, including deployment, configuration, upgrade, patching, monitoring, remediating, and applying emergency updates for security vulnerabilities

Because of this access model, certain third-party solutions that require root access to ESXi or the installation of vSphere Installation Bundles (VIBs) are not compatible with VMware Cloud on AWS.

Note

To see which third-party solutions are certified with VMware Cloud on AWS, please refer to the VMware compatibility guide at https://www.vmware.com/resources/compatibility/search.php?deviceCategory=vsanps or the third-party vendor’s certification website.

If a host goes down or is found to be inoperable, a new host is added to the cluster. All data from the affected host is then rebuilt on the new host. After being fully replaced by the new host, the faulty host will be removed from the cluster using the DRS capability and EC2 API automation.

VMware Cloud automation configures all VMkernel interfaces and logical networks when a new host is connected to the cluster; the customer doesn't have access to the host or cluster configuration. After the host is connected, the vSAN datastore is automatically expanded, allowing the cluster to take advantage of the newly added storage capacity. This happens entirely automatically, without any intervention from the customer or VMware SRE.

CloudAdmins can't modify the default DRS migration threshold (level 3). This avoids unnecessary vMotion operations.

Note

A new mechanism known as compute policies is used in VMware Cloud on AWS to create affinity or anti-affinity rules.

The following figure graphically summarizes the restricted access and shared responsibility model between customers and VMware:

Figure 1.16 – Restricted access and shared responsibility model

Information

More information can be found at https://docs.vmware.com/en/VMware-Cloud-on-AWS/solutions/VMware-Cloud-on-AWS.39646badb412ba21bd6770ef62ae00a2/GUID-31CC90E5EB22075B2313FA674D567F2A.html.

Let’s look further into the default user role and group in VMware Cloud on AWS.

CloudAdmin user

Customers access vCenter using the cloudadmin@vmc.local account.

CloudAdmin role and group

The SDDC CloudAdmin role determines the highest level of permissions of the customer.

The CloudAdmin group is granted the CloudAdmin role through global permissions and on the data center object in vCenter. It has read-only permissions on vCenter's management resources (storage, networks, resource pools, virtual machines, and the Discovered Virtual Machines folder). Customer users from AD/LDAP are added to the CloudAdmin group (or to AD/LDAP groups assigned a custom role with a subset of permissions) within vCenter.

vSphere architecture

Now, let’s describe in detail the vSphere configuration and vCenter inventory.

Data center

An SDDC has a single virtual data center named SDDC-Datacenter. This data center contains the compute resources organized in vSphere clusters.

vSphere HA is enabled on VMware Cloud on AWS by default, as is DRS, which balances compute workloads across available resources. These features can only be configured by VMware. In the event of a host failure, vSphere HA restarts application workloads on the remaining healthy hosts in the cluster.

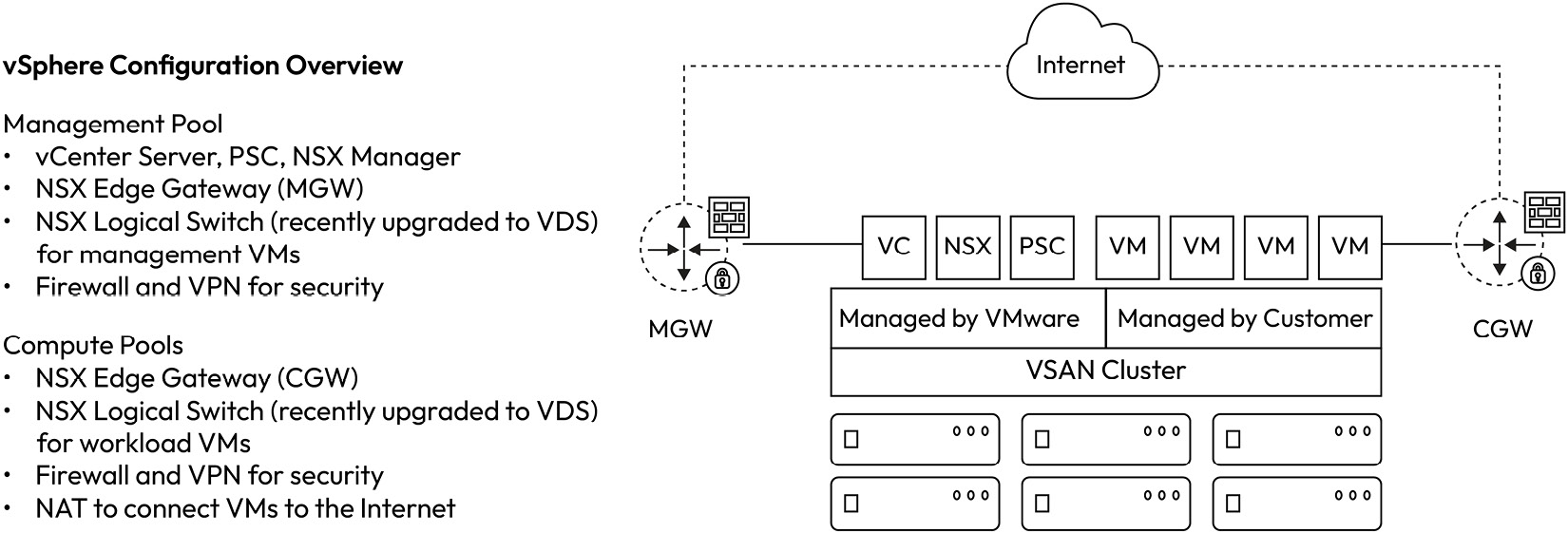

The following figure describes at a high level the vSphere configuration inside a vCenter virtual data center managed by VMware and customer administrators:

Figure 1.17 – Overview of vSphere configuration inside an SDDC data center

All hosts in the SDDC belong to a cluster. Initially, an SDDC has a single cluster called Cluster-1, which runs both management appliances and end user workloads. The SDDC can have additional clusters as required; clusters are named using the convention Cluster-x, where x is the cluster number. By default, a cluster is limited to a single AWS AZ. Stretched clustering allows the hosts in a cluster to be distributed across two AZs in an AWS Region.

Note

Customers cannot add a stretched cluster to an SDDC that is deployed in a single AZ. This needs to be a decision that is made at deployment time – that is, stretched or standard cluster.

Resource pools

An SDDC’s resource pools primarily protect management appliances. They are a way to preserve compute resources for management appliances and they serve as the target object when permissions are granted to management appliances.

The SDDC creates two resource pools from the base cluster:

- Mgmt-ResourcePool is a resource pool that contains management appliances

- Compute-ResourcePool is a resource pool that accommodates end user workloads

Datastores

The SDDC's base cluster contains two datastores:

- vsanDatastore is only used to store SDDC management appliances

- WorkloadDatastore can be used to store end user workloads

These datastores represent the same underlying vSAN pool but are presented as separate entities to enforce permissions within SDDC. Specifically, vsanDatastore can’t be modified. Management appliances can only be found in the first cluster.

Each additional cluster in the SDDC appears as an additional datastore for end user workloads. These datastores are named WorkloadDatastore x, where x refers to the cluster number.

Demystifying vSAN and host storage architecture

Let us explore the architecture of the storage subsystem within the VMware Cloud on AWS SDDC.

VMware vSAN overview

vSAN stands for virtual storage area network, an object-based storage solution that leverages locally attached physical drives. VMware has offered vSAN to the market for some time; it is now a mature storage solution, well represented in Gartner's Magic Quadrant and powering millions of customer workloads.

vSAN combines locally attached disks into a single, cluster-wide datastore that supports simultaneous access from multiple ESXi hosts. All vSAN traffic traverses the physical network through a dedicated vSAN VMkernel interface. A datastore shared across all hosts in a vSphere cluster enables key vSphere features, including live vMotion between hosts, vSphere HA (to restart virtual machines from a failed host on the surviving hosts in the cluster), and DRS.

The vSAN distributed architecture with local storage fits the cloud model well, eliminating dependencies on external storage. Because it scales easily with the addition of a new host and provides enterprise-level storage functionality (deduplication, compression, data-at-rest encryption, and so on), vSAN forms the foundation of VMware Cloud on AWS.

vSAN on VMware Cloud on AWS high-level architecture

While VMware Cloud on AWS uses VMware vSAN in a way very similar to on-premises deployments, there are still a number of notable architectural differences.

All instance types in VMware Cloud on AWS use NVMe SSDs with a 4,096-byte native physical sector size.

Currently, VMware Cloud on AWS uses the vSAN Original Storage Architecture (OSA), which distinguishes between the caching and capacity tiers. Each host type has its own disk group configuration. With the release of vSphere 8, VMware introduced a new vSAN architecture model, the vSAN Express Storage Architecture (ESA). With the recent 1.24 SDDC release, vSAN ESA has been made available to selected customers as a preview (https://vmc.techzone.vmware.com/resource/vsan-esa-vmware-cloud-aws-technical-deep-dive).

To facilitate logical separation between customer-managed and VMware-managed virtual machines, a single vSAN datastore is represented as two logical datastores. Only workload datastores are available for customer workloads. Both logical datastores share the same physical capacity and throughput.

Storage encryption

In VMware Cloud on AWS, vSAN encrypts all user data at rest. Encryption is activated by default on every cluster deployed in your SDDC and cannot be disabled. Additionally, with newer host types (i3en and i4i), vSAN traffic between hosts is also encrypted (so-called data-in-transit encryption).

Figure 1.18 – vSAN cluster configuration and shared responsibility model

Storage policies

vSAN has been designed from the beginning to support major enterprise storage features. However, there is an important architectural difference between external storage and vSAN. With external storage, you enable storage features at the physical Logical Unit Number (LUN) level, and each LUN is connected as a separate datastore with its own performance and availability characteristics. With vSAN, all of these features are activated at the virtual machine level or even at the level of an individual VMDK. To control performance and data availability for your workloads, you assign different storage policies. vSAN storage policy management and policy monitoring are performed in vCenter using the vSphere Web Client. Customers control their configurations through virtual machine (VM) storage policies, also known as storage policy-based management (SPBM).

Each virtual machine or disk has a policy assigned to it. Among other settings, the policy includes RAID and fault tolerance parameters, as well as additional configurations such as the number of disk stripes, IOPS limits, and encryption.

In the following diagram, you can see a graphical summary of the SPBM values:

Figure 1.19 – SPBM configuration graphical summary

Let’s go deeper and review the configuration available with vSAN storage policies.

Failures to Tolerate

Failures to Tolerate (FTT) defines the number of disk device or host failures a virtual machine can tolerate within a cluster. You can choose between 0 (no protection) and 3 (a cluster can tolerate a simultaneous failure of up to three hosts without affecting virtual machine workloads).

Note

VMware Cloud on AWS SLAs dictate a minimum FTT configuration to be eligible for SLA credit.

FTT is configured together with the appropriate Redundant Array of Independent Disks (RAID) policy according to the number of hosts in the cluster. Customers can choose a RAID policy optimized for either performance (mirroring) or capacity (erasure coding).

The FTT policy overhead per storage object depends on the selected FTT and RAID policy. For example, an FTT1 and RAID-1 policy creates two copies of a storage object. FTT2 and RAID-1 creates three copies of the storage object, and FTT3 and RAID-1 creates four copies of the storage object.
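The raw capacity an object consumes follows directly from the protection scheme. The sketch below assumes standard vSAN OSA layouts, which this chapter does not spell out: RAID-1 stores FTT+1 full copies, RAID-5 uses 3 data + 1 parity components (about 1.33x), and RAID-6 uses 4 data + 2 parity components (1.5x). It ignores witness components, thin provisioning, and slack space, and the names are hypothetical.

```python
from fractions import Fraction

# Capacity multiplier per (RAID level, FTT), assuming vSAN OSA layouts:
# RAID-1 stores FTT+1 full copies; RAID-5 is 3 data + 1 parity; RAID-6 is 4 data + 2 parity.
OVERHEAD = {
    ("RAID-0", 0): Fraction(1),
    ("RAID-1", 1): Fraction(2),
    ("RAID-1", 2): Fraction(3),
    ("RAID-1", 3): Fraction(4),
    ("RAID-5", 1): Fraction(4, 3),
    ("RAID-6", 2): Fraction(6, 4),
}


def raw_capacity_gb(vmdk_gb: int, raid: str, ftt: int) -> float:
    """Raw vSAN capacity consumed by a fully written object of vmdk_gb."""
    try:
        factor = OVERHEAD[(raid, ftt)]
    except KeyError:
        raise ValueError(f"unsupported combination: {raid} FTT{ftt}") from None
    return float(vmdk_gb * factor)


for raid, ftt in OVERHEAD:
    print(f"{raid} FTT{ftt}: 100 GB VMDK -> {raw_capacity_gb(100, raid, ftt):.1f} GB raw")
```

A 100 GB VMDK therefore consumes 200 GB with FTT1 RAID-1 but about 133 GB with FTT1 RAID-5, and 300 GB with FTT2 RAID-1 versus 150 GB with FTT2 RAID-6, which is the capacity side of the trade-offs listed below.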

The following list describes the trade-offs between different RAID options:

- RAID-1 uses mirroring and requires more disk space overhead, but gives better write I/O performance. With FTT1, it can survive a single host failure and is available with two or more hosts. RAID-1 can also be used with FTT2 (five or more hosts) as an alternative to RAID-6 for improved write I/O performance, or with FTT3 (seven or more hosts).

- RAID-5 uses erasure coding and has less disk space overhead, at the cost of lower write performance. It can survive a single host failure and is available with four or more hosts.

- RAID-6 is similar to RAID-5; however, it can survive two host failures (FTT2). This RAID type is available starting with six hosts.

- RAID-0 (No Data Redundancy) uses no extra disk overhead and provides potentially the best performance (eliminating overhead to create redundant copies of the data), but cannot survive any failure.

Note

The RAID-0 policy is configurable but not eligible for SLA. If the host or disk fails, this can result in data loss.

The following table summarizes the RAID configuration, FTT policy options, and the minimal host count:

| RAID Configuration | FTT Policy | Hosts Required |
|---|---|---|
| RAID-0 (No Data Redundancy) | 0 | 1+ |
| RAID-1 (Mirroring) | 1 | 2+ |
| RAID-5 (Erasure Coding) | 1 | 4+ |
| RAID-1 (Mirroring) | 2 | 5+ |
| RAID-6 (Erasure Coding) | 2 | 6+ |
| RAID-1 (Mirroring) | 3 | 7+ |

Table 1.2 – Table summary of the RAID, FTT, and minimal host count options
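Table 1.2 can be turned into a simple lookup that lists the protection options a cluster of a given size supports. This minimal sketch encodes only the rows of the table; the `available_policies` function is hypothetical, and SLA eligibility is a separate question covered in the next section.

```python
# (RAID configuration, FTT, minimum hosts), copied from Table 1.2
OPTIONS = [
    ("RAID-0 (No Data Redundancy)", 0, 1),
    ("RAID-1 (Mirroring)", 1, 2),
    ("RAID-5 (Erasure Coding)", 1, 4),
    ("RAID-1 (Mirroring)", 2, 5),
    ("RAID-6 (Erasure Coding)", 2, 6),
    ("RAID-1 (Mirroring)", 3, 7),
]


def available_policies(host_count: int) -> list[tuple[str, int]]:
    """Return the (RAID configuration, FTT) pairs a cluster of host_count hosts supports."""
    return [(raid, ftt) for raid, ftt, min_hosts in OPTIONS if host_count >= min_hosts]


for hosts in (2, 4, 6):
    print(hosts, available_policies(hosts))
```

A four-host cluster can use RAID-0, RAID-1 FTT1, or RAID-5 FTT1, while FTT2 with RAID-6 only becomes available at six hosts.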

Managed Storage Policy

VMware Cloud on AWS is provided as a service to customers. As a part of the service agreement, VMware commits to SLAs for customers using the service, including a VM uptime guarantee. Depending on the SDDC configuration, the SDDC is eligible for either a 99.9% (standard cluster) or 99.99% (stretched cluster with 6+ hosts) uptime availability commitment. To support SLAs of this strength, VMware dictates a certain level of FTT configuration for customer workloads.

The following figure describes VM storage policies required for SLA-eligible workloads:

Figure 1.20 – Table of storage policy configuration for SLA eligibility

Information

A single-host SDDC is not covered by an SLA and should not be used for production workloads.

VMware Cloud on AWS introduced a new concept called Managed Storage Policy Profiles to help customers adhere to SLAs. Each vSphere cluster has a default storage policy managed by VMware and configured to meet SLA requirements (e.g., FTT1, RAID-1 in a cluster with 3 ESXi hosts). As the cluster size changes, the Managed Storage Policy Profile is updated with the appropriate RAID and FTT configuration.

Customers can create their own policies based on their needs, and these may differ from the managed policies. In that case, it is the customer's responsibility to adjust the policy parameters to meet the SLA compliance requirements. Customers can configure values that are not aligned with the SLA parameters, for instance, RAID-1 FTT1 in a six-host cluster. In that case, customers will receive notifications that they have non-SLA-compliant storage objects in a cluster in their SDDC, and such a cluster is not entitled to receive any SLA credits. As in the following example, customers using non-compliant policies will receive periodic email notifications:

Figure 1.21 – Screenshot of a non-SLA-compliant storage object email notification

The Managed Storage Policy Profile offers customers an easy way to deploy workloads with SLA-compliant storage policies without having to track the current cluster configuration.
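The kind of check behind those notifications can be sketched as follows for a standard (non-stretched) cluster. The minimum FTT values are an assumption consistent with the examples in this section (FTT1 is compliant in a three-host cluster, FTT1 is non-compliant in a six-host cluster): FTT1 up to five hosts and FTT2 from six hosts. Consult the VMware SLA and Figure 1.20 for the authoritative requirements, including stretched clusters, which this sketch does not cover. Both function names are hypothetical.

```python
def required_ftt(host_count: int) -> int:
    """Hypothetical minimum FTT for SLA eligibility in a standard cluster.

    Assumption: FTT1 up to five hosts, FTT2 from six hosts; check the VMware SLA
    for the authoritative values.
    """
    return 2 if host_count >= 6 else 1


def is_sla_compliant(host_count: int, ftt: int) -> bool:
    """Treat a policy as compliant if it tolerates at least the required failures."""
    if host_count < 2:
        return False  # single-host SDDCs are not covered by an SLA
    return ftt >= required_ftt(host_count)


print(is_sla_compliant(3, 1))  # FTT1 in a three-host cluster
print(is_sla_compliant(6, 1))  # FTT1 in a six-host cluster, as in the example above
```

Under these assumptions, the first call returns `True` and the second returns `False`, matching the six-host example that triggers a non-compliance notification.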

Note

Do not modify the default storage policy in your SDDC. Your changes will be overwritten by the next invocation of SDDC monitoring, and the repeated policy changes might cause performance penalties for your workloads because vSAN has to reapply them within a short period of time. Instead, create a custom policy and assign it only to the subset of virtual machines that need it.

Summary

In this chapter, we have learned about the AWS cloud, hybrid cloud challenges, and how VMware Cloud on AWS solves these challenges. We have described the different use cases that VMware Cloud on AWS addresses and the architecture of VMware Cloud's main components, including CSP, VMware Cloud console, vCenter, vSAN, and NSX. We also looked at the different cluster types, underlying hosts, and high-level networking architecture.

NSX and networking are essential and extensive topics; therefore, we have decided to dedicate the following chapter to the unique NSX architecture in VMware Cloud on AWS. It covers in detail the security and firewall architecture, security capabilities such as micro-segmentation and IDS/IPS, networking capabilities such as routing and VPN, and native AWS integrations.

In the next chapter, you will discover the best practices on how to implement networking and security in VMware Cloud on AWS.