In this chapter, we will cover the following recipes:

Deserializing and running a classifier

Getting confidence estimates from a classifier

Getting data from the Twitter API

Applying a classifier to a .csv file

Evaluation of classifiers – the confusion matrix

Training your own language model classifier

How to train and evaluate with cross validation

Viewing error categories – false positives

Understanding precision and recall

How to serialize a LingPipe object – classifier example

Eliminate near duplicates with the Jaccard distance

How to classify sentiment – simple version

This chapter introduces the LingPipe toolkit in the context of its competition and then dives straight into text classifiers. Text classifiers assign a category to text, for example, they assign the language to a sentence or tell us if a tweet is positive, negative, or neutral in sentiment. This chapter covers how to use, evaluate, and create text classifiers based on language models. These are the simplest machine learning-based classifiers in the LingPipe API. What makes them simple is that they operate over characters only—later, classifiers will have notions of words/tokens and even more. However, don't be fooled, character-language models are ideal for language identification, and they were the basis of some of the world's earliest commercial sentiment systems.

This chapter also covers crucial evaluation infrastructure, because almost everything we do turns out to be a classifier at some level of interpretation. So, do not skimp on the power of cross validation, definitions of precision/recall, and F-measure.

The best part is that you will learn how to programmatically access Twitter data to train up and evaluate your own classifiers. There is a boring bit concerning the mechanics of reading and writing LingPipe objects from/to disk, but other than that, this is a fun chapter. The goal of this chapter is to get you up and running quickly with the basic care and feeding of machine-learning techniques in the domain of natural language processing (NLP).

LingPipe is a Java toolkit for NLP-oriented applications. This book will show you how to solve common NLP problems with LingPipe in a problem/solution format that allows developers to quickly deploy solutions to common tasks.

LingPipe 1.0 was released in 2003 as a dual-licensed open source NLP Java library. At the time of writing this book, we are coming up on 2000 hits on Google Scholar and have thousands of commercial installs, ranging from universities to government agencies to Fortune 500 companies.

Current licensing is either AGPL (http://www.gnu.org/licenses/agpl-3.0.html) or our commercial license that offers more traditional features such as indemnification and non-sharing of code as well as support.

Nearly all NLP projects have awful acronyms so we will lay bare our own. LingPipe is the short form for linguistic pipeline, which was the name of the cvs directory in which Bob Carpenter put the initial code.

LingPipe has lots of competition in the NLP space. The following are some of the more popular ones with a focus on Java:

NLTK: This is the dominant Python library for NLP processing.

OpenNLP: This is an Apache project built by a bunch of smart folks.

JavaNLP: This is a rebranding of Stanford NLP tools, again built by a bunch of smart folks.

ClearTK: This is a University of Colorado Boulder toolkit that wraps lots of popular machine learning frameworks.

DkPro: Technische Universität Darmstadt from Germany produced this UIMA-based project that wraps many common components in a useful manner. UIMA is a common framework for NLP.

GATE: GATE is really more of a framework than competition. In fact, LingPipe components are part of their standard distribution. It has a nice graphical "hook the components up" capability.

Learning Based Java (LBJ): LBJ is a special-purpose programming language based on Java, and it is geared toward machine learning and NLP. It was developed at the Cognitive Computation Group of the University of Illinois at Urbana Champaign.

Mallet: This name is the short form of MAchine Learning for LanguagE Toolkit. Apparently, reasonable acronym generation is short in supply these days. Smart folks built this too.

Here are some pure machine learning frameworks that have broader appeal but are not necessarily tailored for NLP tasks:

Vowpal Wabbit: This is very focused on scalability around Logistic Regression, Latent Dirichlet Allocation, and so on. Smart folks drive this.

Factorie: It is from UMass Amherst and is an alternative offering to Mallet. Initially, it focused primarily on graphical models, but now it also supports NLP tasks.

Support Vector Machine (SVM): SVM light and libsvm are very popular SVM implementations. There is no SVM implementation in LingPipe, because logistic regression does this as well.

It is very reasonable to ask why you should choose LingPipe given the outstanding free competition mentioned earlier. There are a few reasons:

Documentation: The class-level documentation in LingPipe is very thorough. If the work is based on academic work, that work is cited. Algorithms are laid out, the underlying math is explained, and explanations are precise. What the documentation lacks is a "how to get things done" perspective; however, this is covered in this book.

Enterprise/server optimized: LingPipe is designed from the ground up for server applications, not for command-line usage (though we will be using the command line extensively throughout the book).

Coded in the Java dialect: LingPipe is a native Java API that is designed according to standard Java class design principles (Joshua Bloch's Effective Java, by Addison-Wesley), such as consistency checks on construction, immutability, type safety, backward-compatible serializability, and thread safety.

Error handling: Considerable attention is paid to error handling through exceptions and configurable message streams for long-running processes.

Support: LingPipe has paid employees whose job is to answer your questions and make sure that LingPipe is doing its job. The rare bug gets fixed in under 24 hours typically. They respond to questions very quickly and are very willing to help people.

Consulting: You can hire experts in LingPipe to build systems for you. Generally, they teach developers how to build NLP systems as a byproduct.

Consistency: The LingPipe API was designed by one person, Bob Carpenter, with an obsession for consistency. While it is not perfect, you will find a regularity and eye to design that can be missing in academic efforts. Graduate students come and go, and the resulting contributions to university toolkits can be quite varied.

Open source: There are many commercial providers, but their software is a black box. The open source nature of LingPipe provides transparency and confidence that the code is doing what we ask it to do. When the documentation fails, it is a huge relief to have access to code to understand it better.

You will need to download the source code for this cookbook, with supporting models and data from http://alias-i.com/book.html. Untar and uncompress it using the following command:

tar -xvzf lingpipeCookbook.tgz

Tip

Downloading the example code

You can download the example code files for all Packt books you have purchased from your account at http://www.packtpub.com. If you purchased this book elsewhere, you can visit http://www.packtpub.com/support and register to have the files e-mailed directly to you.

Alternatively, your operating system might provide other ways of extracting the archive. All recipes assume that you are running the commands in the resulting cookbook directory.

Downloading LingPipe is not strictly necessary, but you will likely want to be able to look at the source and have a local copy of the Javadoc.

The download and installation instructions for LingPipe can be found at http://alias-i.com/lingpipe/web/install.html.

The examples from this chapter use command-line invocation, but it is assumed that the reader has sufficient development skills to map the examples to their preferred IDE/ant or other environment.

This recipe does two things: introduces a very simple and effective language ID classifier and demonstrates how to deserialize a LingPipe class. If you find yourself here from a later chapter, trying to understand deserialization, I encourage you to run the example program anyway. It will take 5 minutes, and you might learn something useful.

Our language ID classifier is based on character language models. Each language model gives you the probability of the text, given that it is generated in that language. The model that is most familiar with the text is the first best fit. This one has already been built, but later in the chapter, you will learn to make your own.
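To make the idea of "the model that is most familiar with the text" concrete, here is a toy sketch in plain Java. It is not LingPipe's implementation: the class names, the bigram order, the add-one smoothing, and the tiny training strings are all assumptions made for illustration. Each language gets its own character model, and the language whose model assigns the highest log probability to the input wins.

```java
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.Map;

/** Toy character-bigram language identifier; an illustration, NOT LingPipe code. */
class ToyCharLmLanguageId {

    /** Bigram and context counts for a single language. */
    static final class CharBigramModel {
        // Assumed size of the character inventory, used only for add-one smoothing.
        private static final int ALPHABET_SIZE = 65536;
        private final Map<String, Integer> bigramCounts = new HashMap<>();
        private final Map<Character, Integer> contextCounts = new HashMap<>();

        /** Counts every adjacent character pair in the training text. */
        void train(String text) {
            for (int i = 1; i < text.length(); i++) {
                bigramCounts.merge(text.substring(i - 1, i + 1), 1, Integer::sum);
                contextCounts.merge(text.charAt(i - 1), 1, Integer::sum);
            }
        }

        /** log2 P(text | language); smoothing keeps unseen pairs above zero. */
        double log2Prob(String text) {
            double total = 0.0;
            for (int i = 1; i < text.length(); i++) {
                int pair = bigramCounts.getOrDefault(text.substring(i - 1, i + 1), 0);
                int context = contextCounts.getOrDefault(text.charAt(i - 1), 0);
                double p = (pair + 1.0) / (context + (double) ALPHABET_SIZE);
                total += Math.log(p) / Math.log(2.0);
            }
            return total;
        }
    }

    /** Returns the language whose model gives the text the highest probability. */
    static String bestLanguage(Map<String, CharBigramModel> models, String text) {
        String best = null;
        double bestScore = Double.NEGATIVE_INFINITY;
        for (Map.Entry<String, CharBigramModel> entry : models.entrySet()) {
            double score = entry.getValue().log2Prob(text);
            if (score > bestScore) {
                bestScore = score;
                best = entry.getKey();
            }
        }
        return best;
    }

    /** Builds two models from made-up, very small training strings. */
    static Map<String, CharBigramModel> trainToyModels() {
        CharBigramModel english = new CharBigramModel();
        english.train("the rain falls on the plain and the train is on the main line");
        CharBigramModel spanish = new CharBigramModel();
        spanish.train("la lluvia cae en el llano y el tren llega a la llanura");
        Map<String, CharBigramModel> models = new LinkedHashMap<>();
        models.put("english", english);
        models.put("spanish", spanish);
        return models;
    }

    public static void main(String[] args) {
        Map<String, CharBigramModel> models = trainToyModels();
        System.out.println(bestLanguage(models, "the rain in the plain"));
        System.out.println(bestLanguage(models, "la lluvia en el llano"));
    }
}
```

Like the real classifier, this toy will always guess one of the languages it knows, even for text in a language it has never seen.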

Perform the following steps to deserialize and run a classifier:

Go to the cookbook directory for the book and run the command for OSX, Unix, and Linux:

java -cp lingpipe-cookbook.1.0.jar:lib/lingpipe-4.1.0.jar com.lingpipe.cookbook.chapter1.RunClassifierFromDisk

For Windows invocation (quote the classpath and use ; instead of :):

java -cp "lingpipe-cookbook.1.0.jar;lib\lingpipe-4.1.0.jar" com.lingpipe.cookbook.chapter1.RunClassifierFromDisk

We will use the Unix style command line in this book.

The program reports the model being loaded (a default, in this case) and prompts for a sentence to classify:

Loading: models/3LangId.LMClassifier
Type a string to be classified. Empty string to quit.
The rain in Spain falls mainly on the plain.
english
Type a string to be classified. Empty string to quit.
la lluvia en España cae principalmente en el llano.
spanish
Type a string to be classified. Empty string to quit.
スペインの雨は主に平野に落ちる。
japanese

The classifier is trained on English, Spanish, and Japanese. We have entered an example of each—to get some Japanese, go to http://ja.wikipedia.org/wiki/. These are the only languages it knows about, but it will guess on any text. So, let's try some Arabic:

Type a string to be classified. Empty string to quit.
المطر في اسبانيا يقع أساسا على سهل.
japanese

It thinks it is Japanese because this language has more characters than English or Spanish. This in turn leads that model to expect more unknown characters. All the Arabic characters are unknown.

If you are working with a Windows terminal, you might encounter difficulty entering UTF-8 characters.

The code in the jar is cookbook/src/com/lingpipe/cookbook/chapter1/RunClassifierFromDisk.java. What is happening is that a pre-built model for language identification is deserialized and made available. It has been trained on English, Japanese, and Spanish. The training data came from Wikipedia pages for each language. You can see the data in data/3LangId.csv. The focus of this recipe is to show you how to deserialize the classifier and run it; training is handled in the Training your own language model classifier recipe in this chapter. The entire code for the RunClassifierFromDisk.java class starts with the package declaration, followed by the imports, the start of the RunClassifierFromDisk class, and the start of main():

package com.lingpipe.cookbook.chapter1;

import java.io.File;
import java.io.IOException;

import com.aliasi.classify.BaseClassifier;
import com.aliasi.util.AbstractExternalizable;
import com.lingpipe.cookbook.Util;

public class RunClassifierFromDisk {
    public static void main(String[] args) throws IOException, ClassNotFoundException {

The preceding code is very standard Java, and we present it without explanation. Next is a feature in most recipes that supplies a default value for a file if the command line does not contain one. This allows you to use your own data if you have it; otherwise, it will run from files in the distribution. In this case, a default classifier is supplied if there is no argument on the command line:

String classifierPath = args.length > 0 ? args[0] : "models/3LangId.LMClassifier";
System.out.println("Loading: " + classifierPath);

Next, we will see how to deserialize a classifier or another LingPipe object from disk:

File serializedClassifier = new File(classifierPath);
@SuppressWarnings("unchecked")
BaseClassifier<CharSequence> classifier
    = (BaseClassifier<CharSequence>)
        AbstractExternalizable.readObject(serializedClassifier);

The preceding code snippet is the first LingPipe-specific code, where the classifier is built using the static AbstractExternalizable.readObject method.

This class is employed throughout LingPipe to carry out a compilation of classes for two reasons. First, it allows the compiled objects to have final variables set, which supports LingPipe's extensive use of immutables. Second, it avoids the messiness of exposing the I/O methods required for externalization and deserialization, most notably, the no-argument constructor. This class is used as the superclass of a private internal class that does the actual compilation. This private internal class implements the required no-arg constructor and stores the object required for readResolve().

Note

The reason we use Externalizable instead of Serializable is to avoid breaking backward compatibility when changing any method signatures or member variables. Externalizable extends Serializable and allows control of how the object is read or written. For more information on this, refer to the excellent chapter on serialization in Josh Bloch's book, Effective Java, 2nd Edition.
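The pattern described above (a private inner class that owns the no-arg constructor and the I/O methods, and hands back the real object through readResolve()) can be sketched with nothing but the JDK. This is a minimal illustration of the general technique, not LingPipe's AbstractExternalizable; the ImmutableLabel and Proxy names are hypothetical.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.Externalizable;
import java.io.IOException;
import java.io.ObjectInput;
import java.io.ObjectInputStream;
import java.io.ObjectOutput;
import java.io.ObjectOutputStream;
import java.io.Serializable;

/** Immutable value whose serialized form is handled by a private proxy. */
final class ImmutableLabel implements Serializable {
    private static final long serialVersionUID = 1L;
    private final String category; // final: only ever set in the constructor

    ImmutableLabel(String category) {
        if (category == null) {
            throw new IllegalArgumentException("category must not be null");
        }
        this.category = category;
    }

    String category() {
        return category;
    }

    /** Serialization writes the proxy in place of this object. */
    private Object writeReplace() {
        return new Proxy(this);
    }

    /** Private proxy: the only class exposing a no-arg constructor and I/O methods. */
    private static final class Proxy implements Externalizable {
        private static final long serialVersionUID = 1L;
        private String category;

        public Proxy() { } // public no-arg constructor required by Externalizable

        Proxy(ImmutableLabel label) {
            this.category = label.category;
        }

        @Override
        public void writeExternal(ObjectOutput out) throws IOException {
            out.writeUTF(category);
        }

        @Override
        public void readExternal(ObjectInput in) throws IOException {
            category = in.readUTF();
        }

        /** Deserialization returns a fully constructed, validated ImmutableLabel. */
        private Object readResolve() {
            return new ImmutableLabel(category);
        }
    }

    /** Serializes the label to bytes and reads it back. */
    static ImmutableLabel roundTrip(ImmutableLabel label) {
        try {
            ByteArrayOutputStream bytes = new ByteArrayOutputStream();
            try (ObjectOutputStream out = new ObjectOutputStream(bytes)) {
                out.writeObject(label);
            }
            try (ObjectInputStream in = new ObjectInputStream(
                    new ByteArrayInputStream(bytes.toByteArray()))) {
                return (ImmutableLabel) in.readObject();
            }
        } catch (IOException | ClassNotFoundException e) {
            throw new IllegalStateException("round trip failed", e);
        }
    }

    public static void main(String[] args) {
        System.out.println(roundTrip(new ImmutableLabel("english")).category());
    }
}
```

Because only the proxy's written form is on disk, the fields and methods of the outer class can change without invalidating previously serialized objects, as long as the proxy keeps reading the same data.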

BaseClassifier<E> is the foundational classifier interface, with E being the type of object being classified in LingPipe. Look at the Javadoc to see the range of classifiers that implement the interface; there are 10 of them. Deserializing to BaseClassifier<E> hides a good bit of complexity, which we will explore later in the How to serialize a LingPipe object – classifier example recipe in this chapter.

The last line calls a utility method, which we will use frequently in this book:

Util.consoleInputBestCategory(classifier);

This method handles interactions with the command line. The code is in src/com/lingpipe/cookbook/Util.java:

public static void consoleInputBestCategory(
        BaseClassifier<CharSequence> classifier) throws IOException {
    BufferedReader reader
        = new BufferedReader(new InputStreamReader(System.in));
    while (true) {
        System.out.println("\nType a string to be classified. "
                           + " Empty string to quit.");
        String data = reader.readLine();
        if (data.equals("")) {
            return;
        }
        Classification classification = classifier.classify(data);
        System.out.println("Best Category: "
                           + classification.bestCategory());
    }
}

Once the string is read in from the console, classifier.classify(data) is called, which returns a Classification. This, in turn, provides a String label that is printed out. That's it! You have run a classifier.

Classifiers tend to be a lot more useful if they give more information about how confident they are of the classification—this is usually a score or a probability. We often threshold classifiers to help fit the performance requirements of an installation. For example, if it was vital that the classifier never makes a mistake, then we could require that the classification be very confident before committing to a decision.
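Thresholding can be sketched in a few lines of plain Java. The method below assumes that we already have the best category and its probability from a classifier; the "unknown" label and the 0.95 threshold in main() are made-up values for illustration, not anything LingPipe prescribes.

```java
/** Sketch of committing to a classification only when it is confident enough. */
class ConfidenceThreshold {

    /** Hypothetical label returned when the classifier is not confident enough. */
    static final String ABSTAIN = "unknown";

    /**
     * Returns the best category if its probability reaches the threshold;
     * otherwise returns ABSTAIN so that the caller can handle the input differently.
     */
    static String decide(String bestCategory, double probability, double threshold) {
        if (threshold < 0.0 || threshold > 1.0) {
            throw new IllegalArgumentException("threshold must be in [0, 1]: " + threshold);
        }
        return probability >= threshold ? bestCategory : ABSTAIN;
    }

    public static void main(String[] args) {
        System.out.println(decide("english", 0.9999, 0.95)); // confident: commits
        System.out.println(decide("spanish", 0.60, 0.95));   // not confident: abstains
    }
}
```

Raising the threshold trades coverage for accuracy: fewer inputs get a decision, but the decisions that are made are more likely to be right.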

LingPipe classifiers exist on a hierarchy based on the kinds of estimates they provide. The backbone is a series of interfaces—don't freak out; it is actually pretty simple. You don't need to understand it now, but we do need to write it down somewhere for future reference:

BaseClassifier<E>: This is just your basic classifier of objects of type E. It has a classify() method that returns a Classification, which in turn has a bestCategory() method and a toString() method that is of some informative use.

RankedClassifier<E> extends BaseClassifier<E>: The classify() method returns RankedClassification, which extends Classification and adds a category(int rank) method that says what the 1st to nth classifications are. There is also a size() method that indicates how many classifications there are.

ScoredClassifier<E> extends RankedClassifier<E>: The returned ScoredClassification adds a score(int rank) method.

ConditionalClassifier<E> extends ScoredClassifier<E>: The ConditionalClassification produced by this has the property that the probabilities for all categories must sum to 1, as accessed via the conditionalProbability(int rank) and conditionalProbability(String category) methods. There's more; you can read the Javadoc for this. This classification will be the workhorse of the book when things get fancy, and we want to know the confidence that the tweet is English versus the tweet is Japanese versus the tweet is Spanish. These estimates will have to sum to 1.

JointClassifier<E> extends ConditionalClassifier<E>: This provides a JointClassification of the input and category in the space of all the possible inputs, and all such estimates sum to 1. This is a sparse space, so values are log based to avoid underflow errors. We don't see a lot of use of this estimate directly in production.

It is obvious that there has been a great deal of thought put into the classification stack presented. This is because huge numbers of industrial NLP problems are handled by a classification system in the end.

It turns out that our simplest classifier—in some arbitrary sense of simple—produces the richest estimates, which are joint classifications. Let's dive in.

In the previous recipe, we blithely deserialized to a BaseClassifier, which hid all the details of what was going on. The reality is a bit more complex than suggested by that hazy abstraction. Note that the file on disk that was loaded is named 3LangId.LMClassifier. By convention, we name serialized models with the type of object they will deserialize to, which, in this case, is LMClassifier, and it implements BaseClassifier. The most specific typing for the classifier is:

LMClassifier<CompiledNGramBoundaryLM, MultivariateDistribution> classifier
    = (LMClassifier<CompiledNGramBoundaryLM, MultivariateDistribution>)
        AbstractExternalizable.readObject(new File(args[0]));

The cast to LMClassifier<CompiledNGramBoundaryLM, MultivariateDistribution> specifies the type of distribution to be MultivariateDistribution. The Javadoc for com.aliasi.stats.MultivariateDistribution is quite explicit and helpful in describing what this is.

Note

MultivariateDistribution implements a discrete distribution over a finite set of outcomes, numbered consecutively from zero.

The Javadoc goes into a lot of detail about MultivariateDistribution, but it basically means that we can have an n-way assignment of probabilities that sum to 1.

The next class in the cast is for CompiledNGramBoundaryLM, which is the "memory" of the LMClassifier. In fact, each language gets its own. This means that English will have a separate language model from Spanish and so on. There are eight different kinds of language models that could have been used as this part of the classifier—consult the Javadoc for the LanguageModel interface. Each language model (LM) has the following properties:

The LM will provide a probability that it generated the text provided. It is robust against data that it has not seen before, in the sense that it won't crash or give a zero probability. Arabic just comes across as a sequence of unknown characters for our example.

The sum of all the possible character sequence probabilities of any length is 1 for boundary LMs. Process LMs sum the probability to 1 over all sequences of the same length. Look at the Javadoc for how this bit of math is done.

Each language model has no knowledge of data outside of its category.

The classifier keeps track of the marginal probability of the category and factors this into the results for the category. Marginal probability is saying that we tend to see two-thirds English, one-sixth Spanish, and one-sixth Japanese in Disney tweets. This information is combined with the LM estimates.

The LM is a compiled version of LanguageModel.Dynamic that we will cover in the later recipes that discuss training.

The LMClassifier that is constructed wraps these components into a classifier.
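The way the category's marginal probability is combined with the language model estimate can be illustrated with a little arithmetic in log space. The sketch below uses the two-thirds and one-sixth priors from the Disney example; the per-language LM estimates (-40 and -39) are invented numbers, and the class is a hypothetical helper rather than LingPipe code.

```java
/** Combines a category prior with a language model estimate in log2 space. */
class JointEstimate {

    static double log2(double x) {
        return Math.log(x) / Math.log(2.0);
    }

    /** log2 P(category, text) = log2 P(category) + log2 P(text | category). */
    static double log2Joint(double categoryPrior, double log2TextGivenCategory) {
        if (categoryPrior <= 0.0 || categoryPrior > 1.0) {
            throw new IllegalArgumentException("prior must be in (0, 1]: " + categoryPrior);
        }
        return log2(categoryPrior) + log2TextGivenCategory;
    }

    public static void main(String[] args) {
        // Invented LM estimates: the Spanish model likes the text slightly more.
        double english = log2Joint(2.0 / 3.0, -40.0);
        double spanish = log2Joint(1.0 / 6.0, -39.0);
        System.out.println("english log2 joint: " + english);
        System.out.println("spanish log2 joint: " + spanish);
        System.out.println("winner: " + (english > spanish ? "english" : "spanish"));
    }
}
```

With these made-up numbers, the Spanish LM is slightly more familiar with the text, yet English wins once the prior is factored in, because English tweets are four times as common.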

Luckily, the interface saves the day with a more aesthetic deserialization:

JointClassifier<CharSequence> classifier
    = (JointClassifier<CharSequence>)
        AbstractExternalizable.readObject(new File(classifierPath));

The interface hides the guts of the implementation nicely and this is what we are going with in the example program.

This recipe is the first time we start peeling back the layers of what classifiers can do, but first, let's play with it a bit:

Get your magic shell genie to conjure a command prompt with a Java interpreter and type:

java -cp lingpipe-cookbook.1.0.jar:lib/lingpipe-4.1.0.jar: com.lingpipe.cookbook.chapter1.RunClassifierJoint

We will enter the same data as we did earlier:

Type a string to be classified. Empty string to quit.
The rain in Spain falls mainly on the plain.
Rank Categ Score P(Category|Input) log2 P(Category,Input)
0=english -3.60092 0.9999999999 -165.64233893156052
1=spanish -4.50479 3.04549412621E-13 -207.2207276413206
2=japanese -14.369 7.6855682344E-150 -660.989401136873

As described, JointClassification carries through all the classification metrics in the hierarchy rooted at Classification. Each level of classification shown as follows adds to the classifications preceding it:

Classification provides the first best category as the rank 0 category.

RankedClassification adds an ordering of all the possible categories, with a lower rank corresponding to greater likelihood of the category. The Rank column reflects this ordering.

ScoredClassification adds a numeric score to the ranked output. Note that scores might or might not compare well against other strings being classified, depending on the type of classifier. This is the column labeled Score. To understand the basis of this score, consult the relevant Javadoc.

ConditionalClassification further refines the score by making it a category probability conditioned on the input. The probabilities of all categories will sum up to 1. This is the column labeled P(Category|Input), which is the traditional way to write probability of the category given the input.

JointClassification adds the log2 (log base 2) probability of the input and the category; this is the joint probability. The probabilities of all categories and inputs will sum up to 1, which is a very large space indeed, with very low probabilities assigned to any pair of category and string. This is why log2 values are used to prevent numerical underflow. This is the column labeled log2 P(Category,Input), which is translated as the log2 probability of the category and input.

Look at the Javadoc for the com.aliasi.classify package for more information on the metrics and classifiers that implement them.
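The relationship between the joint and conditional estimates can be checked by hand: dividing each joint probability by the sum of the joint probabilities over all categories gives P(Category|Input). The following plain-Java sketch (a hypothetical helper, not part of LingPipe) does this for the log2 values printed in the example output, subtracting the maximum first so that the powers of two do not underflow.

```java
/** Normalizes log2 joint probabilities into conditional probabilities. */
class JointToConditional {

    /**
     * Given log2 P(category, input) for every category, returns
     * P(category | input) for every category; the results sum to 1.
     */
    static double[] conditionalFromLog2Joint(double[] log2Joint) {
        double max = Double.NEGATIVE_INFINITY;
        for (double value : log2Joint) {
            max = Math.max(max, value);
        }
        double[] result = new double[log2Joint.length];
        double sum = 0.0;
        for (int i = 0; i < log2Joint.length; i++) {
            // Shifting by the maximum keeps the largest term at 2^0 = 1.
            result[i] = Math.pow(2.0, log2Joint[i] - max);
            sum += result[i];
        }
        for (int i = 0; i < result.length; i++) {
            result[i] /= sum;
        }
        return result;
    }

    public static void main(String[] args) {
        String[] categories = {"english", "spanish", "japanese"};
        // log2 P(Category,Input) values copied from the example output.
        double[] log2Joint = {-165.64233893156052, -207.2207276413206, -660.989401136873};
        double[] conditional = conditionalFromLog2Joint(log2Joint);
        for (int i = 0; i < categories.length; i++) {
            System.out.println(categories[i] + " " + conditional[i]);
        }
    }
}
```

The values this prints should land very close to the P(Category|Input) column shown earlier, which is a useful sanity check on how the two columns relate.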

The code is in src/com/lingpipe/cookbook/chapter1/RunClassifierJoint.java, and it deserializes to a JointClassifier<CharSequence>:

public static void main(String[] args) throws IOException, ClassNotFoundException {
    String classifierPath = args.length > 0 ? args[0] : "models/3LangId.LMClassifier";
    @SuppressWarnings("unchecked")
    JointClassifier<CharSequence> classifier
        = (JointClassifier<CharSequence>)
            AbstractExternalizable.readObject(new File(classifierPath));
    Util.consoleInputPrintClassification(classifier);
}

It makes a call to Util.consoleInputPrintClassification(classifier), which minimally differs from Util.consoleInputBestCategory(classifier), in that it uses the toString() method of classification to print. The code is as follows:

public static void consoleInputPrintClassification(
        BaseClassifier<CharSequence> classifier) throws IOException {
    BufferedReader reader
        = new BufferedReader(new InputStreamReader(System.in));
    while (true) {
        System.out.println("\nType a string to be classified."
                           + " Empty string to quit.");
        String data = reader.readLine();
        if (data.equals("")) {
            return;
        }
        Classification classification = classifier.classify(data);
        System.out.println(classification);
    }
}

The output is richer than the declared type suggests: the variable is typed as Classification, but toString() is dispatched to the runtime type, JointClassification.

There is detailed information in Chapter 6, Character Language Models of Text Analysis with LingPipe 4, by Bob Carpenter and Breck Baldwin, LingPipe Publishing (http://alias-i.com/lingpipe-book/lingpipe-book-0.5.pdf) on language models.

We use the popular twitter4j package to invoke the Twitter Search API, and search for tweets and save them to disk. The Twitter API requires authentication as of Version 1.1, and we will need to get authentication tokens and save them in the twitter4j.properties file before we get started.

If you don't have a Twitter account, go to twitter.com/signup and create an account. You will also need to go to dev.twitter.com and sign in to enable yourself for the developer account. Once you have a Twitter login, we'll be on our way to creating the Twitter OAuth credentials. Be prepared for this process to be different from what we are presenting. In any case, we will supply example results in the data directory. Let's now create the Twitter OAuth credentials:

Log in to dev.twitter.com.

Find the little pull-down menu next to your icon on the top bar.

Choose My Applications.

Click on Create a new application.

Fill in the form and click on Create a Twitter application.

The next page contains the OAuth settings.

Click on the Create my access token link.

You will need to copy Consumer key and Consumer secret.

You will also need to copy Access token and Access token secret.

These values should go into the twitter4j.properties file in the appropriate locations. The properties are as follows:

debug=false
oauth.consumerKey=ehUOExampleEwQLQpPQ
oauth.consumerSecret=aTHUGTBgExampleaW3yLvwdJYlhWY74
oauth.accessToken=1934528880-fiMQBJCBExamplegK6otBG3XXazLv
oauth.accessTokenSecret=y0XExampleGEHdhCQGcn46F8Vx2E
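A missing or misspelled key in this file tends to surface later as an authentication failure, so it can be worth checking the file up front. The following is a small sanity-check sketch using only java.util.Properties; the class and method names are hypothetical, and it only verifies that the four OAuth keys listed above are present and non-empty, not that Twitter will accept them.

```java
import java.io.IOException;
import java.io.Reader;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;
import java.util.Properties;

/** Checks that a properties source defines the four OAuth keys shown above. */
class OAuthPropertiesCheck {

    static final String[] REQUIRED_KEYS = {
        "oauth.consumerKey",
        "oauth.consumerSecret",
        "oauth.accessToken",
        "oauth.accessTokenSecret"
    };

    /** Returns the required keys that are absent or blank, in declaration order. */
    static List<String> missingKeys(Reader source) throws IOException {
        Properties properties = new Properties();
        properties.load(source);
        List<String> missing = new ArrayList<>();
        for (String key : REQUIRED_KEYS) {
            String value = properties.getProperty(key);
            if (value == null || value.trim().isEmpty()) {
                missing.add(key);
            }
        }
        return missing;
    }

    /** Convenience wrapper for checking properties held in a string. */
    static List<String> missingKeysInText(String text) {
        try {
            return missingKeys(new StringReader(text));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) throws IOException {
        Path path = Paths.get(args.length > 0 ? args[0] : "twitter4j.properties");
        if (!Files.exists(path)) {
            System.out.println("No such file: " + path);
            return;
        }
        try (Reader reader = Files.newBufferedReader(path)) {
            System.out.println("Missing keys: " + missingKeys(reader));
        }
    }
}
```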

Now, we're ready to access Twitter and get some search data using the following steps:

Go to the directory of this chapter and run the following command:

java -cp lingpipe-cookbook.1.0.jar:lib/twitter4j-core-4.0.1.jar:lib/opencsv-2.4.jar:lib/lingpipe-4.1.0.jar com.lingpipe.cookbook.chapter1.TwitterSearch

The code displays the output file (in this case, a default value). Supplying a path as an argument will write to this file. Then, type in your query at the prompt:

Writing output to data/twitterSearch.csv
Enter Twitter Query:disney

The code then queries Twitter and reports every 100 tweets found (output truncated):

Tweets Accumulated: 100
Tweets Accumulated: 200
…
Tweets Accumulated: 1500
writing to disk 1500 tweets at data/twitterSearch.csv

This program uses the search query, searches Twitter for the term, and writes the output (limited to 1500 tweets) to the .csv file name that you specified on the command line or uses a default.

The code uses the twitter4j library to instantiate TwitterFactory and searches Twitter using the user-entered query. The start of main() at src/com/lingpipe/cookbook/chapter1/TwitterSearch.java is:

String outFilePath = args.length > 0 ? args[0] : "data/twitterSearch.csv";
File outFile = new File(outFilePath);
System.out.println("Writing output to " + outFile);
BufferedReader reader = new BufferedReader(new InputStreamReader(System.in));
System.out.print("Enter Twitter Query:");
String queryString = reader.readLine();

The preceding code gets the output file, supplying a default if none is provided, and reads the query from the console.

The following code sets up the query according to the vision of the twitter4j developers. For more information on this process, read their Javadoc. However, it should be fairly straightforward. In order to make our result set more unique, you'll notice that when we create the query string, we will filter out retweets using the -filter:retweets option. This is only somewhat effective; see the Eliminate near duplicates with the Jaccard distance recipe later in this chapter for a more complete solution:

Twitter twitter = new TwitterFactory().getInstance();
Query query = new Query(queryString + " -filter:retweets");
query.setLang("en"); // English
query.setCount(TWEETS_PER_PAGE);
query.setResultType(Query.RECENT);

The results are then collected page by page:

List<String[]> csvRows = new ArrayList<String[]>();
while (csvRows.size() < MAX_TWEETS) {
    QueryResult result = twitter.search(query);
    List<Status> resultTweets = result.getTweets();
    for (Status tweetStatus : resultTweets) {
        String[] row = new String[Util.ROW_LENGTH];
        row[Util.TEXT_OFFSET] = tweetStatus.getText();
        csvRows.add(row);
    }
    System.out.println("Tweets Accumulated: " + csvRows.size());
    if ((query = result.nextQuery()) == null) {
        break;
    }
}

The preceding snippet is pretty standard code slinging, albeit without the usual hardening for external-facing code: try/catch, timeouts, and retries. One potentially confusing bit is the use of query to handle paging through the search results; nextQuery() returns null when no more pages are available. The current Twitter API allows a maximum of 100 results per page, so in order to get 1500 results, we need to rerun the search until there are no more results, or until we get 1500 tweets. The next step involves a bit of reporting and writing:

System.out.println("writing to disk " + csvRows.size() + " tweets at " + outFilePath);
Util.writeCsvAddHeader(csvRows, outFile);

The list of tweets is then written to a .csv file using the Util.writeCsvAddHeader method:

public static void writeCsvAddHeader(List<String[]> data, File file) throws IOException {
    CSVWriter csvWriter = new CSVWriter(
        new OutputStreamWriter(new FileOutputStream(file), Strings.UTF8));
    csvWriter.writeNext(ANNOTATION_HEADER_ROW);
    csvWriter.writeAll(data);
    csvWriter.close();
}

We will be using this .csv file to run the language ID test in the next section.
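The search loop in this recipe skips the hardening that external-facing code usually needs. As one possible starting point, here is a generic retry helper with exponential backoff in plain Java. It is a sketch under our own assumptions (the RetryingCall name, the attempt count, and the delays are all hypothetical) and is not part of twitter4j or the cookbook code.

```java
import java.io.IOException;
import java.util.concurrent.Callable;

/** Generic retry-with-backoff helper; a sketch, not production-grade hardening. */
class RetryingCall {

    /**
     * Runs the call, retrying on any exception up to maxAttempts times in total.
     * The delay doubles after each failure; the last failure is rethrown.
     */
    static <T> T callWithRetries(Callable<T> call, int maxAttempts, long initialDelayMillis)
            throws Exception {
        if (maxAttempts < 1) {
            throw new IllegalArgumentException("maxAttempts must be at least 1");
        }
        long delay = initialDelayMillis;
        Exception lastFailure = null;
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            try {
                return call.call();
            } catch (Exception e) {
                lastFailure = e;
                if (attempt < maxAttempts) {
                    Thread.sleep(delay); // wait before the next attempt
                    delay *= 2;
                }
            }
        }
        throw lastFailure;
    }

    /** Demo: a call that fails twice and then succeeds; returns the attempts used. */
    static int demoAttemptsNeeded() {
        final int[] attempts = {0};
        try {
            callWithRetries(() -> {
                attempts[0]++;
                if (attempts[0] < 3) {
                    throw new IOException("simulated network failure");
                }
                return "ok";
            }, 5, 1L);
        } catch (Exception e) {
            throw new IllegalStateException(e);
        }
        return attempts[0];
    }

    public static void main(String[] args) {
        System.out.println("Attempts needed: " + demoAttemptsNeeded());
    }
}
```

In the search loop, the call to wrap would be twitter.search(query); because query is reassigned on every page, it would first have to be copied into an effectively final variable before being used in a lambda.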

Now, we can test our language ID classifier on the data we downloaded from Twitter. This recipe will show you how to run the classifier on the .csv file and will set the stage for the evaluation step in the next recipe.

Applying a classifier to the .csv file is straightforward! Just perform the following steps:

Get a command prompt and run:

java -cp lingpipe-cookbook.1.0.jar:lib/lingpipe-4.1.0.jar:lib/twitter4j-core-4.0.1.jar:lib/opencsv-2.4.jar com.lingpipe.cookbook.chapter1.ReadClassifierRunOnCsv

This will use the default CSV file, data/disney.csv, from the distribution, run over each line of the CSV file, and apply a language ID classifier from models/3LangId.LMClassifier to it:

InputText: When all else fails #Disney
Best Classified Language: english
InputText: ES INSUPERABLE DISNEY !! QUIERO VOLVER:(
Best Classified Language: Spanish

You can also specify the input as the first argument and the classifier as the second one.

We will deserialize a classifier from the externalized model that was described in the previous recipes. Then, we will iterate through each line of the .csv file and call the classify method of the classifier. The code in main() is:

String inputPath = args.length > 0 ? args[0] : "data/disney.csv";
String classifierPath = args.length > 1 ? args[1] : "models/3LangId.LMClassifier";
@SuppressWarnings("unchecked")
BaseClassifier<CharSequence> classifier
    = (BaseClassifier<CharSequence>)
        AbstractExternalizable.readObject(new File(classifierPath));
List<String[]> lines = Util.readCsvRemoveHeader(new File(inputPath));
for (String[] line : lines) {
    String text = line[Util.TEXT_OFFSET];
    Classification classified = classifier.classify(text);
    System.out.println("InputText: " + text);
    System.out.println("Best Classified Language: " + classified.bestCategory());
}

The preceding code builds on the previous recipes with nothing particularly new. Util.readCsvRemoveHeader, shown as follows, just skips the first line of the .csv file before reading from disk and returning the rows that have non-null values and non-empty strings in the TEXT_OFFSET position:

public static List<String[]> readCsvRemoveHeader(File file) throws IOException {

FileInputStream fileIn = new FileInputStream(file);

InputStreamReader inputStreamReader = new InputStreamReader(fileIn,Strings.UTF8);

CSVReader csvReader = new CSVReader(inputStreamReader);

csvReader.readNext(); //skip headers

List<String[]> rows = new ArrayList<String[]>();

String[] row;

while ((row = csvReader.readNext()) != null) {

if (row[TEXT_OFFSET] == null || row[TEXT_OFFSET].equals("")) {

continue;

}

rows.add(row);

}

csvReader.close();

return rows;

}

Evaluation is incredibly important in building solid NLP systems. It allows developers and management to map a business need to system performance, which, in turn, helps communicate system improvement to vested parties. "Well, uh, the system seems to be doing better" does not hold the gravitas of "Recall has improved 20 percent, and the specificity is holding well with 50 percent more training data".

This recipe provides the steps for the creation of truth or gold standard data and tells us how to use this data to evaluate the performance of our precompiled classifier. It is as simple as it is powerful.

You might have noticed the headers from the output of the CSV writer and the suspiciously labeled column, TRUTH. Now, we get to use it. Load up the tweets we provided earlier or convert your data into the format used in our .csv format. An easy way to get novel data is to run a query against Twitter with a multilingual friendly query such as Disney, which is our default supplied data.

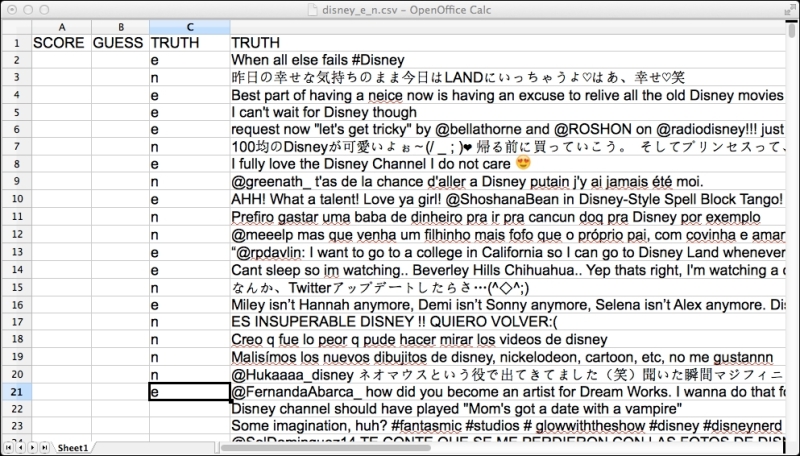

Open the CSV file and annotate the language you think the tweet is in for at least 10 examples each of e for English and n for non-English. There is a data/disney_e_n.csv file in the distribution; you can use this if you don't want to deal with annotating data. If you are not sure about a tweet, feel free to ignore it. Unannotated data is ignored. Have a look at the following screenshot:

Screenshot of the spreadsheet with human annotations for English 'e' and non-English 'n'. It is known as truth data or gold standard data because it represents the phenomenon correctly.

Often, this data is called gold standard data, because it represents the truth. The "gold" in "gold standard" is quite literal. Back it up and store it with longevity in mind: it is most likely the single most valuable collection of bytes on your hard drive, because it is expensive to produce in any quantity and is the cleanest articulation of what is being done. Implementations come and go; evaluation data lives on forever. The John Smith corpus from The John Smith problem recipe in Chapter 7, Finding Coreference Between Concepts/People, is the canonical evaluation corpus for that particular problem and lives on as the point of comparison for a line of research that started in 1997. The original implementation is long forgotten.

Perform the following steps to evaluate the classifiers:

Enter the following in the command prompt; this will run the default classifier on the texts in the default gold standard data. Then, it will compare the classifier's best category against what was annotated in the TRUTH column:

java -cp lingpipe-cookbook.1.0.jar:lib/opencsv-2.4.jar:lib/lingpipe-4.1.0.jar com.lingpipe.cookbook.chapter1.RunConfusionMatrix

This class will then produce the confusion matrix:

reference\response

\e,n,

e 11,0,

n 1,9,

The confusion matrix is aptly named since it confuses almost everyone initially, but it is, without a doubt, the best representation of classifier output, because it is very difficult to hide bad classifier performance with it. In other words, it is an excellent BS detector. It is the unambiguous view of what the classifier got right, what it got wrong, and what it thought was the right answer.

The sum of each row represents the items that are known by truth/reference/gold standard to belong to the category. For English (e), there were 11 tweets. Each column represents what the system thought was in that category. Reading across the e row, the system thought all 11 English tweets were English and none were non-English (n). For the non-English category (n), there are 10 cases in truth, of which the classifier thought 1 was English (incorrectly) and 9 were non-English (correctly). Perfect system performance will have zeros in all the cells that are not located on the diagonal from the top-left corner to the bottom-right corner.
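The row and column reading described above can be checked with a little arithmetic. The following is a minimal sketch in plain Java, not LingPipe code: the ConfusionMatrixReading class and its helper methods are hypothetical, and the counts are the ones from the matrix printed above.

```java
// Hypothetical helper for illustration; not part of LingPipe or the cookbook's Util class.
class ConfusionMatrixReading {

    // Rows are the reference (truth) categories; a row sum is the number of items in truth.
    static int rowSum(int[][] matrix, int row) {
        int sum = 0;
        for (int count : matrix[row]) {
            sum += count;
        }
        return sum;
    }

    // Columns are the classifier's responses; a column sum is how often it guessed the category.
    static int columnSum(int[][] matrix, int column) {
        int sum = 0;
        for (int[] row : matrix) {
            sum += row[column];
        }
        return sum;
    }

    // Off-diagonal cells are the errors; a perfect classifier has none.
    static int errorCount(int[][] matrix) {
        int errors = 0;
        for (int i = 0; i < matrix.length; ++i) {
            for (int j = 0; j < matrix[i].length; ++j) {
                if (i != j) {
                    errors += matrix[i][j];
                }
            }
        }
        return errors;
    }

    public static void main(String[] args) {
        String[] categories = {"e", "n"};
        int[][] matrix = {
            {11, 0}, // truth e: 11 classified as e, 0 as n
            {1, 9}   // truth n: 1 classified as e, 9 as n
        };
        for (int i = 0; i < categories.length; ++i) {
            System.out.println(categories[i]
                + " in truth: " + rowSum(matrix, i)
                + ", guessed by classifier: " + columnSum(matrix, i));
        }
        System.out.println("Errors: " + errorCount(matrix));
    }
}
```

Row sums answer "how many items of this category exist in truth", while column sums answer "how many times did the classifier guess this category".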

The real reason it is called a confusion matrix is that it is relatively easy to see categories that the classifier is confusing. For example, British English and American English would likely be highly confusable. Also, confusion matrices scale to multiple categories quite nicely, as will be seen later. Visit the Javadoc for a more detailed explanation of the confusion matrix—it is well worth mastering.

Building on the code from the previous recipes in this chapter, we will focus on what is novel in the evaluation setup. The entirety of the code is in the distribution at src/com/lingpipe/cookbook/chapter1/RunConfusionMatrix.java. The start of main() is shown in the following code snippet. The code starts by reading from the arguments that look for non-default CSV data and serialized classifiers. Defaults, which this recipe uses, are shown here:

String inputPath = args.length > 0 ? args[0] : "data/disney_e_n.csv";

String classifierPath = args.length > 1 ? args[1] : "models/1LangId.LMClassifier";

Next, the language model and the .csv data will be loaded. The method differs slightly from Util.readCsvRemoveHeader, explained in the previous recipe, in that it only accepts rows that have a value in the TRUTH column; see src/com/lingpipe/cookbook/Util.java if this is not clear:

@SuppressWarnings("unchecked")

BaseClassifier<CharSequence> classifier = (BaseClassifier<CharSequence>) AbstractExternalizable.readObject(new File(classifierPath));

List<String[]> rows = Util.readAnnotatedCsvRemoveHeader(new File(inputPath));

Next, the categories will be found:

String[] categories = Util.getCategories(rows);

The method will accumulate all the category labels from the TRUTH column. The code is simple and is shown here:

public static String[] getCategories(List<String[]> data) {

Set<String> categories = new HashSet<String>();

for (String[] csvData : data) {

if (!csvData[ANNOTATION_OFFSET].equals("")) {

categories.add(csvData[ANNOTATION_OFFSET]);

}

}

return categories.toArray(new String[0]);

}

The code will be useful when we run arbitrary data, where the labels are not known at compile time.

Then, we will set up BaseClassifierEvaluator. Its constructor takes the classifier to be evaluated, the categories, and a boolean value that controls whether inputs are stored in the evaluator:

boolean storeInputs = false;

BaseClassifierEvaluator<CharSequence> evaluator = new BaseClassifierEvaluator<CharSequence>(classifier, categories, storeInputs);

Note that the classifier can be null and specified at a later time; the categories must exactly match those produced by the annotation and the classifier. We will not bother configuring the evaluator to store the inputs, because we are not going to use this capability in this recipe. See the Viewing error categories – false positives recipe for an example in which the inputs are stored and accessed.

Next, we will do the actual evaluation. The loop will iterate over each row of the information in the .csv file, build a Classified<CharSequence>, and pass it off to the evaluator's handle() method:

for (String[] row : rows) {

String truth = row[Util.ANNOTATION_OFFSET];

String text = row[Util.TEXT_OFFSET];

Classification classification = new Classification(truth);

Classified<CharSequence> classified = new Classified<CharSequence>(text,classification);

evaluator.handle(classified);

}

The fourth line will create a Classification object with the value from the truth annotation, e or n in this case. This is the same type that BaseClassifier<E> returns from its classify() method; there is no special type for truth annotations. The next line pairs it with the text that the classification applies to, which gives us a Classified<CharSequence> object.

The last line of the loop will apply the handle method to the created classified object. The evaluator assumes that data supplied to its handle method is a truth annotation, which is handled by extracting the data being classified, applying the classifier to this data, getting the best category of the resulting classification, and finally noting whether it matches the category that was just constructed from the truth. This happens for each row of the .csv file.
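To make the evaluator's behavior concrete, here is a minimal sketch of the same idea in plain Java. It is an illustration under stated assumptions, not LingPipe's implementation: the TinyEvaluator class, its handle() signature, and the toy classifier rule are all hypothetical.

```java
import java.util.Arrays;
import java.util.List;
import java.util.function.Function;

// Hypothetical stand-in for an evaluator; LingPipe's BaseClassifierEvaluator does much more.
class TinyEvaluator {
    private final Function<String, String> classifier;
    private final List<String> categories;
    private final int[][] counts; // counts[truthIndex][responseIndex]

    TinyEvaluator(Function<String, String> classifier, String[] categories) {
        this.classifier = classifier;
        this.categories = Arrays.asList(categories);
        this.counts = new int[categories.length][categories.length];
    }

    // Takes a truth-annotated example, classifies the text, and tallies truth against response.
    void handle(String text, String truth) {
        String response = classifier.apply(text);
        int truthIndex = categories.indexOf(truth);
        int responseIndex = categories.indexOf(response);
        if (truthIndex < 0 || responseIndex < 0) {
            throw new IllegalArgumentException(
                "Unknown category: truth=" + truth + " response=" + response);
        }
        counts[truthIndex][responseIndex]++;
    }

    int count(String truth, String response) {
        return counts[categories.indexOf(truth)][categories.indexOf(response)];
    }

    public static void main(String[] args) {
        // Toy rule, purely illustrative: text containing " the " is called English.
        Function<String, String> toy =
            text -> text.toLowerCase().contains(" the ") ? "e" : "n";
        TinyEvaluator evaluator = new TinyEvaluator(toy, new String[] {"e", "n"});
        evaluator.handle("I fully love the Disney Channel", "e");
        evaluator.handle("ES INSUPERABLE DISNEY !! QUIERO VOLVER:(", "n");
        evaluator.handle("When all else fails #Disney", "e"); // the toy rule gets this wrong
        System.out.println("e,e=" + evaluator.count("e", "e")
            + " e,n=" + evaluator.count("e", "n")
            + " n,e=" + evaluator.count("n", "e")
            + " n,n=" + evaluator.count("n", "n"));
    }
}
```

The point of the design is that the caller supplies only truth; the evaluator owns the job of running the classifier and comparing the two.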

Outside the loop, we will print out the confusion matrix with Util.confusionMatrixToString():

System.out.println(Util.confusionMatrixToString(evaluator.confusionMatrix()));

Examining this code is left to the reader. That's it; we have evaluated our classifier and printed out the confusion matrix.

The world of NLP really opens up when classifiers are customized. This recipe provides details on how to customize a classifier by collecting examples for the classifier to learn from—this is called training data. It is also called gold standard data, truth, or ground truth. We have some from the previous recipe that we will use.

We will create a customized language ID classifier for English and other languages. Creation of training data involves getting access to text data and then annotating it for the categories of the classifier—in this case, annotation is the language. Training data can come from a range of sources. Some possibilities include:

Gold standard data such as the one created in the preceding evaluation recipe.

Data that is somehow already annotated for the categories you care about. For example, Wikipedia has language-specific versions, which make easy pickings to train up a language ID classifier. This is how we created the 3LangId.LMClassifier model.

Be creative: where is the data that helps guide a classifier in the right direction?

Language ID doesn't require much data to work well, so 20 tweets per language will start to reliably distinguish strongly different languages. The amount of training data will be driven by evaluation—more data generally improves performance.

The example assumes that around 10 tweets of English and 10 non-English tweets have been annotated by people and put in data/disney_e_n.csv.

In order to train your own language model classifier, perform the following steps:

Fire up a terminal and type the following:

java -cp lingpipe-cookbook.1.0.jar:lib/opencsv-2.4.jar:lib/lingpipe-4.1.0.jar com.lingpipe.cookbook.chapter1.TrainAndRunLMClassifier

Then, type some English in the command prompt, perhaps a Kurt Vonnegut quotation, to see the resulting JointClassification. See the Getting confidence estimates from a classifier recipe for the explanation of the following output:

Type a string to be classified. Empty string to quit.

So it goes.

Rank Categ Score P(Category|Input) log2 P(Category,Input)

0=e -4.24592987919 0.9999933712053 -55.19708842949149

1=n -5.56922173547 6.62884502334E-6 -72.39988256112824

Type in some non-English, such as the Spanish title of Borges's The Garden of Forking Paths:

Type a string to be classified. Empty string to quit.

El Jardín de senderos que se bifurcan

Rank Categ Score P(Category|Input) log2 P(Category,Input)

0=n -5.6612148689 0.999989087229795 -226.44859475801326

1=e -6.0733050528 1.091277041753E-5 -242.93220211249715

The program is in src/com/lingpipe/cookbook/chapter1/TrainAndRunLMClassifier.java; the contents of the main() method start with:

String dataPath = args.length > 0 ? args[0] : "data/disney_e_n.csv";

List<String[]> annotatedData = Util.readAnnotatedCsvRemoveHeader(new File(dataPath));

String[] categories = Util.getCategories(annotatedData);

The preceding code gets the contents of the .csv file and then extracts the list of categories that were annotated; these categories will be all the non-empty strings in the annotation column.

The following DynamicLMClassifier is created using a static method that requires the array of categories and int, which is the order of the language models. With an order of 3, the language model will be trained on all 1 to 3 character sequences of the text training data. So "I luv Disney" will produce training instances of "I", "I ", "I l", " l", " lu", "u", "uv", "luv", and so on. The createNGramBoundary method appends a special token to the beginning and end of each text sequence; this token can help if the beginnings or ends are informative for classification. Most text data is sensitive to beginnings/ends, so we will choose this model:
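To see which character sequences an order of 3 covers, the following minimal sketch enumerates every substring of length 1 to 3. It is plain Java written for illustration: the CharNGramSketch class and charNGrams method are hypothetical, and the sketch does not model the boundary token or how LingPipe stores counts internally.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical illustration of character n-gram enumeration; not LingPipe code.
class CharNGramSketch {

    // Returns every substring of length 1..maxNGram, ordered by start position.
    static List<String> charNGrams(CharSequence text, int maxNGram) {
        List<String> ngrams = new ArrayList<String>();
        for (int start = 0; start < text.length(); ++start) {
            for (int len = 1; len <= maxNGram && start + len <= text.length(); ++len) {
                ngrams.add(text.subSequence(start, start + len).toString());
            }
        }
        return ngrams;
    }

    public static void main(String[] args) {
        // Quote each n-gram so that leading and trailing spaces stay visible.
        for (String ngram : charNGrams("I luv Disney", 3)) {
            System.out.println("\"" + ngram + "\"");
        }
    }
}
```

Raising the order lets the model see longer sequences such as whole short words, at the cost of more distinct sequences to count.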

int maxCharNGram = 3;

DynamicLMClassifier<NGramBoundaryLM> classifier = DynamicLMClassifier.createNGramBoundary(categories,maxCharNGram);

The following code iterates over the rows of training data and creates Classified<CharSequence> in the same way as shown in the Evaluation of classifiers – the confusion matrix recipe for evaluation. However, instead of passing the Classified object to an evaluation handler, it is used to train the classifier.

for (String[] row: annotatedData) {

String truth = row[Util.ANNOTATION_OFFSET];

String text = row[Util.TEXT_OFFSET];

Classification classification = new Classification(truth);

Classified<CharSequence> classified = new Classified<CharSequence>(text,classification);

classifier.handle(classified);

}

No further steps are necessary, and the classifier is ready for use by the console:

Util.consoleInputPrintClassification(classifier);

Training and using the classifier can be interspersed for classifiers based on DynamicLM. This is generally not the case with other classifiers such as LogisticRegression, because they use all the data to compile a model that can carry out classifications.

There is another method for training the classifier that gives you more control over how the training goes. The training loop shown earlier wraps each example in a Classified object and passes it to handle():

Classification classification = new Classification(truth);

Classified<CharSequence> classified = new Classified<CharSequence>(text,classification);

classifier.handle(classified);

Alternatively, we can have the same effect with:

int count = 1;

classifier.train(truth,text,count);

The train() method allows an extra degree of control for training, because it allows for the count to be explicitly set. As we explore LingPipe classifiers, we will often see an alternate way of training that allows for some additional control beyond what the handle() method provides.

Character-language model-based classifiers work very well for tasks where character sequences are distinctive. Language identification is an ideal candidate for this, but it can also be used for tasks such as sentiment, topic assignment, and question answering.

The earlier recipes have shown how to evaluate classifiers with truth data and how to train with truth data but how about doing both? This great idea is called cross validation, and it works as follows:

Split the data into n distinct sets or folds—the standard n is 10.

For i from 1 to n:

Train on the n - 1 folds defined by the exclusion of fold i

Evaluate on fold i

Report the evaluation results across all folds i.
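The steps above can be sketched in a few lines of plain Java. This is a minimal illustration, not how XValidatingObjectCorpus partitions data: the FoldSketch class is hypothetical, and assigning items to folds by index modulo the number of folds is an assumption made for brevity.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical illustration of n-fold splitting; not LingPipe code.
class FoldSketch {

    // Items whose index maps to the given fold form the test set.
    static <E> List<E> testFold(List<E> data, int numFolds, int fold) {
        List<E> test = new ArrayList<E>();
        for (int i = 0; i < data.size(); ++i) {
            if (i % numFolds == fold) {
                test.add(data.get(i));
            }
        }
        return test;
    }

    // Everything outside the given fold is training data.
    static <E> List<E> trainFolds(List<E> data, int numFolds, int fold) {
        List<E> train = new ArrayList<E>();
        for (int i = 0; i < data.size(); ++i) {
            if (i % numFolds != fold) {
                train.add(data.get(i));
            }
        }
        return train;
    }

    public static void main(String[] args) {
        List<String> data = new ArrayList<String>();
        for (int i = 0; i < 10; ++i) {
            data.add("tweet-" + i);
        }
        int numFolds = 5;
        for (int fold = 0; fold < numFolds; ++fold) {
            List<String> train = trainFolds(data, numFolds, fold);
            List<String> test = testFold(data, numFolds, fold);
            // A real run would train on 'train' and evaluate on 'test' here.
            System.out.println("fold " + fold
                + ": train=" + train.size() + " test=" + test.size());
        }
    }
}
```

Every item lands in exactly one test fold, so the pooled evaluation covers all of the data without ever testing on an item that was used to train that fold's classifier.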

Cross validation is how most machine-learning systems are tuned for performance. The workflow is as follows:

See what the cross validation performance is.

Look at the error as determined by an evaluation metric.

Look at the actual errors—yes, the data—for insights into how the system can be improved.

Make some changes.

Evaluate it again.

Cross validation is an excellent way to compare different approaches to a problem, try different classifiers, motivate normalization approaches, explore feature enhancements, and so on. Generally, a system configuration that shows increased performance on cross validation will also show increased performance on new data. What cross validation does not do, particularly with active learning strategies discussed later, is reliably predict performance on new data. Always apply the classifier to new data before releasing production systems as a final sanity check. You have been warned.

Cross validation also imposes a negative bias compared to a classifier trained on all possible training data, because each fold is a slightly weaker classifier, in that it only has 90 percent of the data on 10 folds.

Rinse, lather, and repeat is the mantra of building state-of-the-art NLP systems.

Note how different this approach is from other classic computer-engineering approaches that focus on developing against a functional specification driven by unit tests. This process is more about refining and adjusting the code to work better as determined by the evaluation metrics.

To run the code, perform the following steps:

Get to a command prompt and type:

java -cp lingpipe-cookbook.1.0.jar:lib/opencsv-2.4.jar:lib/lingpipe-4.1.0.jar com.lingpipe.cookbook.chapter1.RunXValidate

The result will be:

Training data is: data/disney_e_n.csv

Training on fold 0

Testing on fold 0

Training on fold 1

Testing on fold 1

Training on fold 2

Testing on fold 2

Training on fold 3

Testing on fold 3

reference\response

\e,n,

e 10,1,

n 6,4,

The preceding output will make more sense in the following section.

This recipe introduces an XValidatingObjectCorpus object that manages cross validation. It is used heavily in training classifiers. Everything else should be familiar from the previous recipes. The main() method starts with:

String inputPath = args.length > 0 ? args[0] : "data/disney_e_n.csv";

System.out.println("Training data is: " + inputPath);

List<String[]> truthData = Util.readAnnotatedCsvRemoveHeader(new File(inputPath));

The preceding code gets us the data from the default or a user-entered file. The next two lines introduce XValidatingObjectCorpus, the star of this recipe:

int numFolds = 4;

XValidatingObjectCorpus<Classified<CharSequence>> corpus = Util.loadXValCorpus(truthData, numFolds);

The numFolds variable controls how the data that is just loaded will be partitioned; it will be in four partitions in this case. Now, we will look at the Util.loadXValCorpus(truthData, numFolds) subroutine:

public static XValidatingObjectCorpus<Classified<CharSequence>> loadXValCorpus(List<String[]> rows, int numFolds) throws IOException {

XValidatingObjectCorpus<Classified<CharSequence>> corpus = new XValidatingObjectCorpus<Classified<CharSequence>>(numFolds);

for (String[] row : rows) {

Classification classification = new Classification(row[ANNOTATION_OFFSET]);

Classified<CharSequence> classified = new Classified<CharSequence>(row[TEXT_OFFSET],classification);

corpus.handle(classified);

}

return corpus;

}

The constructed XValidatingObjectCorpus<E> will contain all the truth data in the form of objects of type E. In this case, we are filling the corpus with the same object used to train and evaluate in the previous recipes in this chapter, Classified<CharSequence>. This will be handy, because we will be using the objects to both train and test our classifier. The numFolds parameter specifies how many partitions of the data to make. It can be changed later.

The for loop should be familiar in that it iterates over all the annotated data and creates a Classified<CharSequence> object for each row before applying the corpus.handle() method, which adds it to the corpus. Finally, we will return the corpus. It is worth taking a look at the Javadoc for XValidatingObjectCorpus<E> if you have any questions.

Returning to the body of main(), we will permute the corpus to mix the data, get the categories, and set up BaseClassifierEvaluator<CharSequence> with a null value where we supplied a classifier in a previous recipe:

corpus.permuteCorpus(new Random(123413));

String[] categories = Util.getCategories(truthData);

boolean storeInputs = false;

BaseClassifierEvaluator<CharSequence> evaluator = new BaseClassifierEvaluator<CharSequence>(null, categories, storeInputs);

Now, we are ready to do the cross validation:

int maxCharNGram = 3;

for (int i = 0; i < numFolds; ++i) {

corpus.setFold(i);

DynamicLMClassifier<NGramBoundaryLM> classifier = DynamicLMClassifier.createNGramBoundary(categories, maxCharNGram);

System.out.println("Training on fold " + i);

corpus.visitTrain(classifier);

evaluator.setClassifier(classifier);

System.out.println("Testing on fold " + i);

corpus.visitTest(evaluator);

}

On each iteration of the for loop, we will set which fold is being used, which, in turn, will select the training and testing partition. Then, we will construct DynamicLMClassifier and train it by supplying the classifier to corpus.visitTrain(classifier). Next, we will set the evaluator's classifier to the one we just trained. The evaluator is passed to the corpus.visitTest(evaluator) method where the contained classifier is applied to the test data that it was not trained on. With four folds, 25 percent of the data will be test data at any given iteration, and 75 percent of the data will be training data. Each item will be in the test partition exactly once and in the training partition three times. The training and test partitions will never contain the same data unless there are duplicates in the data.

Once the loop has finished all iterations, we will print a confusion matrix discussed in the Evaluation of classifiers – the confusion matrix recipe:

System.out.println( Util.confusionMatrixToString(evaluator.confusionMatrix()));

This recipe introduces quite a few moving parts, namely, cross validation and a corpus object that supports it. The ObjectHandler<E> interface is also used a lot; this can be confusing to developers not familiar with the pattern. It is used to train and test the classifier. It can also be used to print the contents of the corpus. Change the contents of the for loop to visitTrain with Util.corpusPrinter:

System.out.println("Training on fold " + i);

corpus.visitTrain(Util.corpusPrinter());

corpus.visitTrain(classifier);

evaluator.setClassifier(classifier);

System.out.println("Testing on fold " + i);

corpus.visitTest(Util.corpusPrinter());

Now, you will get an output that looks like:

Training on fold 0

Malisímos los nuevos dibujitos de disney, nickelodeon, cartoon, etc, no me gustannn:n

@meeelp mas que venha um filhinho mais fofo que o próprio pai, com covinha e amando a Disney kkkkkkkkkkkkkkkkk:n

@HedyHAMIDI au quartier pas a Disney moi:n

I fully love the Disney Channel I do not care ?:e

The text is followed by : and the category. Printing the training/test folds is a good sanity check for whether the corpus is properly populated. It is also a nice glimpse into how the ObjectHandler<E> interface works—here, the source is from com/lingpipe/cookbook/Util.java:

public static ObjectHandler<Classified<CharSequence>> corpusPrinter () {

return new ObjectHandler<Classified<CharSequence>>() {

@Override

public void handle(Classified<CharSequence> e) {

System.out.println(e.toString());

}

};

}

There is not much to the returned class. There is a single handle() method that just prints the toString() method of Classified<CharSequence>. In the context of this recipe, the classifier instead invokes train() on the text and classification, and the evaluator takes the text, runs it past the classifier, and compares the result to the truth.
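The handler pattern itself is small enough to sketch without LingPipe. In the following illustration, the Handler interface, the visit method, and the Counter class are hypothetical names that only mirror the shape of the pattern: one source of data pushes each item to whichever handler it is given.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical illustration of the handler pattern; not LingPipe's ObjectHandler.
class HandlerSketch {

    // A one-method callback that receives items one at a time.
    interface Handler<E> {
        void handle(E e);
    }

    // Stand-in for a corpus: pushes every item to the supplied handler.
    static <E> void visit(List<E> corpus, Handler<E> handler) {
        for (E item : corpus) {
            handler.handle(item);
        }
    }

    // A handler that counts items instead of printing them.
    static class Counter<E> implements Handler<E> {
        int count;

        @Override
        public void handle(E e) {
            ++count;
        }
    }

    public static void main(String[] args) {
        List<String> corpus = Arrays.asList("So it goes.:e", "QUIERO VOLVER:n");

        // Same data, different behavior: first print, then count.
        visit(corpus, new Handler<String>() {
            @Override
            public void handle(String e) {
                System.out.println(e);
            }
        });
        Counter<String> counter = new Counter<String>();
        visit(corpus, counter);
        System.out.println("Items seen: " + counter.count);
    }
}
```

Swapping the handler changes what happens to the data without touching the code that walks over it, which is why a printer, a trainable classifier, and an evaluator can all be passed to the same visit methods.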

Another good experiment to run is to report performance on each fold instead of all folds. For small datasets, you will see very large variations in performance. Another worthwhile experiment is to permute the corpus 10 times and see the variations in performance that come from different partitioning of the data.

Another issue is how data is selected for evaluation. For text-processing applications, it is important to not leak information between test data and training data. Cross validation over 10 days of data will be much more realistic if each day is a fold rather than a 10-percent slice of all 10 days. The reason is that a day's data will likely be correlated, and this correlation will leak information about that day between training and testing if a day is allowed to be in both. When evaluating the final performance, always select data from after the training data epoch if possible, to better emulate production environments where the future is not known.
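One way to keep a day's data together is to build the folds from the day itself rather than from a random slice. The following is a minimal sketch in plain Java; the DayFoldSketch class and the two-column row layout (day, then text) are hypothetical and are not the layout of the cookbook's .csv files.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical illustration of grouping data into one fold per day; not LingPipe code.
class DayFoldSketch {

    // Each row is assumed to be {day, text}. Rows from the same day share a fold.
    static Map<String, List<String>> foldsByDay(List<String[]> rows) {
        Map<String, List<String>> folds = new LinkedHashMap<String, List<String>>();
        for (String[] row : rows) {
            List<String> fold = folds.get(row[0]);
            if (fold == null) {
                fold = new ArrayList<String>();
                folds.put(row[0], fold);
            }
            fold.add(row[1]);
        }
        return folds;
    }

    public static void main(String[] args) {
        List<String[]> rows = new ArrayList<String[]>();
        rows.add(new String[] {"day1", "tweet a"});
        rows.add(new String[] {"day1", "tweet b"});
        rows.add(new String[] {"day2", "tweet c"});
        rows.add(new String[] {"day3", "tweet d"});

        Map<String, List<String>> folds = foldsByDay(rows);
        for (String heldOutDay : folds.keySet()) {
            int testSize = folds.get(heldOutDay).size();
            int trainSize = rows.size() - testSize;
            // A real run would train on the other days and test on the held-out day.
            System.out.println("test on " + heldOutDay + " (" + testSize
                + " items), train on " + trainSize + " items from the other days");
        }
    }
}
```

Folds built this way can differ in size, which is the price paid for never letting one day appear in both training and test data.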

We can achieve the best possible classifier performance by examining the errors and making changes to the system. There is a very bad habit among developers and machine-learning folks to not look at errors, particularly as systems mature. Just to be clear, at the end of a project, the developers responsible for tuning the classifier should be very familiar with the domain being classified, if not expert in it, because they have looked at so much data while tuning the system. If the developers cannot do a reasonable job of emulating the classifier that they are tuning, then they are not looking at enough data.

This recipe performs the most basic form of looking at what the system got wrong in the form of false positives, which are examples from training data that the classifier assigned to a category, but the correct category was something else.

Perform the following steps in order to view error categories using false positives:

This recipe extends the previous How to train and evaluate with cross validation recipe by accessing more of what the evaluation class provides. Get a command prompt and type:

java -cp lingpipe-cookbook.1.0.jar:lib/opencsv-2.4.jar:lib/lingpipe-4.1.0.jar com.lingpipe.cookbook.chapter1.ReportFalsePositivesOverXValidation

This will result in:

Training data is: data/disney_e_n.csv

reference\response

\e,n,

e 10,1,

n 6,4,

False Positives for e

Malisímos los nuevos dibujitos de disney, nickelodeon, cartoon, etc, no me gustannn : n

@meeelp mas que venha um filhinho mais fofo que o próprio pai, com covinha e amando a Disney kkkkkkkkkkkkkkkkk : n

@HedyHAMIDI au quartier pas a Disney moi : n

@greenath_ t'as de la chance d'aller a Disney putain j'y ai jamais été moi. : n

Prefiro gastar uma baba de dinheiro pra ir pra cancun doq pra Disney por exemplo : n

ES INSUPERABLE DISNEY !! QUIERO VOLVER:( : n

False Positives for n

request now "let's get tricky" by @bellathorne and @ROSHON on @radiodisney!!! just call 1-877-870-5678 or at http://t.co/cbne5yRKhQ!! <3 : e

The output starts with a confusion matrix. Then, we will see the six false positives for e, which come from the lower-left cell of the confusion matrix, listed under the category that the classifier guessed. Then, we will see the false positives for n, which is a single example. The true category is appended after the colon (:), which is helpful for classifiers that have more than two categories.

This recipe is based on the previous one, but it has its own source in com/lingpipe/cookbook/chapter1/ReportFalsePositivesOverXValidation.java. There are two differences. First, storeInputs is set to true for the evaluator:

boolean storeInputs = true;

BaseClassifierEvaluator<CharSequence> evaluator = new BaseClassifierEvaluator<CharSequence>(null, categories, storeInputs);

Second, a Util method is added to print false positives:

for (String category : categories) {

Util.printFalsePositives(category, evaluator, corpus);

}

The preceding code works by identifying a category of focus (e, or English tweets) and extracting all the false positives from the classifier evaluator. For this category, false positives are tweets that are non-English in truth, but the classifier thought they were English. The referenced Util method is as follows:

public static <E> void printFalsePositives(String category, BaseClassifierEvaluator<E> evaluator, Corpus<ObjectHandler<Classified<E>>> corpus) throws IOException {

final Map<E,Classification> truthMap = new HashMap<E,Classification>();

corpus.visitCorpus(new ObjectHandler<Classified<E>>() {

@Override

public void handle(Classified<E> data) {

truthMap.put(data.getObject(),data.getClassification());

}

});

The preceding code takes the corpus that contains all the truth data and populates Map<E,Classification> to allow for lookup of the truth annotation, given the input. If the same input exists in two categories, then this method will not be robust but will record the last example seen:

List<Classified<E>> falsePositives = evaluator.falsePositives(category);

System.out.println("False Positives for " + category);

for (Classified<E> classified : falsePositives) {

E data = classified.getObject();

Classification truthClassification = truthMap.get(data);

System.out.println(data + " : " + truthClassification.bestCategory());

}

}

The code gets the false positives from the evaluator, iterates over all of them with a lookup into the truthMap built in the preceding code, and prints out the relevant information. There are also methods to get false negatives, true positives, and true negatives in evaluator.
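The caveat about the same input appearing under two categories follows directly from how a map stores keys. The following minimal sketch shows the overwrite in plain Java; the TruthMapSketch class and its use of String pairs instead of Classified objects are hypothetical simplifications.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical illustration of the truth lookup; not the cookbook's Util code.
class TruthMapSketch {

    // Each pair is {text, truthCategory}. A later pair with the same text replaces the earlier one.
    static Map<String, String> buildTruthMap(String[][] annotated) {
        Map<String, String> truthMap = new HashMap<String, String>();
        for (String[] pair : annotated) {
            truthMap.put(pair[0], pair[1]);
        }
        return truthMap;
    }

    public static void main(String[] args) {
        String[][] annotated = {
            {"Disney", "e"},
            {"Disney", "n"}, // same text, different annotation
            {"So it goes.", "e"}
        };
        Map<String, String> truthMap = buildTruthMap(annotated);
        System.out.println("Entries: " + truthMap.size());
        System.out.println("Truth for Disney: " + truthMap.get("Disney"));
    }
}
```

If duplicate texts with conflicting annotations matter in your data, the lookup would need a key that distinguishes them, such as a row identifier.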

The ability to identify mistakes is crucial to improving performance. The advice seems obvious, but it is very common for developers to not look at mistakes. They will look at system output and make a rough estimate of whether the system is good enough; this does not result in top-performing classifiers.

The next recipe works through more evaluation metrics and their definition.

The false positive from the preceding recipe is one of four possible outcome categories, two of which are errors. All the categories and their interpretations are as follows:

For a given category X:

True positive: The classifier guessed X, and the true category is X

False positive: The classifier guessed X, but the true category is a category that is different from X

True negative: The classifier guessed a category that is different from X, and the true category is different from X

False negative: The classifier guessed a category different from X, but the true category is X

With these definitions in hand, we can define the additional common evaluation metrics as follows:

Precision for a category X is true positive / (false positive + true positive)

The degenerate case is to make one very confident guess for 100 percent precision. This minimizes the false positives but will have a horrible recall.

Recall or sensitivity for a category X is true positive / (false negative + true positive)

The degenerate case is to guess all the data as belonging to category X for 100 percent recall. This minimizes false negatives but will have horrible precision.

Specificity for a category X is true negative / (true negative + false positive)

The degenerate case is to guess that all data is not in category X.

The degenerate cases are provided to make clear what the metric is focused on. There are metrics such as F-measure that balance precision and recall, but even then, there is no inclusion of true negatives, which can be highly informative. See the Javadoc at com.aliasi.classify.PrecisionRecallEvaluation for more details on evaluation.
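As a worked example, the definitions above can be applied to category e in the confusion matrix from the Evaluation of classifiers – the confusion matrix recipe, where there are 11 true positives, 0 false negatives, 1 false positive, and 9 true negatives. The following is a minimal sketch in plain Java; the MetricsSketch class is hypothetical, and the F-measure shown is the balanced form, the harmonic mean of precision and recall.

```java
// Hypothetical illustration of the metric definitions; not LingPipe's PrecisionRecallEvaluation.
class MetricsSketch {

    // Each method returns NaN when its denominator is zero, since the division is done on doubles.
    static double precision(int truePositives, int falsePositives) {
        return truePositives / (double) (truePositives + falsePositives);
    }

    static double recall(int truePositives, int falseNegatives) {
        return truePositives / (double) (truePositives + falseNegatives);
    }

    static double specificity(int trueNegatives, int falsePositives) {
        return trueNegatives / (double) (trueNegatives + falsePositives);
    }

    // Balanced F-measure: the harmonic mean of precision and recall.
    static double fMeasure(double precision, double recall) {
        return 2.0 * precision * recall / (precision + recall);
    }

    public static void main(String[] args) {
        // Counts for category e: row e is {11, 0}, row n is {1, 9}.
        int tp = 11;
        int fn = 0;
        int fp = 1;
        int tn = 9;
        double p = precision(tp, fp);
        double r = recall(tp, fn);
        System.out.println("precision   = " + p);
        System.out.println("recall      = " + r);
        System.out.println("specificity = " + specificity(tn, fp));
        System.out.println("F-measure   = " + fMeasure(p, r));
    }
}
```

For category e, this works out to a precision of 11/12 (about 0.917), a recall of 11/11 (1.0), and a specificity of 9/10 (0.9), so the single misclassified non-English tweet costs precision and specificity but not recall.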

In our experience, most business needs map to one of the three scenarios:

High precision / high recall: The language ID needs to have both good coverage and good accuracy; otherwise, lots of stuff will go wrong. Fortunately, for distinct languages where a mistake will be costly (such as Japanese versus English or English versus Spanish), the LM classifiers perform quite well.

High precision / usable recall: Most business use cases have this shape. For example, a search engine that automatically changes a query if it is misspelled had better not make lots of mistakes. It looks pretty bad to change "Breck Baldwin" to "Brad Baldwin", but no one really notices if "Bradd Baldwin" is not corrected.

High recall / usable precision: Intelligence analysis looking for a particular needle in a haystack will tolerate a lot of false positives in support of finding the intended target. This was an early lesson from our DARPA days.

In a deployment situation, trained classifiers and other Java objects with complex configuration or training are best accessed by deserializing them from disk. The first recipe did exactly this by reading in LMClassifier from the disk with AbstractExternalizable. This recipe shows how to get the language ID classifier written out to the disk for later use.

Serializing DynamicLMClassifier and reading it back in results in a different class, which is an instance of LMClassifier that performs the same as the one just trained except that it can no longer accept training instances because counts have been converted to log probabilities and the backoff smoothing arcs are stored in suffix trees. The resulting classifier is much faster.

In general, most of the LingPipe classifiers, language models, and hidden Marcov models (HMM) implement both the Serializable and Compilable interfaces.

We will work with the same data as we did in the Viewing error categories – false positives recipe.

Perform the following steps to serialize a LingPipe object:

Go to the command prompt and type:

java -cp lingpipe-cookbook.1.0.jar:lib/opencsv-2.4.jar:lib/lingpipe-4.1.0.jar com.lingpipe.cookbook.chapter1.TrainAndWriteClassifierToDisk

The program will respond with the default file values for input/output:

Training on data/disney_e_n.csv
Wrote model to models/my_disney_e_n.LMClassifier

Test if the model works by invoking the Deserializing and running a classifier recipe while specifying the classifier file to be read in:

java -cp lingpipe-cookbook.1.0.jar:lib/lingpipe-4.1.0.jar com.lingpipe.cookbook.chapter1.LoadClassifierRunOnCommandLine models/my_disney_e_n.LMClassifier

The usual interaction follows:

Type a string to be classified. Empty string to quit.
The rain in Spain
Best Category: e

The contents of main() from src/com/lingpipe/cookbook/chapter1/TrainAndWriteClassifierToDisk.java start with the materials covered in the previous recipes of the chapter to read the .csv files, set up a classifier, and train it. Please refer back to those recipes if any code is unclear.

The new bit for this recipe happens when we invoke the AbstractExternalizable.compileTo() method with the DynamicLMClassifier, which compiles the model and writes it to a file. This method is used like the writeExternal method from Java's Externalizable interface:

AbstractExternalizable.compileTo(classifier,outFile);

That is all you need to know to write a classifier to disk.

There is an alternate way to serialize that is not tied to the File class, so it works with other kinds of output destinations. It writes the classifier to an ObjectOutputStream:

FileOutputStream fos = new FileOutputStream(outFile);
ObjectOutputStream oos = new ObjectOutputStream(fos);
classifier.compileTo(oos);
oos.close();
fos.close();
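Because the stream-based variant only needs an ObjectOutputStream, the destination can be anything that offers an OutputStream. The following sketch illustrates the idea with plain Java serialization into an in-memory byte array. It does not use LingPipe: TinyModel is a hypothetical stand-in for a trained model, and writeObject() takes the place that compileTo() has in the recipe.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.io.Serializable;
import java.io.UncheckedIOException;

final class StreamRoundTrip {
    // Hypothetical stand-in for a trained model; not a LingPipe class.
    static final class TinyModel implements Serializable {
        private static final long serialVersionUID = 1L;
        final String bestCategory;

        TinyModel(String bestCategory) {
            this.bestCategory = bestCategory;
        }
    }

    // Serializes to memory; a socket or database blob stream would work the same way.
    static byte[] write(Serializable object) {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (ObjectOutputStream oos = new ObjectOutputStream(bytes)) {
            oos.writeObject(object);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return bytes.toByteArray();
    }

    static Object read(byte[] data) {
        try (ObjectInputStream ois = new ObjectInputStream(new ByteArrayInputStream(data))) {
            return ois.readObject();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } catch (ClassNotFoundException e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        TinyModel restored = (TinyModel) read(write(new TinyModel("e")));
        System.out.println("Best Category: " + restored.bestCategory);
    }
}
```

The try-with-resources blocks close the streams even when an exception is thrown, which the explicit close() calls in the recipe do not guarantee.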

Additionally, a DynamicLMClassifier can be compiled in memory, without involving the disk, with the static AbstractExternalizable.compile() method. It is used in the following fashion:

@SuppressWarnings("unchecked")
LMClassifier<LanguageModel, MultivariateDistribution> compiledLM = (LMClassifier<LanguageModel, MultivariateDistribution>) AbstractExternalizable.compile(classifier);

The compiled version is a lot faster but does not allow further training instances.

It often happens that the data has duplicates or near duplicates that should be filtered. Twitter data has lots of duplicates that can be quite frustrating to work with even with the -filter:retweets option available for the search API. A quick way to see this is to sort the text in the spreadsheet, and tweets with common prefixes will be neighbors:

Duplicate tweets that share a prefix

This sort only reveals shared prefixes; many more duplicates don't share a prefix. This recipe will allow you to find other sources of overlap and to set the threshold at which duplicates are removed.

Perform the following steps to eliminate near duplicates with the Jaccard distance:

Type in the command prompt:

java -cp lingpipe-cookbook.1.0.jar:lib/opencsv-2.4.jar:lib/lingpipe-4.1.0.jar com.lingpipe.cookbook.chapter1.DeduplicateCsvData

You will be overwhelmed with a torrent of text:

Tweets too close, proximity 1.00
    @britneyspears do you ever miss the Disney days? and iilysm please follow me. kiss from Turkey #AskBritneyJean ??
    @britneyspears do you ever miss the Disney days? and iilysm please follow me. kiss from Turkey #AskBritneyJean ???
Tweets too close, proximity 0.50
    Sooo, I want to have a Disney Princess movie night....
    I just want to be a Disney Princess

Two example outputs are shown. The first is a near-exact duplicate that differs only in a final ?. It has a proximity of 1.0 because the tokenizer used here ignores punctuation. The next example has a proximity of 0.50; the tweets are different but have a good deal of word overlap. Note that the second case does not share a prefix.

This recipe jumps a bit ahead of the sequence, using a tokenizer to drive the deduplication process. It is here because the following recipe, for sentiment, really needs deduplicated data to work well. Chapter 2, Finding and Working with Words, covers tokenization in detail.

The source for main() is:

String inputPath = args.length > 0 ? args[0] : "data/disney.csv";
String outputPath = args.length > 1 ? args[1] : "data/disneyDeduped.csv";
List<String[]> data = Util.readCsvRemoveHeader(new File(inputPath));
System.out.println(data.size());

There is nothing new in the preceding code snippet, but the following code snippet has TokenizerFactory:

TokenizerFactory tokenizerFactory = new RegExTokenizerFactory("\\w+");

Briefly, the tokenizer breaks the text into text sequences defined by matching the regular expression \w+ (the first \ escapes the second one in the preceding code; it is a Java thing). It matches contiguous word characters. The string "Hi, you here??" produces tokens "Hi", "you", and "here". The punctuation is ignored.
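The effect of the \w+ pattern can be checked without LingPipe. This is a minimal sketch using java.util.regex directly, so it only approximates what RegExTokenizerFactory does with the same pattern; the WordTokens class and tokenize() method are made-up names.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

final class WordTokens {
    // In Java source the backslash must itself be escaped, hence "\\w+".
    private static final Pattern WORD = Pattern.compile("\\w+");

    // Returns every maximal run of word characters, in order of appearance.
    static List<String> tokenize(String text) {
        List<String> tokens = new ArrayList<>();
        Matcher matcher = WORD.matcher(text);
        while (matcher.find()) {
            tokens.add(matcher.group());
        }
        return tokens;
    }

    public static void main(String[] args) {
        System.out.println(tokenize("Hi, you here??")); // prints [Hi, you, here]
    }
}
```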

Next, Util.filterJaccard is called with a cutoff of .5, which roughly eliminates tweets that share half or more of their words with another tweet. Then, the filtered data is written to disk:

double cutoff = .5;
List<String[]> dedupedData = Util.filterJaccard(data, tokenizerFactory, cutoff);
System.out.println(dedupedData.size());
Util.writeCsvAddHeader(dedupedData, new File(outputPath));
}

The Util.filterJaccard() method's source is as follows:

public static List<String[]> filterJaccard(List<String[]> texts, TokenizerFactory tokFactory, double cutoff) {
    JaccardDistance jaccardD = new JaccardDistance(tokFactory);

In the preceding snippet, a JaccardDistance is constructed with a tokenizer factory. The Jaccard proximity of two strings is the number of distinct tokens they share (the intersection) divided by the number of distinct tokens found in either string (the union); the Jaccard distance is one minus the proximity. Look at the Javadoc for more information.
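To make the intersection-over-union computation concrete, here is a minimal stand-alone sketch. It is not the LingPipe JaccardDistance class: JaccardSketch is a made-up name, tokens come from a plain \w+ regular expression, and the treatment of two empty texts as identical is an assumption of this sketch.

```java
import java.util.HashSet;
import java.util.Set;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

final class JaccardSketch {
    private static final Pattern WORD = Pattern.compile("\\w+");

    // Distinct tokens of a text; duplicates within a text are counted once.
    static Set<String> tokenSet(String text) {
        Set<String> tokens = new HashSet<>();
        Matcher matcher = WORD.matcher(text);
        while (matcher.find()) {
            tokens.add(matcher.group());
        }
        return tokens;
    }

    // |intersection| / |union| of the two token sets.
    static double proximity(String a, String b) {
        Set<String> tokensA = tokenSet(a);
        Set<String> tokensB = tokenSet(b);
        if (tokensA.isEmpty() && tokensB.isEmpty()) {
            return 1.0; // assumption: two texts without tokens count as identical
        }
        Set<String> shared = new HashSet<>(tokensA);
        shared.retainAll(tokensB);
        int unionSize = tokensA.size() + tokensB.size() - shared.size();
        return (double) shared.size() / unionSize;
    }

    static double distance(String a, String b) {
        return 1.0 - proximity(a, b);
    }

    public static void main(String[] args) {
        String first = "Sooo, I want to have a Disney Princess movie night....";
        String second = "I just want to be a Disney Princess";
        // 6 shared tokens out of 12 distinct tokens overall
        System.out.printf("proximity %.2f%n", proximity(first, second));
    }
}
```

For the two Disney Princess tweets from the output, the token sets have 10 and 8 members with 6 in common, so the union has 12 members and the proximity is 6/12 = 0.50, matching the value the recipe reports.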

The nested for loops in the following example compare each row with every later row until a proximity at or above the cutoff is found or until all data has been looked at. Do not use this for large datasets because it is an O(n²) algorithm. If no comparison reaches the cutoff, the row is added to filteredTexts:

    List<String[]> filteredTexts = new ArrayList<String[]>();
    for (int i = 0; i < texts.size(); ++i) {
        String targetText = texts.get(i)[TEXT_OFFSET];
        boolean addText = true;
        for (int j = i + 1; j < texts.size(); ++j ) {
            String comparisionText = texts.get(j)[TEXT_OFFSET];
            double proximity = jaccardD.proximity(targetText,comparisionText);
            if (proximity >= cutoff) {
                addText = false;
                System.out.printf(" Tweets too close, proximity %.2f\n", proximity);
                System.out.println("\t" + targetText);
                System.out.println("\t" + comparisionText);
                break;
            }
        }
        if (addText) {
            filteredTexts.add(texts.get(i));
        }
    }
    return filteredTexts;
}

There are much more efficient ways to filter the texts, at the cost of extra complexity. A simple reverse-word lookup index that computes an initial covering set will be vastly more efficient; search for shingling-based text lookup to find O(n) to O(n log(n)) approaches.
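One way to read the reverse-word lookup idea is sketched below. It is an illustration under stated assumptions, not the cookbook's Util code: IndexedDedup and its methods are made-up names, texts are plain strings rather than CSV rows, the cutoff must be greater than zero, and a text without tokens is never treated as a duplicate. Two texts can only reach a positive proximity if they share a token, so each row is compared only against later rows found through the index.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

final class IndexedDedup {
    private static final Pattern WORD = Pattern.compile("\\w+");

    static Set<String> tokenSet(String text) {
        Set<String> tokens = new HashSet<>();
        Matcher matcher = WORD.matcher(text);
        while (matcher.find()) {
            tokens.add(matcher.group());
        }
        return tokens;
    }

    static double proximity(Set<String> a, Set<String> b) {
        if (a.isEmpty() || b.isEmpty()) {
            return 0.0; // assumption: token-free texts are never duplicates
        }
        Set<String> shared = new HashSet<>(a);
        shared.retainAll(b);
        return (double) shared.size() / (a.size() + b.size() - shared.size());
    }

    static List<String> filter(List<String> texts, double cutoff) {
        if (cutoff <= 0.0) {
            throw new IllegalArgumentException("cutoff must be greater than 0");
        }
        // Build the reverse index: token -> rows that contain it.
        List<Set<String>> tokenSets = new ArrayList<>();
        Map<String, List<Integer>> index = new HashMap<>();
        for (int i = 0; i < texts.size(); ++i) {
            Set<String> tokens = tokenSet(texts.get(i));
            tokenSets.add(tokens);
            for (String token : tokens) {
                index.computeIfAbsent(token, k -> new ArrayList<>()).add(i);
            }
        }
        List<String> kept = new ArrayList<>();
        for (int i = 0; i < texts.size(); ++i) {
            // Candidates are later rows that share at least one token with row i.
            Set<Integer> candidates = new HashSet<>();
            for (String token : tokenSets.get(i)) {
                for (int j : index.get(token)) {
                    if (j > i) {
                        candidates.add(j);
                    }
                }
            }
            boolean keep = true;
            for (int j : candidates) {
                if (proximity(tokenSets.get(i), tokenSets.get(j)) >= cutoff) {
                    keep = false; // a later near duplicate exists, so drop row i
                    break;
                }
            }
            if (keep) {
                kept.add(texts.get(i));
            }
        }
        return kept;
    }

    public static void main(String[] args) {
        List<String> texts = Arrays.asList("I love Disney", "I love Disney !!", "Something else entirely");
        System.out.println(filter(texts, 0.5));
    }
}
```

The index only helps when most tokens are rare. A token that appears in nearly every row, such as the search term itself, makes every row a candidate and brings back the quadratic behavior, which is why the shingling approaches mentioned above are worth a look for large collections.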

Setting the threshold can be a bit tricky, but looking at a sample of the filtered pairs should make the appropriate cutoff fairly clear for your needs.

Sentiment has become the classic business-oriented classification task—what executive can resist an ability to know on a constant basis what positive and negative things are being said about their business? Sentiment classifiers offer this capability by taking text data and classifying it into positive and negative categories. This recipe addresses the process of creating a simple sentiment classifier, but more generally, it addresses how to create classifiers for novel categories. It is also a 3-way classifier, unlike the 2-way classifiers we have been working with.

Our first sentiment system was built for BuzzMetrics in 2004 using language model classifiers. We tend to use logistic regression classifiers now, because they tend to perform better. Chapter 3, Advanced Classifiers, covers logistic regression classifiers.

The previous recipes focused on language ID—how do we shift the classifier over to the very different task of sentiment? This will be much simpler than one might think—all that needs to change is the training data, believe it or not. The steps are as follows:

Use the Twitter search recipe to download tweets about a topic that has positive/negative tweets about it. A search on disney is our example, but feel free to branch out. This recipe will work with the supplied CSV file, data/disneySentiment_annot.csv.

Load the created data/disneySentiment_annot.csv file into your spreadsheet of choice. There are already some annotations done.

As in the Evaluation of classifiers – the confusion matrix recipe, annotate the true class column for one of the three categories:

The p annotation stands for positive. The example is "Oh well, I love Disney movies. #hateonit".

The n annotation stands for negative. The example is "Disney really messed me up yo, this is not the way things are suppose to be".

The o annotation stands for other. The example is "Update on Downtown Disney. http://t.co/SE39z73vnw".

Leave blank tweets that are not in English, irrelevant, both positive and negative, or you are unsure about.

Keep annotating until the smallest category has at least 10 examples.

Save the annotations.

Run the previous recipe for cross validation, providing the annotated file's name:

java -cp lingpipe-cookbook.1.0.jar:lib/lingpipe-4.1.0.jar:lib/opencsv-2.4.jar com.lingpipe.cookbook.chapter1.RunXValidate data/disneyDedupedSentiment.csv

The system will then run a four-fold cross validation and print a confusion matrix. Look at the How to train and evaluate with cross validation recipe if you need further explanation:

Training on fold 0
Testing on fold 0
Training on fold 1
Testing on fold 1
Training on fold 2
Testing on fold 2
Training on fold 3
Testing on fold 3
reference\response
          \p,n,o,
         p 14,0,10,
         n 6,0,4,
         o 7,1,37,
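Per-category precision and recall can be read off the printed matrix. The sketch below is a minimal illustration that assumes rows are reference categories and columns are responses, in the order p, n, o, as the header line suggests. ConfusionSummary and its methods are made-up names rather than LingPipe classes.

```java
final class ConfusionSummary {
    static final String[] CATEGORIES = {"p", "n", "o"};

    // Rows are the reference (true) category, columns the classifier's response.
    static final int[][] MATRIX = {
        {14, 0, 10},
        {6, 0, 4},
        {7, 1, 37},
    };

    // Share of items truly in category k that the classifier found.
    static double recall(int[][] m, int k) {
        int rowSum = 0;
        for (int response = 0; response < m[k].length; ++response) {
            rowSum += m[k][response];
        }
        return rowSum == 0 ? 0.0 : (double) m[k][k] / rowSum;
    }

    // Share of items labeled k by the classifier that truly are k.
    static double precision(int[][] m, int k) {
        int columnSum = 0;
        for (int reference = 0; reference < m.length; ++reference) {
            columnSum += m[reference][k];
        }
        return columnSum == 0 ? 0.0 : (double) m[k][k] / columnSum;
    }

    static double accuracy(int[][] m) {
        int correct = 0;
        int total = 0;
        for (int i = 0; i < m.length; ++i) {
            for (int j = 0; j < m[i].length; ++j) {
                total += m[i][j];
                if (i == j) {
                    correct += m[i][j];
                }
            }
        }
        return total == 0 ? 0.0 : (double) correct / total;
    }

    public static void main(String[] args) {
        for (int k = 0; k < CATEGORIES.length; ++k) {
            System.out.printf("%s precision=%.3f recall=%.3f%n",
                    CATEGORIES[k], precision(MATRIX, k), recall(MATRIX, k));
        }
        System.out.printf("accuracy=%.3f%n", accuracy(MATRIX));
    }
}
```

In this run none of the 10 negative tweets is recognized as negative, so recall for n is zero. That is a hint that the smallest category needs more training examples.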

That's it! Classifiers are entirely dependent on training data for what they classify. More sophisticated techniques will bring richer features into the mix than character n-grams, but ultimately, the labels imposed by training data are the knowledge being imparted to the classifier. Depending on your view, the underlying technology is magical or astoundingly simple-minded.

Most developers are surprised that the only difference between language ID and sentiment is the labeling applied to the data for training. The language model classifier is applying an individual language model for each category and also noting the marginal distribution of the categories in the estimates.
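The idea of one language model per category combined with the category distribution can be sketched in a few lines. This toy is deliberately much simpler than LingPipe's implementation: it uses single-character counts with add-one smoothing instead of character n-grams with backoff, and ToyCharClassifier and its methods are made-up names.

```java
import java.util.HashMap;
import java.util.HashSet;
import java.util.Map;
import java.util.Set;

final class ToyCharClassifier {
    private final Map<String, Map<Character, Integer>> charCounts = new HashMap<>();
    private final Map<String, Integer> totalChars = new HashMap<>();
    private final Map<String, Integer> trainingItems = new HashMap<>();
    private final Set<Character> alphabet = new HashSet<>();
    private int totalItems = 0;

    // Each category accumulates its own character counts: one model per category.
    void train(String category, String text) {
        Map<Character, Integer> counts = charCounts.computeIfAbsent(category, k -> new HashMap<>());
        for (char c : text.toCharArray()) {
            counts.merge(c, 1, Integer::sum);
            alphabet.add(c);
        }
        totalChars.merge(category, text.length(), Integer::sum);
        trainingItems.merge(category, 1, Integer::sum);
        totalItems++;
    }

    // log P(category) + sum of log P(char | category); only valid for trained categories.
    double logScore(String category, String text) {
        double score = Math.log((double) trainingItems.get(category) / totalItems);
        Map<Character, Integer> counts = charCounts.get(category);
        int total = totalChars.get(category);
        int vocabulary = alphabet.size() + 1; // the extra slot reserves mass for unseen characters
        for (char c : text.toCharArray()) {
            score += Math.log((counts.getOrDefault(c, 0) + 1.0) / (total + vocabulary));
        }
        return score;
    }

    String bestCategory(String text) {
        String best = null;
        double bestScore = Double.NEGATIVE_INFINITY;
        for (String category : charCounts.keySet()) {
            double score = logScore(category, text);
            if (score > bestScore) {
                bestScore = score;
                best = category;
            }
        }
        return best;
    }

    public static void main(String[] args) {
        ToyCharClassifier classifier = new ToyCharClassifier();
        classifier.train("e", "the rain in spain");
        classifier.train("n", "zzz qqq xxx");
        System.out.println("Best Category: " + classifier.bestCategory("the rain"));
    }
}
```

Nothing in the code knows whether the categories are languages or sentiments. Swapping the labels on the training calls is the only change needed, which is the point the paragraph above makes.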

Classifiers are pretty dumb but very useful if they are not expected to work outside their capabilities. Language ID works great as a classification problem because the observed events, the words and characters of a language, are tightly tied to the classification being done. Sentiment is more difficult because the observed events are exactly the same as for language ID but are less strongly associated with the end classification. For example, the phrase "I love" is a good predictor of the sentence being English but not as clear a predictor of whether the sentiment is positive, negative, or other. If the tweet is "I love Disney", then we have a positive statement. If the tweet is "I love Disney, not", then it is negative. The complexities of sentiment and other more complex phenomena tend to be addressed in the following ways:

Create more training data. Even relatively dumb techniques such as language model classifiers can perform very well given enough data. Humanity is just not that creative in ways to gripe about, or praise, something. The Train a little, learn a little – active learning recipe of Chapter 3, Advanced Classifiers, presents a clever way to do this.

Use fancier classifiers that in turn use fancier features (observations) about the data to get the job done. Look at the logistic regression recipes for more information. For the negation case, a feature that looked for a negative phrase in the tweet might help. This could get arbitrarily sophisticated.
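As a rough illustration of the second option, the sketch below builds a feature map that contains token counts plus a flag that fires when a negation cue is present. Everything in it is hypothetical: the NegationFeature class, the feature names, and the tiny cue list are made up for this example, and the map is plain Java rather than a LingPipe feature extractor.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.HashSet;
import java.util.Locale;
import java.util.Map;
import java.util.Set;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

final class NegationFeature {
    private static final Pattern WORD = Pattern.compile("\\w+");

    // Hypothetical cue list; a real system would need a much richer one.
    private static final Set<String> NEGATION_CUES =
            new HashSet<>(Arrays.asList("not", "no", "never"));

    // Token counts plus a HAS_NEGATION flag, as a feature-name -> value map.
    static Map<String, Double> features(String text) {
        Map<String, Double> features = new HashMap<>();
        Matcher matcher = WORD.matcher(text.toLowerCase(Locale.ROOT));
        while (matcher.find()) {
            String token = matcher.group();
            features.merge("TOK_" + token, 1.0, Double::sum);
            if (NEGATION_CUES.contains(token)) {
                features.put("HAS_NEGATION", 1.0);
            }
        }
        return features;
    }

    public static void main(String[] args) {
        System.out.println(features("I love Disney").containsKey("HAS_NEGATION"));
        System.out.println(features("I love Disney, not").containsKey("HAS_NEGATION"));
    }
}
```

A flag like this gives a feature-based classifier something to separate "I love Disney" from "I love Disney, not", although it says nothing about what is being negated.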

Note that a more appropriate way to take on the sentiment problem can be to create a binary classifier for positive and not positive and a binary classifier for negative and not negative. The classifiers will have separate training data and will allow for a tweet to be both positive and negative.

Classifiers form the foundations of many industrial NLP problems. This recipe goes through the process of encoding some common problems into a classification-based solution. We will pull from real-world examples that we have built whenever possible. You can think of them as mini recipes.