Welcome to the world of Performance Monitoring and Tuning with SQL Server 2012!

Let's assume that you are a database administrator in your organization. What if, one day, one of your colleagues from the IT department calls you and complains that the production database server has abruptly started to run very slowly and that applications accessing the production database are not responding the way they should? The issue needs immediate attention, and you are required to investigate and fix it in a timely manner. What will be your approach to the problem? How will you analyze the situation and identify where the problem is? What actions will you take to resolve a particular problem once it is recognized?

Installing and upgrading database servers, managing and maintaining database servers, managing database security, implementing a disaster recovery plan, capacity planning, managing high availability of databases, and performance tuning of databases and SQL Server are some of the responsibilities of a DBA. Amongst these, performance tuning of the database server is one of the prime responsibilities. The most common reason is that companies offering IT services often sign Service Level Agreements (SLAs), under which they are committed to provide a certain level of service and uptime. Any downtime beyond what the SLAs allow can cost them money or business. Even companies that are not bound by SLAs might lose business because of poor software systems caused by poorly performing database systems. This is one of the reasons why skilled DBAs are required to keep database performance up to the mark by monitoring and tuning it.

In a database-centric application environment, it is very common for a DBA to face database-related performance issues at different levels. By different levels, we mean that a performance problem can be found at the query level, database level, server level, or application level. There can be a number of reasons for a database-centric application to perform poorly. The troubleshooting skills and performance tuning expertise of a DBA are tested in recognizing the factors behind the performance degradation and taking the necessary corrective steps.

The first step towards performance tuning is monitoring. On a data platform, monitoring is the process of observing the system in order to identify and analyze what is happening in it. Until you monitor something, you can't know for sure what and where the problem is. Until you know what and where the problem is, you can't analyze it. And until you can analyze the problem, you can't solve it! This also means that unless you understand performance monitoring, you cannot truly master performance tuning. Thus, performance tuning always comes after performance monitoring. This is the reason why we have a few opening chapters that concentrate specifically on performance monitoring.

The troublesome situation described earlier needs thorough monitoring and systematic analysis in order to identify the root problem accurately before it can be solved.

SQL Server Profiler is the most common, yet powerful, tool for monitoring and auditing an instance of SQL Server. By using this tool, a DBA is able to solve a large number of different types of database performance issues, whether it is a query issue, an index issue, a locking issue, or a database or server configuration issue. It is a tool that every DBA must know. So, SQL Server Profiler will be the subject of this first chapter.

If you have never worked with SQL Server Profiler, this recipe will teach you how to create and start your first SQL Trace. There is some detailed information on SQL Trace in the There's more... section of this recipe. It covers information that will help you master the core concepts of SQL Trace, and thus SQL Server Profiler, and it will make it easier to follow the recipes in the remaining chapters that employ SQL Trace. There are no major documented changes in SQL Server Profiler 2012. In SQL Server 2012, the architecture and functionality of SQL Server Profiler are almost identical to those of SQL Server 2008.

In this recipe, we will create our first trace with SQL Server Profiler. The following are the prerequisites that you should fulfil:

An instance of SQL Server 2012 Developer or Enterprise Evaluation edition.

An SQL Server Login account with administrative rights.

Sample AdventureWorks2012 database on the instance of SQL Server. For more details on how to install AdventureWorks2012 database, please refer to the Introduction section of this book.

To create a new trace, follow the steps provided here.

Start SQL Server Profiler. To start SQL Server Profiler, navigate through Start | All Programs | Microsoft SQL Server 2012 Program Group | Performance Tools | SQL Server Profiler.

Select New Trace... from the File menu. In the Connect to Server dialog box, provide connection details of SQL Server hosting AdventureWorks2012 database and click on Connect.

In the General tab of the Trace Properties dialog box, specify CreatingTraceWorkload as the trace name. Use the Standard (default) trace template for the Use the template: option. Check the Save to file: checkbox, specify a path and file name in the Save As dialog box, and then click on Save.

Keep Enable file rollover checked and leave Set maximum file size (MB): at its default value, that is, 128. The following screenshot shows the General tab of the Trace Properties dialog box:

Tip

In the Trace Properties dialog box, there is a checkbox option in the General tab with the caption Server processes trace data, to specify whether trace data should be processed on the server. If not checked, trace data is processed at the client side.

When trace data is processed at the client side, it is possible for some events to be missed if the server load is high. If this option is checked, trace data is processed on the server and all the events included in the trace definition are guaranteed to be captured. However, this guarantee comes with a performance penalty, because processing trace data on the server has an impact on the performance of SQL Server; hence, enabling this option is not recommended on a production server.

Also, running SQL Server Profiler on the production server itself should be avoided, because SQL Server Profiler consumes resources. Instead, you should run SQL Server Profiler from a client computer and connect it to your SQL Server from there.

Click on the Events Selection tab. On this screen, the events that are predefined for the Standard (default) trace template are selected and shown in a grid. Check the Show all events checkbox to show all events.

Navigate through the Events list until you find the Stored Procedures event category. Expand the Stored Procedures event category if it is collapsed. Uncheck the checkbox for the RPC:Completed event and check the checkbox for the SP:Completed event. Uncheck the Show all events checkbox to show only the selected events. The screen should now look as shown in the following screenshot:

Click on the Run button to start the trace.

Now open SQL Server Management Studio and establish a connection to the same SQL Server.

In the query window, type the sample T-SQL statements shown in the following script and then execute them by pressing the F5 key:

    USE AdventureWorks2012
    GO
    SELECT DB_ID()
    GO
    EXECUTE sp_helpdb
    GO
    SELECT
        P.FirstName + ' ' + P.LastName AS EmployeeName
        ,E.JobTitle
        ,E.BirthDate
        ,E.Gender
        ,E.BirthDate
    FROM HumanResources.Employee AS E
    INNER JOIN Person.Person AS P
        ON E.BusinessEntityID = P.BusinessEntityID
    GO

Now switch to the SQL Server Profiler window and stop the trace by clicking the Stop selected trace button in the toolbar. Observe the events captured in the trace. The following screenshot shows the captured events that are displayed in SQL Server Profiler:

We started to configure a trace by setting a few trace properties. To demonstrate how we can use one of the in-built trace templates to get a quick start, we used the default trace template Standard (default) in this example. When this template is used, the following events are selected by default:

Audit Login

Audit Logout

ExistingConnection

RPC:Completed

SQL:BatchCompleted

SQL:BatchStarting

Note

You may notice some TextData appearing multiple times in a trace for a single execution of a T-SQL statement. For instance, in the previous example, you will notice two events for the SELECT DB_ID() statement even though we executed it only once. These two entries do not represent two executions of the statement. Rather, they represent two different, related events associated with one single execution of the statement. For example, the events SQL:BatchStarting and SQL:BatchCompleted are both raised for a single execution of the batch containing the SELECT DB_ID() statement, and they both show the same T-SQL command in the TextData data column. Which entries you see depends upon the events you have selected in the trace definition.

In the Trace Properties dialog box, we set the maximum file size for our trace to 128 MB. The Enable file rollover option was also enabled by default. Enabling this option is helpful while working with a large amount of trace data.

When a large amount of event data is captured, the trace file can grow very quickly and become very large. Enabling the Enable file rollover option prevents a single trace file from becoming very large by limiting it to the maximum file size specified. When the file reaches the maximum file size, SQL Server creates a new rollover file for the same trace, with the same name followed by an incremental number as a suffix. Thus, when this option is enabled and the size of the trace data is greater than the maximum file size, we have multiple trace files for the same trace.

In this example, we are saving our trace file as C:\MyTraces\CreatingTraceWorkload.trc. A trace can also be started without saving the trace data. If a trace is started in this way, without enabling the Save to file: checkbox, SQL Server keeps the captured data in a queue temporarily. The unsaved trace data can still be saved later, after the required data has been gathered, with the Save or Save As command from the File menu. With the Save As command, we can save the trace data in our desired format. Selecting the Trace Table... option in the Save As command prompts for the SQL Server connection details and the destination table where the trace data will be stored.

It's best to store the trace file on a disk other than the one used to store the data files and log files of SQL Server databases. Storing the trace file on the same physical disk as the database files can degrade the performance of normal I/O operations of those databases.

Tip

Configuring a trace by enabling the Save to table checkbox in the Trace Properties dialog box and saving trace data directly to a trace table is less efficient. If you want your trace data in a trace table, consider saving it to a trace file first; then export it from the trace file to a trace table by opening the trace file in SQL Server Profiler and selecting the Save As command from the File menu with the Trace Table... option. When you save a trace in a trace table, always consider saving it in a separate database.
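The file-first approach described in this tip can also be scripted. The following is a minimal sketch, not the only way to do it. It assumes that the trace file from this recipe is readable from the server at C:\MyTraces\CreatingTraceWorkload.trc and that a separate database already exists; the database name TraceRepository and the table name are hypothetical. It relies on the built-in sys.fn_trace_gettable function, which reads a trace file and returns its contents as a rowset:

```sql
-- Assumption: TraceRepository is a hypothetical database kept apart from
-- the databases being traced, and the path below is visible to the server.
USE TraceRepository;
GO

-- DEFAULT tells fn_trace_gettable to read the initial file and any
-- rollover files that belong to the same trace.
SELECT *
INTO dbo.CreatingTraceWorkload
FROM sys.fn_trace_gettable(N'C:\MyTraces\CreatingTraceWorkload.trc', DEFAULT);
GO
```

Note that the path is resolved on the machine running SQL Server, not on the client where SQL Server Profiler was running.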

The Events Selection tab of the Trace Properties dialog box displays only the selected events and does not show all events by default. So, we checked the Show all events option to list all the available events. Because we did not want to capture the RPC:Completed event, we excluded it by unchecking its checkbox in the event list, and we included the SP:Completed event under the Stored Procedures event category.

Once we finished configuring our trace, the trace was started. To demonstrate how the events are captured, we produced some events by executing a few T-SQL statements from another connection through SQL Server Management Studio.

In the final figure, we can see the trace data that is produced by the events included in trace definition. Look at the trace data that we captured. By looking at the values in different data columns, we can learn many different things. For example, for a given trace, by examining LoginName, TextData, and HostName we can tell who is running which query and from which machine. By examining StartTime and EndTime data columns we can determine when a particular query was executed and when it finished its execution.
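The same who, what, where, and when questions can be asked of a saved trace file with a query. The following sketch assumes the trace file path used in this recipe and that the file is readable from the server; it maps the numeric EventClass value to its name through the sys.trace_events catalog view:

```sql
-- Who ran which statement, from which machine, and when.
SELECT
    te.name AS EventClassName,
    t.LoginName,
    t.HostName,
    t.StartTime,
    t.EndTime,
    CAST(t.TextData AS NVARCHAR(MAX)) AS TextData
FROM sys.fn_trace_gettable(N'C:\MyTraces\CreatingTraceWorkload.trc', DEFAULT) AS t
INNER JOIN sys.trace_events AS te
    ON t.EventClass = te.trace_event_id
ORDER BY t.StartTime;
```

Columns that were not collected for a given event are returned as NULL, so the result depends on the events and data columns included in the trace definition.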

Tip

Pausing and Stopping a trace

Once a trace is started, it can be either paused or stopped. To do this, select the Run Trace, Pause Trace, and Stop Trace commands from the File menu or click on the corresponding shortcut command buttons on standard toolbar.

Pausing and resuming a trace: When a trace is paused, event data temporarily stops being captured. Once a trace is paused, it can be resumed by starting it again. Resuming a paused trace continues to capture event data without wiping out any previously captured trace data.

Stopping and restarting a trace: When a trace is stopped, event data stops being captured. If a trace is stopped, it can be restarted by starting it again. Restarting a stopped trace starts capturing event data again, but any previously captured trace data is lost.

Remember that we cannot change the Trace Properties of a trace while it is running. To do this, we must pause or stop the trace.

This section covers some essential information on SQL Trace that you must know if you want to master SQL Tracing. It is advised that even if you are an advanced user, you do not skip this section.

Follow this section in order to have an in-depth understanding of SQL Trace and its architecture.

Understanding the SQL Trace and its architecture by knowing its related terms and concepts is a prerequisite for working with SQL Server Profiler effectively. This section discusses the basic terminologies and concepts of SQL Trace in brief.

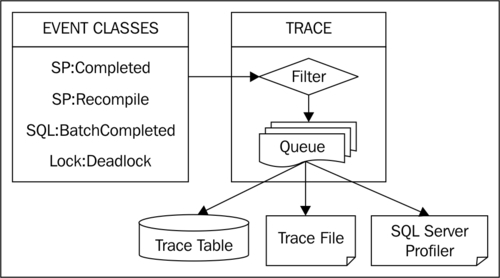

SQL Trace is an event monitoring and capturing engine that comes with SQL Server. It provides the capability to capture the database events with event data and create traces that can be used for performance analysis afterwards.

SQL Server Profiler is a graphical user interface tool for working with SQL Trace. Behind the scenes, it uses the same SQL Trace engine, but additionally provides a graphical user interface for working with traces. SQL Server Profiler provides functionality such as displaying collected event data in its graphical interface, saving traces either in a file or in an SQL Server table, opening previously saved traces, extracting T-SQL statements from a trace, and much more. Finding and analyzing long-running or costly queries, finding deadlocks and their related information, looking for which indexes are scanned, and looking for database connection requests are some of the practical applications of SQL Server Profiler.

In the context of SQL Trace terminology, an event is the occurrence of a database activity within an instance of SQL Server. The execution of an ad hoc query or T-SQL batch, a call to a stored procedure, and an attempt to log in to or log out from the database server are a few examples of activities that raise specific SQL Server events.

An event class describes a specific type of event. There are many different types of events that can occur within the database engine, and each type of event is represented by an event class. Audit Login, Audit Logout, SP:Completed, SP:Recompile, SQL:BatchCompleted, and Lock:Deadlock are some examples of event classes. To get a list of all available event classes, you can query the sys.trace_events catalog view.

An event category is a set of related event classes. Each event class belongs to a particular event category, and each event category includes a specific set of event classes. Locks, performance, scans, and stored procedures are some examples of event categories. To get a list of all available event categories, you can query the sys.trace_categories catalog view. You can join the sys.trace_events and sys.trace_categories catalog views on the category_id column to correlate the two views.
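As a small illustration of the join just described, the following query lists every event class together with the event category it belongs to. The column aliases are chosen here only for readability:

```sql
-- List each event class with its event category.
SELECT
    c.name           AS CategoryName,
    e.trace_event_id AS EventClassID,
    e.name           AS EventClassName
FROM sys.trace_events AS e
INNER JOIN sys.trace_categories AS c
    ON e.category_id = c.category_id
ORDER BY c.name, e.name;
```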

A data column is an attribute that represents a particular characteristic of an event class. For example, event class SQL:BatchCompleted can have different characteristics, such as TextData, LoginName, Duration, StartTime, EndTime, and so on, where TextData represents T-SQL statement(s) whose execution raises a particular event. These characteristics of event classes are represented by different data columns.
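Which data columns are available for a given event class can also be looked up in the catalog. The following sketch uses the sys.trace_event_bindings and sys.trace_columns catalog views to list the data columns that can be collected for SQL:BatchCompleted; the aliases are illustrative:

```sql
-- Data columns that can be collected for the SQL:BatchCompleted event class.
SELECT
    col.trace_column_id AS DataColumnID,
    col.name            AS DataColumnName,
    col.type_name       AS DataType,
    col.is_filterable   AS CanBeFiltered
FROM sys.trace_events AS e
INNER JOIN sys.trace_event_bindings AS b
    ON e.trace_event_id = b.trace_event_id
INNER JOIN sys.trace_columns AS col
    ON b.trace_column_id = col.trace_column_id
WHERE e.name = N'SQL:BatchCompleted'
ORDER BY col.name;
```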

A session that performs the activity of capturing database events and collecting the events' data is typically called a trace. Loosely, the term trace is also used by database professionals to refer to the trace data that was collected during a trace session and saved in a trace file or SQL Server table.

A set of configured settings for a trace that defines how event data should be collected or saved and which event classes or data columns should be collected as a part of trace data is called Trace properties or a Trace definition.

A filter is an optional logical condition that can be applied to a trace to limit the resulting trace data by capturing only the required trace events for which the filter condition is satisfied. For example, in a trace definition we can specify a filter condition so that SQL Trace collects event data only for a specific database by applying a filter on either DatabaseID data column or DatabaseName data column.
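The filters attached to traces that currently exist on an instance can be inspected from T-SQL. The following is a sketch that combines the sys.traces catalog view with the built-in fn_trace_getfilterinfo function; it excludes the default trace that SQL Server itself maintains. The operator codes in the comments are only a partial list:

```sql
-- Show the filter conditions of every user-defined trace on the instance.
SELECT
    t.id                  AS TraceID,
    col.name              AS FilteredColumn,
    f.logical_operator,   -- 0 = AND, 1 = OR
    f.comparison_operator, -- for example, 0 = equal, 6 = LIKE
    f.value               AS FilterValue
FROM sys.traces AS t
CROSS APPLY sys.fn_trace_getfilterinfo(t.id) AS f
INNER JOIN sys.trace_columns AS col
    ON f.columnid = col.trace_column_id
WHERE t.is_default = 0;
```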

After learning the basic SQL Trace terms and concepts, it will be easier to understand the following architectural diagram of SQL Trace:

When events are raised in the SQL Server database engine, SQL Trace captures event data only for those event classes that are included in the trace definition and that satisfy the filter conditions, if any are specified. Once the event data is captured, it is queued and then sent to its specified target location. The target location can be a trace file, a trace table, or SQL Server Profiler. Trace data can also simply be viewed in SQL Server Profiler without saving the trace.
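The traces that exist on an instance, and where each one sends its data, can be observed through the sys.traces catalog view. In the following sketch, a trace with is_rowset set to 1 is one that streams its data to a client such as SQL Server Profiler, while a trace with a non-NULL path writes to a trace file:

```sql
-- One row per trace defined on the instance, with its target and counters.
SELECT
    id,
    CASE status WHEN 1 THEN 'running' ELSE 'stopped' END AS TraceStatus,
    path,                 -- trace file path; NULL for rowset traces
    is_rowset,            -- 1 = data is streamed to a client such as Profiler
    is_rollover,          -- 1 = file rollover is enabled
    max_size,             -- maximum trace file size in MB
    event_count,
    dropped_event_count
FROM sys.traces;
```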

After understanding the basic concepts of SQL Trace, working with SQL Server Profiler and traces should be an easy task. As this is our first recipe of the book where we learn how to create a trace or workload with SQL Server Profiler, let's first discuss something about trace and workload.

We now know that a trace is a session during which events are captured and event data is collected. SQL Server supports a few formats for saving this collected trace data. We can save trace data in one of the following formats:

A trace file with the .trc extension

A trace file in XML format with the .xml extension

A trace table in an SQL Server database

A trace contains a series of events and every event has its associated event data. All the events of a trace and their event data collectively form trace data for a trace file. Data columns associated with trace events form the event data. T-SQL statements whose execution causes the events to be raised are also a part of this event data under TextData data column and are themselves included in trace data.

A workload or workload file basically contains a series of T-SQL statements. A T-SQL script is an example of a workload file. Because trace data also contains a series of T-SQL statements as a part of its event data (in the TextData column), it can also be used as a workload. Thus, a T-SQL script, a trace file (.trc or .xml), and a trace table can all be considered workloads. In other words, a trace file is also a workload file. A workload can be re-run on a database for workload or performance analysis. Usually, a workload file is provided as the input file to Database Engine Tuning Advisor (DTA) for a tuning session. You will learn more about Database Engine Tuning Advisor in Chapter 2, Tuning with Database Engine Tuning Advisor.

The following list gives brief descriptions of commonly used event classes:

Audit Login: This event occurs when a user connects and logs in to SQL Server

Audit Logout: This event occurs when a user disconnects and logs out from SQL Server

RPC:Starting: This event occurs when a Remote Procedure Call (RPC) starts executing

RPC:Completed: This event occurs when a Remote Procedure Call (RPC) completes its execution

SQL:BatchStarting: This event occurs when a T-SQL batch starts executing

SQL:StmtStarting: This event occurs when a statement inside a T-SQL batch starts executing

SQL:StmtCompleted: This event occurs when a statement inside a T-SQL batch completes its execution

SQL:BatchCompleted: This event occurs when a T-SQL batch completes its execution

SP:Starting: This event occurs when a stored procedure starts executing

SP:StmtStarting: This event occurs when a statement inside a stored procedure starts executing

SP:StmtCompleted: This event occurs when a statement inside a stored procedure completes its execution

SP:Completed: This event occurs when a stored procedure completes its execution

The following list gives brief descriptions of commonly used data columns:

ApplicationName: This data column represents the name of the client application causing a trace event to occur

DatabaseID: This data column represents the internal system assigned ID of the database for which a trace event occurs

DatabaseName: This data column represents the name of the database for which a trace event occurs

HostName: This data column represents the name of the host or computer where the client component connecting to SQL Server causes a trace event to occur

LoginName: This data column represents the name of the login under whose security context the T-SQL statement(s) that cause a trace event to occur are executed

ObjectID: This data column represents the internal system assigned ID of an object for which a trace event occurs

ObjectName: This data column represents the name of an object for which a trace event occurs

SessionLoginName: This data column represents the name of the login who initiated the connection and under whose security context a trace event occurs

SPID: This data column represents the Server Process ID or Session ID of the connection which causes a trace event to occur

Note

For a complete list of the event classes and data columns of SQL Trace with their descriptions, you can refer to the product documentation for SQL Server 2012 at msdn.microsoft.com/en-us/library/bb418432(v=sql.10).aspx.

Running a trace that is configured to collect a large number of events is not a best practice. While collecting trace data, SQL Trace itself can introduce overhead and affect the performance of SQL Server if the trace is configured to collect too much trace information. The overhead also depends on whether the trace is a server-side trace or a client-side trace. If the trace is a client-side trace run through SQL Server Profiler, the performance overhead can be greater.

Also, if a large amount of trace data is captured, the trace file quickly grows very large and it becomes difficult to find the right data in the trace. Therefore, unnecessary or irrelevant trace data should not be collected.

This is the reason why we should consider limiting the resulting trace data and capturing only the events that are of interest to us. To do this, we should identify what trace data we need to look at and, based upon that, identify the filters that should be applied to our trace.

Note

Collecting large amount of trace data can affect the performance of SQL Server. So, before creating a trace, we should identify the type of analysis we want to perform on trace information. A single trace should not be created for multiple types of analysis. For each analysis type, a separate trace should be created until and unless different types of analysis explicitly need to be combined into single trace for performing correlative analysis. For example, rather than creating a single trace that collects both scan events and lock events for index scan analysis and object locking analysis respectively, we should consider creating two separate traces; one for collecting only scan events and another for collecting lock events only.

In this recipe, we will see how to capture only those trace events that occurred for a specific database and from a specific SQL Server login.

Let's assume that the sample database AdventureWorks2012 is our production database on our production server, which also hosts other databases. One of the database users, James, complains that he faces some problems while running queries against the AdventureWorks2012 database. So, we want to trace only his session, and only for the AdventureWorks2012 database. Because other databases are hosted on the same production server and many users are accessing the AdventureWorks2012 database, we need to filter trace events based on session login name and database name in order to avoid collecting unwanted trace data.

To emulate this case practically, we need the following as prerequisites:

An instance of SQL Server 2012 Developer or Enterprise Evaluation edition

An SQL Server Login account with sysadmin rights

The sample AdventureWorks2012 database on the instance of SQL Server. For more details on how to install the AdventureWorks2012 database, please refer to the Introduction section of this book.

Two SQL Server logins named James and Peter with some permissions on the AdventureWorks2012 database.

We will be performing three main actions in this example. These are as follows:

Creating the required logins and users in the AdventureWorks2012 database (James and Peter)

Creating a trace by applying filters on the DatabaseName and SessionLoginName data columns

Executing sample queries from two separate connections belonging to James and Peter respectively and observing the trace data

Because two SQL Server logins named James and Peter with permissions on AdventureWorks2012 database are required, create them by performing the following steps:

Open SQL Server Management Studio.

Connect to the instance of SQL Server with login account having sysadmin rights.

Execute the following T-SQL script to create the logins and their corresponding users in the AdventureWorks2012 database for James and Peter:

    --Creating Login and User in
    --AdventureWorks2012 database for James
    USE [master]
    GO
    CREATE LOGIN [James] WITH PASSWORD=N'JamesPass123'
        ,DEFAULT_DATABASE=[AdventureWorks2012]
        ,CHECK_EXPIRATION=OFF
        ,CHECK_POLICY=OFF
    GO
    USE [AdventureWorks2012]
    GO
    CREATE USER [James] FOR LOGIN [James]
    GO
    ALTER ROLE [db_owner] ADD MEMBER [James]
    GO

    --Creating Login and User in
    --AdventureWorks2012 database for Peter
    USE [master]
    GO
    CREATE LOGIN [Peter] WITH PASSWORD=N'PeterPass123'
        ,DEFAULT_DATABASE=[AdventureWorks2012]
        ,CHECK_EXPIRATION=OFF
        ,CHECK_POLICY=OFF
    GO
    USE [AdventureWorks2012]
    GO
    CREATE USER [Peter] FOR LOGIN [Peter]
    GO
    ALTER ROLE [db_owner] ADD MEMBER [Peter]
    GO

Now, we will create a trace and capture only events that occur for AdventureWorks2012 database from James' session only. To do this, follow these steps:

Start SQL Server Profiler.

Select New Trace... from the File menu. In the Connect to Server dialog box, provide connection details of SQL Server hosting the AdventureWorks2012 database and click on Connect.

In the General tab of Trace Properties, enter FilteringEvents as the Trace name and select the Blank template for the Use the template: drop-down menu as shown in the following screenshot:

In Events Selection tab, check the checkbox for event class SQL:BatchCompleted under the TSQL event category as shown in following screenshot:

Click on Column Filters... button.

In the Edit Filter dialog box, select DatabaseName from the list of available data columns on the left. Expand the Like option and enter the string value AdventureWorks2012; then press the OK button as shown in the following screenshot:

In the Edit Filter dialog box, select SessionLoginName from the list of available data columns on the left. Expand the Like option and enter the string value James; then press the OK button as shown in the following screenshot:

Click on the Organize Columns... button in the Events Selection tab of the Trace Properties dialog box. Select the TextData data column and click on the Up button repeatedly to move the column up the list until it appears as the second item, directly underneath the EventClass data column. Repeat this for the data columns DatabaseName and SessionLoginName so that the final order of the data columns looks as shown in the following screenshot. Press OK in the Organize Columns dialog box:

Click on the Run button to run the trace in the Trace Properties dialog box.

Now, we will open two instances of SQL Server Management Studio one by one that connect to SQL Server with the logins James and Peter respectively and run a few queries.

Open the first instance of SSMS and connect to SQL Server with the login credentials of James. In the query window, type and execute the T-SQL statements as shown in following script:

    USE [AdventureWorks2012]
    GO
    SELECT * FROM [Sales].[Customer]
    GO
    USE [master]
    GO
    SELECT * FROM sys.databases
    GO

Open a second instance of SSMS and connect to SQL Server with the login credentials of Peter. In the query window, type and execute the same T-SQL queries as shown in previous step.

Switch to SQL Server Profiler window that is running the trace. Examine the trace data as shown in following screenshot:

In this recipe, we first created two SQL Server logins and their corresponding users in the AdventureWorks2012 database to demonstrate how to apply a trace filter based on a specific SQL Server login, so that events belonging to any login other than the one that satisfies the filter condition on SessionLoginName are not captured. We executed a T-SQL script to create logins and users for James and Peter. For each login/user, the script first creates an SQL Server login account by executing the T-SQL statement CREATE LOGIN. It then creates a user in the AdventureWorks2012 database for that login and adds the user to the db_owner database role by executing the T-SQL commands CREATE USER and ALTER ROLE respectively.

After creating the logins and users, we started a new trace in SQL Server Profiler. We selected the Blank trace template and chose the SQL:BatchCompleted event class as the only event to be captured. Then we specified filters on the DatabaseName and SessionLoginName data columns so that only the events that occur against the AdventureWorks2012 database from sessions started by James are captured. We also arranged the data columns in the Organize Columns dialog box to get a better view of the data columns we are interested in when trace data is displayed in SQL Server Profiler; this way, we do not have to scroll far to the right to see the values of TextData, DatabaseName, and SessionLoginName.

Tip

Use of DatabaseID

We can alternatively use the DatabaseID data column instead of DatabaseName to specify a filter on a particular database. For this, we must know the system assigned ID value of the database. This value can be retrieved either by calling the DB_ID('AdventureWorks2012') metadata function or by querying the sys.databases catalog view.
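Both ways of looking up the ID mentioned in this tip are shown in the following short sketch. The value returned differs from one instance to another, so it has to be looked up on the server being traced:

```sql
-- Option 1: the DB_ID metadata function
SELECT DB_ID(N'AdventureWorks2012') AS DatabaseID;

-- Option 2: the sys.databases catalog view
SELECT database_id, name
FROM sys.databases
WHERE name = N'AdventureWorks2012';
```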

After starting the trace, we opened two instances of SSMS, one connected with the login James and the other with the login Peter. In both instances of SSMS, we ran a few sample queries against the AdventureWorks2012 and master databases.

We can see the resulting trace data in the final screenshot. Notice that the events belonging to the login Peter and the events that occurred for the master database were not captured.
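The same trace definition can also be expressed as a server-side trace with the SQL Trace system stored procedures instead of the SQL Server Profiler interface. The following is a minimal sketch under stated assumptions rather than a script produced by this recipe: the output path C:\MyTraces\FilteringEvents is an assumption, the folder must already exist on the server and be writable by the SQL Server service account, and the selection of data columns is only illustrative. Event ID 12 is SQL:BatchCompleted, and column IDs 35 and 64 are DatabaseName and SessionLoginName:

```sql
DECLARE @TraceID INT;
DECLARE @MaxFileSize BIGINT = 128;  -- MB, the same default that Profiler offers
DECLARE @On BIT = 1;
DECLARE @rc INT;

-- Option 2 = TRACE_FILE_ROLLOVER. SQL Trace appends .trc to the file name.
-- Assumption: the path is hypothetical and must not point to an existing file.
EXEC @rc = sp_trace_create
    @traceid = @TraceID OUTPUT,
    @options = 2,
    @tracefile = N'C:\MyTraces\FilteringEvents',
    @maxfilesize = @MaxFileSize;

-- Event 12 = SQL:BatchCompleted; one call per data column to collect.
EXEC sp_trace_setevent @TraceID, 12, 1, @On;   -- TextData
EXEC sp_trace_setevent @TraceID, 12, 35, @On;  -- DatabaseName
EXEC sp_trace_setevent @TraceID, 12, 64, @On;  -- SessionLoginName
EXEC sp_trace_setevent @TraceID, 12, 12, @On;  -- SPID
EXEC sp_trace_setevent @TraceID, 12, 14, @On;  -- StartTime
EXEC sp_trace_setevent @TraceID, 12, 15, @On;  -- EndTime

-- Filters: logical operator 0 = AND, comparison operator 6 = LIKE.
EXEC sp_trace_setfilter @TraceID, 35, 0, 6, N'AdventureWorks2012';
EXEC sp_trace_setfilter @TraceID, 64, 0, 6, N'James';

-- Status 1 starts the trace.
EXEC sp_trace_setstatus @TraceID, 1;

-- Keep the ID: it is needed later to stop (0) and then close (2) the trace.
SELECT @TraceID AS TraceID;
```

To end the trace, call sp_trace_setstatus with the returned trace ID and a status of 0 to stop it, and then with a status of 2 to close it and remove its definition from the server.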

In a real world scenario, you may need to put filters on columns that are frequently used in trace filters to narrow down the data that you have to look at for troubleshooting. The following section lists some of data columns that are commonly used in trace filters:

ApplicationName: A filter can be specified on this data column so that only trace events raised by a particular client application are captured

DatabaseID: A filter can be specified on this data column so that only trace events raised for a specific database are captured

DatabaseName: A filter can be specified on this data column so that only trace events raised for a specific database are captured

HostName: A filter can be specified on this data column so that only trace events raised from a specific host or client machine are captured

LoginName: A filter can be specified on this data column so that only trace events raised by a specific login are captured

ObjectID: A filter can be specified on this data column so that only trace events raised for a specific object are captured

ObjectName: A filter can be specified on this data column so that only trace events raised for a specific object are captured

SessionLoginName: A filter can be specified on this data column so that only trace events raised by a specific login are captured

SPID: A filter can be specified on this data column so that only trace events raised from a specific session connection are captured

Tip

LoginName and SessionLoginName may look identical at first. However, there is a small difference between them.

By using the EXECUTE AS syntax in SQL Server, we can execute T-SQL statements in the same session under a security context other than that of the login who actually initiated the session/connection. For example, James can log in to SQL Server and run a query under the security context of Peter by using the EXECUTE AS command. In this case, the SessionLoginName data column returns James, while the LoginName data column returns Peter. In other cases, where SQL statements are not executed under a different security context, the SessionLoginName and LoginName data columns return the same value.
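The difference can be observed from T-SQL as well. The following is a minimal sketch, not part of the recipe itself. It assumes the session was opened by James and that James has been granted IMPERSONATE permission on the login Peter (or is a sysadmin), which the recipe's setup script does not do. ORIGINAL_LOGIN() reports the login that opened the session, and SUSER_SNAME() reports the login of the current security context.

```sql
-- Before impersonation: both functions report the connecting login (James).
SELECT ORIGINAL_LOGIN() AS SessionLogin, SUSER_SNAME() AS CurrentLogin;

-- Switch the security context of this session to Peter.
-- Assumption: James holds IMPERSONATE permission on the login Peter.
EXECUTE AS LOGIN = 'Peter';

-- During impersonation: SessionLogin is still James, CurrentLogin is Peter.
SELECT ORIGINAL_LOGIN() AS SessionLogin, SUSER_SNAME() AS CurrentLogin;

-- Return to the original security context.
REVERT;
```

Batches executed between EXECUTE AS and REVERT are the ones for which the two trace data columns differ.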

Quite often, you will come across database performance issues that are caused by slow-running, expensive queries. These are queries that take a long time to execute and consume more hardware resources, such as CPU, memory, and disk I/O. For instance, suppose that you work for an organization with an enterprise application environment in which a single production database supports many applications and handles a high volume of transaction activity. In such an environment, it is common to face database performance issues caused by a poorly designed application or poorly written queries.

For example, an application that processes one record at a time and makes a round trip to SQL Server for each record is poorly designed when it could process multiple records in a batch and send them to the database server in one go. Similarly, a query can be considered poorly written if it is not optimized for efficient read/write operations, generates a sub-optimal execution plan, and takes longer to execute. One common example of a poorly written query is one that processes records row by row, using a cursor to perform a task that can be accomplished by a set-based query.

When there are a few hundreds of query requests per second coming from different applications hitting the same database continuously, how would you identify those slow running and expensive queries?

Of course, you can use Dynamic Management Views or Activity Monitor to perform such an investigation. However, SQL Server Profiler gives you more insight into the execution flow of different applications because you can see the actual order and sequence of incoming query requests in real time, along with their execution statistics. This can help you identify performance issues caused by flaws in application logic.
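For comparison, a DMV-based investigation might look like the following sketch. It is not part of this recipe; it assumes you hold VIEW SERVER STATE permission, and it only covers queries whose plans are still in the plan cache. The TOP (10) cut-off and the column aliases are arbitrary choices for illustration.

```sql
-- Top cached queries by cumulative CPU time.
-- total_worker_time and total_elapsed_time are reported in microseconds.
SELECT TOP (10)
     QS.execution_count
    ,QS.total_worker_time / 1000  AS TotalCpuMs
    ,QS.total_elapsed_time / 1000 AS TotalDurationMs
    ,QS.total_logical_reads
    ,QS.total_logical_writes
    ,ST.text AS BatchText
FROM sys.dm_exec_query_stats AS QS
CROSS APPLY sys.dm_exec_sql_text(QS.sql_handle) AS ST
ORDER BY QS.total_worker_time DESC;
```

Unlike a trace, this result is aggregated per cached plan, so it does not show the order in which the requests arrived.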

Remember that the objective of this recipe is not to teach you how to write efficient queries but instead how to identify expensive queries. Thus, for demonstration purposes, we ourselves will write a few expensive queries that take longer to execute in this example.

But before you can identify these slow-running queries, you need to know what to look for in SQL Server Profiler.

When there is a problem with the logic of a query, the query may start to take longer to execute as the database grows. As a result, it holds locks on resources for a longer time, which can block other queries. Poorly written queries also produce bad execution plans and can cause a high number of read/write operations, which are expensive and take longer to execute.

So, when you are identifying long-running queries, you will mostly be looking at the duration and CPU time that a query takes and the number of read/write operations that it causes.

Therefore, in this recipe we will look at the following data columns:

CPU: Amount of CPU processing time in milliseconds taken by an event

Duration: Total amount of time in microseconds taken by an event

StartTime: Time when an event starts

EndTime: Time when an event ends

Reads: Number of data pages that SQL Server has to read for an event

Writes: Number of data pages that SQL Server has to write on disk for an event

The following are the prerequisites to do this recipe:

Follow the steps provided here for this recipe:

Start SQL Server Profiler. To start SQL Server Profiler, navigate through Start | All Programs | Microsoft SQL Server 2012 Program Group | Performance Tools | SQL Server Profiler.

Select New Trace... from the File menu. In the Connect to Server dialog box, provide connection details of SQL Server hosting the AdventureWorks2012 database and click on Connect.

In the General tab of Trace Properties, specify IdentifyingExpensiveQueries as trace name and select Blank template for the Use the template: drop-down menu.

Check the checkbox Save to file: and specify a trace file name and location in the Save As dialog box.

In the Events Selection tab, check the checkbox for event class SQL:BatchCompleted under TSQL event category.

Click on the Column Filters... button.

In the Edit Filter dialog box, select DatabaseName from the list of available data columns on the left. Expand the Like option and enter the string value AdventureWorks2012; then click on the OK button.

Click on the Organize Columns... button in the Events Selection tab of the Trace Properties dialog box. Select the TextData data column and keep clicking the Up button to move the column up the list until it appears as the second item, underneath the EventClass data column. Do the same for the CPU, Duration, StartTime, EndTime, Reads, and Writes data columns so that they appear underneath the TextData column. Press OK in the Organize Columns dialog box.

Open SQL Server Management Studio and connect to SQL Server.

Click on the Run button to run the trace in Trace Properties dialog box.

Type and execute the following T-SQL script. The script creates a stored procedure usp_CalculateOrderTotals in the AdventureWorks2012 database and a table tblSampleData by generating and inserting five million sample records:

USE [AdventureWorks2012]
GO

--Drop the stored procedure if it exists.
IF OBJECT_ID('[dbo].[usp_CalculateOrderTotals]') IS NOT NULL
    DROP PROCEDURE [dbo].[usp_CalculateOrderTotals]
GO

--Creates the stored procedure.
CREATE PROCEDURE [dbo].[usp_CalculateOrderTotals]
AS
BEGIN
    CREATE TABLE [tempdb].[dbo].[#tbl_OrderTotals]
    (
         SRNo INT IDENTITY(1,1) PRIMARY KEY CLUSTERED
        ,OrderID INT
        ,OrderDate DATETIME
        ,CustomerName NVARCHAR(200)
        ,SalesPersonName NVARCHAR(200)
        ,OrderTotal NUMERIC(38,6)
    )

    DECLARE @SalesOrderID INT
    DECLARE @OrderDate DATETIME
    DECLARE @CustomerName NVARCHAR(200)
    DECLARE @SalesPersonName NVARCHAR(200)
    DECLARE @OrderTotal NUMERIC(38,6)

    DECLARE curSalesOrders CURSOR FAST_FORWARD FOR
        SELECT
             SOH.SalesOrderID
            ,SOH.OrderDate
            ,UPPER(P2.FirstName + ' ' + P2.LastName) AS CustomerName
            ,UPPER(P1.FirstName + ' ' + P1.LastName) AS SalesPersonName
        FROM [Sales].[SalesOrderHeader] AS SOH
        LEFT OUTER JOIN [Sales].[SalesPerson] AS SP
            ON SOH.SalesPersonID = SP.BusinessEntityID
        LEFT OUTER JOIN [Sales].[Customer] AS C
            ON SOH.CustomerID = C.CustomerID
        LEFT OUTER JOIN [Person].[Person] AS P1
            ON SP.BusinessEntityID = P1.BusinessEntityID
        LEFT OUTER JOIN [Person].[Person] AS P2
            ON C.PersonID = P2.BusinessEntityID

    OPEN curSalesOrders

    FETCH NEXT FROM curSalesOrders INTO
         @SalesOrderID
        ,@OrderDate
        ,@CustomerName
        ,@SalesPersonName

    WHILE @@FETCH_STATUS=0
    BEGIN
        --Calculate the total for the current order.
        SELECT @OrderTotal=SUM(LineTotal)
        FROM [Sales].[SalesOrderDetail]
        WHERE SalesOrderID = @SalesOrderID

        INSERT INTO [tempdb].[dbo].[#tbl_OrderTotals]
        VALUES
        (
             @SalesOrderID
            ,@OrderDate
            ,@CustomerName
            ,@SalesPersonName
            ,@OrderTotal
        )

        FETCH NEXT FROM curSalesOrders INTO
             @SalesOrderID
            ,@OrderDate
            ,@CustomerName
            ,@SalesPersonName
    END

    CLOSE curSalesOrders
    DEALLOCATE curSalesOrders

    SELECT * FROM [tempdb].[dbo].[#tbl_OrderTotals]
    ORDER BY OrderID DESC
END
GO

--Executes stored procedure.
EXECUTE [dbo].[usp_CalculateOrderTotals]
GO

--Drop the table if it exists
IF OBJECT_ID('[dbo].[tblSampleData]') IS NOT NULL
    DROP TABLE [dbo].[tblSampleData]
GO

--Generate 5 million records and insert them into a table.
SELECT TOP 5000000 C1.*
INTO [dbo].[tblSampleData]
FROM sys.columns AS C1
CROSS JOIN sys.columns AS C2
CROSS JOIN sys.columns AS C3
GO

After executing the previous script, switch to SQL Server Profiler and stop the trace. Notice the CPU, Duration, StartTime, EndTime, Reads, and Writes columns. The following screenshot shows the trace after execution of the script:

Notice in the figure how some of the SQL:BatchCompleted events show high values for CPU, Duration, Reads, and Writes. These queries consume a lot of resources and are therefore expensive queries.

We started a new trace in SQL Server Profiler. We selected the Blank trace template and the SQL:BatchCompleted event class, which is the only event we wanted to capture. We then specified a trace filter on the DatabaseName data column so that only the events that occur against the AdventureWorks2012 database are captured.

We arranged the data columns in the Organize Columns dialog box so that the columns we are interested in are easier to see when trace data is displayed in SQL Server Profiler; we do not have to scroll far to the right to see the values of the TextData, CPU, Duration, StartTime, EndTime, Reads, and Writes data columns.

Tip

Trace Filter on CPU or Duration

We could also have put a trace filter on the CPU or Duration data column with the > (greater than) operator in order to capture only those events whose CPU or Duration value is higher than the value specified in the trace filter. For example, if you want to find the queries that run longer than 10 seconds, you can define a filter on the Duration column so that only those queries are captured.

After starting the trace, we opened SSMS and connected to SQL Server. We then ran the sample script against the AdventureWorks2012 database. The script creates and executes a sample stored procedure named [AdventureWorks2012].[dbo].[usp_CalculateOrderTotals] that loops through a cursor to calculate the total for each order and inserts it in a temporary table. Looking at the CPU and Duration data columns, we can see that the stored procedure took about six seconds to execute. The Reads data column also has a high value, which indicates that SQL Server had to read 296166 data pages to run this stored procedure. The higher the read and write counts, the slower the query tends to be. When the stored procedure [AdventureWorks2012].[dbo].[usp_CalculateOrderTotals] was executed to retrieve the requested columns along with the required calculation, it performed read operations on the following tables:

Sales.SalesOrderHeader

Sales.SalesPerson

Sales.Customer

Person.Person

#tbl_OrderTotals
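For contrast, the row-by-row work of the stored procedure can be expressed as one set-based query. The following is a rough sketch rather than a tuned replacement. It uses the same AdventureWorks2012 tables and joins as the stored procedure, and the derived table alias OT is a name chosen only for this illustration. Running it while the trace is active lets you compare its CPU, Duration, and Reads values with those of the cursor-based procedure.

```sql
USE [AdventureWorks2012]
GO

-- Set-based equivalent of the cursor loop: the order totals are
-- aggregated once and joined, instead of being summed per order.
SELECT
     SOH.SalesOrderID AS OrderID
    ,SOH.OrderDate
    ,UPPER(P2.FirstName + ' ' + P2.LastName) AS CustomerName
    ,UPPER(P1.FirstName + ' ' + P1.LastName) AS SalesPersonName
    ,OT.OrderTotal
FROM [Sales].[SalesOrderHeader] AS SOH
LEFT OUTER JOIN [Sales].[SalesPerson] AS SP
    ON SOH.SalesPersonID = SP.BusinessEntityID
LEFT OUTER JOIN [Sales].[Customer] AS C
    ON SOH.CustomerID = C.CustomerID
LEFT OUTER JOIN [Person].[Person] AS P1
    ON SP.BusinessEntityID = P1.BusinessEntityID
LEFT OUTER JOIN [Person].[Person] AS P2
    ON C.PersonID = P2.BusinessEntityID
LEFT OUTER JOIN
(
    -- One total per order, computed in a single pass.
    SELECT SalesOrderID, SUM(LineTotal) AS OrderTotal
    FROM [Sales].[SalesOrderDetail]
    GROUP BY SalesOrderID
) AS OT
    ON OT.SalesOrderID = SOH.SalesOrderID
ORDER BY SOH.SalesOrderID DESC
GO
```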

The script also generates five million sample records by cross joining the sys.columns catalog view with itself multiple times and inserting the resulting data into the tblSampleData table with a SELECT...INTO command. This demonstrates how the write count rises when a large amount of data is inserted. You can see that it caused 55369 reads and 35862 writes.

Remember that value in CPU data column is reported in milliseconds and the value in Duration data column is reported in microseconds. However, when SQL Server Profiler shows the value of Duration on its GUI, it shows the value in milliseconds by default. But when you save the trace in a trace file or trace table the value is stored in microseconds and not in milliseconds. Thus, for the Duration data column SQL Server behaves differently when it displays and stores the value.
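The unit difference matters as soon as you query a saved trace file. The following sketch assumes the trace from this recipe was saved to the hypothetical path C:\MyTraces\IdentifyingExpensiveQueries.trc; replace it with the file name you chose in the Save As dialog box. It relies on the trace event ID 12 for SQL:BatchCompleted.

```sql
-- Read a saved trace file and report Duration in both units.
SELECT
     CAST(TD.TextData AS NVARCHAR(MAX)) AS TextData
    ,TD.CPU             AS CpuMs                 -- already in milliseconds
    ,TD.Duration        AS DurationMicroseconds  -- stored in microseconds
    ,TD.Duration / 1000 AS DurationMs
    ,TD.Reads
    ,TD.Writes
FROM fn_trace_gettable(N'C:\MyTraces\IdentifyingExpensiveQueries.trc', DEFAULT) AS TD
WHERE TD.EventClass = 12              -- SQL:BatchCompleted
  AND TD.Duration >= 10 * 1000000     -- 10 seconds expressed in microseconds
ORDER BY TD.Duration DESC;
```

Forgetting the conversion makes every duration in the file look a thousand times longer than what Profiler displayed.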

If you identify expensive queries on a frequent basis, you may want to use the same trace definition each time you run a trace. It is convenient to save the trace definition as a trace template and use that template each time you run a trace.

Trace templates are the files that save the trace definition and trace properties. SQL Server Profiler comes with some default trace templates. They are as follows:

Blank

SP_Counts

Standard

TSQL

TSQL_Duration

TSQL_Grouped

TSQL_Locks

TSQL_Replay

TSQL_SPs

Tuning

Each of the above trace templates has its own trace definition that can be used to start a new trace. However, the built-in templates may not have the settings that you require for your regular tasks.

In this situation, creating and using your own trace template is the practical choice. The trace definition and settings that you use frequently can be saved in a trace template file. To create one, you just need to save a trace file as a trace template. Once a trace template is created, it can be used for later trace sessions, and you do not need to perform the tedious task of configuring trace properties each time.

What if you have no SQL Server Profiler installed on your machine and want to create a trace? What if you have SQL Server Profiler installed but the executable binary file of SQL Server Profiler is corrupted and cannot be run? What if you want to automate completely the process of capturing trace data as per your defined schedules so that you do not have to be physically present to start and stop the traces? Is it possible to create a trace in this manner without SQL Server Profiler?

The answer is yes. SQL Server provides T-SQL system stored procedures to work with SQL Trace. This capability enables us to write code that creates traces programmatically. By using the SQL Trace system stored procedures along with SQL Server Agent, it is possible to automate and schedule traces so that they run in the background and capture event data only during a certain period of time on a regular basis.

In this recipe, we will see how to create a trace without SQL Server Profiler by using SQL Trace system stored procedures. The trace that we will create in this recipe can be used to monitor the file growth of data files and log files of all databases on an instance of SQL Server. Monitoring file growth events for data files and log files tells you how frequently your database files grow, which in turn helps in determining appropriate values for the FILEGROWTH attribute of database files. If files grow by a small amount (for example, by 1 MB), SQL Server has to extend the database files very frequently, which degrades the performance of write operations when working with large amounts of data. It may also degrade the performance of read operations because of physical file fragmentation: small file chunks spread all over the disk can turn what could have been a sequential read into a random read. Thus, you should consider setting an appropriate FILEGROWTH value for your databases.
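Before tracing growth events, it can help to see how FILEGROWTH is currently configured. The following query is a minimal sketch against the sys.master_files catalog view; the column aliases are chosen for this illustration. In this view, size and growth are expressed in 8 KB pages unless is_percent_growth is 1, in which case growth is a percentage.

```sql
-- Current size and growth increment of every database file on the instance.
SELECT
     DB_NAME(MF.database_id) AS DatabaseName
    ,MF.name                 AS LogicalFileName
    ,MF.type_desc            AS FileType
    ,CAST(MF.size AS BIGINT) * 8 / 1024 AS CurrentSizeMB
    ,CASE
        WHEN MF.is_percent_growth = 1
            THEN CAST(MF.growth AS VARCHAR(20)) + ' %'
        ELSE CAST(CAST(MF.growth AS BIGINT) * 8 AS VARCHAR(20)) + ' KB'
     END AS FileGrowth
FROM sys.master_files AS MF
ORDER BY DatabaseName, FileType;
```

Files that show a very small fixed increment are the ones most likely to raise frequent auto-grow events in the trace.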

Before you start with the recipe, it is necessary that you have some background of basic system stored procedures provided in SQL Server which are used to work with traces. Following are the stored procedures which you should know:

sp_trace_create: This stored procedure is used to create a trace and returns the ID of newly created trace

sp_trace_setevent: This stored procedure is used to add or remove event classes and data columns to and from a given trace

sp_trace_setfilter: This stored procedure is used to set a filter condition on desired data column for a given trace

sp_trace_setstatus: This stored procedure is used to start, stop, or close a given trace

In this example, we will capture only two event classes:

Data File Auto Grow

Log File Auto Grow

For these mentioned event classes, we will be capturing the following data columns:

DatabaseName

FileName

StartTime

EndTime

By collecting these data columns, we can know which database file is automatically grown for which database and when.

We will not apply any filter in this trace because we want to capture and audit the database file growth events for all databases on the server. Thus, stored procedure sp_trace_setfilter will not be used in our example.
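Although the recipe does not use a filter, the following sketch shows how sp_trace_setfilter could restrict such a trace to a single database. It is an illustration under stated assumptions, not part of the recipe: the trace file path C:\MyTraces\MyFilteredTrace and the variable names are hypothetical, the directory must already exist and be writable by the SQL Server service account, and only one event and one data column are added to keep the example short.

```sql
DECLARE @ReturnCode INT;
DECLARE @TraceID INT;
DECLARE @TraceFile NVARCHAR(245) = N'C:\MyTraces\MyFilteredTrace'; -- hypothetical path
DECLARE @On BIT = 1;
DECLARE @DatabaseFilter NVARCHAR(128) = N'AdventureWorks2012';

-- Create the trace (option 2 enables file rollover). It starts in the stopped state.
EXECUTE @ReturnCode = sp_trace_create
     @traceid = @TraceID OUTPUT
    ,@options = 2
    ,@tracefile = @TraceFile;

IF @ReturnCode = 0
BEGIN
    -- Capture the DatabaseName column (35) for Data File Auto Grow (92).
    EXECUTE sp_trace_setevent
         @traceid = @TraceID
        ,@eventid = 92
        ,@columnid = 35
        ,@on = @On;

    -- Keep only events whose DatabaseName matches the filter value.
    -- @logical_operator 0 = AND, @comparison_operator 6 = LIKE.
    -- The value must be NVARCHAR because DatabaseName is an NVARCHAR column.
    EXECUTE sp_trace_setfilter
         @traceid = @TraceID
        ,@columnid = 35
        ,@logical_operator = 0
        ,@comparison_operator = 6
        ,@value = @DatabaseFilter;

    -- Start the trace.
    EXECUTE sp_trace_setstatus @traceid = @TraceID, @status = 1;
END
GO
```

A trace started this way keeps running until it is stopped and closed with sp_trace_setstatus, so remember to clean it up after experimenting.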

Follow the steps provided here to create a trace with system stored procedures:

Start SQL Server Management Studio and connect to SQL Server.

In the query window, type and execute the following T-SQL script to create a new trace through system stored procedures:

DECLARE @ReturnCode INT
DECLARE @TraceID INT
DECLARE @Options INT = 2
DECLARE @TraceFile NVARCHAR(245) = 'C:\MyTraces\MyTestTrace'
DECLARE @MaxFileSize INT = 5
DECLARE @Event_DataFileAutoGrow INT = 92
DECLARE @Event_LogFileAutoGrow INT = 93
DECLARE @DataColumn_DatabaseName INT = 35
DECLARE @DataColumn_FileName INT = 36
DECLARE @DataColumn_StartTime INT = 14
DECLARE @DataColumn_EndTime INT = 15
DECLARE @On BIT = 1
DECLARE @Off BIT = 0

--Create a trace and collect the returned code.
EXECUTE @ReturnCode = sp_trace_create
     @traceid = @TraceID OUTPUT
    ,@options = @Options
    ,@tracefile = @TraceFile

--Check returned code is zero and no error occurred.
IF @ReturnCode = 0
BEGIN
    BEGIN TRY
        --Add DatabaseName column to DataFileAutoGrow event.
        EXECUTE sp_trace_setevent
             @traceid = @TraceID
            ,@eventid = @Event_DataFileAutoGrow
            ,@columnid = @DataColumn_DatabaseName
            ,@on = @On

        --Add FileName column to DataFileAutoGrow event.
        EXECUTE sp_trace_setevent
             @traceid = @TraceID
            ,@eventid = @Event_DataFileAutoGrow
            ,@columnid = @DataColumn_FileName
            ,@on = @On

        --Add StartTime column to DataFileAutoGrow event.
        EXECUTE sp_trace_setevent
             @traceid = @TraceID
            ,@eventid = @Event_DataFileAutoGrow
            ,@columnid = @DataColumn_StartTime
            ,@on = @On

        --Add EndTime column to DataFileAutoGrow event.
        EXECUTE sp_trace_setevent
             @traceid = @TraceID
            ,@eventid = @Event_DataFileAutoGrow
            ,@columnid = @DataColumn_EndTime
            ,@on = @On

        --Add DatabaseName column to LogFileAutoGrow event.
        EXECUTE sp_trace_setevent
             @traceid = @TraceID
            ,@eventid = @Event_LogFileAutoGrow
            ,@columnid = @DataColumn_DatabaseName
            ,@on = @On

        --Add FileName column to LogFileAutoGrow event.
        EXECUTE sp_trace_setevent
             @traceid = @TraceID
            ,@eventid = @Event_LogFileAutoGrow
            ,@columnid = @DataColumn_FileName
            ,@on = @On

        --Add StartTime column to LogFileAutoGrow event.
        EXECUTE sp_trace_setevent
             @traceid = @TraceID
            ,@eventid = @Event_LogFileAutoGrow
            ,@columnid = @DataColumn_StartTime
            ,@on = @On

        --Add EndTime column to LogFileAutoGrow event.
        EXECUTE sp_trace_setevent
             @traceid = @TraceID
            ,@eventid = @Event_LogFileAutoGrow
            ,@columnid = @DataColumn_EndTime
            ,@on = @On

        --Start the trace. Status 1 corresponds to START.
        EXECUTE sp_trace_setstatus
             @traceid = @TraceID
            ,@status = 1
    END TRY
    BEGIN CATCH
        PRINT 'An error occurred while creating trace.'
    END CATCH
END
GO

Note

It is possible that the stored procedure sp_trace_create may fail if the Windows account under which the SQL Server service is running has no write permission on the directory where the trace file is created. If this is the case, you will need to grant that service account the proper permissions so that it can write to the specified directory.

By executing the following query and observing the result set, make sure that the trace has been created successfully. This query should return a record for the trace that we created:

--Verify the trace has been created.
SELECT * FROM sys.traces
GO

The previous query will give you the list of traces that are currently running on the system. You should see your newly created trace listed in the result set of the previous query. If the trace could be created successfully, execute the following T-SQL script to create a sample database and insert one million records:

--Creating Sample Database keeping Filegrowth Size
--to 1 MB for Data and Log file.
CREATE DATABASE [SampeDBForTrace]
ON PRIMARY
(
     NAME = N'SampeDB'
    ,FILENAME = N'C:\MyTraces\SampeDBForTrace_Data.mdf'
    ,SIZE = 2048KB
    ,FILEGROWTH = 1024KB
)
LOG ON
(
     NAME = N'SampeDBForTrace_log'
    ,FILENAME = N'C:\MyTraces\SampeDBForTrace_log.ldf'
    ,SIZE = 1024KB
    ,FILEGROWTH = 1024KB
)
GO

USE SampeDBForTrace
GO

--Creating and Inserting one million records tbl_SampleData table.
SELECT TOP 1000000 C1.*
INTO tbl_SampleData
FROM sys.columns AS C1
CROSS JOIN sys.columns AS C2
CROSS JOIN sys.columns AS C3
GO

After executing the previous script, execute the following T-SQL script to stop and close the trace:

DECLARE @TraceID INT
DECLARE @TraceFile NVARCHAR(245) = 'C:\MyTraces\MyTestTrace.trc'

--Get the TraceID for our trace.
SELECT @TraceID = id
FROM sys.traces
WHERE path = @TraceFile

IF @TraceID IS NOT NULL
BEGIN
    --Stop the trace. Status 0 corresponds to STOP.
    EXECUTE sp_trace_setstatus
         @traceid = @TraceID
        ,@status = 0

    --Closes the trace. Status 2 corresponds to CLOSE.
    EXECUTE sp_trace_setstatus
         @traceid = @TraceID
        ,@status = 2
END
GO

Execute the following query to verify that the trace has been stopped and closed successfully. This query should not return a record for our trace if it is stopped and closed successfully.

--Verify the trace has been stopped and closed.
SELECT * FROM sys.traces
GO

The previous query will not return the row for the trace that we created because the trace has now been stopped and closed. Inspect the resulting trace data collected in our trace file by executing the following query:

--Retrieve the collected trace data.
SELECT
     TE.name AS TraceEvent
    ,TD.DatabaseName
    ,TD.FileName
    ,TD.StartTime
    ,TD.EndTime
FROM fn_trace_gettable('C:\MyTraces\MyTestTrace.trc',default) AS TD
LEFT JOIN sys.trace_events AS TE
    ON TD.EventClass = TE.trace_event_id
GO

In this recipe, we first created and configured our trace by executing a T-SQL script. The script first declares some required variables whose values are passed as parameters to system stored procedures. It creates a trace by executing the sp_trace_create stored procedure that returns ID of the newly created trace. The stored procedure sp_trace_create accepts the following parameters:

The @Options parameter is passed to specify the trace options. The following are the predefined values for the @Options parameter:

The parameter @TraceFile specifies the location and file name where the trace file should be saved. @TraceID is the output variable and the returned ID value of the trace will be stored in this variable. If the stored procedure can create a trace file successfully, it returns 0 that gets stored in variable @ReturnCode.

Note

Remember that all SQL Trace system stored procedures are strictly typed. Strictly typed means that the data types of the parameters that you pass to these stored procedures must match exactly the data types in the stored procedures' parameter definitions. So, you cannot pass a parameter of type INT when BIGINT is required.
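The optional @maxfilesize parameter of sp_trace_create is a typical case, because it is defined as BIGINT. The following sketch is only an illustration: the path C:\MyTraces\MyTypedTrace and the variable names are hypothetical, the directory is assumed to exist, and no trace file with that name is assumed to exist already. The trace is closed immediately so that nothing is left running.

```sql
DECLARE @ReturnCode INT;
DECLARE @TraceID INT;
-- Declared as BIGINT to match the parameter definition of @maxfilesize.
-- Declaring this variable as INT is expected to make the call fail.
DECLARE @MaxFileSize BIGINT = 5;   -- maximum trace file size in MB

EXECUTE @ReturnCode = sp_trace_create
     @traceid = @TraceID OUTPUT
    ,@options = 2
    ,@tracefile = N'C:\MyTraces\MyTypedTrace'   -- hypothetical path
    ,@maxfilesize = @MaxFileSize;

-- A newly created trace is in the stopped state, so it can be closed directly.
IF @ReturnCode = 0
    EXECUTE sp_trace_setstatus @traceid = @TraceID, @status = 2;
GO
```

The same rule applies to the @on parameter of sp_trace_setevent, which is why the recipe declares @On and @Off as BIT variables instead of passing integer literals.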

If the trace is created successfully and @ReturnCode is zero, we add event classes and data columns by calling the stored procedure sp_trace_setevent once for each of the following combinations of event class and data column:

DataFileAutoGrow event class and DatabaseName data column

DataFileAutoGrow event class and FileName data column

DataFileAutoGrow event class and StartTime data column

DataFileAutoGrow event class and EndTime data column

LogFileAutoGrow event class and DatabaseName data column

LogFileAutoGrow event class and FileName data column

LogFileAutoGrow event class and StartTime data column

LogFileAutoGrow event class and EndTime data column

The stored procedure sp_trace_setevent accepts the following parameters:

@traceid

@eventid

@columnid

@on

@TraceID is the ID of the trace we add event classes and data columns to.

Note that every event class and data column has an associated event ID or column ID. We have to pass the ID values corresponding to the event classes and data columns that we want to include in our trace. These values are passed through the variables declared for each event class and data column. For example, for the DataFileAutoGrow event class and the FileName data column, we stored the appropriate ID values in the @Event_DataFileAutoGrow and @DataColumn_FileName variables respectively.

Tip

How to get IDs for all event classes and data columns?

ID values for required event classes and data columns must be passed to the stored procedure sp_trace_setevent. You can get a list of EventIDs for all event classes by querying sys.trace_events system catalog view. To get a list of column IDs for all data columns, use sys.trace_columns system catalog view. Also, you can retrieve list of column IDs for all available columns for a given event by querying sys.trace_event_bindings system catalog view and by joining it with sys.trace_events and sys.trace_columns system catalog views on trace_event_id and trace_column_id columns respectively.
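The join described in this tip can be sketched as follows. The query lists every data column that is available for the two event classes used in this recipe; the WHERE clause and the column aliases are choices made for this illustration and can be removed or changed to list other events.

```sql
-- Event IDs and column IDs available for the two auto-grow event classes.
SELECT
     TE.trace_event_id  AS EventID
    ,TE.name            AS EventName
    ,TC.trace_column_id AS ColumnID
    ,TC.name            AS ColumnName
FROM sys.trace_event_bindings AS TEB
INNER JOIN sys.trace_events AS TE
    ON TEB.trace_event_id = TE.trace_event_id
INNER JOIN sys.trace_columns AS TC
    ON TEB.trace_column_id = TC.trace_column_id
WHERE TE.name IN (N'Data File Auto Grow', N'Log File Auto Grow')
ORDER BY TE.trace_event_id, TC.trace_column_id;
```

The result should include the event IDs 92 and 93 and the column IDs 14, 15, 35, and 36 that the recipe's script assigns to its variables.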

The value of the @on parameter can be either 0 or 1. A value of 1 means that event data for the specified event class and data column should be captured; a value of 0 means that it should not.

After adding the required event classes and data columns, the stored procedure sp_trace_setstatus is used to set the status of the trace to START. Any trace that is created with system stored procedure is always in STOP state by default, and needs to be started explicitly by calling sp_trace_setstatus stored procedure. This stored procedure accepts the following parameters:

@traceid

@status

@TraceID is the ID of the trace that we created and that needs to be started. @Status specifies the state of the trace. Possible values for the @Status parameter are as follows:

0: Stops a trace

1: Starts a trace

2: Closes a trace

Because we wanted to start our trace, we are passing a value of 1 to this parameter.

SQL Server keeps track of currently opened trace sessions. This list of traces can be retrieved by querying the sys.traces system catalog view. By querying this view, we simply confirm that the trace has indeed been created.

Next, we create a sample database named SampeDBForTrace. We deliberately keep the value of the FILEGROWTH attribute small in order to produce Data File Auto Grow and Log File Auto Grow events. The script also creates a sample table named tbl_SampleData through a SELECT ... INTO statement, in which we insert one million sample records by cross joining the sys.columns system catalog view with itself multiple times. This operation requires additional space in the data and log files to make room for the new records. For this, SQL Server has to increase the size of the data and log files, when required, by 1 MB (the value specified for the FILEGROWTH attribute). This causes Data File Auto Grow and Log File Auto Grow events to be raised.
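Instead of SELECT *, a narrower check can make the verification easier to read. The sketch below assumes the trace file path used in this recipe (C:\MyTraces\MyTestTrace.trc). It also uses the sys.fn_trace_geteventinfo function to list the events and columns registered for the trace, which is an extra check that the recipe itself does not perform.

```sql
DECLARE @TraceID INT;

-- Locate our trace by its file path; status 1 means running, 0 means stopped.
SELECT @TraceID = id
FROM sys.traces
WHERE path = N'C:\MyTraces\MyTestTrace.trc';

SELECT id, status, path, start_time, event_count
FROM sys.traces
WHERE id = @TraceID;

-- List the event classes and data columns that the trace captures.
SELECT
     TE.name AS EventName
    ,TC.name AS ColumnName
FROM sys.fn_trace_geteventinfo(@TraceID) AS EI
INNER JOIN sys.trace_events AS TE
    ON EI.eventid = TE.trace_event_id
INNER JOIN sys.trace_columns AS TC
    ON EI.columnid = TC.trace_column_id
ORDER BY TE.name, TC.name;
GO
```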

Note

We deliberately kept the value of the FILEGROWTH attribute as small as 1 MB only to produce the file growth events needed for this recipe. Such a small value for the FILEGROWTH attribute is not recommended and should not be used on a production server with heavy DML operations.

Once the record insertion operation is completed, the script that stops and closes the trace is executed. It calls the stored procedure sp_trace_setstatus twice, with the appropriate status value for each call. Remember that a trace must be stopped before it can be closed.

After closing a trace, we make sure that the trace stopped and closed successfully by querying sys.traces system catalog view again.

Once our trace is stopped, we use fn_trace_gettable() function to query the captured trace data saved in specified trace file whose full file path is also being passed to the function for the first parameter filename. We also pass the default value for the second parameter number_files of the function which specifies that the function should read all rollover files to return trace data. Because this function does not return any column for the event class' name, we join it with sys.trace_events system catalog view on IDs of event classes in order to fetch the names of event classes.

Tip

If you want to analyze a large volume of trace data spread across a large number of trace files, you should specify 1 for the number_files parameter. If you specify default, SQL Server tries to load all trace files into memory and then inserts them into a table in a single operation, which may crash your system.
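As a sketch of this tip, the following script loads a single trace file into a table by passing 1 for number_files. The table name dbo.tbl_FileGrowthTrace is hypothetical, and the file path is the one used in this recipe. Additional rollover files would have to be loaded one at a time with separate INSERT ... SELECT statements that point to each file.

```sql
--Drop the target table if it exists (hypothetical table name).
IF OBJECT_ID('[dbo].[tbl_FileGrowthTrace]') IS NOT NULL
    DROP TABLE [dbo].[tbl_FileGrowthTrace]
GO

--Load only the first trace file; 1 limits the function to a single file.
SELECT
     TD.EventClass
    ,TD.DatabaseName
    ,TD.FileName
    ,TD.StartTime
    ,TD.EndTime
INTO [dbo].[tbl_FileGrowthTrace]
FROM fn_trace_gettable('C:\MyTraces\MyTestTrace.trc', 1) AS TD
GO
```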