Common Web Applications and Architectures

Web applications are essential for today's civilization. I know this sounds bold, but when you think of how the technology has changed the world, there is no doubt that the rapid exchange of information across great distances via the internet has driven globalization in large parts of the world. While the internet is many things, the most inherently valuable components are those where data resides. Since the advent of the World Wide Web in the 1990s, this data has exploded, with the world projected to generate more data in the next 2 years than in all of recorded history. While databases and object storage are the main repositories for this staggering amount of data, web applications are the portals through which that data comes and goes, is manipulated, and is processed into actionable information. This information is presented to the end users dynamically in their browser, and the relative simplicity and accessibility that this provides are the leading reasons why web applications are impossible to avoid. We're so accustomed to web applications that many of us would find it impossible to go more than a few hours without them.

Financial, manufacturing, government, defense, business, educational, and entertainment institutions are dependent on the web applications that allow them to function and interact with each other. These ubiquitous portals are trusted to store, process, exchange, and present all sorts of sensitive information and valuable data while safeguarding it from harm. The industrial world has placed a great deal of trust in these systems. So, any damage to these systems or any kind of trust violation can and often does cause far-reaching economic, political, or physical damage and can even lead to loss of life. The news is riddled with reports of compromised web applications every day. Each of these attacks results in loss of that trust as data (from financial and health information to intellectual property) is stolen, leaked, abused, and disclosed. Companies have been irreparably harmed, patients endangered, careers ended, and destinies altered. This is heavy stuff!

While there are many potential issues that keep architects, developers, and operators on edge, many of these have a very low probability of occurring – with one great exception. Criminal and geopolitical actors and activists present a clear danger to computing systems, networks, and all other people or things that are attached to or make use of them. Bad coding, improper implementation, or missing countermeasures are a boon to these adversaries, offering a way in or providing cover for their activities. As potential attackers see the opportunity to wreak havoc, they invest more, educate themselves, develop new techniques, and then achieve more ambitious goals. This cycle repeats itself. Defending networks, systems, and applications against these threats is a noble cause.

Defensive approaches also exist that can help reduce risks and minimize exposure, but it is the penetration tester (also known as the White Hat Hacker) that ensures that they are up to the task. By thinking like an attacker - and using many of the same tools and techniques - a pen tester can uncover latent flaws in the design or implementation and allow the application stakeholders to fill these gaps before the malicious hacker (also known as the Black Hat Hacker) can take advantage of them. Security is a journey, not a destination, and the pen tester can be the guide leading the rest of the stakeholders to safety.

In this book, I'll assume that you are an interested or experienced penetration tester who wants to specifically test web applications using Kali Linux, the most popular open source penetration testing platform today. The basic setup and installation of Kali Linux and its tools is covered in many other places, be it Packt's own Web Penetration Testing with Kali Linux - Second Edition (by Juned Ahmed Ansari, available at https://www.packtpub.com/networking-and-servers/web-penetration-testing-kali-linux-second-edition) or one of a large number of books and websites.

In this first chapter, we'll take a look at the following:

- Leading web application architectures and trends

- Common web application platforms

- Cloud and privately hosted solutions

- Common defenses

- A high-level view of architectural soft-spots which we will evaluate as we progress through this book

Common architectures

Web applications have evolved greatly over the last 15 years, emerging from their early monolithic designs to segmented approaches, which in more professionally deployed instances dominate the market now. They have also seen a shift in how these elements of architecture are hosted, from purely on-premise servers, to virtualized instances, to now pure or hybrid cloud deployments. We should also understand that the clients' role in this architecture can vary greatly. This evolution has improved scale and availability, but the additional complexity and variability involved can work against less diligent developers and operators.

The overall web application's architecture may be physically, logically, or functionally segmented. These types of segmentation may occur in combinations; with the cross-application integration so prevalent in enterprises, it is likely that these boundaries or characteristics are always in a state of transition. This segmentation serves to improve scalability and modularity, split management domains to match the personnel or team structure, increase availability, and can also offer some much-needed isolation to assist in the event of a compromise. The degree to which this modularity occurs and how the functions are divided logically and physically is greatly dependent on the framework that is used.

Let's discuss some of the more commonly used logical models as well as some of the standout frameworks that these models are implemented on.

Standalone models

Most small or ad hoc web applications at some point or another were hosted on a physical or virtual server and within a single monolithic installation, and this is commonly encountered in simpler self-hosted applications such as a small or medium business web page, inventory service, ticketing systems, and so on. As these applications or their associated databases grow, it becomes necessary to separate the components or modules to better support the scale and integrate with adjacent applications and data stores.

These applications tend to use commonly available turnkey web frameworks such as Drupal, WordPress, Joomla!, Django, or a multitude of other frameworks, each of which includes a content delivery manager and language platform (for example, Java, PHP: Hypertext Preprocessor (PHP), Active Server Pages (ASP.NET), and so on), generated content in Hypertext Markup Language (HTML), and a database type or types they support (various Structured Query Language (SQL) databases, Oracle, IBM DB2, or even flat files and Microsoft Access databases). Available as a single image or install medium, all functions reside within the same operating system and memory space. The platform and database combinations selected for this model are often more a question of developer competencies and preferences than anything else. Social engineering and open source information gathering on the responsible teams will certainly assist in characterizing the architecture of the web application.
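Characterizing a platform often starts with what the server volunteers about itself. The following is a minimal sketch, assuming we already hold a set of HTTP response headers from the target; the `PLATFORM_HINTS` table and the `guess_platform` function are hypothetical names invented for this illustration, and the hints are far from exhaustive. Headers can be removed or forged by the operator, so any result is a lead to verify rather than a finding.

```python
# Hypothetical, non-exhaustive mapping of response headers to platform hints.
PLATFORM_HINTS = {
    "x-powered-by": {"php": "PHP", "asp.net": "ASP.NET"},
    "server": {
        "apache": "Apache HTTP Server",
        "microsoft-iis": "Microsoft IIS",
        "nginx": "nginx",
    },
    "x-generator": {"drupal": "Drupal"},
}


def guess_platform(headers):
    """Return a sorted list of platform guesses from HTTP response headers."""
    # Header names are case-insensitive, so normalize before matching.
    lowered = {name.lower(): value.lower() for name, value in headers.items()}
    guesses = set()
    for header, hints in PLATFORM_HINTS.items():
        value = lowered.get(header, "")
        for needle, label in hints.items():
            if needle in value:
                guesses.add(label)
    return sorted(guesses)


# Sample headers made up for this example, not captured from a real site.
sample_headers = {
    "Server": "Apache/2.4.25 (Debian)",
    "X-Powered-By": "PHP/7.0.27",
    "X-Generator": "Drupal 8 (https://www.drupal.org)",
}
print(guess_platform(sample_headers))
```

A standalone installation tends to reveal its whole stack in one response like this, whereas segmented designs may only expose the front-most component.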

A simple single-tier or standalone architecture is shown in the following figure:

Three-tier models

Conceptually, the three-tier design is still used as a reference model, even if most applications have migrated to other topologies or have yet to evolve from a standalone implementation. While many applications now stray from this classic model, we still find it useful for understanding the basic facilities needed for real-world applications. We call it a three-tier model but it also assumes a fourth unnamed component: the client.

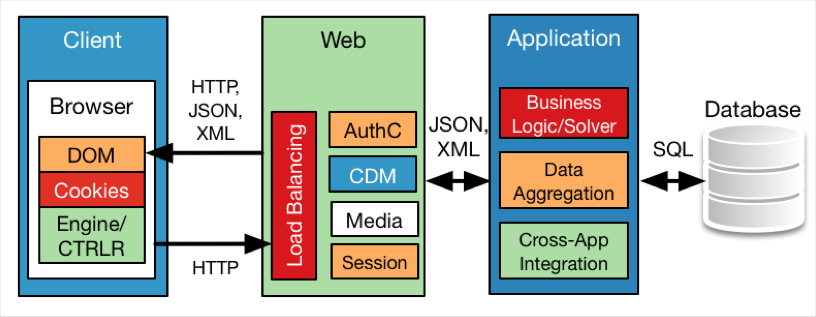

The three tiers include the web tier (or front end), the application tier, and the database tier, as seen in the following figure:

The role of each tier is important to consider:

- Web or Presentation Tier/Server/Front End: This module provides the User Interface (UI), authentication and authorization, scaling provisions to accommodate the large number of users, high availability features (to handle load shifting, content caching, and fault tolerance), and any software service that must be provisioned for the client or is used to communicate with the client. HTML, eXtensible Markup Language (XML), Asynchronous JavaScript And XML (AJAX), Cascading Style Sheets (CSS), JavaScript, Flash, other presented content, and UI components all reside in this tier, which is commonly hosted by Apache, IBM WebSphere, or Microsoft IIS. In effect, this tier is what the users see through their browser and interact with to request and receive their desired outcomes.

- Application or Business Tier/Server: This is the engine of the web application. Requests fielded by the web tier are acted upon here, and this is where business logic, processes, or algorithms reside. This tier also acts as a bridge module to multiple databases or even other applications, either within the same organization or with trusted third parties. C/C++, Java, Ruby, and PHP are usually the languages used to do the heavy lifting and turn raw data from the database tier into the information that the web tier presents to the client.

- The Database Tier/Server: Massive amounts of data of all forms are stored in specialized systems called databases. These troves of information are arranged so that they can be accessed quickly yet scaled continually. Classic SQL implementations such as MySQL, PostgreSQL, and Oracle, alongside non-relational stores such as Redis and CouchDB, are common for storing the data, along with a large variety of abstraction tools that help to organize and access that data. At the higher end of data collection and processing, there are a growing number of massively scalable database architectures that involve Not Only SQL (NoSQL) stores, often coupled with distributed processing software such as Hadoop. These are commonly found in anything that claims to be Big Data or Data Analytics, at organizations such as Facebook, Google, NASA, and so on.

- The Client: All of the three tiers need an audience, and the client (more specifically, their browser) is where users access the application and interact. The browser and its plugin software modules support the web tier in presenting the information as intended by the application developers.
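The division of labor among the tiers can be sketched in a few lines. This is only an illustration under loud assumptions: each tier is reduced to a single Python function, an in-memory SQLite database stands in for a real database server, and the `tickets` table and all function names are hypothetical. In a real deployment, each tier would typically be a separate process or host.

```python
import html
import sqlite3


def database_tier():
    """Database tier: an in-memory SQLite store standing in for a database server."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE tickets (id INTEGER PRIMARY KEY, title TEXT, status TEXT)"
    )
    conn.executemany(
        "INSERT INTO tickets (title, status) VALUES (?, ?)",
        [("Printer offline", "open"), ("Password reset", "closed"), ("VPN drops", "open")],
    )
    conn.commit()
    return conn


def application_tier(conn, status):
    """Application tier: business logic that turns raw rows into information."""
    rows = conn.execute(
        "SELECT id, title FROM tickets WHERE status = ? ORDER BY id", (status,)
    ).fetchall()
    return [{"id": row[0], "title": row[1]} for row in rows]


def web_tier(conn, query_params):
    """Web tier: fields the client's request and renders HTML for the browser."""
    status = query_params.get("status", "open")
    tickets = application_tier(conn, status)
    items = "".join(
        f"<li>#{t['id']} {html.escape(t['title'])}</li>" for t in tickets
    )
    return f"<ul>{items}</ul>"


# The client (the unnamed fourth component) only ever talks to the web tier.
conn = database_tier()
page = web_tier(conn, {"status": "open"})
print(page)
```

Each function boundary here corresponds to a trust boundary in the real architecture, and those boundaries are where much of our testing will concentrate.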

Vendors take this model and modify it to accentuate their strengths or more closely convey their strategies. Both Oracle's and Microsoft's reference web application architectures, for instance, combine the web and application tiers into a single tier, but Oracle calls attention to its strength on the database side of things, whereas Microsoft expends considerable effort expanding on its list of complementary services that can add value to the customer (and revenue for Microsoft) to include load balancing, authentication services, and ties to its own operating systems on a majority of clients worldwide.

Model-View-Controller design

The Model-View-Controller (MVC) design is a functional model that guides the separation of information and exposure, and to some degree, also addresses the privileges of the stakeholder users through role separation. This allows the application to keep users and their inputs from intermingling with the back-end business processes, logic, and transactions that can expose earlier architectures to data leakage. The MVC design approach was actually created by thick-application software developers and is not a logical separation of services and components but rather a role-based separation. Now that web applications commonly have to scale while tracking and enforcing roles, web application developers have adapted it to their use. MVC designs also facilitate code reuse and parallel module development.

An MVC design can be seen in the following figure:

In the MVC design, the four components are as follows:

- Model: The model maintains and updates data objects as the source of truth for the application, possessing the rules, logic, and patterns that make the application valuable. It has no knowledge of the user, but rather receives calls from the controller to process commands against its own data objects and returns its results to both the controller and the view. Another way to look at it is that the Model determines the behavior of the application.

- View: The view is responsible for presenting the information to the user, and so, it is responsible for the content delivery and responses: taking feedback from the controller and results from the model. It frames the interface that the user views and interacts with. The view is where the user sees the application work.

- Controller: The controller acts as the central link between the view and model; in receiving input from the view's user interface, the Controller translates these input calls to requests that the model acts on. These requests can update the Model and act on the user's intent or update the View presented to the user. The controller is what makes the application interactive, allowing the outside world to stimulate the model and alter the view.

- User: As in the other earlier models, the user is an inferred component of the design; and indeed, the entire design will revolve around how to allow the application to deliver value to the customer.

Notice that in the MVC model, there is very little detail given about software modules, and this is intentional. By focusing on the roles and separation of duties, software (and now, web) developers were free to create their own platform and architecture while using MVC as a guide for role-based segmentation. Contrast this with how the standalone or 3-tier models break down the operation of an application, and we'll see that they are thinking about the same thing in very different ways.
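To make the role separation concrete, here is a minimal sketch of the three roles, assuming a made-up inventory example; the class names, the `reserve` command, and the stock data are all hypothetical and not drawn from any particular framework. Note that the model never sees raw user input, and the view never touches the data store.

```python
class Model:
    """Source of truth: holds the data objects and the rules that act on them."""

    def __init__(self):
        self._stock = {"widget": 3}

    def reserve(self, item, quantity):
        available = self._stock.get(item, 0)
        if quantity <= 0 or quantity > available:
            return {"ok": False, "item": item, "remaining": available}
        self._stock[item] = available - quantity
        return {"ok": True, "item": item, "remaining": self._stock[item]}


class View:
    """Presents results to the user; knows nothing about how they were produced."""

    def render(self, result):
        if result["ok"]:
            return f"Reserved {result['item']}; {result['remaining']} left."
        return f"Could not reserve {result['item']}; {result['remaining']} available."


class Controller:
    """Translates user input into requests that the model acts on."""

    def __init__(self, model, view):
        self.model = model
        self.view = view

    def handle(self, user_input):
        parts = user_input.split()
        # Only a well-formed 'reserve <item> <count>' command reaches the model.
        if len(parts) != 3 or parts[0] != "reserve" or not parts[2].isdigit():
            return "Unrecognized request."
        result = self.model.reserve(parts[1], int(parts[2]))
        return self.view.render(result)


app = Controller(Model(), View())
print(app.handle("reserve widget 2"))
print(app.handle("reserve widget 5"))
```

From a tester's point of view, the controller's input handling is the interesting seam: anything it passes through unchecked lands directly on the model.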

One thing MVC does instill is a sense of statefulness, meaning that the application needs to track session information for continuity. This continuity drove the need for HTTP cookies and tokens to track sessions, which are in themselves something our app developers should now find ways to secure. Heavy use of application programming interfaces (APIs) also means that there is now a larger attack surface. If the application presents only a small portion of the data stored within the database tier, the model should be populated more selectively; maintaining too much information within the model invites leaks when the application is misconfigured or breached. In these cases, MVC is often shunned as a methodology because it can be difficult to manage data exposure within it.
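One common way to protect a session token against tampering is to sign it with a keyed hash. The sketch below assumes a server-side secret and uses Python's standard `hmac` and `secrets` modules; the token layout (`session_id.signature`) and the function names are assumptions made for this illustration, not a standard format. It does not address confidentiality, expiry, or replay, all of which a real session scheme must also handle.

```python
import hashlib
import hmac
import secrets

# Hypothetical per-deployment secret; a real application would load this
# from protected configuration rather than generate it at import time.
SECRET_KEY = secrets.token_bytes(32)


def _sign(session_id):
    return hmac.new(SECRET_KEY, session_id.encode(), hashlib.sha256).hexdigest()


def issue_token(session_id):
    """Return 'session_id.signature' for use as a cookie value."""
    return f"{session_id}.{_sign(session_id)}"


def verify_token(token):
    """Return the session ID if the signature is valid, otherwise None."""
    session_id, separator, signature = token.rpartition(".")
    if not separator:
        return None
    # compare_digest avoids leaking how many leading characters matched.
    if hmac.compare_digest(signature.encode(), _sign(session_id).encode()):
        return session_id
    return None


session_id = secrets.token_urlsafe(16)
token = issue_token(session_id)
print(verify_token(token) == session_id)
```

When testing, a token that the application accepts after we alter the session ID portion suggests that no such integrity check is in place.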

It should be noted that the MVC design approach can be combined with physical or logical models of functions; in fact, platforms that use some MVC design principles power the majority of today's web applications.

Web application hosting

The location of the application or its modules has a direct bearing on our role as penetration testers. Target applications may be anywhere on a continuum, from physical to virtual, to cloud-hosted components, or some combination of the three. Recently, a fourth possibility has arrived: containers. The continuum of hosting options and their relative scale and security attributes are shown in the following figure. Bear in mind that the dates shown here relate to the rise in popularity of each possibility, but that any of the hosting possibilities may coexist, and that containers, in fact, can be hosted just as well in either cloud or on-premise data centers.

This evolution is seen in the following figure:

Physical hosting

For many years, application architectures and design choices only had to consider the physical, bare-metal host for running various components of the architecture. As web applications scaled and incorporated specialized platforms, additional hosts were added to meet the need. New database servers were added as the data sets became more diverse, additional application servers were needed to incorporate additional software platforms, and so on. Labor and hardware resources are dedicated to each additional instance, and they add cost, complexity, and waste to a data center. The workloads depended on dedicated resources, and this made them both vulnerable and inflexible.

Virtual hosting

Virtualization has drastically changed this paradigm. By allowing hardware resources to be pooled and allocated logically to multiple guest systems, a single pool of hardware resources could contain all of the disparate operating systems, applications, database types, and other application necessities on a homogenous pool of servers, which provided centralized management and dynamically allocated interfaces and devices to multiple organizations in a prioritized manner. Web applications, in particular, benefited from this, as the flexibility of virtualization has offered a means to create parallel application environments, clones of databases, and so on, for the purposes of testing, quality assurance, and the creation of surge capacity. Because system administrators could now manage multiple workloads on the same pool of resources, hardware and support costs (for example power, floor space, installation and provisioning) could also be reduced, assuming the licensing costs don't neutralize the inherent efficiencies. Many applications still run in virtual on-premise environments.

Cloud hosting

Amazon took the concept of hosting virtual workloads a step further in 2006 and introduced cloud computing, with Microsoft Azure and others following shortly thereafter. The promise of turnkey Software as a Service (SaaS) running in highly survivable infrastructures via the internet allowed companies to build out applications without investing in hardware, bandwidth, or even real estate. Cloud computing was supposed to replace private cloud (traditional on-premise systems), and some organizations have indeed made this happen. The predominant trend, however, is for most enterprises to see a split in applications between private and public cloud, based on the types of these services and the steady-state demand for them.

Containers – a new trend

Containers offer a parallel or alternate packaging; rather than including the entire operating system and emulated hardware common in virtual machines, containers only bring their unique attributes and share these common ancillaries and functions, making them smaller and more agile. These traits have allowed large companies such as Google and Facebook to scale in real time to surge needs of their users with microsecond response times and complete the automation of both the spawning and the destruction of container workloads.

So, what does all of this mean to us? The location and packaging of a web application impacts its security posture. Both private and public cloud-hosted applications will normally integrate with other applications that may span both domains. These integration points offer potential threat vectors that must be tested, or they can certainly fall victim to attack. Cloud-hosted applications may also benefit from protection hosted or offered by the service provider, but they may also limit the variety of defensive options and web platforms that can be supported. Understanding these constraints can help us focus our probing and eliminate unnecessary work. The hosting paradigm also determines the composition of the team of defenders and operators that we are encountering. Cloud hosting companies may have more capable security operations centers, but a division of application security responsibility could result in a fragmentation of the intelligence and provide a gap that can be used to exploit the target. The underlying virtualization and operating systems available will also influence the choice of the application's platform, surrounding security mechanisms, and so on.

Application development cycles

Application developers adhere to processes that help maintain progress according to schedule and budget. Each company developing applications will undoubtedly have its own process in place, but common elements of these processes will be various phases: from inception to delivery/operation as well as any required reviews, deliverable expectations, testing processes, and resource requirements.

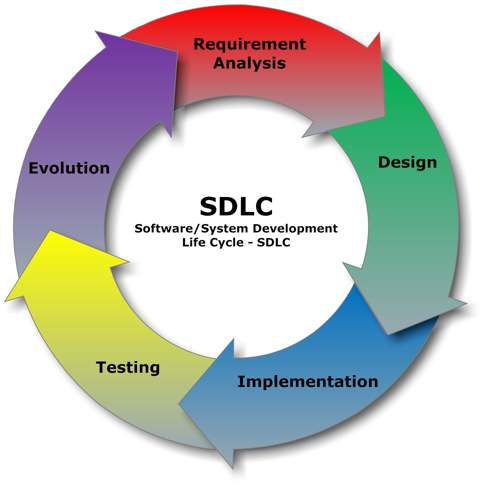

A common development process used in web applications is the application or Software Development Life Cycle (SDLC) shown in the following figure, captured from https://commons.wikimedia.org/wiki/File:SDLC_-_Software_Development_Life_Cycle.jpg:

No matter what phases are defined in a company's development process, it is important to incorporate testing, penetration testing included, in each to mitigate risk at the most cost-effective stage of the process possible.

I've seen government development programs quickly exceed their budget due to inadequate consideration for security and security testing in the earlier phases of the cycle. In these projects, project management often delayed any testing for security purposes until after the product was leaving the implementation phase, believing that the testing phase was so-named as to encompass all verification activities. Catching bugs, defects, or security gaps in this phase required significant rework, redesign, or work-arounds that drastically impacted the schedule and budget.

Coordinating with development teams

The cost of mitigating threats and the impact on overall schedules are the most fundamental reasons for testing early and often as a part of the development cycle. If you are a tester working within a multi-disciplinary team, early and coordinated penetration tests can eliminate flaws and address security concerns long before they are concrete and costly to address. Penetration testing requirements should be part of any verification and validation test plan. Additionally, development teams should include application security experts throughout the requirement and design phases to ensure that the application is designed with security in mind, a perspective that a web application penetration tester is well-suited to provide.

There are references from organizations such as the Open Web Application Security Project (OWASP, https://www.owasp.org/index.php/Testing_Guide_Introduction), SANS (https://www.sans.org), and the US Computer Emergency Readiness Team (https://www.us-cert.gov/bsi/articles/best-practices/security-testing/adapting-penetration-testing-software-development-purposes) that can be used to help guide the adaptation of penetration testing processes to the company's own development cycle. The value of this early-and-often strategy should be easy to articulate to the management, and concrete recommendations for countering specific web application vulnerabilities and security risks can be found in security reports such as those prepared by WhiteHat Security, Inc. (https://info.whitehatsec.com/rs/675-YBI-674/images/WH-2016-Stats-Report-FINAL.pdf) and by major companies such as Verizon, Cisco, Dell, McAfee, and Symantec. It is essential to have corporate sponsorship throughout the development to ensure that cyber security is adequately and continuously considered.

Post deployment - continued vigilance

Testing is not something that should be completed once to check the box and never revisited. Web applications are complex; they reuse significant repositories of code contributed by a vast array of organizations and projects. Vulnerability disclosures have certainly picked up in recent years, with 2014 and 2015 being very active years for attacks on commonly used open source libraries. Vulnerabilities such as Heartbleed, POODLE, and Shellshock all affect open source components that, in some cases, power over 80% of today's web applications. It can take years for admins to upgrade servers, and with the increasing volume of cataloged weaknesses, it can be hard to keep up. 2016, for instance, was the year of Adobe Flash, Microsoft Internet Explorer, and Silverlight vulnerabilities. It is impossible for the community at large to police each and every use case for these fundamental building blocks, and a web application's owners may not be aware of the inclusion of these modules in the first place.

Applications that have passed one battery of tests should continue to be analyzed at periodic intervals to ascertain their risk exposure over time. It is also important to ensure that different test methodologies, if not different teams, are used as often as possible to ensure that all angles are considered. This testing helps complete the SDLC by providing the needed security feedback in the testing and evolution phases to ensure that, just as new features are incorporated, the application is also developed to stay one step ahead of potential attackers. It is highly recommended that you advise your customers to fund or support this testing and employ both internal and a selection of external testing teams so that these findings make their way into the patch and revision schedule just as functional enhancements do.

Common weaknesses – where to start

Web application penetration testing focuses on a thorough evaluation of the application, its software framework, and platform. Web penetration testing has evolved into a dedicated discipline apart from network, wireless, or client-side (malware) tests. It is easy for us to see why recent trends indicate that almost 75% of reported cyber attacks are focused on the web applications. If you look at it from the hacker's perspective, this makes sense:

- Portals and workflows are very customized, and insulating them against all vectors during development is no small feat.

- Web applications must be exposed to the outside world to enable the users to actually use them. Too much security is seen as a burden and a potential deterrent to conducting business.

- Firewalls and intrusion systems, highly effective against network-based attacks, are not necessarily involved in the delivery of a web portal.

- These applications present potentially proprietary or sensitive data to externally situated users. It is their job, so exploiting this trust can expose a massive amount of high-value information.

- Web app attacks can often expose an entire database without a file-based breach, making attribution and forensics more difficult.

The bulk of this chapter was meant to introduce you to the architectural aspects of your targets. A deep understanding of your customers' applications will allow you to focus efforts on the tests that make the most sense.

Let's look again at a typical 3-tier application architecture (shown in the following figure), and see what potential issues there may be that we should look into:

These potential vectors are some of the major threats we will test against; and in some cases, we will encompass a family of similar attack types. They are shown in relation to their typical place in the 3-tier design where the attack typically takes effect, but the attackers themselves are normally positioned in a public web tier much like the legitimate client. The attack categories that we'll discuss as we proceed are grouped as follows:

- Authentication, authorization, and session management attacks: These attacks (and our tests) focus on the rigor with which the application itself verifies the identity and enforces the privilege of a particular user. These tests will focus on convincing the Web Tier that we belong in the conversation.

- Cross-Site Scripting (XSS) attacks: XSS attacks involve manipulating either the client or the web and/or application tiers into diverting a valid session's traffic or attention to a hostile location, which can allow the attacker to exploit valid clients through scripts. Hijacking attempts often fit in this category as well.

- Injections and overflows: Various attacks find places throughout the 3-tier design to force applications to work outside tested boundaries by injecting code that may be allowed by the underlying modules but should be prohibited by the application's implementation. Most of these injections (SQL, HTML, XML, and so on) can force the application to divulge information that should not be allowed, or they can help the attacker find administrative privileges to initiate a straightforward dump by themselves.

- Man-in-the-Middle (MITM) attacks: Session hijacking is a means by which the hacker or tester intercepts a session without the knowledge of either side. After doing so, the hacker has the ability to manipulate or fuzz requests and responses to manipulate one or both sides and uncover more data than what the legitimate user was actually after or entitled to have.

- Application tier attacks: Some applications are not configured to validate inputs properly, be it in validating how operations are entered or how file access is granted. It is also common to see applications fall short in enforcing true role-based controls; and privilege escalation attacks often occur, giving hackers the run of the house.
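The injection category above is easiest to appreciate with a small demonstration. The following sketch assumes a throwaway in-memory SQLite database with a hypothetical `users` table; the function names and data are invented for illustration. It contrasts a query built by string concatenation, which lets input rewrite the SQL statement, with a parameterized query, which treats the same input purely as data.

```python
import sqlite3

# Throwaway in-memory database with made-up records.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, secret TEXT)")
conn.executemany(
    "INSERT INTO users VALUES (?, ?)",
    [("alice", "a-secret"), ("bob", "b-secret")],
)


def lookup_unsafe(name):
    """Vulnerable: user input is pasted directly into the SQL text."""
    query = f"SELECT name, secret FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def lookup_safe(name):
    """Safer: the driver binds the input as a value, never as SQL."""
    return conn.execute(
        "SELECT name, secret FROM users WHERE name = ?", (name,)
    ).fetchall()


payload = "nobody' OR '1'='1"
print(len(lookup_unsafe(payload)))  # every row matches the injected OR clause
print(len(lookup_safe(payload)))    # no user is literally named like the payload
```

The unsafe variant is the pattern we will be hunting for; the safe variant is one of the remediations we can recommend once we find it.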

Web application defenses

If we step back and think about what customers are up against, it is truly staggering. Building a secure web application and network is akin to building a nuclear reactor plant. No detail is too small or insignificant, so one tiny failure (a crack, weak weld, or a small contamination), despite all of the good inherent in the design and implementation, can mean failure. A similar truth applies to web application security: just one flaw, be it a misconfiguration or omission in the myriad of components, can provide attackers with enough of a gap through which immense damage can be inflicted. Add to this the extra problem that these same proactive defensive measures are relied upon in many environments to help detect these rare events (sometimes called black swan events). Network and application administrators have a tough job, and our purpose is to help them and their organization do that job better.

Web application frameworks and platforms contain provisions to help secure them against nefarious actors, but they are rarely deployed alone in a production system. Our customers will often deploy cyber defense systems that can also enhance their applications' protection, awareness, and resilience against attack. In most cases, customers will associate more elements with a greater defense in depth and assume higher levels of protection. As with the measures that their application platform provides, these additional systems are only as good as the processes and people responsible for installing, configuring, monitoring, and integrating these systems holistically into the architecture. Lastly, given the special place in an enterprise that these applications have, there is a good chance that the customer's various stakeholders have the wrong solutions in place to protect against the forms of attack that we will be testing against. We must endeavor to both assess the target and educate the customer.

Standard defensive elements

So, what elements of the system fit in here? The following figure shows the most common elements involved in a web application's path, relative to a 3-tier design:

- Firewall (FW): The first element focused on security is usually a perimeter or the internet Edge firewall that is responsible for enforcing a wide variety of access controls and policies to reduce the overall attack surface of the enterprise, web applications included. Recent advances in the firewall market have seen the firewall become a Next Generation Firewall (NGFW) where these policies are no longer defined by strict source and destination port and IP Address pairs, but in contextual fashion, incorporating more human-readable elements such as the users or groups in the conversation, the geographic location, reputation, or category of the external participant, and the application or purpose of the conversation.

- Load balancer: Many scaled designs rely on load balancers to provide the seamless assignment of workloads to a bank of web servers. While this is done to enable an application to reach more users, this function often comes with some proxy-like functions that can obscure the actual web tier resources from the prying eyes of the hacker. Some load balancer solutions also include security-focused services in addition to their virtual IP or reverse proxy functions, and they may even include web application firewall functions. Load balancers can also be important in helping to provide Distributed Denial of Service (DDoS) protection by spreading, diverting, or absorbing malicious traffic loads.

- Web Application Firewall (WAF): WAFs provide application-layer inspection and prevention of attacks to ensure that many of the exploits that we will attempt in this book are either impossible or difficult to carry out. These firewalls differ from the network firewall at the perimeter in that they are only inspecting the HTTP/HTTPS flows for attacks. WAFs tend to be very signature-dependent and must be combined with other defense solutions to provide coverage of other vectors.
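To illustrate why signature dependence matters to a tester, here is a minimal sketch of signature-style inspection. It is not how any particular WAF product works; the `SIGNATURES` patterns and the `inspect_request` function are hypothetical names invented for this example, and real rule sets are far larger and more nuanced.

```python
import re
from typing import List
from urllib.parse import unquote_plus

# Hypothetical, deliberately tiny signature set (an assumption for illustration only).
SIGNATURES = {
    "sql_injection": re.compile(r"(?i)\bunion\b\s+\bselect\b|'\s*or\s+1\s*=\s*1"),
    "xss": re.compile(r"(?i)<\s*script\b"),
    "path_traversal": re.compile(r"\.\./"),
}


def inspect_request(query_string: str) -> List[str]:
    """Return the names of the signatures that match the URL-decoded query string."""
    decoded = unquote_plus(query_string)
    return [name for name, pattern in SIGNATURES.items() if pattern.search(decoded)]


if __name__ == "__main__":
    # A textbook payload matches the SQL injection signature.
    print(inspect_request("id=1+UNION+SELECT+password+FROM+users"))
    # The same idea with an inline SQL comment instead of a space slips past this naive pattern.
    print(inspect_request("id=1+UNION/**/SELECT+password+FROM+users"))
```

The second request shows the limitation: a small change in the payload's shape no longer matches the pattern, which is one reason signature-based inspection needs to be combined with other defenses.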

Additional layers

Not shown in the preceding diagram are additional defensive measures that may run as features on the firewalls or independently at one or more stages of the environment. Various vendors market these solutions in a wide variety of market categories and capability sets. While the branding may vary, they fall into a couple of major categories:

- Intrusion Detection/Prevention Systems (IDS/IPS): These key elements provide deep packet inspection capabilities to enterprises to detect both atomic and pattern-based (anomaly) threats. In a classic implementation, these offer little value to web applications given that they lack the insight into the various manipulations of the seemingly valid payloads that hackers will use to initiate common web application attacks. Next-Generation IPS (NGIPS) may offer more protection from certain threats, in that they not only process classic IDS/IPS algorithms, but combine context and rolling baselines to identify abnormal transactions or interactions. These tools may also be integrated within the network firewall or between tiers of the environment. Newer NGIPS technologies may have the ability to detect common web vulnerabilities, and these tools have shown tremendous value in protecting target systems that use unpatched or otherwise misconfigured software modules.

- Network Behavioral Analysis (NBA): These tools leverage metadata from network elements to see trends and identify abnormal behavior. Information gleaned from syslogs and flow feeds (NetFlow/IPFIX, sFlow, jFlow, NSEL, and so on) won't provide the same deep packet information that an IPS can glean, but the trends and patterns drawn from the many streams through a network can tip operators off to an illicit escalation of credentials. In web applications, more egregious privilege attacks may be identified by NBA tools, along with file and directory scraping attacks.
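The rolling-baseline idea behind these behavioral tools can be sketched in a few lines. This is a simplified illustration under stated assumptions, not a description of any vendor's algorithm: the input is assumed to be a list of per-interval request counts for a single client (as might be summarized from flow records), and the `find_anomalies` function, its `window` size, and its `threshold` multiplier are all hypothetical.

```python
from collections import deque
from statistics import mean, pstdev


def find_anomalies(counts, window=5, threshold=3.0):
    """Return the indices of intervals whose count exceeds the rolling baseline.

    The baseline is the mean of the last `window` normal intervals, and an
    interval is flagged when it exceeds mean + threshold * standard deviation.
    """
    baseline = deque(maxlen=window)
    anomalies = []
    for index, count in enumerate(counts):
        if len(baseline) == window:
            mu = mean(baseline)
            # Use a floor of 1.0 so a perfectly flat baseline does not flag tiny changes.
            sigma = max(pstdev(baseline), 1.0)
            if count > mu + threshold * sigma:
                anomalies.append(index)
                continue  # keep the outlier out of the baseline
        baseline.append(count)
    return anomalies


if __name__ == "__main__":
    # Hypothetical requests per minute from one client; the spike could be directory scraping.
    requests_per_minute = [20, 22, 19, 21, 20, 23, 400, 21, 22]
    print(find_anomalies(requests_per_minute))
```

No payload is inspected here; only the volume trend is considered. That is why a slow, low-volume attack may stay under such a baseline, while noisy scraping stands out.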

All of the components mentioned can be implemented in a multitude of form factors: from various physical appliance types to virtual machines to cloud offerings. More sophisticated web applications will often employ multiple layers differentially to provide greater resilience against attacks, as well as to provide overarching functions for a geographically dispersed arrangement of hosting sites. A company may have 10 locations, for example, that are globally load-balanced to serve customers. In this situation, cloud-based load balancers, WAFs, and firewalls may provide the first tier of defense, while each data center may have additional layers serving not only local web application protection but also other critical services specific to that site.

Summary

Since this is a book on mastering Kali Linux for the purposes of conducting web application penetration tests, it may have come as a surprise that we started with foundational topics such as the architecture, security elements, and so on. It is my hope that covering these topics will help set us apart from the script-kiddies that often engage in pen testing but offer minimal value. Anyone can fire up Kali Linux or some other distribution and begin hacking away, but without this foundation, our tests run the risk of being incomplete or inaccurate. Our gainful employment is dependent on actually helping the customer push their network to their (agreed upon) limits and helping them see their weaknesses. Likewise, we should also be showing them what they are doing right. John Strand, owner and analyst at Black Hills Information Security, is fond of saying that we should strive to get caught after being awesome.

While the knowledge of the tools and underlying protocols is often what sets a serious hacker apart from a newbie, it is also the knowledge of their quarry and the depth of the service they provide. If we are merely running scripts for the customer and reporting glaring issues, we are missing the point of being a hired penetration tester. Yes, critical flaws need to be addressed, but so do the seemingly smaller ones. It takes an expert to detect a latent defect that isn't impacting the performance now but will result in a major catastrophe some time later. This not only holds true for power plants, but for our web applications. We need to not just show them what they can see on their own, but take it further to help them insulate against tomorrow's attacks.

In this chapter, we discussed some architectural concepts that may help us gain better insight into our targets. We also discussed the various security measures our customers can put into place that we will need to be aware of, both to plan our attacks and to test for efficacy. Our discussion also covered the importance of testing throughout the lifecycle of the application. Doing this saves both time and money, and can protect the customer's reputation and minimize risk once the application is in production. These considerations should merit having a penetration tester as a vital and permanent member of any development team.

In our next chapter, we will talk briefly about how to prepare a fully featured sandbox environment that can help us practice the test concepts. We'll also discuss the leading test frameworks that can help us provide comprehensive test coverage. Lastly, we'll discuss contracts and the ethical and legal aspects of our job; staying out of jail is a key objective.
