Introducing Machine Learning with Go

All around us, automation is changing our lives in subtle increments that live on the bleeding edge of mathematics and computer science. What do a Nest thermostat, Netflix's movie recommendations, and Google's image search algorithm all have in common? Created by some of the brightest minds in today's software industry, these technologies all rely on machine learning (ML) techniques.

In February 2019, Crunchbase listed over 4,700 companies that categorized themselves as Artificial Intelligence (AI) or ML[1]. Most of these companies were at a very early stage, funded by angel investors or early-round venture capital. Yet articles in 2017 and 2018 by Crunchbase and the UK Financial Times center around a common recognition that ML is increasingly relied upon for sustained growth[2], and that its increasing maturity will lead to even more widespread applications[3], particularly if challenges around the opacity of decisions made by ML algorithms can be solved[4]. The New York Times even has a column dedicated to ML[5], a tribute to its importance in everyday life.

This book will teach a software engineer with intermediate knowledge of the Go programming language how to write and produce an ML application from concept to deployment, and beyond. We will first categorize problems suitable for ML techniques and describe the life cycle of ML applications. Next, we will explain how to set up a development environment specifically suited for data science with the Go language. After that, we will provide a practical guide to the main ML algorithms, their implementations, and their pitfalls. We will also provide some guidance on using ML models produced using other programming languages and integrating them in Go applications. Finally, we will consider different deployment models and the elusive intersection between DevOps and data science. We will conclude with some remarks on managing ML projects from our own experience.

In our first chapter, we will introduce some fundamental concepts of Go ML applications:

- What is ML?

- Types of ML problems

- Why write ML applications in Go?

- The ML development life cycle

What is ML?

ML is a field at the intersection of statistics and computer science. The output of this field has been a collection of algorithms capable of operating autonomously by inferring the best decision or answer to a question from a dataset. Unlike traditional programming, where the programmer must decide the rules of the program and painstakingly encode these in the syntax of their chosen programming language, ML algorithms require only sufficient quantities of prepared data, computing power to learn from the data, and often some knowledge to tweak the algorithm's parameters to improve the final result.

The resulting systems are very flexible and can be excellent at capitalizing on patterns that human beings would miss. Imagine writing a recommender system for a TV series from scratch. Perhaps you might begin by defining the inputs and the outputs of the problem, then finding a database of TV series that had such details as their date of release, genre, cast, and director. Finally, you might create a scoring function that rates a pair of series more highly if their release dates are close, they have the same genre, share actors, or have the same director.

Given one TV series, you could then rank all other TV series by decreasing similarity score and present the first few to the user. When creating the scoring function, you would make judgement calls on the relative importance of the various features, such as deciding that each pair of shared actors between two series is worth one point. This type of guesswork, also known as a heuristic, is what ML algorithms aim to do for you, saving time and improving the accuracy of the final result, especially if user preferences shift and you have to change the scoring function regularly to keep up.
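The hand-written heuristic described above might look like the following sketch. The Series type, its field names, the two-year window for "close" release dates, and every weight are assumptions chosen for illustration; they are exactly the kind of guesses an ML algorithm would replace.

```go
package main

import (
	"fmt"
	"sort"
)

// Series holds the hand-picked features of a TV series.
// The type and its field names are hypothetical.
type Series struct {
	Title    string
	Year     int
	Genre    string
	Director string
	Cast     []string
}

// score is a hand-written heuristic: every weight below is a guess.
func score(a, b Series) int {
	s := 0
	if d := a.Year - b.Year; d >= -2 && d <= 2 {
		s++ // release dates are close (assumed to mean within two years)
	}
	if a.Genre == b.Genre {
		s += 2
	}
	if a.Director == b.Director {
		s += 2
	}
	seen := make(map[string]bool, len(a.Cast))
	for _, actor := range a.Cast {
		seen[actor] = true
	}
	for _, actor := range b.Cast {
		if seen[actor] {
			s++ // one point per shared actor
		}
	}
	return s
}

// recommend ranks the catalogue by decreasing similarity to target
// and returns the first n entries.
func recommend(target Series, catalogue []Series, n int) []Series {
	ranked := make([]Series, 0, len(catalogue))
	for _, c := range catalogue {
		if c.Title != target.Title {
			ranked = append(ranked, c)
		}
	}
	sort.SliceStable(ranked, func(i, j int) bool {
		return score(target, ranked[i]) > score(target, ranked[j])
	})
	if n > len(ranked) {
		n = len(ranked)
	}
	return ranked[:n]
}

func main() {
	// Made-up catalogue entries, for illustration only.
	a := Series{Title: "A", Year: 2015, Genre: "drama", Director: "D1", Cast: []string{"x", "y"}}
	b := Series{Title: "B", Year: 2016, Genre: "drama", Director: "D2", Cast: []string{"y", "z"}}
	c := Series{Title: "C", Year: 1990, Genre: "comedy", Director: "D3", Cast: []string{"q"}}
	for _, s := range recommend(a, []Series{a, b, c}, 2) {
		fmt.Println(s.Title, score(a, s))
	}
}
```

Every time user preferences shift, the constants in score would need to be revisited by hand, which is the maintenance burden the paragraph above describes.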

The distinction between the broader field of AI and ML is a murky one. While the hype surrounding ML may be relatively new[6], the history of the field began in 1959 when Arthur Samuel, a leading expert in AI, first used the term "machine learning"[7]. In the 1950s, ML concepts such as the perceptron and genetic algorithms were invented by the likes of Alan Turing[8] as well as Samuel himself. In the following decades, practical and theoretical difficulties in achieving general AI led to rule-based approaches such as expert systems, which did not learn from data but instead encoded, in if-else statements, rules that human experts had devised over many years.

In the 1990s, recognizing that achieving AI was unlikely with existing technology, there was an increasing appetite for a narrow approach to tackling very specific problems that could be solved using a combination of statistics and probability theory. This led to the development of ML as a separate field. Today, ML and AI are often used interchangeably, particularly in marketing literature[9].

Types of ML problems

There are two main categories of ML algorithms: supervised learning and unsupervised learning. The decision of which type of algorithm to use depends on the data you have available and the project objectives.

Supervised learning problems

Supervised learning problems aim to infer the best mapping between an input and output dataset based on provided labeled pairs of input/output. The labeled dataset acts as feedback for the algorithm, allowing it to gauge the optimality of its solution. For example, given a list of mean yearly crude oil prices from 2010-2018, you may wish to predict the mean yearly crude oil price of 2019. The error that the algorithm makes on the 2010-2018 years will allow the engineer to estimate its error on the target prediction year of 2019.

Given a labeled collection of handwritten digits, you may wish to predict the label of a previously unseen handwritten digit. Similarly, given a dataset of emails that are labeled as being either spam or not spam, a company that wants to create a spam filter would want to predict whether a previously unseen message was spam. All these problems are supervised learning problems.

Supervised ML problems can be further divided into prediction and classification:

- Classification attempts to label an unknown input sample with a known output value. For example, you could train an algorithm to recognize breeds of cats. The algorithm would classify an unknown cat by labeling it with a known breed.

- By contrast, prediction algorithms attempt to label an unknown input sample with either a known or unknown output value. This is also known as estimation or regression. A canonical prediction problem is time series forecasting, where the output value of the series is predicted for a time value that was not previously seen.
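To make the distinction concrete, here is a minimal sketch of one algorithm of each kind: a 1-nearest-neighbour classifier, which can only return a label it has already seen, and a least-squares line fit, which can return a value that appears nowhere in the training data. Both were chosen for brevity rather than taken from this book's later chapters, and the sample values are synthetic.

```go
package main

import (
	"fmt"
	"math"
)

// nearestLabel classifies x with the label of the closest training sample.
// The result is always one of the known labels.
func nearestLabel(x float64, samples []float64, labels []string) string {
	best, bestDist := "", math.Inf(1)
	for i, s := range samples {
		if d := math.Abs(x - s); d < bestDist {
			best, bestDist = labels[i], d
		}
	}
	return best
}

// fitLine returns the slope and intercept of the least-squares line
// through the points (xs[i], ys[i]).
func fitLine(xs, ys []float64) (slope, intercept float64) {
	n := float64(len(xs))
	var sx, sy, sxx, sxy float64
	for i := range xs {
		sx += xs[i]
		sy += ys[i]
		sxx += xs[i] * xs[i]
		sxy += xs[i] * ys[i]
	}
	slope = (n*sxy - sx*sy) / (n*sxx - sx*sx)
	intercept = (sy - slope*sx) / n
	return slope, intercept
}

func main() {
	// Classification: synthetic one-dimensional samples with known labels.
	fmt.Println(nearestLabel(0.9, []float64{0, 1}, []string{"a", "b"}))

	// Prediction: synthetic points lying exactly on y = 2x + 1.
	m, c := fitLine([]float64{0, 1, 2, 3}, []float64{1, 3, 5, 7})
	fmt.Println(m*4 + c) // estimate for the previously unseen input x = 4
}
```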

We will cover supervised algorithms in more detail in Chapter 3, Supervised Learning.

Unsupervised learning problems

Unsupervised learning problems aim to learn from data that has not been labeled. For example, given a dataset of market research data, a clustering algorithm can divide consumers into segments, saving time for marketing professionals. Given a dataset of medical scans, unsupervised classification algorithms can divide the image between different kinds of tissues for further analysis. One unsupervised learning approach known as dimensionality reduction works in conjunction with other algorithms, as a pre-processing step, to reduce the volume of data that another algorithm will have to be trained on, cutting down training times. We will cover unsupervised learning algorithms in more detail in Chapter 4, Unsupervised Learning.
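As an illustration of learning from unlabeled data, the following sketch clusters one-dimensional values with a simplified k-means loop. The choice of k-means, the fixed starting centroids, and the consumer spend figures are all assumptions made for this example; no labels are supplied at any point.

```go
package main

import (
	"fmt"
	"math"
)

// kMeans1D groups values around the given starting centroids and returns
// the final centroids together with the cluster index of each value.
func kMeans1D(values, start []float64, iterations int) ([]float64, []int) {
	cs := append([]float64(nil), start...)
	assign := make([]int, len(values))
	for it := 0; it < iterations; it++ {
		// Assignment step: attach each value to its nearest centroid.
		for i, v := range values {
			best := 0
			for j := range cs {
				if math.Abs(v-cs[j]) < math.Abs(v-cs[best]) {
					best = j
				}
			}
			assign[i] = best
		}
		// Update step: move each centroid to the mean of its members.
		sums := make([]float64, len(cs))
		counts := make([]int, len(cs))
		for i, v := range values {
			sums[assign[i]] += v
			counts[assign[i]]++
		}
		for j := range cs {
			if counts[j] > 0 {
				cs[j] = sums[j] / float64(counts[j])
			}
		}
	}
	return cs, assign
}

func main() {
	// Hypothetical yearly spend per consumer, made up for illustration.
	spend := []float64{10, 12, 11, 95, 100, 105}
	centroids, segments := kMeans1D(spend, []float64{0, 50}, 10)
	fmt.Println(centroids, segments)
}
```

The two segments emerge from the data alone; interpreting them (for example, as low and high spenders) is left to the analyst.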

Most ML algorithms can be efficiently implemented in a wide range of programming languages. While Python has been a favorite of data scientists for its ease of use and plethora of open source libraries, Go presents significant advantages for a developer creating a commercial ML application.

Why write ML applications in Go?

There are libraries for other languages, especially Python, that are more complete than Go ML libraries and have benefited from years, if not decades, of research from the world's brightest brains. Some Go programmers make the transition to Go in search of better performance, but because ML libraries are typically written in C and exposed to Python through their bindings, they do not suffer the same performance problems as interpreted Python programs. Deep learning frameworks such as TensorFlow and Caffe have very limited, if any, bindings to Go. Even with these issues in mind, Go is still an excellent, if not the best, language to develop an application containing ML components.

The advantages of Go

For researchers attempting to improve state-of-the-art algorithms in an academic environment, Go may not be the best choice. However, for a start-up with a product concept and fast-dwindling cash reserves, completing the development of the product in a maintainable and reliable way within a short space of time is essential, and this is where the Go language shines.

Go (or Golang) originates from Google, where its design began in 2007[10]. Its stated objectives were to create an efficient, compiled programming language that feels lightweight and pleasant[11]. Go benefits from a number of features that are designed to boost productivity and reliability of production applications:

- Easy to learn and on-board new developers

- Fast build time

- Good performance at run-time

- Great concurrency support

- Excellent standard library

- Type safety

- Easy-to-read, standardized code with gofmt

- Forced error handling to minimize unforeseen exceptions

- Explicit, clear dependency management

- Easy to adapt architecture as projects grow

All these reasons make Go an excellent language for building production systems, particularly web applications. The 2018 Stack Overflow developer survey reveals that while only 7% of professional developers use Go as their main language, it is 5th on the most loved list and also commands very high salaries relative to other languages, recognizing the business value that Go programmers add[12].

Go's mature ecosystem

Some of the world's most successful technology companies use Go as the main language of their production systems and actively contribute to its development, such as Cloudflare[13], Google, Uber[14], Dailymotion[15], and Medium[16]. This means that there is now an extensive ecosystem of tools and libraries to help a development team create a reliable, maintainable application in Go. Even Docker, the world's leading container technology, is written in Go.

At the time of writing, there are 1,774 repositories on GitHub written in the Go language that have over 500 stars, traditionally considered a good proxy measure of quality and support. In comparison, Python has 3,811 and Java 3,943. Considering that Go is more than a decade younger than either language and allows for faster production-ready development, the relatively large number of well-supported repositories written in the Go language constitutes a glowing endorsement from the open source community.

Go has a number of stable and well-supported open source ML libraries. The most popular Go ML library by number of GitHub stars and contributors is GoLearn[17]. It is also the most recently updated. Other Go ML libraries include GoML and Gorgonia, a deep learning library whose API resembles TensorFlow.

Transfer knowledge and models created in other languages

Data scientists will often explore different methods to tackle an ML problem in a different language, such as Python, and produce a model that can solve the problem outside any application. The plumbing, such as getting data in and out of the model, serving this to a customer, persisting outputs or inputs, logging errors, or monitoring latencies, is not part of this deliverable and is outside the normal scope of work for a data scientist. As a result, taking the model from concept to a Go production application requires a polyglot approach such as a microservice.

However, there are Go bindings for deep learning frameworks such as TensorFlow and Caffe. Moreover, for more basic algorithms such as decision trees, the same algorithms have also been implemented in Go libraries and will produce the same results if they are configured in the same way. Together, these considerations imply that it is possible to fully integrate data science products into a Go application without sacrificing accuracy, speed, or forcing a data scientist to work with tools they are uncomfortable with.

ML development life cycle

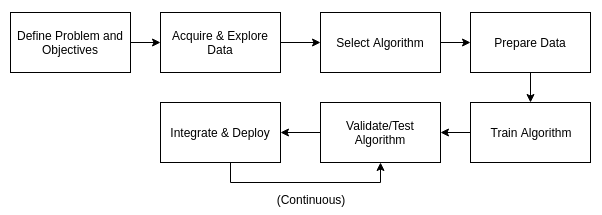

The ML development life cycle is a process to create and take to production an application containing an ML model that solves a business problem. The ML model can then be served to customers through the application as part of a product or service offering.

The following diagram illustrates the ML development life cycle process:

Defining problem and objectives

Before any development begins, the problem to be solved must be defined together with objectives of what good will look like, to set expectations. The way the problem is formulated is very important, as this can mean the difference between intractability and a simple solution. It is also likely to involve a conversation about where the input data for any algorithm will come from.

The typical formulation of an ML problem takes the form "given X dataset, predict Y". The availability of data, or lack thereof, can affect the formulation of the problem, the solution, and its feasibility. For example, consider the problem "given a large labeled set of images of handwritten digits[18], predict the label of a previously unseen image". Deep learning algorithms have demonstrated that it is possible to achieve relatively high accuracy on this particular problem with little work on the part of the engineer, as long as the training dataset is sufficiently large[19]. If the training set is not large, the problem immediately becomes more difficult and requires a careful selection of the algorithm to use. It also affects the accuracy and thus the set of attainable objectives.

Experiments performed by Michael Nielsen on the MNIST handwritten digit dataset show that the difference between training an ML algorithm with 1 example of labeled input/output pairs per digit and 5 examples was an improvement of accuracy from around 40% to around 65% for most algorithms tested[20]. Using 10 examples per digit usually raised the accuracy a further 5%.

If insufficient data is available to meet the project objectives, it is sometimes possible to boost performance by artificially expanding the dataset by making small changes to existing examples. In the previously mentioned experiments, Nielsen observed that adding slightly rotated or translated images to the dataset improved performance by as much as 15%.
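Expanding a dataset with slightly translated copies can be sketched as follows. The Example type and the one-pixel shift to the right are assumptions made for illustration and are not the exact transformations used in the experiments cited above.

```go
package main

import "fmt"

// Example pairs an image with its label. The type is hypothetical.
type Example struct {
	Pixels [][]uint8
	Label  int
}

// shiftRight returns a copy of img translated one pixel to the right,
// padding the vacated left column with zeros (background).
func shiftRight(img [][]uint8) [][]uint8 {
	out := make([][]uint8, len(img))
	for y, row := range img {
		out[y] = make([]uint8, len(row))
		if len(row) > 1 {
			copy(out[y][1:], row[:len(row)-1])
		}
	}
	return out
}

// augment returns the original examples plus one shifted copy of each.
// A shifted digit is still the same digit, so the label is reused.
func augment(data []Example) []Example {
	out := make([]Example, 0, 2*len(data))
	for _, ex := range data {
		out = append(out, ex, Example{Pixels: shiftRight(ex.Pixels), Label: ex.Label})
	}
	return out
}

func main() {
	// A tiny made-up 2x3 "image" labeled as the digit 7.
	data := []Example{{Pixels: [][]uint8{{1, 2, 3}, {4, 5, 6}}, Label: 7}}
	for _, ex := range augment(data) {
		fmt.Println(ex.Label, ex.Pixels)
	}
}
```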

Acquiring and exploring data

We argued earlier that it is critical to understand the input dataset before specifying project objectives, particularly objectives related to accuracy. As a general rule, ML algorithms will produce the best results when there are large training datasets available. The more data is used to train them, the better they will perform.

Acquiring data is, therefore, a key step in the ML development life cycle—one that can be very time-consuming and fraught with difficulty. In certain industries, privacy legislation may cause a lack of availability of personal data, making it difficult to create personalized products or requiring anonymization of source data before it can be used. Some datasets may be available but could require such extensive preparation or even manual labeling that it may put the project timeline or budget under stress.

Even if you do not have a proprietary dataset to apply to your problem, you may be able to find public datasets to use. Often, public datasets will have received attention from researchers, so you may find that the particular problem you are attempting to tackle has already been solved and the solution is open source. Some good sources of public datasets are as follows:

- Awesome datasets: https://github.com/awesomedata/awesome-public-datasets

- Skymind open datasets: https://skymind.ai/wiki/open-datasets

- OpenML: https://www.openml.org/

- Kaggle: https://www.kaggle.com/datasets

- UK Government's open data: https://data.gov.uk/

- US Government's open data: https://www.data.gov/

Once the dataset has been acquired, it should be explored to gain a basic understanding of how the different features (independent variables) may affect the desired output. For example, when attempting to predict correct height and weight from self-reported figures, researchers determined from initial exploration that older subjects were more likely to under-report obesity, and therefore that age was a relevant feature when building their model[21]. Attempting to build a model from all available data, even features that may not be relevant, can lead to longer training times in the best case, and can severely hamper accuracy in the worst case by introducing noise.

In Chapter 2, Setting Up the Development Environment, we will see how to explore data using Go and an interactive browser-based tool called Jupyter.

Selecting the algorithm

The selection of the algorithm is arguably the most important decision that an ML application engineer will need to make, and the one that will take the most research. Sometimes, it is even required to combine an ML algorithm with traditional computer science algorithms to make a problem more tractable—an example of this is a recommender system that we consider later.

A good first step to start homing in on the best algorithm to solve a given problem is to determine whether a supervised or unsupervised approach is required. We introduced both earlier in the chapter. As a rule of thumb, when you are in possession of a labeled dataset and wish to categorize or predict a previously unseen sample, you will use a supervised algorithm. When you wish to understand an unlabeled dataset better by clustering it into different groups, possibly to then classify new samples against, you will use an unsupervised learning algorithm. A deeper understanding of the advantages and pitfalls of each algorithm and a thorough exploration of your data will provide enough information to select an algorithm. To help you get started, we cover a range of supervised learning algorithms in Chapter 3, Supervised Learning, and unsupervised learning algorithms in Chapter 4, Unsupervised Learning.

Some problems can lend themselves to a deft application of both ML techniques and traditional computer science. One such problem is recommender systems, which are now widespread in online services such as Amazon and Netflix. This problem asks, given a dataset of each user's set of purchased items, predict a set of N items that the user is most likely to purchase next. This is exemplified in Amazon's "people who buy X also buy Y" system.

The basic idea of the solution is that, if two users purchase very similar items, then any items not in the intersection of their purchased items are good candidates for their future purchases. First, transform the dataset so that it maps pairs of items to a score that expresses their co-occurrence. This can be computed by taking the number of times that the same customer has purchased both items, divided by the number of times a customer has purchased either one or the other, to give a number between 0 and 1. This now provides a labeled dataset to train a supervised algorithm such as a binary classifier to predict the score for a previously unseen pair. Combining this with a sorting algorithm can produce, given a single item, a list of items in a sorted rank of purchasability.
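The dataset transformation and the final ranking step can be sketched as below. This computes the co-occurrence score directly from purchase histories and sorts by it; it does not include the supervised model that would predict scores for unseen pairs. The baskets map, the item names, and the function names are hypothetical.

```go
package main

import (
	"fmt"
	"sort"
)

// coOccurrence returns the number of customers who bought both a and b,
// divided by the number who bought at least one of them (between 0 and 1).
func coOccurrence(baskets map[string][]string, a, b string) float64 {
	both, either := 0, 0
	for _, items := range baskets {
		hasA, hasB := false, false
		for _, it := range items {
			if it == a {
				hasA = true
			}
			if it == b {
				hasB = true
			}
		}
		if hasA && hasB {
			both++
		}
		if hasA || hasB {
			either++
		}
	}
	if either == 0 {
		return 0
	}
	return float64(both) / float64(either)
}

// rank orders candidates by decreasing co-occurrence with item.
func rank(baskets map[string][]string, item string, candidates []string) []string {
	out := append([]string(nil), candidates...)
	sort.SliceStable(out, func(i, j int) bool {
		return coOccurrence(baskets, item, out[i]) > coOccurrence(baskets, item, out[j])
	})
	return out
}

func main() {
	// Made-up purchase histories keyed by customer.
	baskets := map[string][]string{
		"u1": {"kettle", "teapot"},
		"u2": {"kettle", "teapot", "mug"},
		"u3": {"kettle"},
		"u4": {"mug"},
	}
	fmt.Println(coOccurrence(baskets, "kettle", "mug"))
	fmt.Println(rank(baskets, "kettle", []string{"mug", "teapot"}))
}
```

In a full system, the pairs and scores produced by coOccurrence would form the labeled training set, and the trained model would replace the direct lookup inside rank.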

Preparing data

Data preparation refers to the processes performed on the input dataset before training the algorithm. A rigorous preparation process can simultaneously enhance the quality of the data and reduce the amount of time it will take the algorithm to reach the desired accuracy. The two steps to preparing data are data pre-processing and data transformation. We will go into more detail on preparing data in Chapter 2, Setting Up the Development Environment, Chapter 3, Supervised Learning, and Chapter 4, Unsupervised Learning.

Data pre-processing aims to transform the input dataset into a format that is adequate for work with the selected algorithm. A typical example of a pre-processing task is to format a date column in a certain way, or to ingest CSV files into a database, discarding any rows that lead to parsing errors. There may also be missing data values in an input data file that need to either be filled in (say, with a mean), or the entire sample discarded. Sensitive information such as personal information may need to be removed.
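Two of the pre-processing tasks mentioned above, discarding rows that fail to parse and filling missing values with a mean, can be sketched using only the standard library. The inline CSV text and the function names are made up for illustration, and treating an empty cell as "missing" is an assumption.

```go
package main

import (
	"encoding/csv"
	"fmt"
	"math"
	"strconv"
	"strings"
)

// parseColumn reads one numeric column from CSV text. Empty cells become
// NaN (missing); rows whose cell cannot be parsed are discarded.
func parseColumn(data string, col int) ([]float64, error) {
	r := csv.NewReader(strings.NewReader(data))
	r.FieldsPerRecord = -1 // allow rows of differing width
	rows, err := r.ReadAll()
	if err != nil {
		return nil, err
	}
	var out []float64
	for _, row := range rows {
		if col >= len(row) {
			continue // row too short to contain the column
		}
		cell := strings.TrimSpace(row[col])
		if cell == "" {
			out = append(out, math.NaN())
			continue
		}
		v, err := strconv.ParseFloat(cell, 64)
		if err != nil {
			continue // discard rows that lead to parsing errors
		}
		out = append(out, v)
	}
	return out, nil
}

// fillMean replaces NaN entries in place with the mean of the present values.
func fillMean(xs []float64) {
	sum, n := 0.0, 0
	for _, x := range xs {
		if !math.IsNaN(x) {
			sum += x
			n++
		}
	}
	if n == 0 {
		return
	}
	mean := sum / float64(n)
	for i, x := range xs {
		if math.IsNaN(x) {
			xs[i] = mean
		}
	}
}

func main() {
	// Row b has a missing value; row c has an unparseable one.
	data := "a,1\nb,\nc,oops\nd,3\n"
	xs, err := parseColumn(data, 1)
	if err != nil {
		panic(err)
	}
	fillMean(xs)
	fmt.Println(xs)
}
```

Whether to fill a missing value or drop the whole sample depends on the dataset; the sketch fills, but either choice should be made deliberately.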

Data transformation is the process of sampling, reducing, enhancing, or aggregating the dataset to make it more suitable for the algorithm. If the input dataset is small, it may be necessary to enhance it by artificially creating more examples, such as rotating images in an image recognition dataset. If the input dataset has features that the exploration has deemed irrelevant, it would be wise to remove them. If the dataset is more granular than the problem requires, aggregating it to a coarser granularity may help speed up results, such as aggregating city-level data to counties if the problem only requires a prediction per county.

Finally, if the input dataset is particularly large, as is the case with many image datasets intended for use by deep learning algorithms, it would be a good idea to start with a smaller sample that will produce fast results so that the viability of the algorithm can be verified before investing in more computing resources.

The sampling process will also divide the input dataset into training and validation subsets. We will explain why this is necessary later, and what proportion of the data to use for both.
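A minimal sketch of such a division is shown below. It shuffles sample indices with a seeded random source so that the split is reproducible. The function name, the 20% validation fraction, and the seed are assumptions for illustration, not recommendations from this chapter.

```go
package main

import (
	"fmt"
	"math/rand"
)

// split shuffles the indices 0..n-1 and divides them into training and
// validation sets. The validation fraction is chosen by the caller.
func split(n int, validationFraction float64, seed int64) (train, validation []int) {
	rng := rand.New(rand.NewSource(seed))
	idx := rng.Perm(n)
	cut := int(float64(n) * validationFraction)
	return idx[cut:], idx[:cut]
}

func main() {
	train, validation := split(10, 0.2, 42)
	fmt.Println(len(train), len(validation))
}
```

Returning indices rather than copies of the samples keeps the sketch independent of how the dataset itself is stored.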

Training

The most compute-intensive part of the ML development life cycle is the training process. Training an ML algorithm can take seconds in the simplest case or days when the input dataset is enormous and the algorithm requires many iterations to converge. The latter case is usually observed with deep learning techniques. For example, DeepMind's AlphaGo Zero algorithm took forty days to fully master the game of Go, even though it was proficient after only three[22]. Many algorithms that operate on smaller datasets and problems other than image or sound recognition will not require such a large amount of time or computational resource.

While the algorithm is training, particularly if the training phase will take a long time, it is useful to have some real-time measures of how well the training is going, so that it can be interrupted, re-configured, and restarted without waiting for the training to complete. These metrics are typically classified as loss metrics, where loss refers to the notional error that the algorithm makes either on the training or validation subsets.

Some of the most common loss metrics in prediction problems are as follows:

- Mean square error (MSE) measures the mean of the squared differences between the output variable and the predicted values.

- Mean absolute error (MAE) measures the mean of the absolute differences between the output variable and the predicted values.

- Huber loss is a combination of the MSE and MAE that is more robust to outliers while remaining a good estimator of both the mean and median loss.

Some of the most common loss metrics in classification problems are as follows:

- Logarithmic loss measures the accuracy of the classifier by placing a penalty on false classifications. It is closely related to cross-entropy loss.

- Focal loss is a newer loss function aimed at preventing false negatives when the input dataset is sparse[23].

Validating/testing

Software engineers are familiar with testing and debugging software source code, but how should ML models be tested? Pieces of algorithms and data input/output routines can be unit tested, but often it is unclear how to ensure that the ML model itself, which presents as a black box, is correct.

The first step to ensuring correctness and sufficient accuracy of an ML model is validation. This means applying the model to predict or classify the validation data subset, and measuring the resulting accuracy against project objectives. Because the training data subset was already seen by the algorithm, it cannot be used to validate correctness, as the model could suffer from poor generalizability (also known as overfitting). To take a nonsensical example, imagine an ML model that consists of a hash map that memorizes each input sample and maps it to the corresponding training output sample. The model would have 100% accuracy on the training data subset, which was previously memorized, but very low accuracy on any other data subset, and therefore it would not solve the problem it was intended for. Validation tests against this phenomenon.
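The hash map "model" from this thought experiment is easy to write down, which makes the failure mode visible. The type and function names are hypothetical, and the messages are made-up examples.

```go
package main

import "fmt"

// memorizer "learns" by storing every training pair in a hash map.
type memorizer map[string]string

// train memorizes each input together with its label.
func train(inputs, labels []string) memorizer {
	m := make(memorizer, len(inputs))
	for i, in := range inputs {
		m[in] = labels[i]
	}
	return m
}

// Predict returns the memorized label, or "" for an unseen input.
func (m memorizer) Predict(input string) string {
	return m[input]
}

// accuracy is the fraction of inputs whose prediction matches the label.
func accuracy(m memorizer, inputs, labels []string) float64 {
	correct := 0
	for i, in := range inputs {
		if m.Predict(in) == labels[i] {
			correct++
		}
	}
	return float64(correct) / float64(len(inputs))
}

func main() {
	trainX, trainY := []string{"free money", "meeting at 3"}, []string{"spam", "ham"}
	valX, valY := []string{"free prize", "lunch tomorrow"}, []string{"spam", "ham"}
	m := train(trainX, trainY)
	fmt.Println("training accuracy:", accuracy(m, trainX, trainY))
	fmt.Println("validation accuracy:", accuracy(m, valX, valY))
}
```

Measuring only the first number would suggest a perfect model; the second number, computed on data the model has never seen, reveals that it has learned nothing general.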

In addition, it is a good idea to validate model outputs against user acceptance criteria. For example, if building a recommender system for TV series, you may wish to ensure that the recommendations made to children are never rated PG-13 or higher. Rather than trying to encode this into the model, which will have a non-zero failure rate, it is better to push this constraint into the application itself, because the cost of not enforcing it would be too high. Such constraints and business rules should be captured at the start of the project.
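Pushing such a constraint into the application can be as simple as filtering the model's output before it reaches the user. In the sketch below, the Recommendation type and the rating labels are assumptions; an allow-list is used so that any rating the application does not recognize is excluded rather than shown.

```go
package main

import "fmt"

// Recommendation is one item returned by the model. The type is hypothetical.
type Recommendation struct {
	Title  string
	Rating string // assumed labels such as "G", "PG", "PG-13", "R"
}

// childSafe lists the only ratings that may be shown to children.
var childSafe = map[string]bool{"G": true, "PG": true}

// filterForChildren enforces the business rule outside the model:
// anything not explicitly allowed is dropped.
func filterForChildren(recs []Recommendation) []Recommendation {
	out := make([]Recommendation, 0, len(recs))
	for _, r := range recs {
		if childSafe[r.Rating] {
			out = append(out, r)
		}
	}
	return out
}

func main() {
	// Made-up model output.
	recs := []Recommendation{
		{Title: "A", Rating: "G"},
		{Title: "B", Rating: "PG-13"},
		{Title: "C", Rating: "unrated"},
		{Title: "D", Rating: "PG"},
	}
	for _, r := range filterForChildren(recs) {
		fmt.Println(r.Title, r.Rating)
	}
}
```

Because the rule lives in ordinary application code, it can be unit tested exhaustively, which is not possible for a constraint learned by the model.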

Integrating and deploying

The boundary between the ML model and the rest of the application must be defined. For example, will the algorithm expose a Predict method that provides a prediction for a given input sample? Will input data processing be required of the caller, or will the algorithm implementation perform it? Once this is defined, it is easier to follow best practice when it comes to testing or mocking the ML model to ensure correctness of the rest of the application. Separation of concerns is important for any application, but for ML applications where one component behaves like a black box, it is essential.
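One way to draw this boundary in Go is a small interface that the rest of the application depends on, with a stub implementation standing in for the real model during tests. The interface name, its method signature, and the stub are assumptions made for this sketch rather than the API of any particular library.

```go
package main

import (
	"errors"
	"fmt"
)

// Predictor is the boundary between the ML model and the application.
type Predictor interface {
	Predict(sample []float64) (float64, error)
}

// stubModel is a test double that always returns a fixed prediction.
type stubModel struct{ value float64 }

// Predict returns the fixed value, or an error for an empty sample.
func (s stubModel) Predict(sample []float64) (float64, error) {
	if len(sample) == 0 {
		return 0, errors.New("empty sample")
	}
	return s.value, nil
}

// describe is application code that depends only on the interface,
// so it can be tested without training or loading a real model.
func describe(p Predictor, sample []float64) string {
	v, err := p.Predict(sample)
	if err != nil {
		return "prediction unavailable: " + err.Error()
	}
	return fmt.Sprintf("predicted %.2f", v)
}

func main() {
	fmt.Println(describe(stubModel{value: 1.5}, []float64{0.1, 0.2}))
	fmt.Println(describe(stubModel{value: 1.5}, nil))
}
```

In this sketch the caller passes an already prepared sample; moving input processing behind the interface instead is an equally valid answer to the question posed above, as long as the choice is made explicitly.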

There are a number of possible deployment methods for ML applications. For Go applications, containerization is particularly simple as the compiled binary will have no dependencies (except in some very special cases, such as where bindings to deep learning libraries such as TensorFlow are required). Different cloud vendors also admit serverless deployments and have different continuous integration/continuous deployment (CI/CD) offerings. Part of the advantage of using a language such as Go is that the application can be deployed very flexibly making use of available tooling for traditional systems applications, and without resorting to a messy polyglot approach.

In Chapter 6, Deploying Machine Learning Applications, we will take a deep dive into topics such as deployment models, Platform as a Service (PaaS) versus Infrastructure as a Service (IaaS), and monitoring and alerting specific to ML applications, leveraging the tools built for the Go language.

Re-validating

It is rare to put a model into production that never requires updating or re-training. A recommender system may need regular re-training as user preferences shift. An image recognition model for car makes and models may need re-training as more models come onto the market. A behavioral forecasting tool that produces one model for each device in an IoT population may need continuous monitoring to ensure that each model still satisfies the desired accuracy criterion, and to re-train those that do not.

The re-validation process is a continuous process where the accuracy of the model is tested and, if it is deemed to have decreased, an automated or manual process is triggered to re-train it, ensuring that the results are always optimal.
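The check at the heart of this process can be sketched as a function that compares recent predictions with the outcomes that were later observed. The function name, the use of plain accuracy as the criterion, and the target value are assumptions for illustration; what happens once re-training is triggered is left out.

```go
package main

import "fmt"

// needsRetraining reports whether accuracy measured on freshly labeled
// data has fallen below the project's target.
func needsRetraining(predicted, actual []string, target float64) bool {
	if len(actual) == 0 {
		return false // nothing to measure against yet
	}
	correct := 0
	for i := range actual {
		if predicted[i] == actual[i] {
			correct++
		}
	}
	return float64(correct)/float64(len(actual)) < target
}

func main() {
	// Made-up recent predictions and the outcomes observed afterwards.
	predicted := []string{"a", "b", "c", "d"}
	actual := []string{"a", "b", "x", "y"}
	if needsRetraining(predicted, actual, 0.9) {
		fmt.Println("accuracy below target: trigger re-training")
	}
}
```

Run on a schedule, a check like this turns re-validation into an automated step rather than something that depends on a person noticing degraded results.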

Summary

In this chapter, we introduced ML and the different types of ML problems. We argued for Go as a language to develop ML applications. Then, we outlined the ML development life cycle, the process of creating and taking to production an ML application.

In the next chapter, we will explain how to set up a development environment for ML applications and Go.

Further readings

- https://www.crunchbase.com/hub/machine-learning-companies, retrieved on February 9, 2019.

- https://www.ft.com/content/133dc9c8-90ac-11e8-9609-3d3b945e78cf. Machine Learning will be the global engine of growth.

- https://news.crunchbase.com/news/venture-funding-ai-machine-learning-levels-off-tech-matures/. Retrieved on February 9, 2019.

- https://www.economist.com/science-and-technology/2018/02/15/for-artificial-intelligence-to-thrive-it-must-explain-itself. Retrieved on February 9, 2019.

- https://www.nytimes.com/column/machine-learning. Retrieved on February 9, 2019.

- See for example Google Trends for Machine Learning. https://trends.google.com/trends/explore?date=all&geo=US&q=machine%20learning.

- R. Kohavi and F. Provost, Glossary of Terms, Machine Learning, vol. 30, no. 2–3, pp. 271–274, 1998.

- Turing, Alan (October 1950). Computing Machinery and Intelligence. Mind. 59 (236): 433–460. doi:10.1093/mind/LIX.236.433. Retrieved 8 June 2016.

- https://www.forbes.com/sites/bernardmarr/2016/12/06/what-is-the-difference-between-artificial-intelligence-and-machine-learning/. Retrieved on February 9, 2019.

- https://talks.golang.org/2012/splash.article. Retrieved February 9, 2019.

- https://talks.golang.org/2012/splash.article. Retrieved February 9, 2019.

- https://insights.stackoverflow.com/survey/2018/. Retrieved February 9, 2019.

- https://github.com/cloudflare. Retrieved February 9, 2019.

- https://github.com/uber. Retrieved February 9, 2019.

- https://github.com/dailymotion. Retrieved February 9, 2019.

- https://github.com/medium. Retrieved February 9, 2019.

- https://github.com/sjwhitworth/golearn. Retrieved on February 10, 2019.

- See the MNIST dataset hosted at http://yann.lecun.com/exdb/mnist/. Retrieved February 10, 2019.

- See https://machinelearningmastery.com/handwritten-digit-recognition-using-convolutional-neural-networks-python-keras/ for an example. Retrieved February 10, 2019.

- http://cognitivemedium.com/rmnist. Retrieved February 10, 2019.

- Regression Models to Predict Corrected Weight, Height and Obesity Prevalence From Self-Reported Data: data from BRFSS 1999-2007. Int J Obes (Lond). 2010 Nov; 34(11):1655-64. doi: 10.1038/ijo.2010.80. Epub 2010 Apr 13.

- https://deepmind.com/blog/alphago-zero-learning-scratch/. Retrieved February 10, 2019.

- Focal Loss for Dense Object Detection. Lin et al. ICCV 2017, pp. 2980-2988. Pre-print available at https://arxiv.org/pdf/1708.02002.pdf.
