Data science is not a single science as much as it is a collection of various scientific disciplines integrated for the purpose of analyzing data. In addition to various statistical and mathematical techniques, these disciplines include:

- Computer science

- Data engineering

- Visualization

- Domain-specific knowledge and approaches

With the advent of cheaper storage technology, more and more data has been collected and stored, permitting previously infeasible processing and analysis of data. With this analysis came the need for various techniques to make sense of the data. These large sets of data, when used to analyze data and identify trends and patterns, became known as big data.

This in turn gave rise to cloud computing and concurrent techniques such as map-reduce, which distributed the analysis process across a large number of processors, taking advantage of the power of parallel processing.

The process of analyzing big data is not simple, and it has led to the emergence of specialized developers known as data scientists. Drawing upon a myriad of technologies and expertise, they are able to analyze data to solve problems that previously were either not envisioned or were too difficult to solve.

Early big data applications were typified by the emergence of search engines capable of more powerful and accurate searches than their predecessors. For example, AltaVista was an early popular search engine that was eventually superseded by Google. While big data applications were not limited to these search engine functionalities, these applications laid the groundwork for future work in big data.

The term, data science, has been used since 1974 and evolved over time to include statistical analysis of data. The concepts of data mining and data analytics have been associated with data science. Around 2008, the term data scientist appeared and was used to describe a person who performs data analysis. A more in-depth discussion of the history of data science can be found at http://www.forbes.com/sites/gilpress/2013/05/28/a-very-short-history-of-data-science/#3d9ea08369fd.

This book aims to take a broad look at data science using Java and will briefly touch on many topics. It is likely that the reader may find topics of interest and pursue these at greater depth independently. The purpose of this book, however, is simply to introduce the reader to the significant data science topics and to illustrate how they can be addressed using Java.

There are many algorithms used in data science. In this book, we do not attempt to explain how they work except at an introductory level. Rather, we are more interested in explaining how they can be used to solve problems. Specifically, we are interested in knowing how they can be used with Java.

The various data science techniques that we will illustrate have been used to solve a variety of problems. Many of these techniques are motivated to achieve some economic gain, but they have also been used to solve many pressing social and environmental problems. Problem domains where these techniques have been used include finance, optimizing business processes, understanding customer needs, performing DNA analysis, foiling terrorist plots, and finding relationships between transactions to detect fraud, among many other data-intensive problems.

Data mining is a popular application area for data science. In this activity, large quantities of data are processed and analyzed to glean information about the dataset, to provide meaningful insights, and to develop meaningful conclusions and predictions. It has been used to analyze customer behavior, detect relationships between what may appear to be unrelated events, and make predictions about future behavior.

Machine learning is an important aspect of data science. This technique allows the computer to solve various problems without needing to be explicitly programmed. It has been used in self-driving cars, speech recognition, and in web searches. In data mining, the data is extracted and processed. With machine learning, computers use the data to take some sort of action.

Data science is concerned with the processing and analysis of large quantities of data to create models that can be used to make predictions or otherwise support a specific goal. This process often involves the building and training of models. The specific approach to solve a problem is dependent on the nature of the problem. However, in general, the following are the high-level tasks that are used in the analysis process:

- Acquiring the data: Before we can process the data, it must be acquired. The data is frequently stored in a variety of formats and will come from a wide range of data sources.

- Cleaning the data: Once the data has been acquired, it often needs to be converted to a different format before it can be used. In addition, the data needs to be processed, or cleaned, so as to remove errors, resolve inconsistencies, and otherwise put it in a form ready for analysis.

- Analyzing the data: This can be performed using a number of techniques including:

- Statistical analysis: This uses a multitude of statistical approaches to provide insight into data. It includes simple techniques and more advanced techniques such as regression analysis.

- AI analysis: These can be grouped as machine learning, neural networks, and deep learning techniques:

- Machine learning approaches are characterized by programs that can learn without being specifically programmed to complete a specific task

- Neural networks are built around models patterned after the neural connection of the brain

- Deep learning attempts to identify higher levels of abstraction within a set of data

- Text analysis: This is a common form of analysis, which works with natural languages to identify features such as the names of people and places, the relationship between parts of text, and the implied meaning of text.

- Data visualization: This is an important analysis tool. By displaying the data in a visual form, a hard-to-understand set of numbers can be more readily understood.

- Video, image, and audio processing and analysis: This is a more specialized form of analysis, which is becoming more common as better analysis techniques are discovered and faster processors become available. This is in contrast to the more common text processing and analysis tasks.

Complementing this set of tasks is the need to develop applications that are efficient. The introduction of machines with multiple processors and GPUs contributes significantly to the end result.

While the exact steps used will vary by application, understanding these basic steps provides the basis for constructing solutions to many data science problems.

Using Java to support data science

Java and its associated third-party libraries provide a range of support for the development of data science applications. There are numerous core Java capabilities that can be used, such as the basic string processing methods. The introduction of lambda expressions in Java 8 helps enable more powerful and expressive means of building applications. In many of the examples that follow in subsequent chapters, we will show alternative techniques using lambda expressions.
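As a minimal sketch of what lambda expressions change in practice, the following compares a traditional loop with an equivalent Java 8 stream pipeline. The class name, method names, and sample strings are hypothetical and chosen only for illustration; both methods trim and lowercase each entry and drop the empty ones.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.stream.Collectors;

// Hypothetical illustration class; not part of any library.
final class LambdaSketch {

    // Traditional approach: an explicit loop with a mutable result list.
    static List<String> normalizeWithLoop(List<String> raw) {
        List<String> result = new ArrayList<>();
        for (String s : raw) {
            String t = s.trim().toLowerCase();
            if (!t.isEmpty()) {
                result.add(t);
            }
        }
        return result;
    }

    // Java 8 approach: the same steps expressed with lambda expressions.
    static List<String> normalizeWithLambdas(List<String> raw) {
        return raw.stream()
                .map(s -> s.trim().toLowerCase())   // clean each entry
                .filter(s -> !s.isEmpty())          // drop empty entries
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<String> raw = Arrays.asList(" Java ", "", "DATA", "  science");
        System.out.println(normalizeWithLoop(raw));
        System.out.println(normalizeWithLambdas(raw));
    }
}
```

Both calls produce the same list; the stream version simply states the transformation steps in order rather than managing the loop and the result list by hand.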

There is ample support provided for the basic data science tasks. These include multiple ways of acquiring data, libraries for cleaning data, and a wide variety of analysis approaches for tasks such as natural language processing and statistical analysis. There are also many libraries supporting neural network types of analysis.

Java can be a very good choice for data science problems. The language provides both object-oriented and functional support for solving problems. There is a large developer community to draw upon and there exist multiple APIs that support data science tasks. These are but a few reasons as to why Java should be used.

The remainder of this chapter will provide an overview of the data science tasks and Java support demonstrated in the book. Each section is only able to present a brief introduction to the topics and the available support. The subsequent chapter will go into considerably more depth regarding these topics.

Data acquisition is an important step in the data analysis process. When data is acquired, it is often in a specialized form and its contents may be inconsistent or different from an application's need. There are many sources of data, which are found on the Internet. Several examples will be demonstrated in Chapter 2, Data Acquisition.

Data may be stored in a variety of formats. Popular formats for text data include HTML, Comma Separated Values (CSV), JavaScript Object Notation (JSON), and XML. Image and audio data are stored in a number of formats. However, it is frequently necessary to convert one data format into another format, typically plain text.

For example, JSON (http://www.JSON.org/) is stored using blocks of curly braces containing key-value pairs. In the following example, part of a YouTube result is shown:

{

"kind": "youtube#searchResult",

"etag": etag,

"id": {

"kind": string,

"videoId": string,

"channelId": string,

"playlistId": string

},

...

}

Data is acquired using techniques such as processing live streams, downloading compressed files, and through screen scraping, where the information on a web page is extracted. Web crawling is a technique where a program examines a series of web pages, moving from one page to another, acquiring the data that it needs.

With many popular media sites, it is necessary to acquire a user ID and password to access data. A commonly used technique is OAuth, which is an open standard used to authenticate users to many different websites. The technique delegates access to a server resource and works over HTTPS. Several companies use OAuth 2.0, including PayPal, Facebook, Twitter, and Yelp.

Once the data has been acquired, it will need to be cleaned. Frequently, the data will contain errors, duplicate entries, or be inconsistent. It often needs to be converted to a simpler data type such as text. Data cleaning is often referred to as data wrangling, reshaping, or munging. They are effectively synonyms.

When data is cleaned, there are several tasks that often need to be performed, including checking its validity, accuracy, completeness, consistency, and uniformity. For example, when the data is incomplete, it may be necessary to provide substitute values.

Consider CSV data. It can be handled in one of several ways. We can use simple Java techniques such as the String class' split method. In the following sequence, a string array, csvArray, is assumed to hold comma-delimited data, one record per element. The split method populates a second, two-dimensional array, tokenArray, with one row of tokens per record.

for(int i=0; i<csvArray.length; i++) {

tokenArray[i] = csvArray[i].split(",");

}
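A self-contained version of this idea might look like the following sketch. The class name, method name, and sample records are assumptions made for illustration. Note that a plain split on commas does not handle quoted fields that themselves contain commas; a dedicated CSV library would be needed for that.

```java
import java.util.Arrays;

// Hypothetical illustration class; not part of any library.
final class CsvSplitSketch {

    // Splits each comma-delimited record into its own row of tokens.
    static String[][] tokenize(String[] csvArray) {
        String[][] tokenArray = new String[csvArray.length][];
        for (int i = 0; i < csvArray.length; i++) {
            // A limit of -1 keeps trailing empty fields, so "2,Bob," yields three tokens.
            tokenArray[i] = csvArray[i].split(",", -1);
        }
        return tokenArray;
    }

    public static void main(String[] args) {
        // Made-up sample data
        String[] csvArray = {"id,name,city", "1,Ann,Austin", "2,Bob,"};
        for (String[] row : tokenize(csvArray)) {
            System.out.println(Arrays.toString(row));
        }
    }
}
```

Passing -1 as the limit is a design choice: the single-argument split(",") silently discards trailing empty strings, which would make incomplete records appear shorter than complete ones.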

More complex data types require APIs to retrieve the data. For example, in Chapter 3, Data Cleaning, we will use the Jackson Project (https://github.com/FasterXML/jackson) to retrieve fields from a JSON file. The example uses a file containing a JSON-formatted presentation of a person, as shown next:

{

"firstname":"Smith",

"lastname":"Peter",

"phone":8475552222,

"address":["100 Main Street","Corpus","Oklahoma"]

}

The code sequence that follows shows how to extract the values for fields of a person. A parser is created, which uses getCurrentName to retrieve a field name. If the name is firstname, then the getText method returns the value for that field. The other fields are handled in a similar manner.

try {

JsonFactory jsonfactory = new JsonFactory();

JsonParser parser = jsonfactory.createParser(

new File("Person.json"));

while (parser.nextToken() != JsonToken.END_OBJECT) {

String token = parser.getCurrentName();

if ("firstname".equals(token)) {

parser.nextToken();

String fname = parser.getText();

out.println("firstname : " + fname);

}

...

}

parser.close();

} catch (IOException ex) {

// Handle exceptions

}

The output of this example is as follows:

firstname : Smith

Simple data cleaning may involve converting the text to lowercase, replacing certain text with blanks, and replacing runs of whitespace with a single blank. One way of doing this is shown next, where a combination of the String class' toLowerCase, replaceAll, and trim methods is used. Here, a string containing dirty text is processed:

dirtyText = dirtyText

.toLowerCase()

.replaceAll("[\\d[^\\w\\s]]+", " ")

.trim();

// Collapse any run of two or more spaces into a single space

while(dirtyText.contains("  ")){

dirtyText = dirtyText.replaceAll("  ", " ");

}

Stop words are words such as the, and, or but that do not always contribute to the analysis of text. Removing these stop words can often improve the results and speed up the processing.

The LingPipe API can be used to remove stop words. In the next code sequence, a TokenizerFactory class instance is used to tokenize text. Tokenization is the process of returning individual words. The EnglishStopTokenizerFactory class is a special tokenizer that removes common English stop words.

text = text.toLowerCase().trim();

TokenizerFactory fact = IndoEuropeanTokenizerFactory.INSTANCE;

fact = new EnglishStopTokenizerFactory(fact);

Tokenizer tok = fact.tokenizer(

text.toCharArray(), 0, text.length());

for(String word : tok){

out.print(word + " ");

}

Consider the following text, which was pulled from the book, Moby Dick:

Call me Ishmael. Some years ago- never mind how long precisely - having little or no money in my purse, and nothing particular to interest me on shore, I thought I would sail about a little and see the watery part of the world.

The output will be as follows:

call me ishmael . years ago - never mind how long precisely - having little money my purse , nothing particular interest me shore , i thought i sail little see watery part world .
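To make the idea of stop word removal concrete without the LingPipe dependency, here is a rough sketch in plain Java. The class name, method name, and the short stop word list are assumptions made for illustration; the list is far smaller than the one LingPipe's EnglishStopTokenizerFactory uses, and splitting on whitespace is a much cruder tokenizer than IndoEuropeanTokenizerFactory.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.Set;
import java.util.stream.Collectors;

// Hypothetical illustration class; not part of LingPipe.
final class StopWordSketch {

    // A deliberately small, made-up stop word list.
    static final Set<String> STOP_WORDS = new HashSet<>(
            Arrays.asList("the", "and", "or", "but", "a", "of", "in", "to"));

    // Lowercases the text, splits it on whitespace, and drops stop words.
    static String removeStopWords(String text) {
        return Arrays.stream(text.toLowerCase().trim().split("\\s+"))
                .filter(word -> !STOP_WORDS.contains(word))
                .collect(Collectors.joining(" "));
    }

    public static void main(String[] args) {
        // Prints: watery part world
        System.out.println(removeStopWords("The watery part of the world"));
    }
}
```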

These are just a couple of the data cleaning tasks discussed in Chapter 3, Data Cleaning.

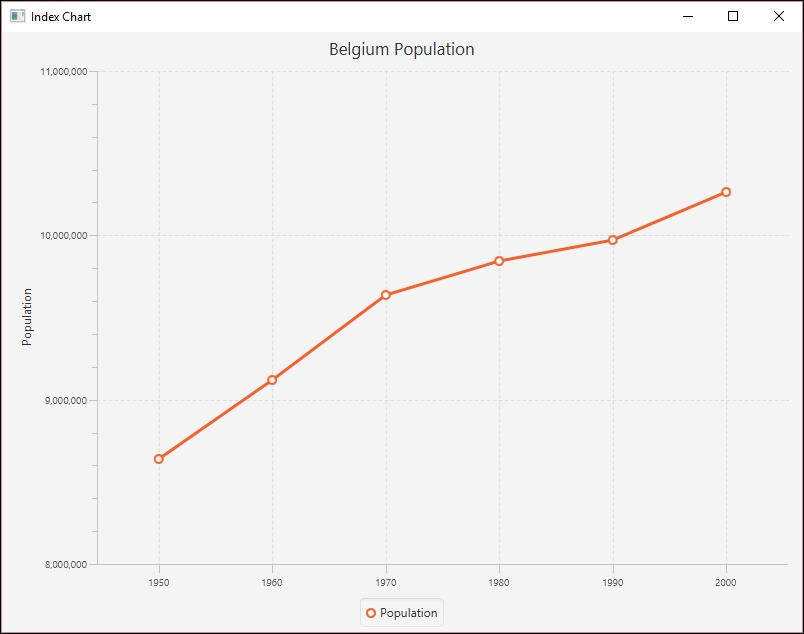

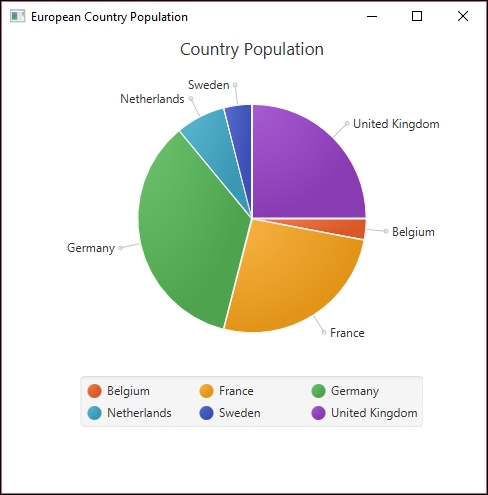

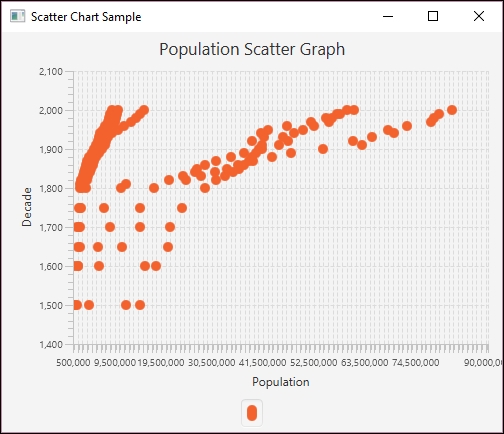

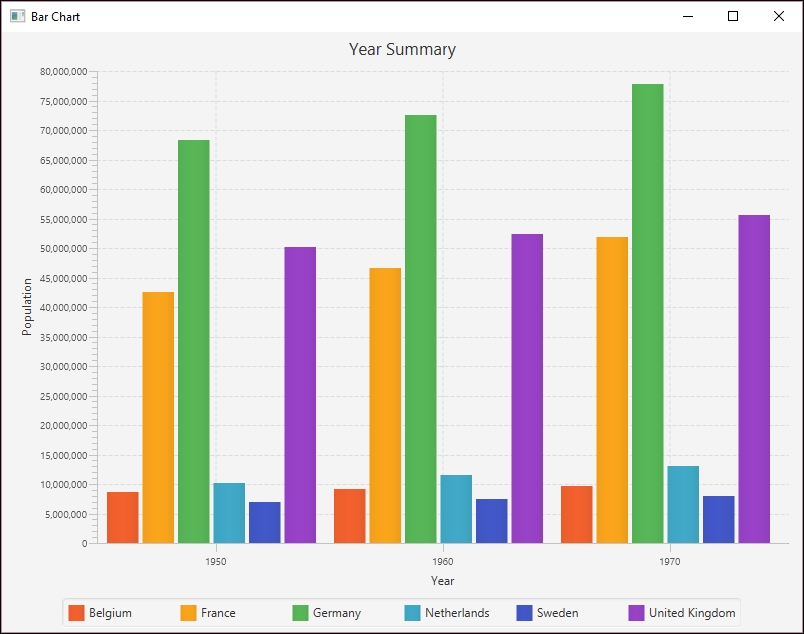

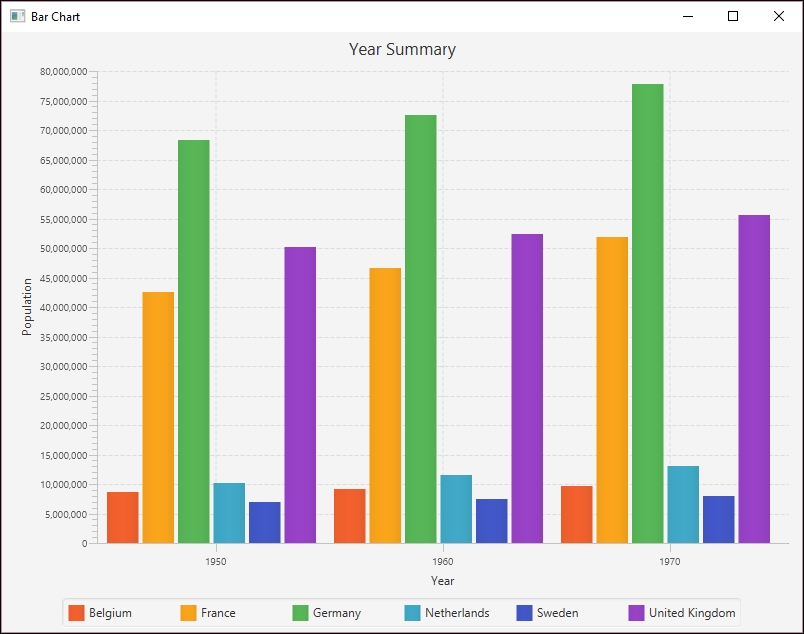

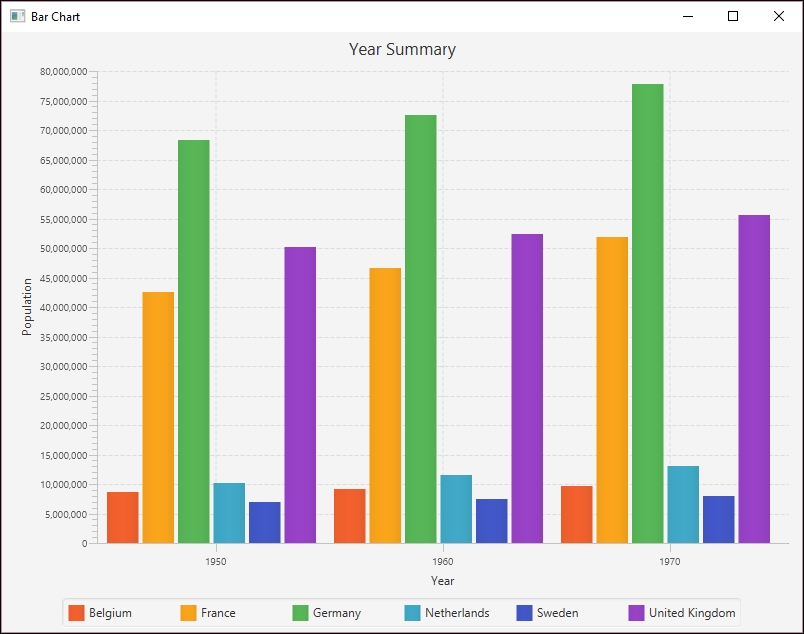

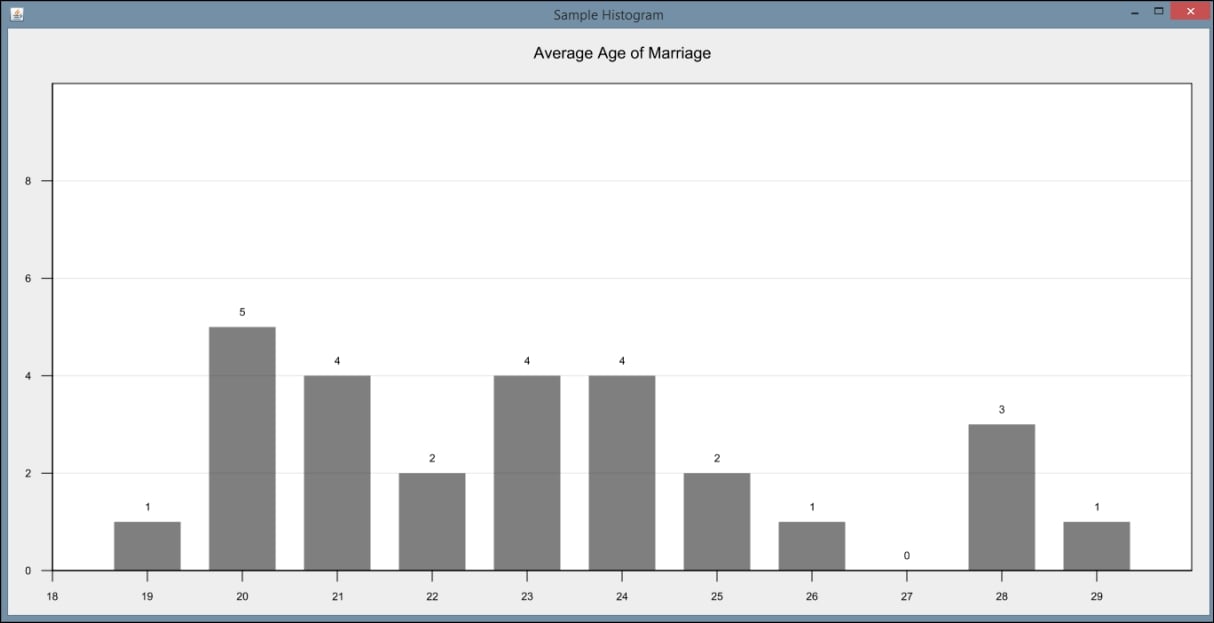

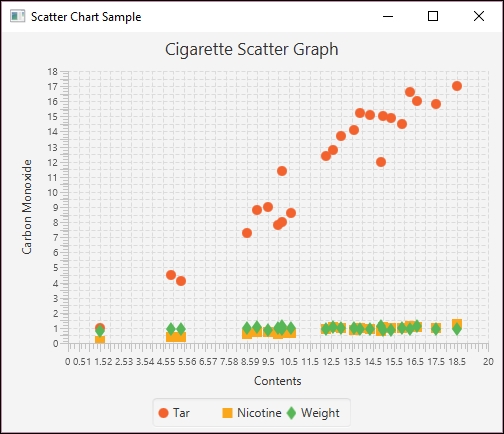

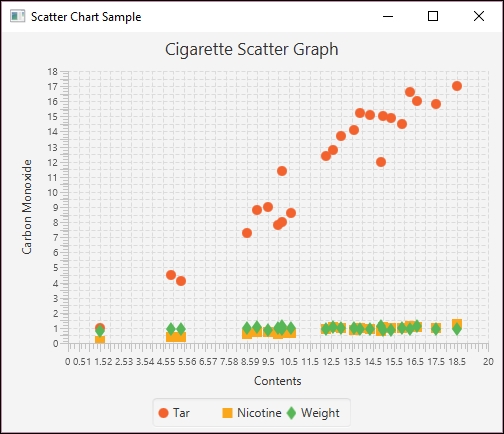

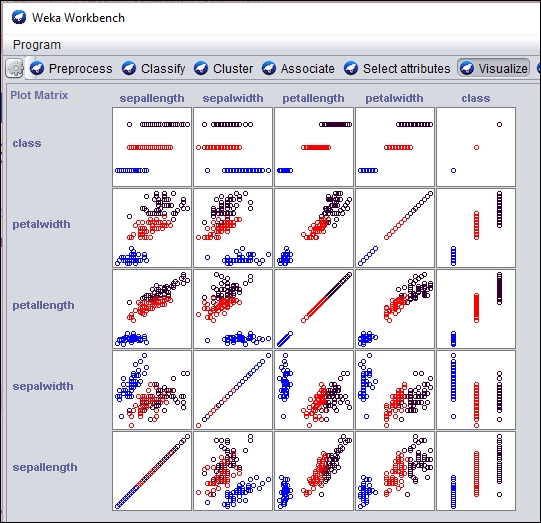

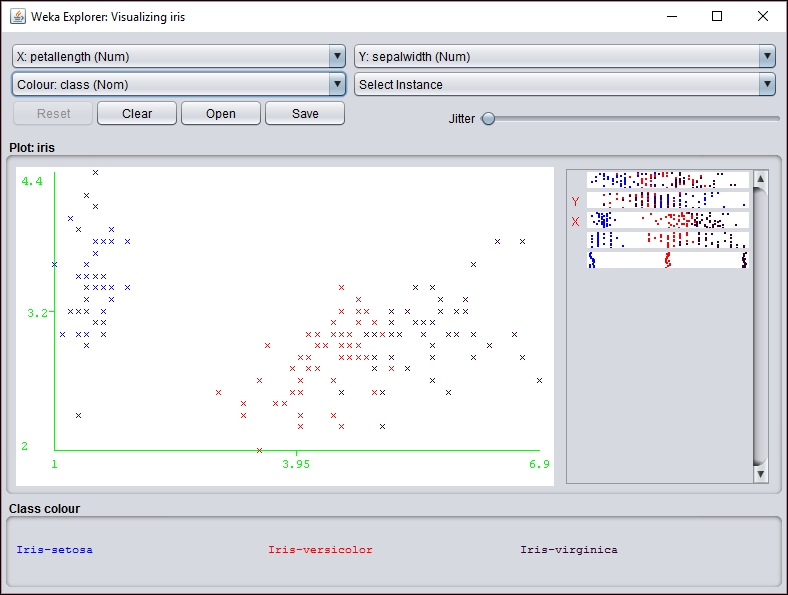

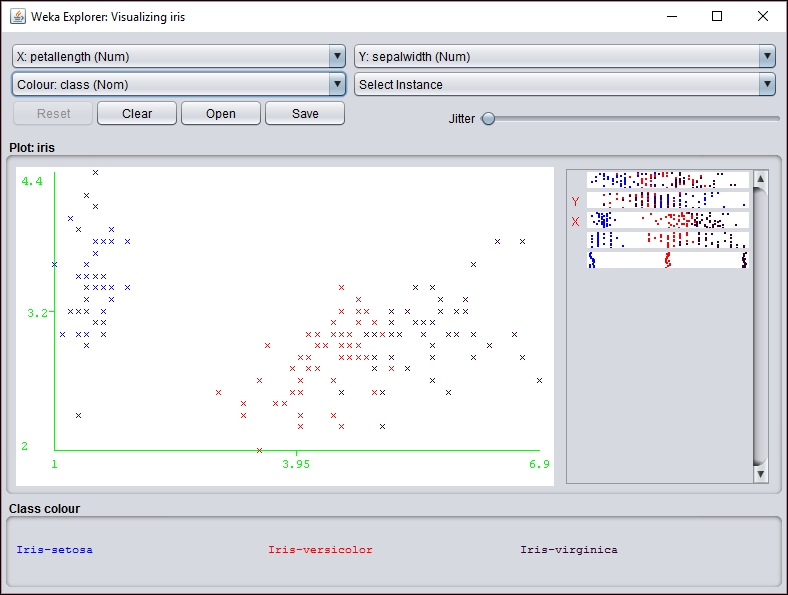

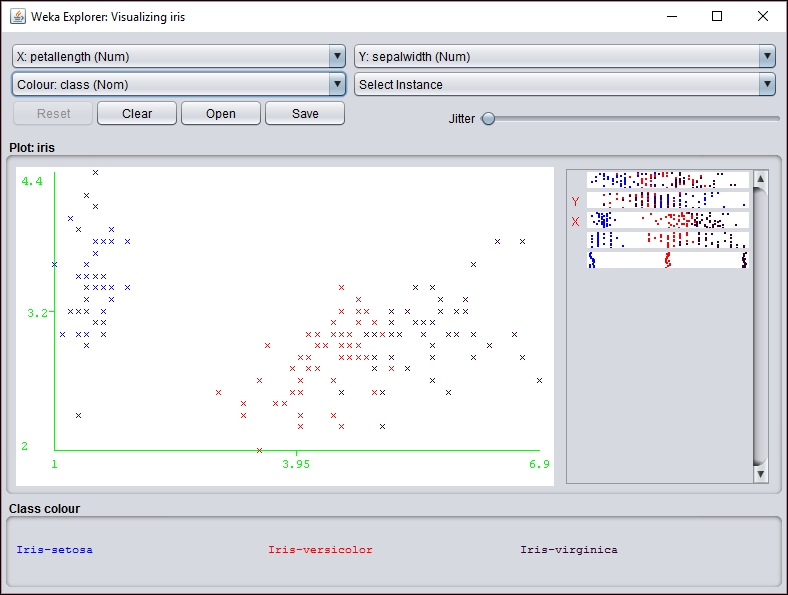

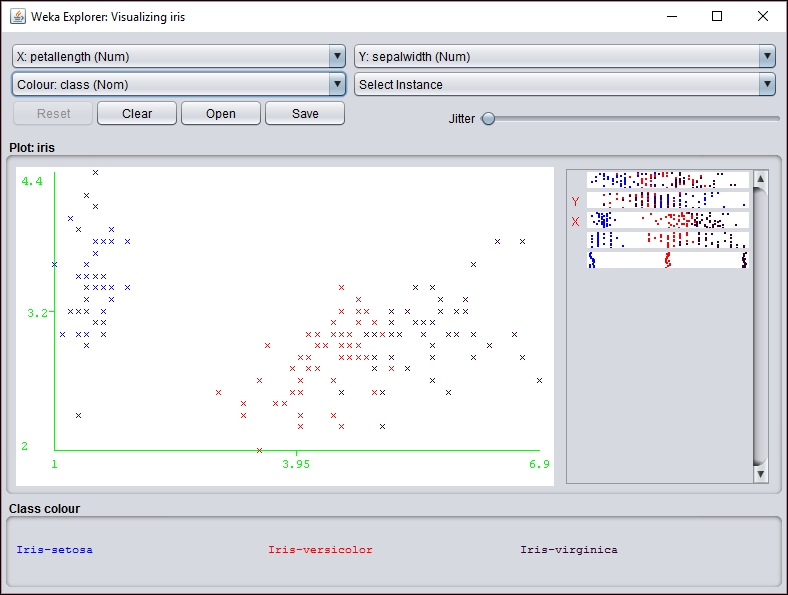

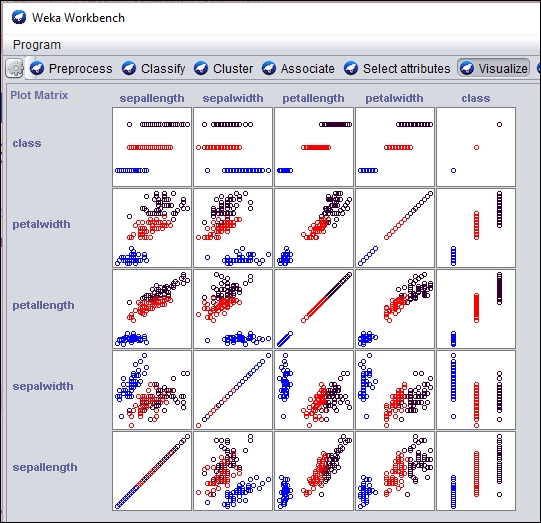

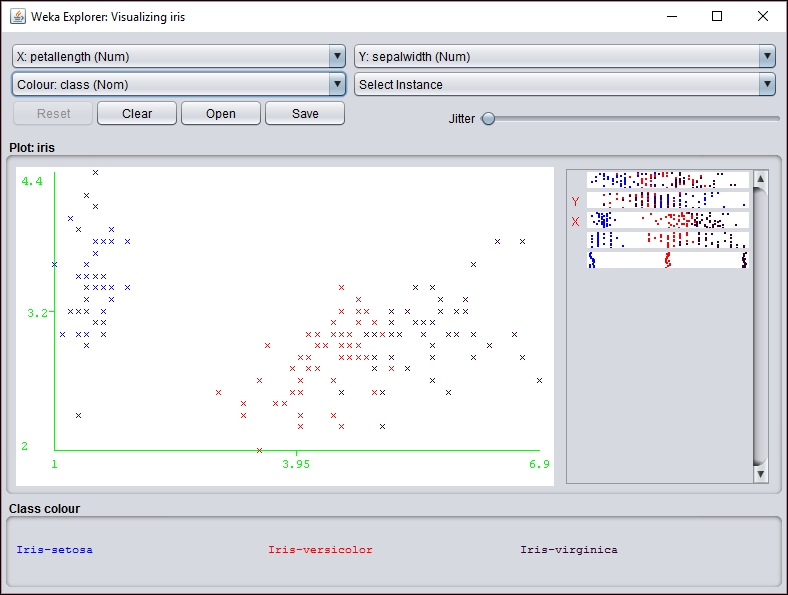

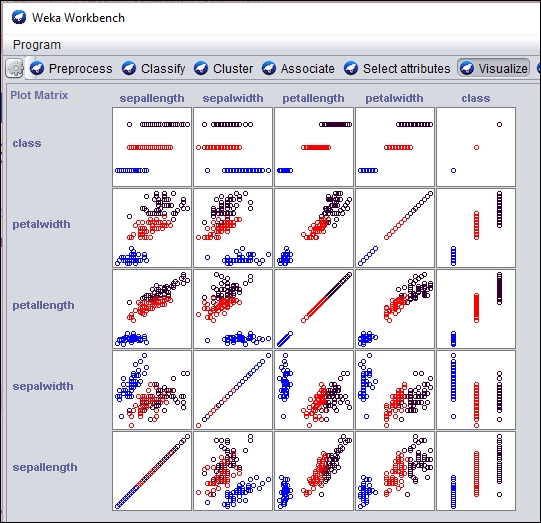

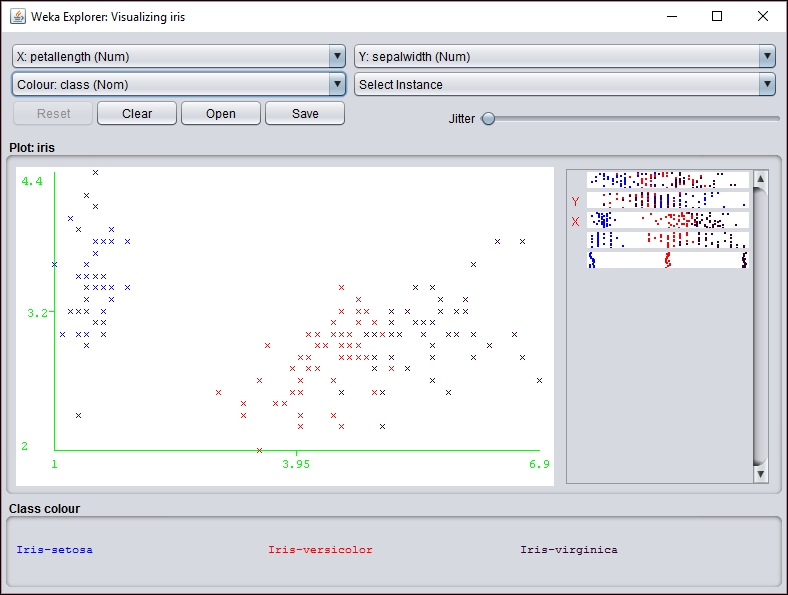

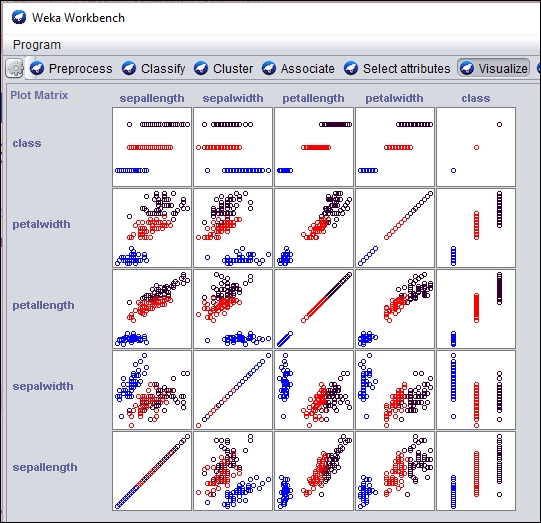

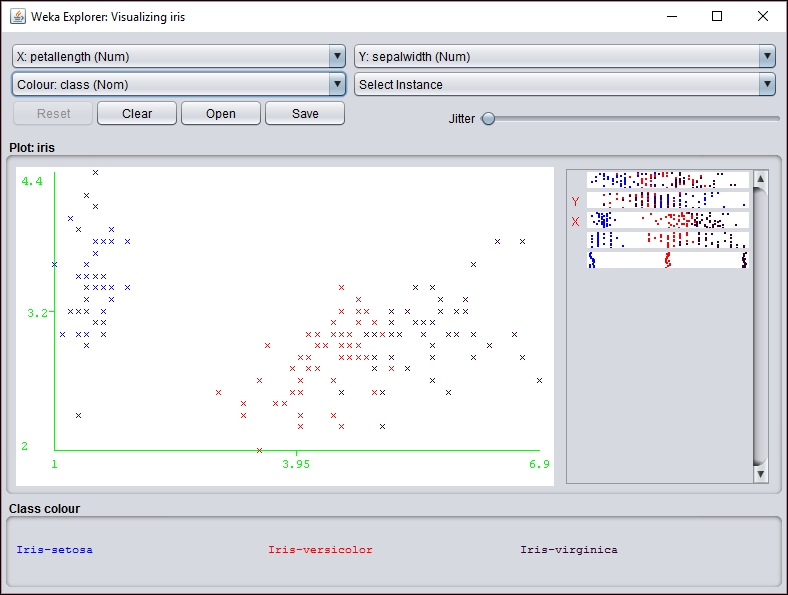

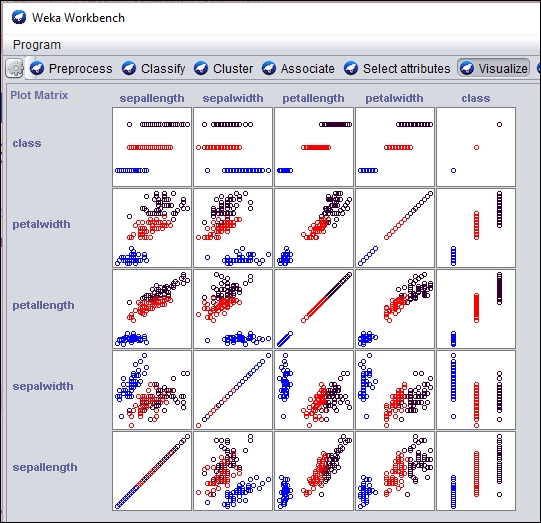

The analysis of data often results in a series of numbers representing the results of the analysis. However, for most people, this way of expressing results is not always intuitive. A better way to understand the results is to create graphs and charts to depict the results and the relationship between the elements of the result.

The human mind is often good at seeing patterns, trends, and outliers in visual representation. The large amount of data present in many data science problems can be analyzed using visualization techniques. Visualization is appropriate for a wide range of audiences ranging from analysts to upper-level management to clientele. In this chapter, we present various visualization techniques and demonstrate how they are supported in Java.

In Chapter 4, Data Visualization, we illustrate how to create different types of graphs, plots, and charts. These examples use JavaFX and a free library called GRAL (http://trac.erichseifert.de/gral/).

Visualization allows users to examine large datasets in ways that provide insights that are not apparent in the mass of the data. Visualization tools help us identify potential problems or unexpected data results and develop meaningful interpretations of the data.

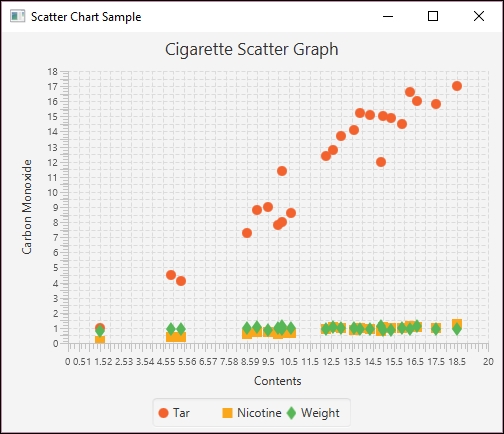

For example, outliers, which are values that lie outside of the normal range of values, can be hard to spot from a sea of numbers. Creating a graph based on the data allows users to quickly see outliers. It can also help spot errors quickly and more easily classify data.

For example, the following chart might suggest that the upper two values should be outliers that need to be dealt with:

Statistical analysis is the key to many data science tasks. It is used for many types of analysis ranging from the computation of a simple mean and median to complex multiple regression analysis. Chapter 5, Statistical Data Analysis Techniques, introduces this type of analysis and the Java support available.

Statistical analysis is not always an easy task. In addition, advanced statistical techniques often require a particular mindset to fully comprehend, which can be difficult to learn. Fortunately, many techniques are not that difficult to use and various libraries mitigate some of these techniques' inherent complexity.

Regression analysis, in particular, is an important technique for analyzing data. The technique attempts to draw a line that matches a set of data. An equation representing the line is calculated and can be used to predict future behavior. There are several types of regression analysis, including simple and multiple regression. They vary by the number of variables being considered.
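For simple regression with one variable, the fitted line can be computed directly with the ordinary least squares formulas. The following is a minimal sketch in plain Java, not the Apache Commons approach used later in the book; the class name, method names, and data points are made up for illustration.

```java
// Hypothetical illustration class; not part of Apache Commons.
final class SimpleRegressionSketch {

    // Fits y = intercept + slope * x by ordinary least squares.
    // Returns a two-element array: {intercept, slope}.
    static double[] fit(double[] x, double[] y) {
        int n = x.length;
        double meanX = 0.0;
        double meanY = 0.0;
        for (int i = 0; i < n; i++) {
            meanX += x[i];
            meanY += y[i];
        }
        meanX /= n;
        meanY /= n;

        double sxy = 0.0; // sum of (x - meanX) * (y - meanY)
        double sxx = 0.0; // sum of (x - meanX)^2
        for (int i = 0; i < n; i++) {
            sxy += (x[i] - meanX) * (y[i] - meanY);
            sxx += (x[i] - meanX) * (x[i] - meanX);
        }
        double slope = sxy / sxx;
        double intercept = meanY - slope * meanX;
        return new double[] {intercept, slope};
    }

    // Uses the fitted line to predict a value for a new x.
    static double predict(double[] model, double x) {
        return model[0] + model[1] * x;
    }

    public static void main(String[] args) {
        // Made-up points that lie exactly on y = 1 + 2x
        double[] x = {1, 2, 3, 4};
        double[] y = {3, 5, 7, 9};
        double[] model = fit(x, y);
        System.out.println("intercept = " + model[0] + ", slope = " + model[1]);
        System.out.println("prediction for x = 5: " + predict(model, 5));
    }
}
```

With these points the fitted line has an intercept of 1 and a slope of 2, so the prediction for x = 5 is 11. Real data will not fall exactly on a line, and the same formulas then give the line that minimizes the squared vertical distances to the points.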

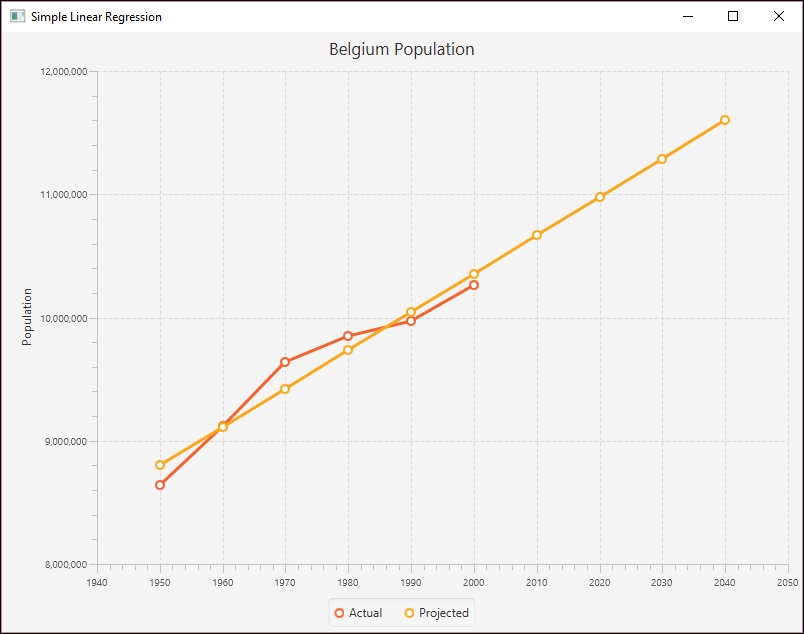

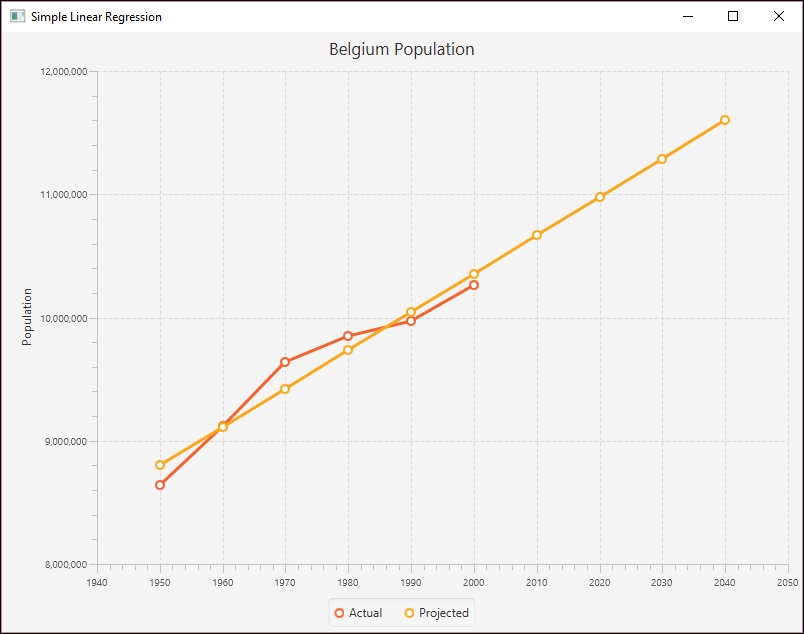

The following graph shows the straight line that closely matches a set of data points representing the population of Belgium over several decades:

Simple statistical techniques, such as mean and standard deviation, can be computed using basic Java. They can also be handled by libraries such as Apache Commons. For example, to calculate the median, we can use the Apache Commons DescriptiveStatistics class. This is illustrated next where the median of an array of doubles is calculated. The numbers are added to an instance of this class, as shown here:

double[] testData = {12.5, 18.3, 11.2, 19.0, 22.1, 14.3, 16.2,

12.5, 17.8, 16.5, 12.5};

DescriptiveStatistics statTest =

new SynchronizedDescriptiveStatistics();

for(double num : testData){

statTest.addValue(num);

}

The getPercentile method returns the value stored at the percentile specified in its argument. To find the median, we use the value of 50.

out.println("The median is " + statTest.getPercentile(50));

Our output is as follows:

The median is 16.2
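For comparison, the same kind of statistic can be computed with basic Java alone. The sketch below reuses the testData values from the example above; the class and method names are hypothetical, and the standard deviation shown is the sample form (dividing by n - 1), which is an assumption about which variant is wanted.

```java
import java.util.Arrays;

// Hypothetical illustration class; not part of Apache Commons.
final class BasicStatsSketch {

    static double mean(double[] data) {
        double sum = 0.0;
        for (double d : data) {
            sum += d;
        }
        return sum / data.length;
    }

    // Sample standard deviation (divides by n - 1).
    static double sampleStdDev(double[] data) {
        double m = mean(data);
        double sumOfSquares = 0.0;
        for (double d : data) {
            sumOfSquares += (d - m) * (d - m);
        }
        return Math.sqrt(sumOfSquares / (data.length - 1));
    }

    // Middle value of the sorted data, or the average of the two middle values.
    static double median(double[] data) {
        double[] sorted = data.clone(); // leave the caller's array untouched
        Arrays.sort(sorted);
        int mid = sorted.length / 2;
        return sorted.length % 2 == 1
                ? sorted[mid]
                : (sorted[mid - 1] + sorted[mid]) / 2.0;
    }

    public static void main(String[] args) {
        double[] testData = {12.5, 18.3, 11.2, 19.0, 22.1, 14.3, 16.2,
                12.5, 17.8, 16.5, 12.5};
        System.out.println("The mean is " + mean(testData));
        System.out.println("The standard deviation is " + sampleStdDev(testData));
        System.out.println("The median is " + median(testData));
    }
}
```

The median printed here matches the 16.2 reported by getPercentile(50), since the dataset has eleven values and 16.2 is the sixth value once they are sorted.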

In Chapter 5, Statistical Data Analysis Techniques, we will demonstrate how to perform regression analysis using the Apache Commons SimpleRegression class.

Machine learning has become increasingly important for data science analysis as it has been for a multitude of other fields. A defining characteristic of machine learning is the ability of a model to be trained on a set of representative data and then later used to solve similar problems. There is no need to explicitly program an application to solve the problem. A model is a representation of the real-world object.

For example, customer purchases can be used to train a model. Predictions can then be made about the types of purchases a customer might subsequently make. This allows an organization to tailor ads and coupons for a customer and potentially provide a better customer experience.

Training can be performed in one of several different approaches:

- Supervised learning: The model is trained with annotated (labeled) data showing the corresponding correct results

- Unsupervised learning: The data does not contain results, but the model is expected to find relationships on its own

- Semi-supervised: A small amount of labeled data is combined with a larger amount of unlabeled data

- Reinforcement learning: This is similar to supervised learning, but a reward is provided for good results

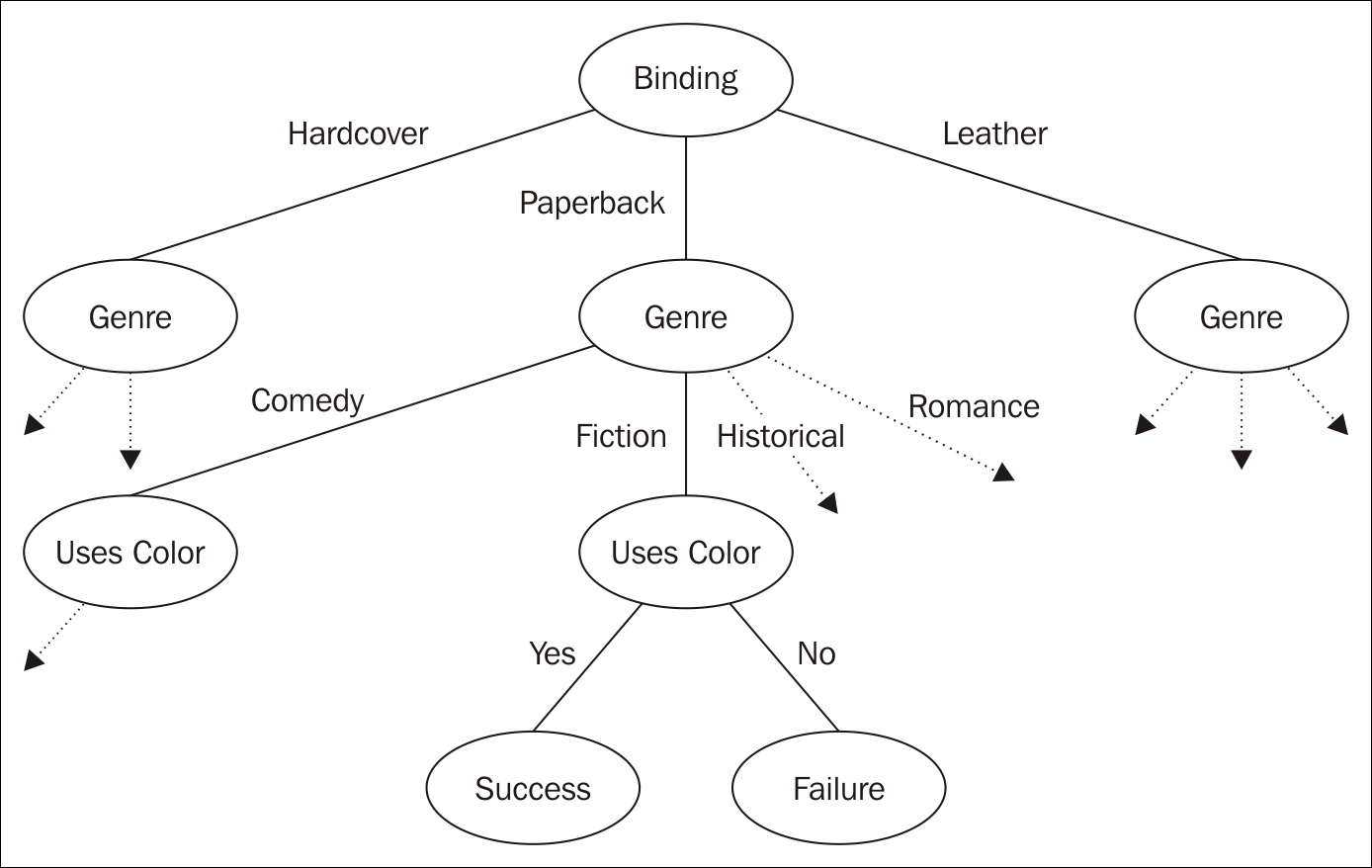

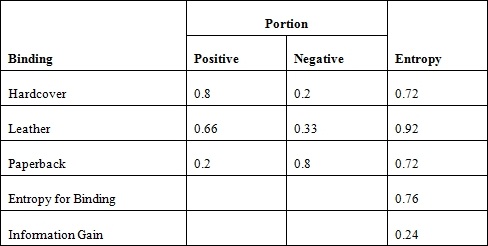

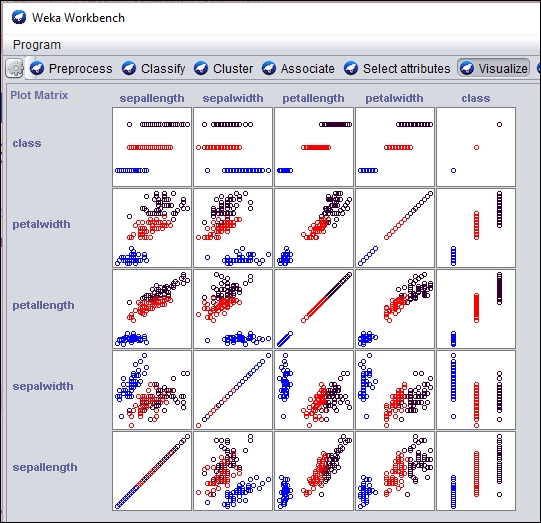

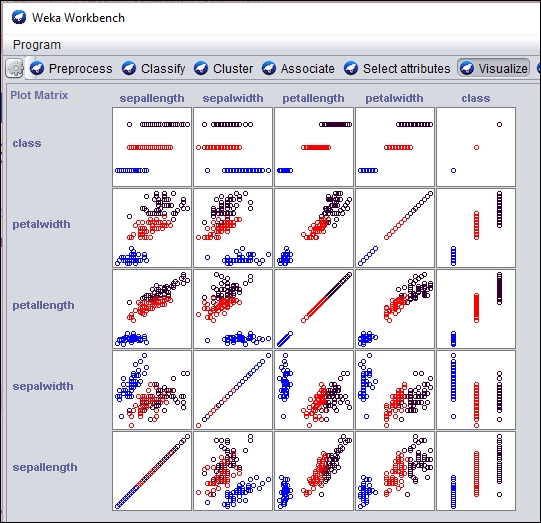

There are several approaches that support machine learning. In Chapter 6, Machine Learning, we will illustrate three techniques:

- Decision trees: A tree is constructed using features of the problem as internal nodes and the results as leaves

- Support vector machines: This is used for classification by creating a hyperplane that partitions the dataset and then makes predictions

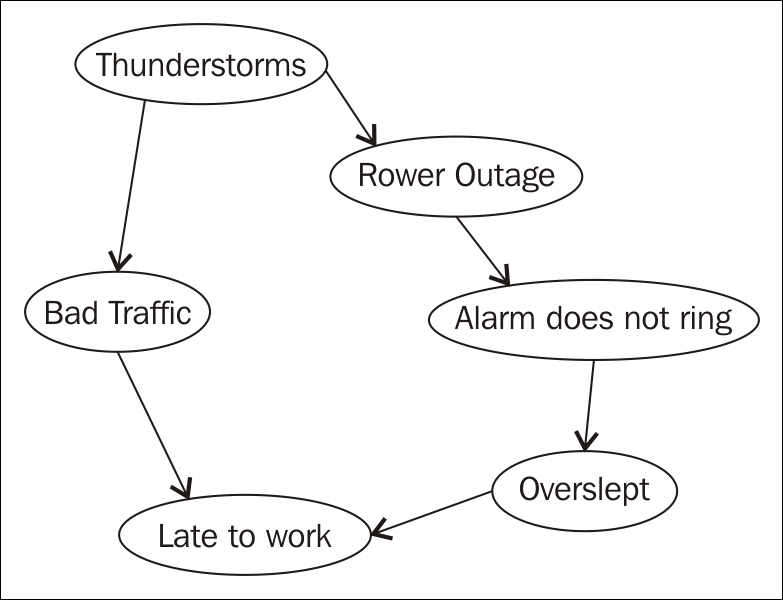

- Bayesian networks: This is used to depict probabilistic relationships between events

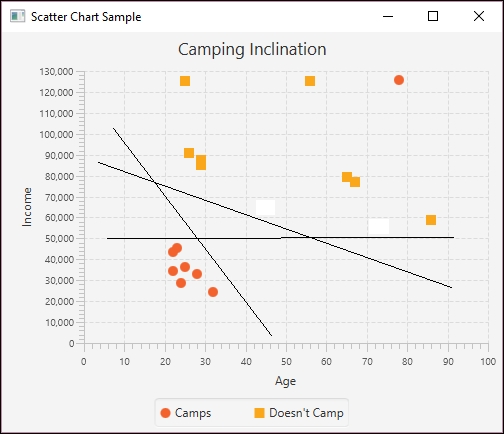

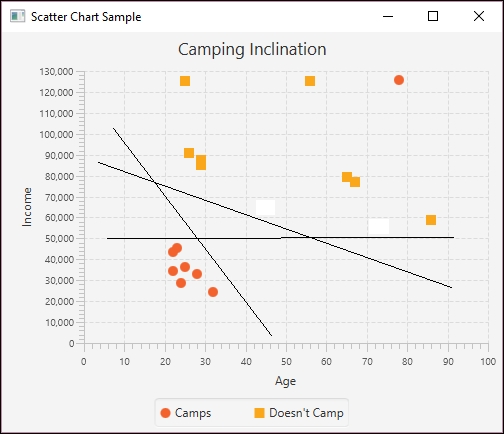

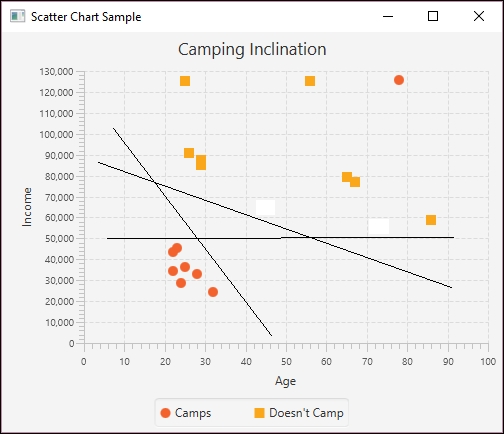

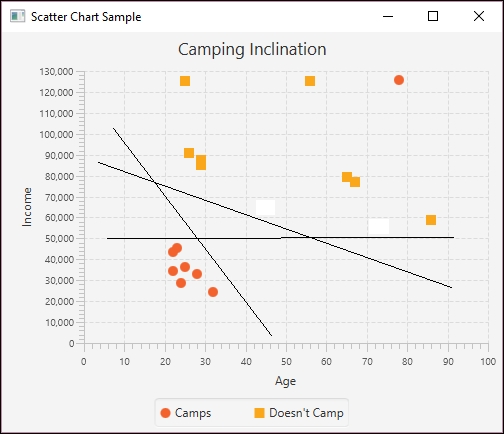

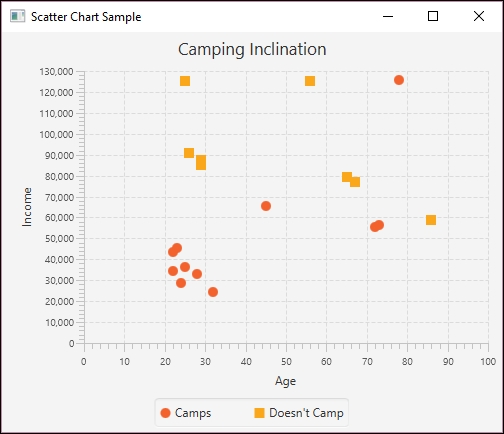

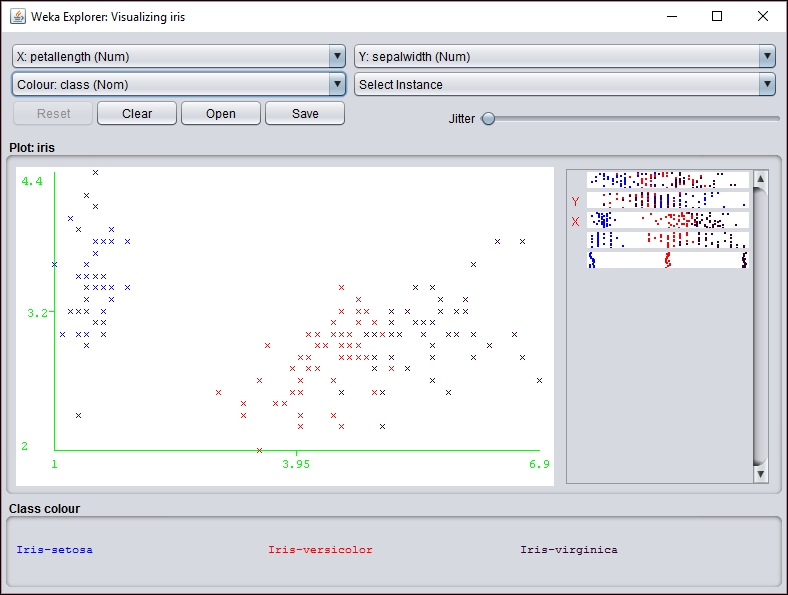

A Support Vector Machine (SVM) is used primarily for classification type problems. The approach creates a hyperplane to categorize data, which can be envisioned as a geometric plane that separates two regions. In a two-dimensional space, it will be a line. In a three-dimensional space, it will be a two-dimensional plane. In Chapter 6, Machine Learning, we will demonstrate how to use the approach using a set of data relating to the propensity of individuals to camp. We will use the Weka class, SMO, to demonstrate this type of analysis.
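Once a hyperplane is known, classifying a point amounts to checking which side of it the point falls on. The following sketch shows only that final step in two dimensions; it does not show how an SVM finds the hyperplane during training, which is what Weka's SMO class handles. The class name, weights, and points are hypothetical values chosen for illustration.

```java
// Hypothetical illustration class; not part of Weka.
final class HyperplaneSketch {

    // Returns +1 or -1 depending on which side of the hyperplane
    // w . x + b = 0 the point x lies on.
    static int classify(double[] w, double b, double[] x) {
        double score = b;
        for (int i = 0; i < w.length; i++) {
            score += w[i] * x[i];
        }
        return score >= 0 ? 1 : -1;
    }

    public static void main(String[] args) {
        // In two dimensions the hyperplane is a line; these made-up
        // weights describe the line x1 = x2.
        double[] w = {1.0, -1.0};
        double b = 0.0;
        System.out.println(classify(w, b, new double[] {3.0, 1.0})); // prints 1
        System.out.println(classify(w, b, new double[] {1.0, 3.0})); // prints -1
    }
}
```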

The following figure depicts a hyperplane using a distribution of two types of data points. The lines represent possible hyperplanes that separate these points. The lines clearly separate the data points except for one outlier.

Once the model has been trained, the possible hyperplanes are considered and predictions can then be made using similar data.

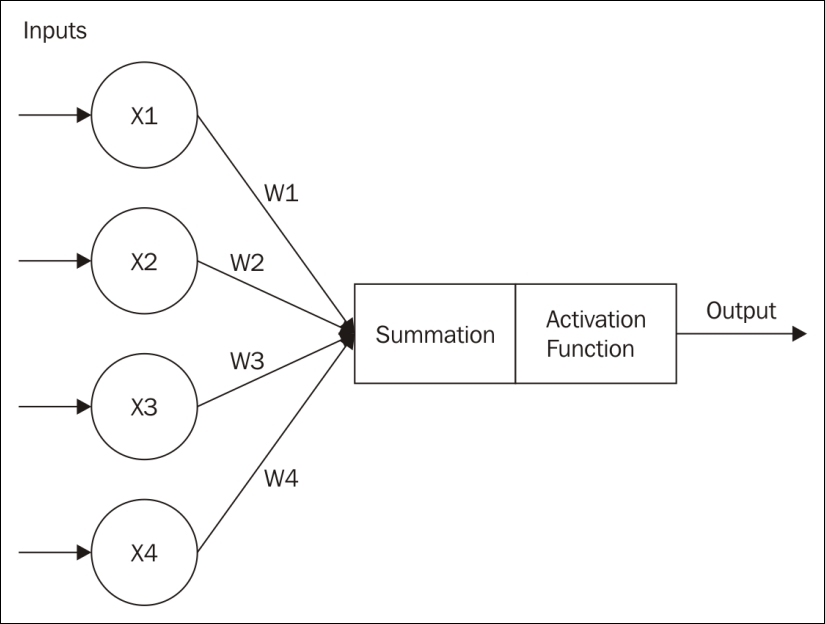

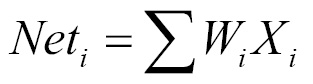

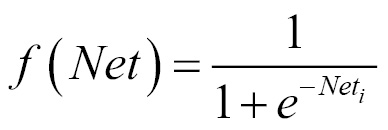

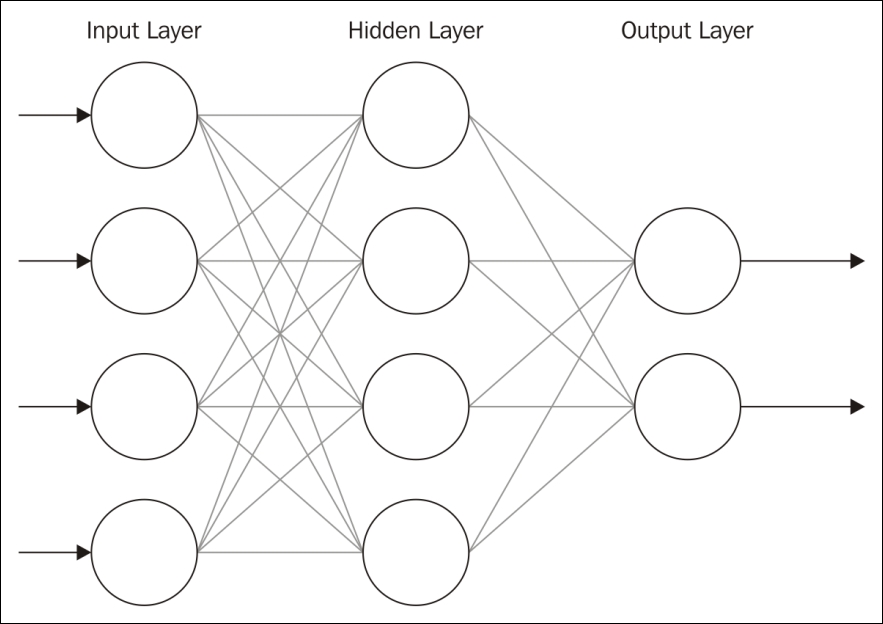

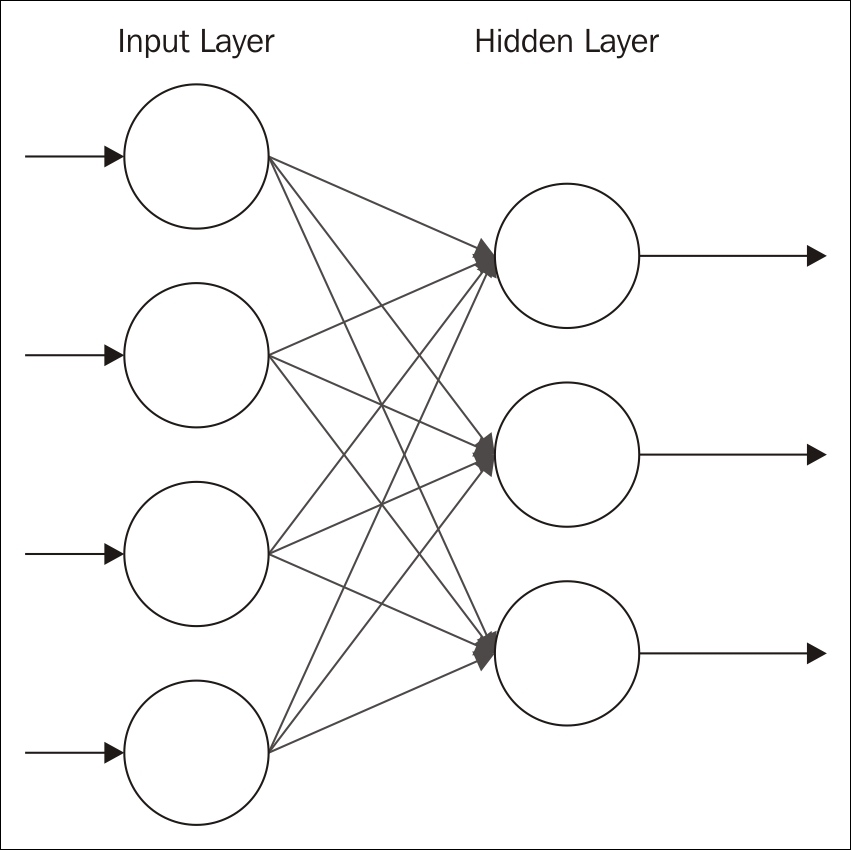

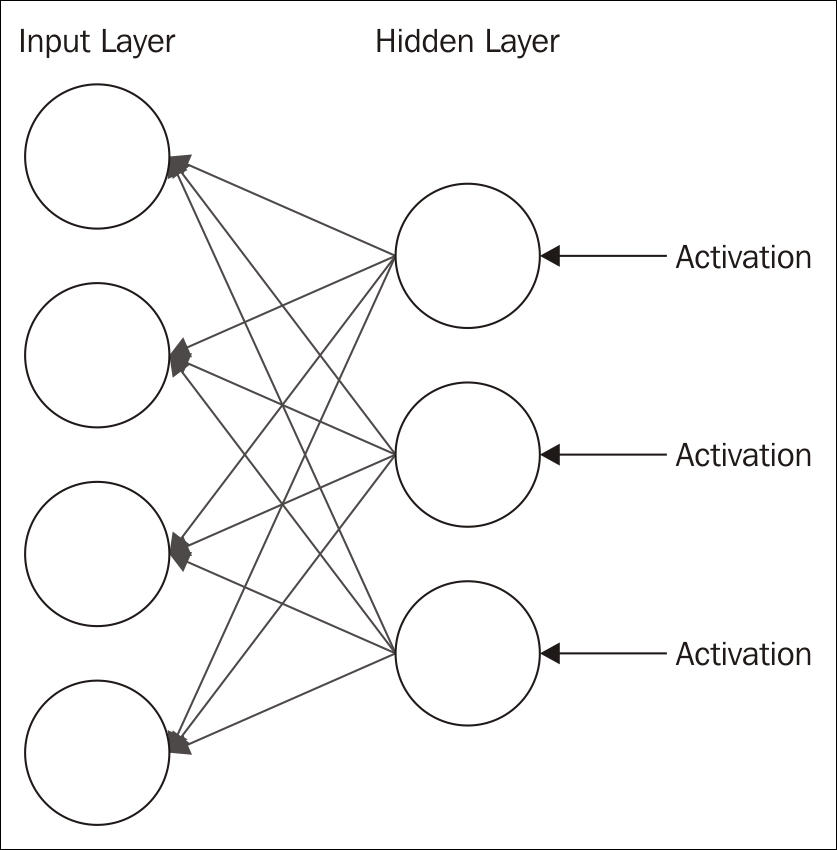

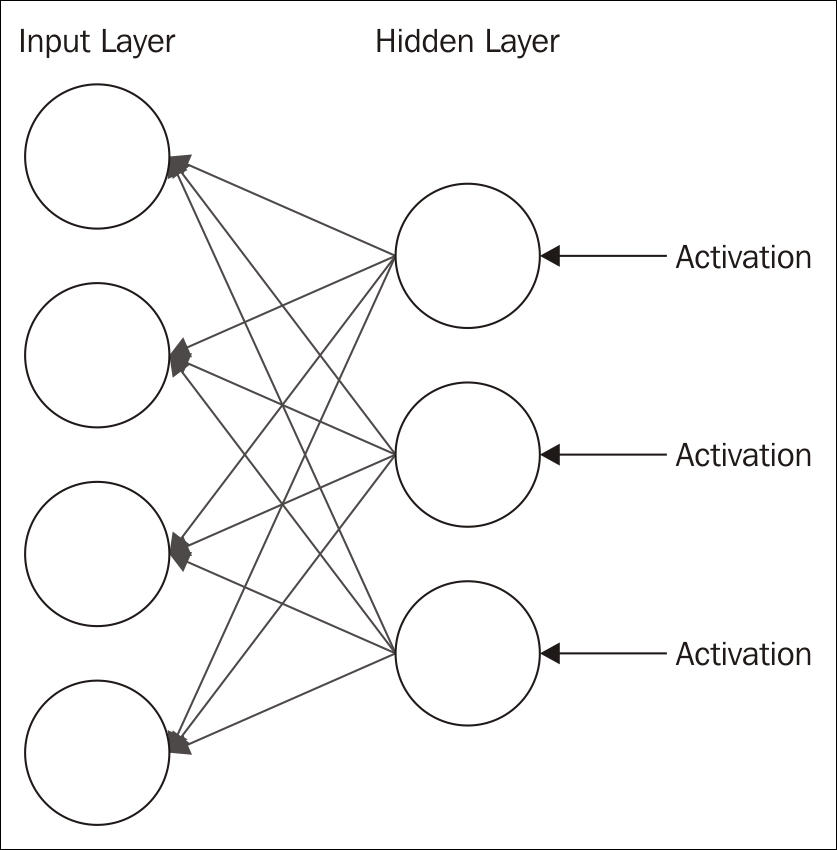

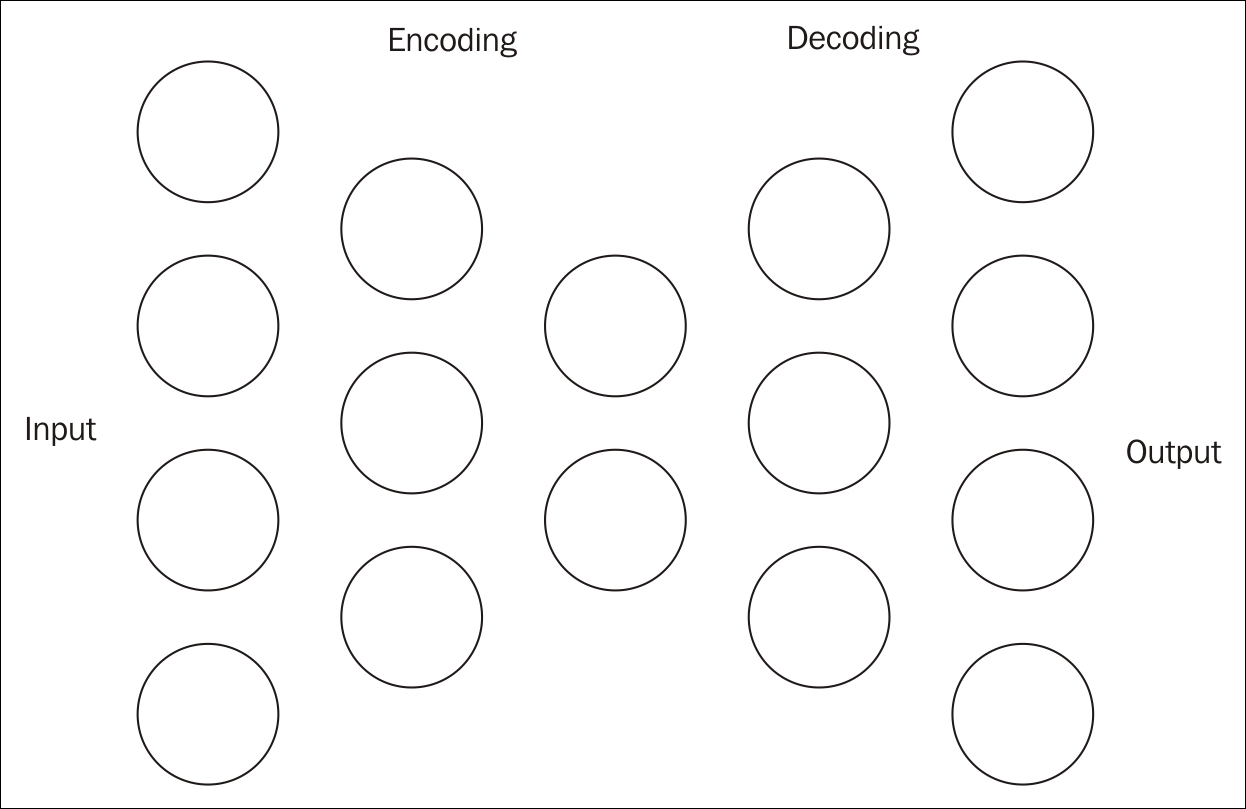

An Artificial Neural Network (ANN), which we will call a neural network, is based on the neuron found in the brain. A neuron is a cell that has dendrites connecting it to input sources and other neurons. Depending on its inputs and the weight allocated to each input source, the neuron is activated and then fires a signal along its axon to another neuron. A collection of neurons can be trained to respond to a set of input signals.

An artificial neuron is a node that has one or more inputs and a single output. Each input has a weight assigned to it that can change over time. A neural network can learn by feeding an input into a network, invoking an activation function, and comparing the results. This function combines the inputs and creates an output. If outputs of multiple neurons match the expected result, then the network has been trained correctly. If they don't match, then the network is modified.
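A single artificial neuron of this kind can be sketched in a few lines. The example below assumes a sigmoid activation function and a bias term, neither of which is specified in the text above; the class name, weights, and inputs are likewise made up for illustration.

```java
// Hypothetical illustration class; not part of any neural network library.
final class NeuronSketch {

    // Sigmoid activation: squashes any real number into the range (0, 1).
    static double sigmoid(double z) {
        return 1.0 / (1.0 + Math.exp(-z));
    }

    // Combines the weighted inputs (plus a bias) and applies the activation.
    static double output(double[] inputs, double[] weights, double bias) {
        double sum = bias;
        for (int i = 0; i < inputs.length; i++) {
            sum += inputs[i] * weights[i];
        }
        return sigmoid(sum);
    }

    public static void main(String[] args) {
        double[] inputs = {1.0, 0.0};
        double[] weights = {0.5, -0.5};
        double bias = -0.5;
        // The weighted sum is 0.5 - 0.5 = 0, and sigmoid(0) is 0.5.
        System.out.println(output(inputs, weights, bias));
    }
}
```

Training a network consists of adjusting the weights and bias of many such neurons until the outputs match the expected results closely enough.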

A neural network can be visualized as shown in the following figure, where a hidden layer is used to augment the process:

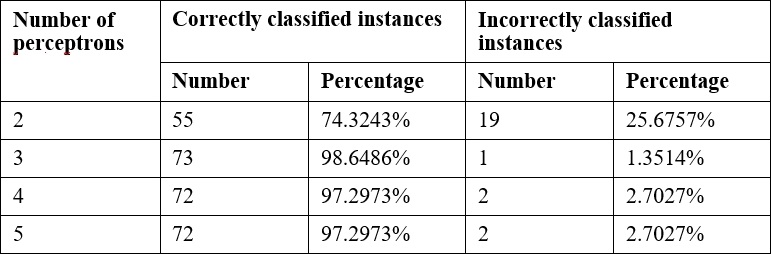

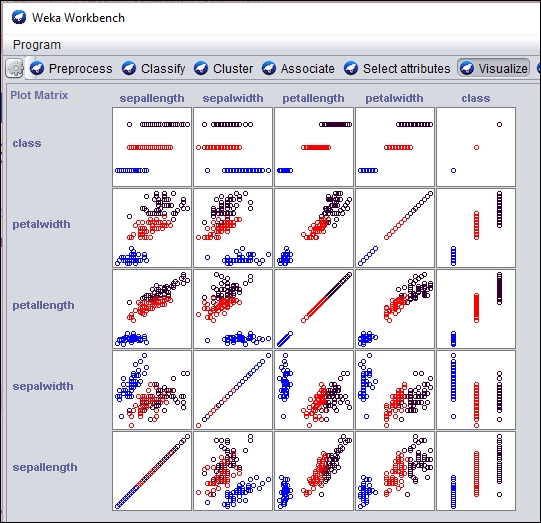

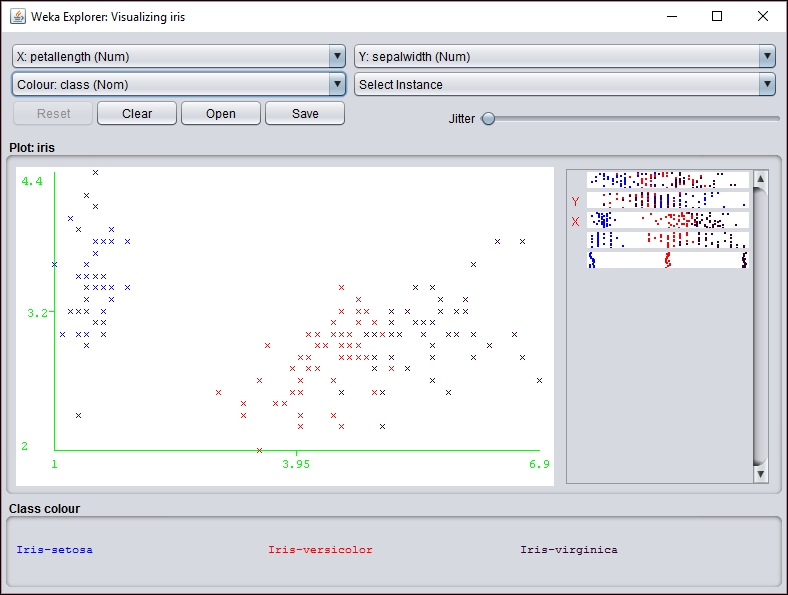

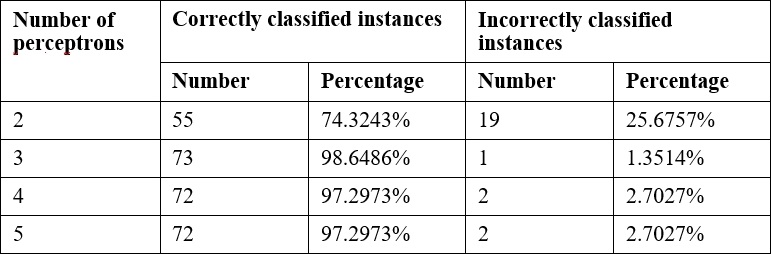

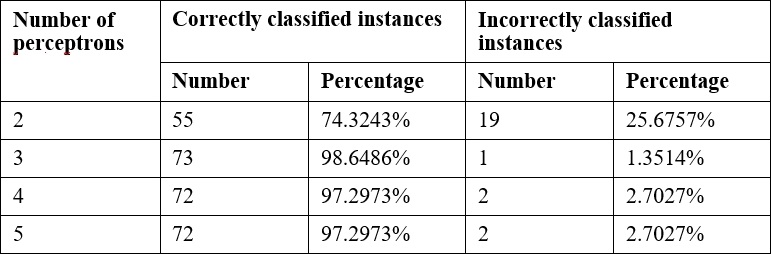

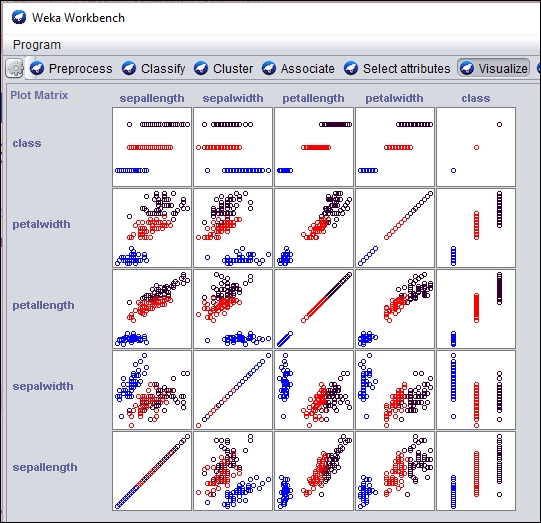

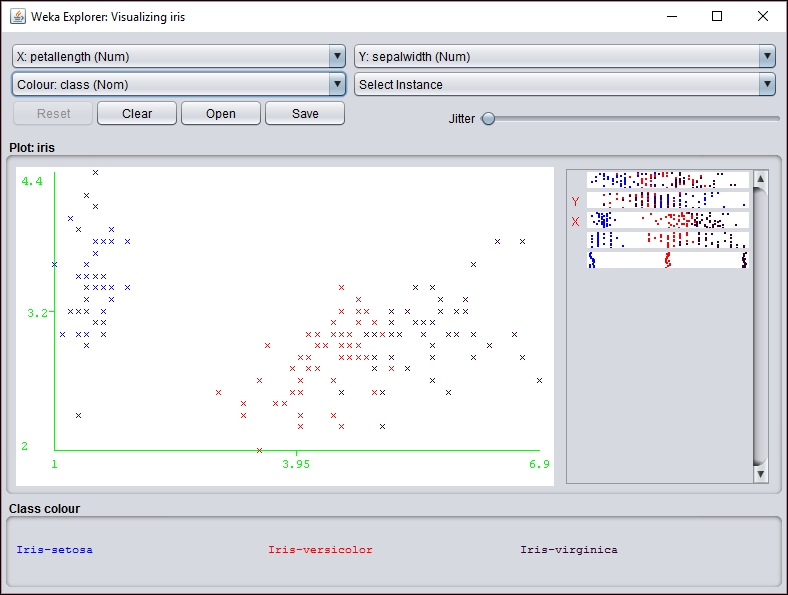

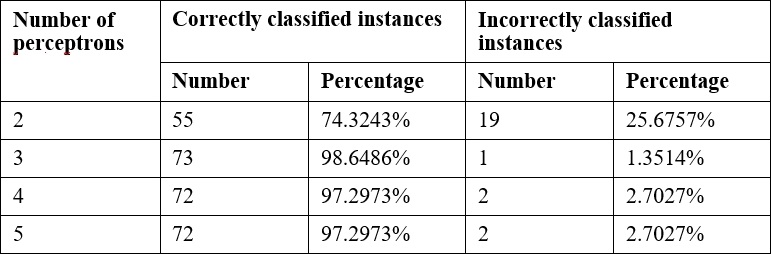

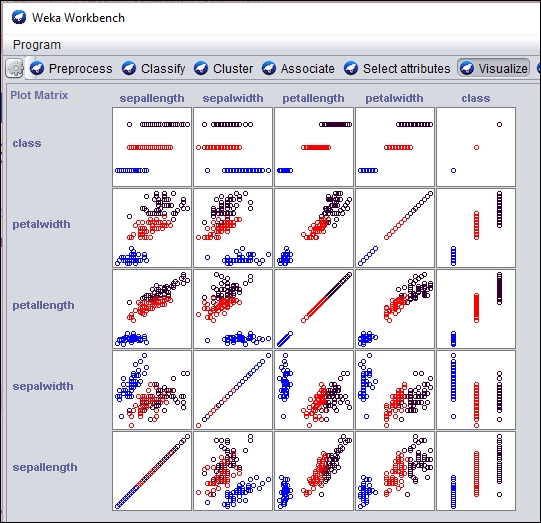

In Chapter 7, Neural Networks, we will use the Weka class, MultilayerPerceptron, to illustrate the creation and use of a Multi Layer Perceptron (MLP) network. As we will explain, this type of network is a feedforward neural network with multiple layers. The network uses supervised learning with backpropagation. The example uses a dataset called dermatology.arff that contains 366 instances that are used to diagnose erythemato-squamous diseases. It uses 34 attributes to classify the disease into one of the five different categories.

The dataset is split into a training set and a testing set. Once the data has been read, the MLP instance is created and initialized using methods that configure the attributes of the model, including how quickly the model is to learn and the amount of time spent training the model.

String trainingFileName = "dermatologyTrainingSet.arff";

String testingFileName = "dermatologyTestingSet.arff";

try (FileReader trainingReader = new FileReader(trainingFileName);

FileReader testingReader =

new FileReader(testingFileName)) {

Instances trainingInstances = new Instances(trainingReader);

trainingInstances.setClassIndex(

trainingInstances.numAttributes() - 1);

Instances testingInstances = new Instances(testingReader);

testingInstances.setClassIndex(

testingInstances.numAttributes() - 1);

MultilayerPerceptron mlp = new MultilayerPerceptron();

mlp.setLearningRate(0.1);

mlp.setMomentum(0.2);

mlp.setTrainingTime(2000);

mlp.setHiddenLayers("3");

mlp.buildClassifier(trainingInstances);

...

} catch (Exception ex) {

// Handle exceptions

}

The model is then evaluated using the testing data:

Evaluation evaluation = new Evaluation(trainingInstances);

evaluation.evaluateModel(mlp, testingInstances);

The results can then be displayed:

System.out.println(evaluation.toSummaryString());

The truncated output of this example is shown here where the number of correctly and incorrectly identified diseases are listed:

Correctly Classified Instances 73 98.6486 %

Incorrectly Classified Instances 1 1.3514 %

The various attributes of the model can be tweaked to improve the model. In Chapter 7, Neural Networks, we will discuss this and other techniques in more depth.

Deep learning networks are often described as neural networks that use multiple intermediate layers. Each layer will train on the outputs of a previous layer potentially identifying features and subfeatures of a dataset. The features refer to those aspects of the data that may be of interest. In Chapter 8, Deep Learning, we will examine these types of networks and how they can support several different data science tasks.

These networks often work with unstructured and unlabeled datasets, which make up the vast majority of the data available today. A typical approach is to take the data, identify features, and then use these features and their corresponding layers to reconstruct the original dataset, thus validating the network. The Restricted Boltzmann Machine (RBM) is a good example of the application of this approach.

The deep learning network needs to ensure that the results are accurate and to minimize any error that can creep into the process. This is accomplished by adjusting the internal weights assigned to neurons using what is known as gradient descent. The gradient represents the slope of the error with respect to the weights. The approach modifies the weights in the direction that reduces the error, which also speeds up the learning process.
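The core of gradient descent can be illustrated with a single weight. In the sketch below, a model predicts w * x, the error is the squared difference from a target value, and each step moves w against the slope of that error. The class name, learning rate, starting weight, and data values are assumptions chosen for illustration; real networks apply the same idea to many weights at once.

```java
// Hypothetical illustration class; not part of any deep learning library.
final class GradientDescentSketch {

    // Minimizes the squared error (w * x - target)^2 for a single weight w.
    static double train(double x, double target, double learningRate, int steps) {
        double w = 0.0; // arbitrary starting weight
        for (int i = 0; i < steps; i++) {
            double error = w * x - target;
            // Derivative of (w * x - target)^2 with respect to w
            double gradient = 2.0 * error * x;
            // Step against the slope to reduce the error
            w -= learningRate * gradient;
        }
        return w;
    }

    public static void main(String[] args) {
        // With x = 2 and target = 6, the error is zero when w = 3,
        // so the printed weight should be very close to 3.
        System.out.println(train(2.0, 6.0, 0.1, 50));
    }
}
```

The learning rate controls the step size: too small and learning is slow, too large and the weight can overshoot the minimum and fail to settle.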

There are several types of networks that have been classified as deep learning networks. One of these is the autoencoder network. In this network, the layers are symmetrical: the number of input values is the same as the number of output values, and the intermediate layers effectively compress the data to a single smaller internal layer. Each layer of the autoencoder is an RBM.

This structure is reflected in the following example, which will extract the numbers found in a set of images containing handwritten numbers. The details of the complete example are not shown here, but notice that 1,000 input and output values are used along with internal layers consisting of RBMs. The sizes of the layers are specified in the nOut and nIn methods.

MultiLayerConfiguration conf = new NeuralNetConfiguration.Builder()

.seed(seed)

.iterations(numberOfIterations)

.optimizationAlgo(

OptimizationAlgorithm.LINE_GRADIENT_DESCENT)

.list()

.layer(0, new RBM.Builder()

.nIn(numberOfRows * numberOfColumns).nOut(1000)

.lossFunction(LossFunctions.LossFunction.RMSE_XENT)

.build())

.layer(1, new RBM.Builder().nIn(1000).nOut(500)

.lossFunction(LossFunctions.LossFunction.RMSE_XENT)

.build())

.layer(2, new RBM.Builder().nIn(500).nOut(250)

.lossFunction(LossFunctions.LossFunction.RMSE_XENT)

.build())

.layer(3, new RBM.Builder().nIn(250).nOut(100)

.lossFunction(LossFunctions.LossFunction.RMSE_XENT)

.build())

.layer(4, new RBM.Builder().nIn(100).nOut(30)

.lossFunction(LossFunctions.LossFunction.RMSE_XENT)

.build()) //encoding stops

.layer(5, new RBM.Builder().nIn(30).nOut(100)

.lossFunction(LossFunctions.LossFunction.RMSE_XENT)

.build()) //decoding starts

.layer(6, new RBM.Builder().nIn(100).nOut(250)

.lossFunction(LossFunctions.LossFunction.RMSE_XENT)

.build())

.layer(7, new RBM.Builder().nIn(250).nOut(500)

.lossFunction(LossFunctions.LossFunction.RMSE_XENT)

.build())

.layer(8, new RBM.Builder().nIn(500).nOut(1000)

.lossFunction(LossFunctions.LossFunction.RMSE_XENT)

.build())

.layer(9, new OutputLayer.Builder(

LossFunctions.LossFunction.RMSE_XENT).nIn(1000)

.nOut(numberOfRows * numberOfColumns).build())

.pretrain(true).backprop(true)

.build();

Once the model has been trained, it can be used for predictive and searching tasks. With a search, the compressed middle layer can be used to match other compressed images that need to be classified.

The field of Natural Language Processing (NLP) is used for many different tasks including text searching, language translation, sentiment analysis, speech recognition, and classification to mention a few. Processing text is difficult due to a number of reasons, including the inherent ambiguity of natural languages.

There are several different types of processing that can be performed such as:

- Identifying stop words: These are words that are common and may not be necessary for processing

- Named Entity Recognition (NER): This is the process of identifying elements of text such as the names of people, locations, or things

- Parts of Speech (POS): This identifies the grammatical parts of a sentence such as noun, verb, adjective, and so on

- Relationships: Here we are concerned with identifying how parts of text are related to each other, such as the subject and object of a sentence

As with most data science problems, it is important to preprocess and clean text. In Chapter 9, Text Analysis, we examine the support Java provides for this area of data science.

For example, we will use Apache's OpenNLP (https://opennlp.apache.org/) library to find the parts of speech. This is just one of several NLP APIs that we could have used, including LingPipe (http://alias-i.com/lingpipe/), Apache UIMA (https://uima.apache.org/), and Stanford NLP (http://nlp.stanford.edu/). We chose OpenNLP because it is easy to use for this example.

In the following example, a model used to identify POS elements is found in the en-pos-maxent.bin file. An array of words is initialized and the POS model is created:

try (InputStream input = new FileInputStream(

new File("en-pos-maxent.bin"));) {

String sentence = "Let's parse this sentence.";

...

String[] words;

...

list.toArray(words);

POSModel posModel = new POSModel(input);

...

} catch (IOException ex) {

// Handle exceptions

}

The tag method is passed an array of words and returns an array of tags. The words and tags are then displayed.

String[] posTags = posTagger.tag(words);

for(int i=0; i<posTags.length; i++) {

out.println(words[i] + " - " + posTags[i]);

}

The output for this example is as follows:

Let's - NNP

parse - NN

this - DT

sentence. - NN

The abbreviations NNP and DT stand for a singular proper noun and determiner respectively. We examine several other NLP techniques in Chapter 9, Text Analysis.

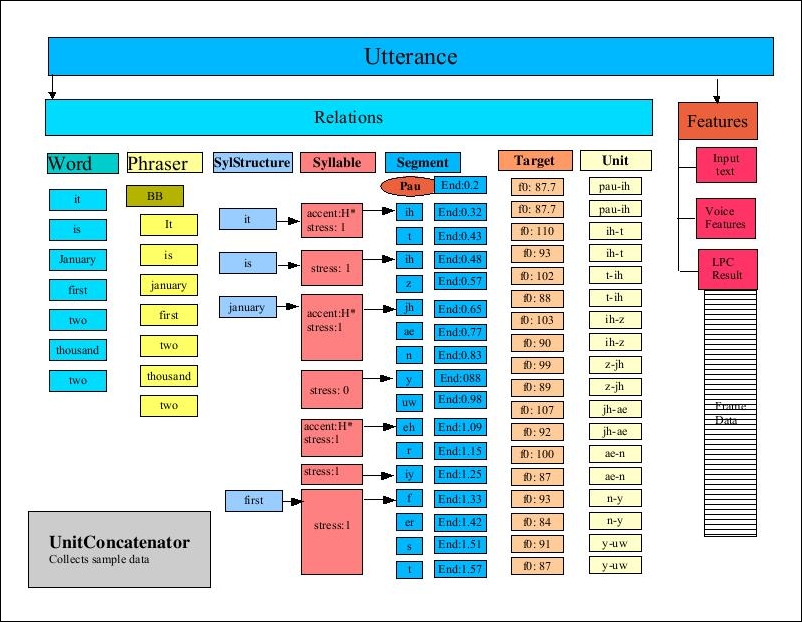

In Chapter 10, Visual and Audio Analysis, we demonstrate several Java techniques for processing sounds and images. We begin by demonstrating techniques for sound processing, including speech recognition and text-to-speech APIs. Specifically, we will use the FreeTTS (http://freetts.sourceforge.net/docs/index.php) API to convert text to speech. We also include a demonstration of the CMU Sphinx toolkit for speech recognition.

The Java Speech API (JSAPI) (http://www.oracle.com/technetwork/java/index-140170.html) supports speech technology. This API, implemented by third-party vendors, supports speech recognition and speech synthesizers. FreeTTS and Festival (http://www.cstr.ed.ac.uk/projects/festival/) are examples of vendors supporting JSAPI.

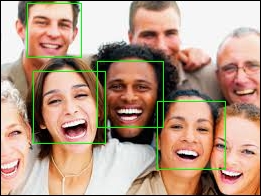

In the second part of the chapter, we examine image processing techniques such as facial recognition. This demonstration involves identifying faces within an image and is easy to accomplish using OpenCV (http://opencv.org/).

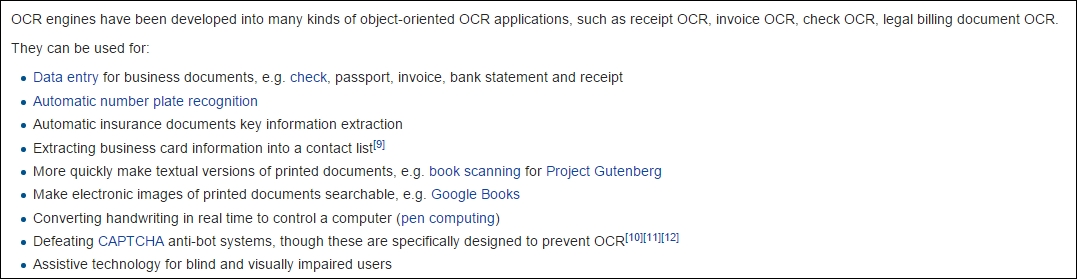

Also, in Chapter 10, Visual and Audio Analysis, we demonstrate how to extract text from images, a process known as Optical Character Recognition (OCR). A common data science problem involves extracting and analyzing text embedded in an image. For example, the information contained in license plates, road signs, and directions can be significant.

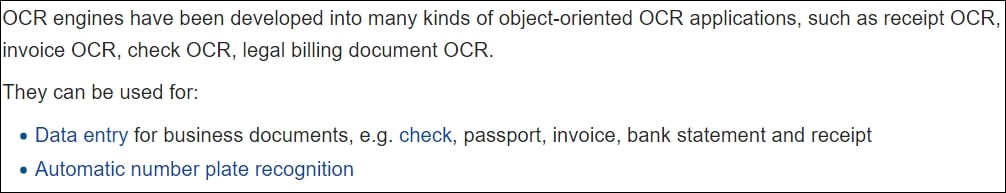

The following example, explained in more detail in Chapter 10, Visual and Audio Analysis, performs OCR using Tess4j (http://tess4j.sourceforge.net/), a Java JNA wrapper for the Tesseract OCR API. We perform OCR on an image captured from the Wikipedia article on OCR (https://en.wikipedia.org/wiki/Optical_character_recognition#Applications), shown here:

The ITesseract interface provides numerous OCR methods. The doOCR method takes a file and returns a string containing the words found in the file as shown here:

ITesseract instance = new Tesseract();

try {

String result = instance.doOCR(new File("OCRExample.png"));

System.out.println(result);

} catch (TesseractException e) {

System.err.println(e.getMessage());

}

A part of the output is shown next:

OCR engines nave been developed into many lunds oiobiectorlented OCR applicatlons, sucn as reoeipt OCR, involoe OCR, check OCR, legal billing document OCR They can be used ior - Data entry ior business documents, e g check, passport, involoe, bank statement and receipt - Automatic number plate recognnlon

As you can see, there are numerous errors in this example that need to be addressed. We build upon this example in Chapter 10, Visual and Audio Analysis, with a discussion of enhancements and considerations to ensure the OCR process is as effective as possible.

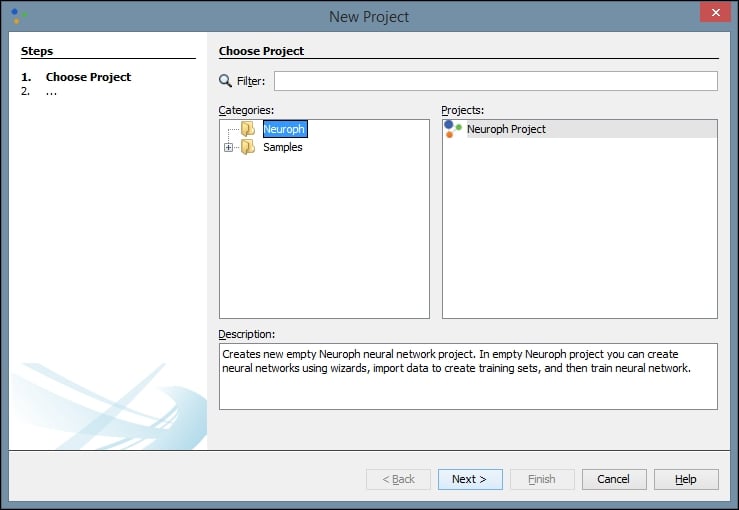

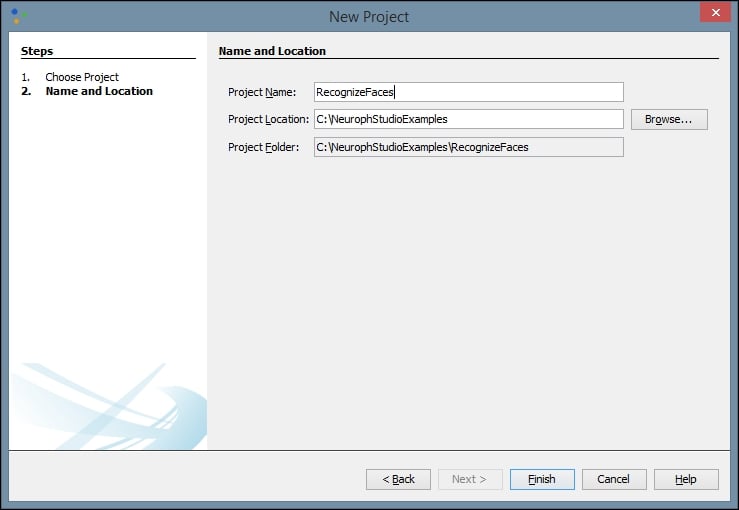

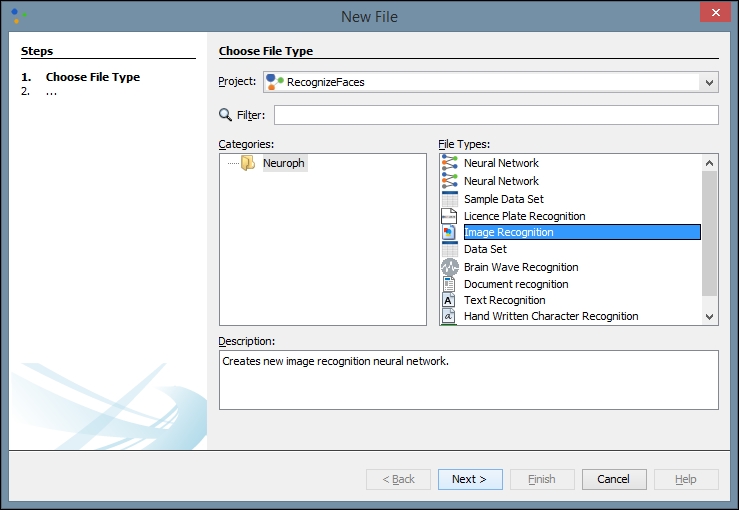

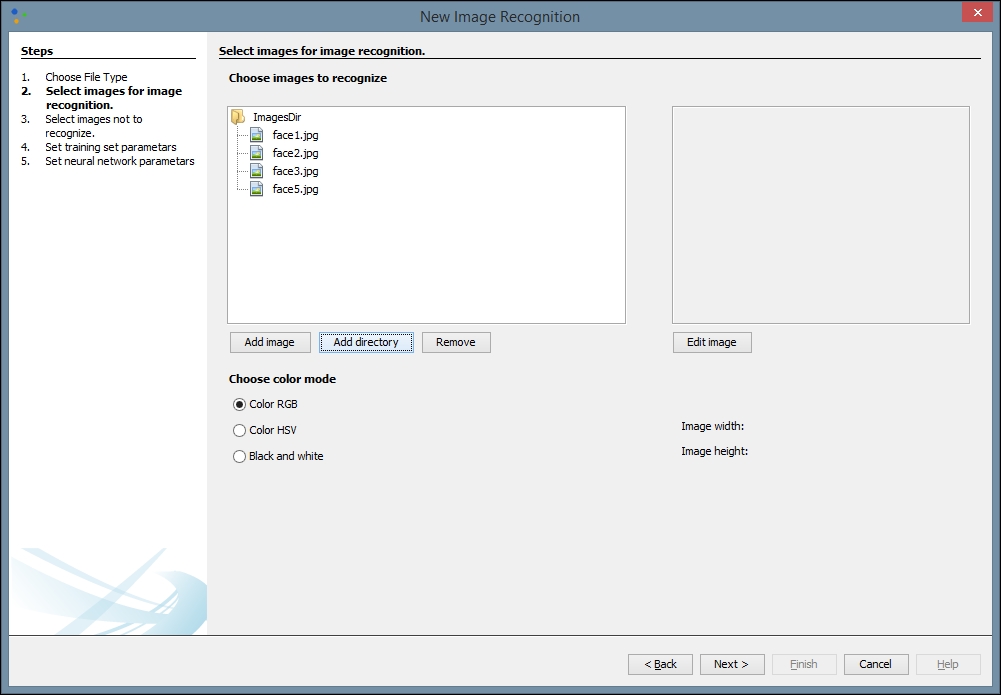

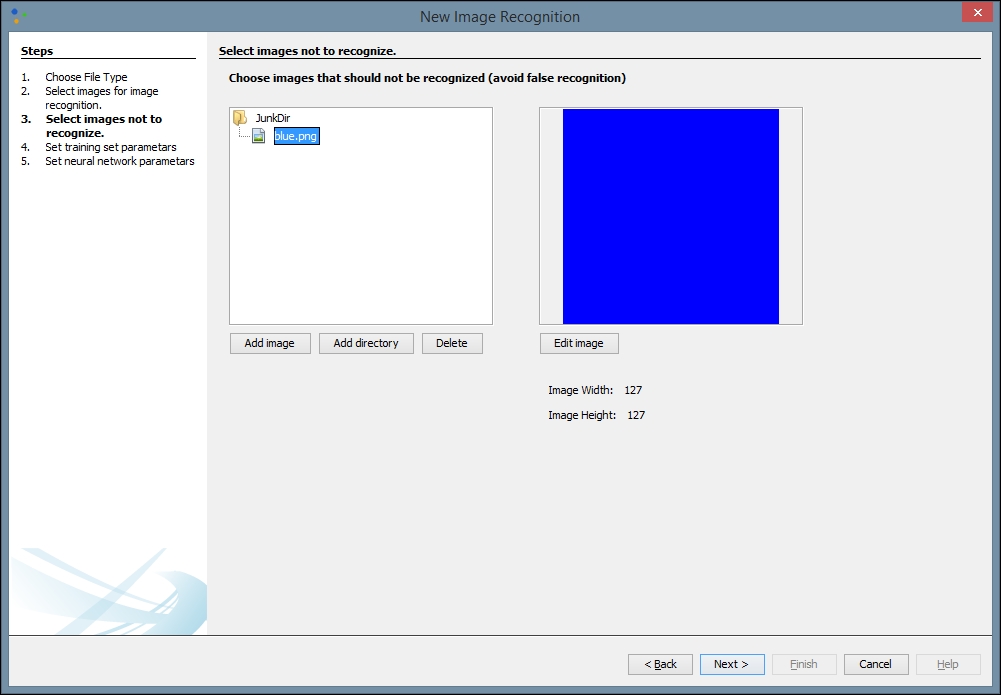

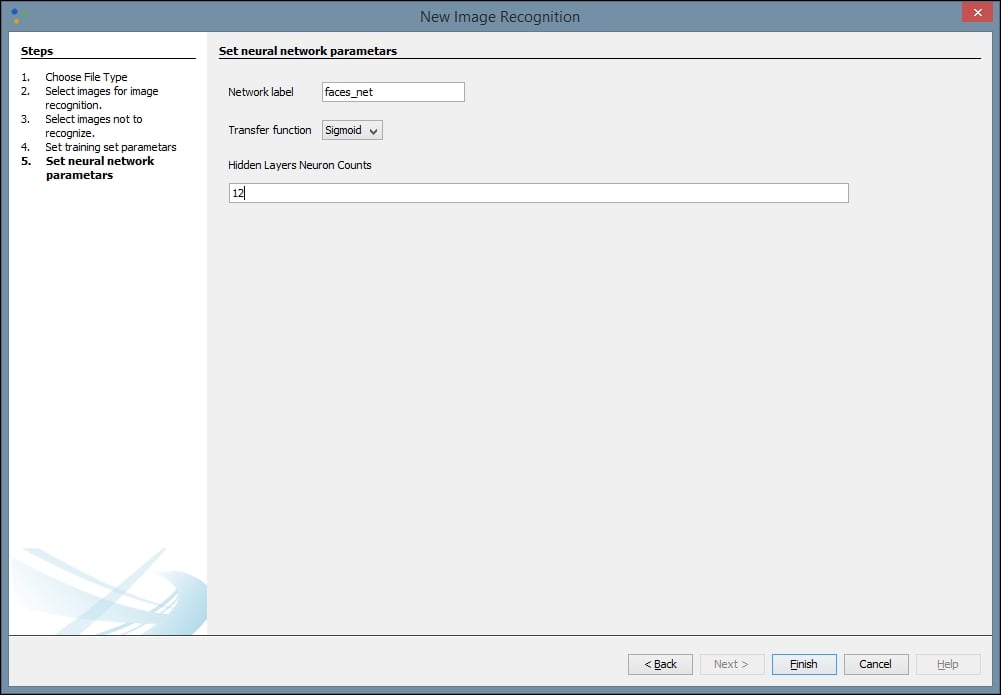

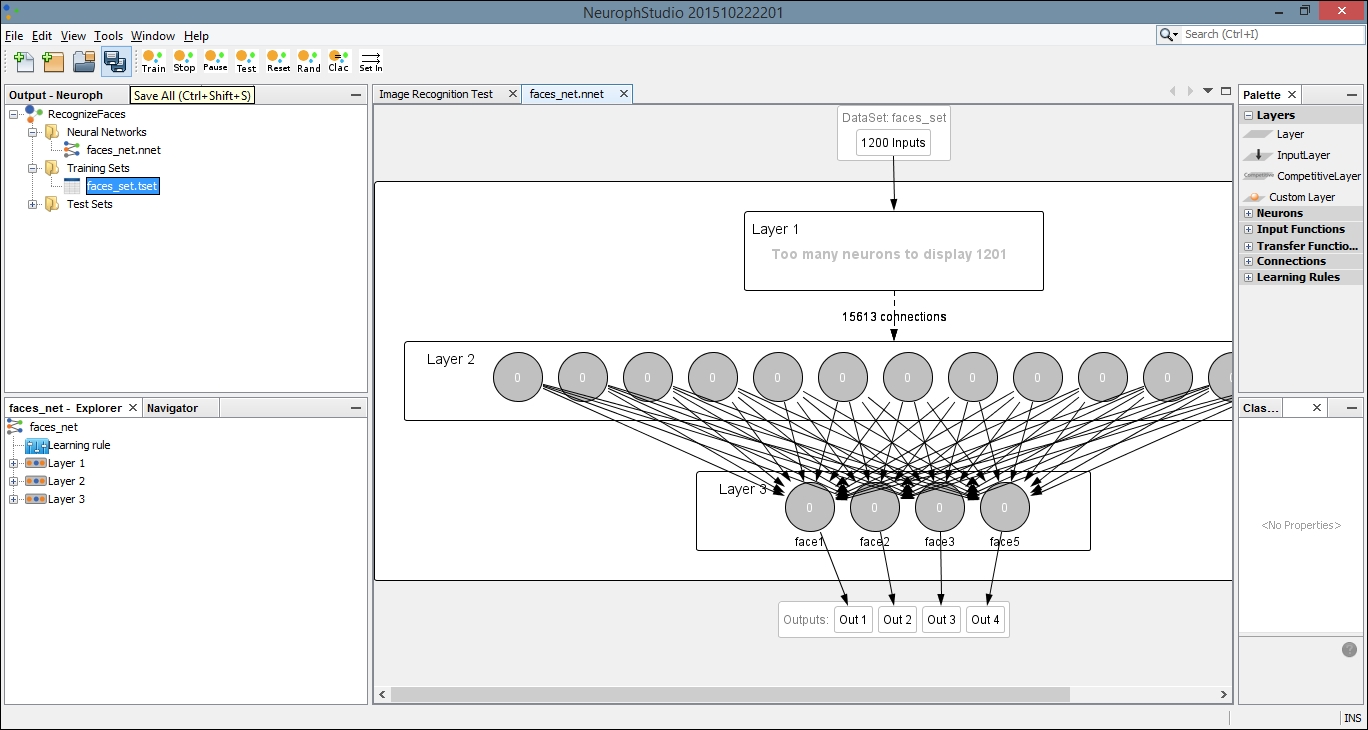

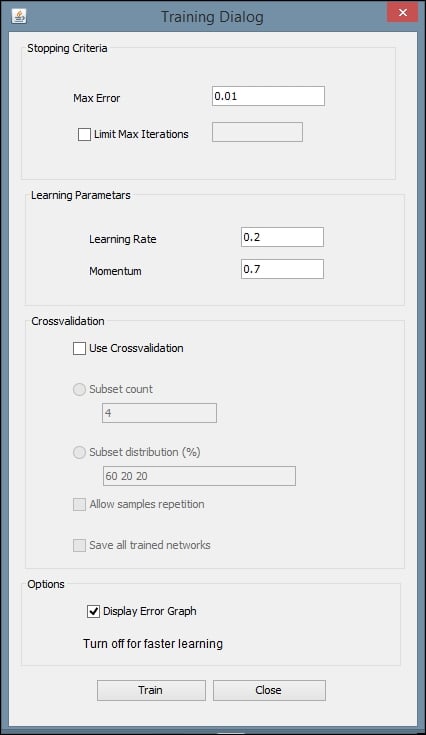

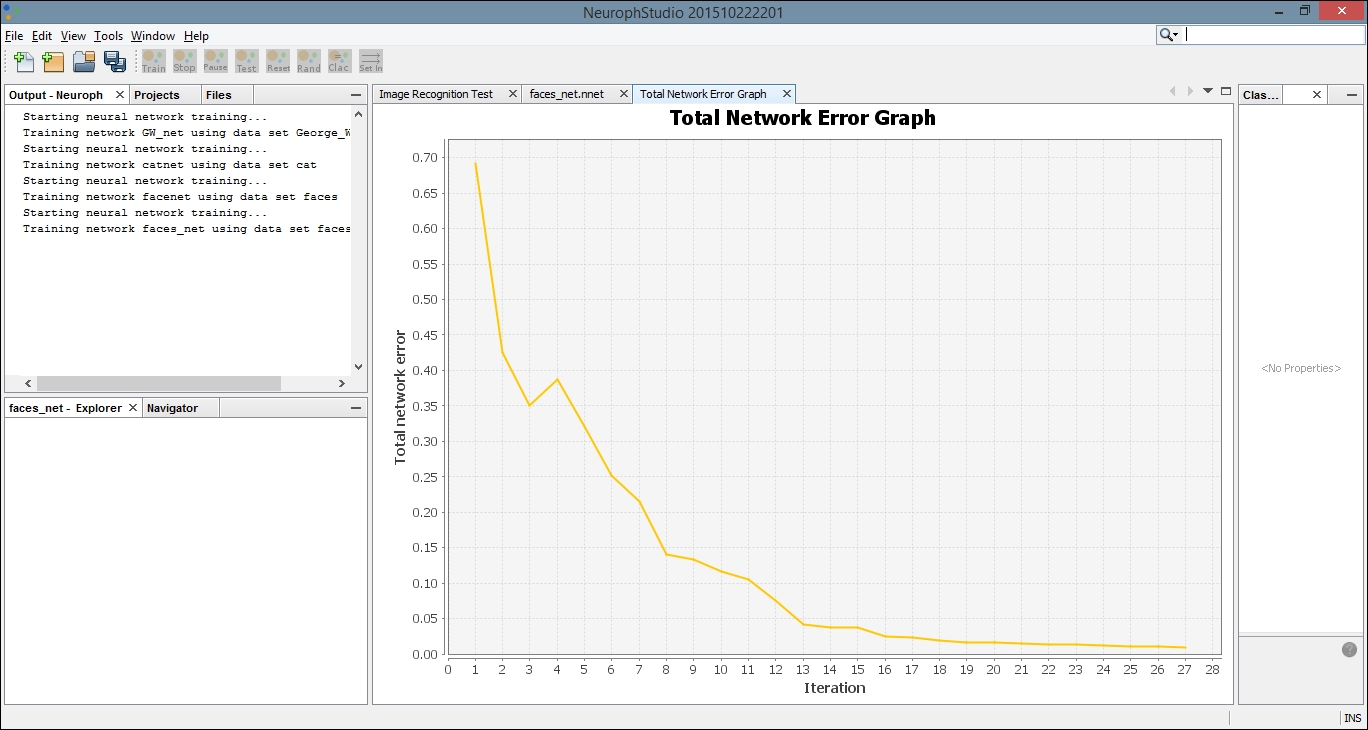

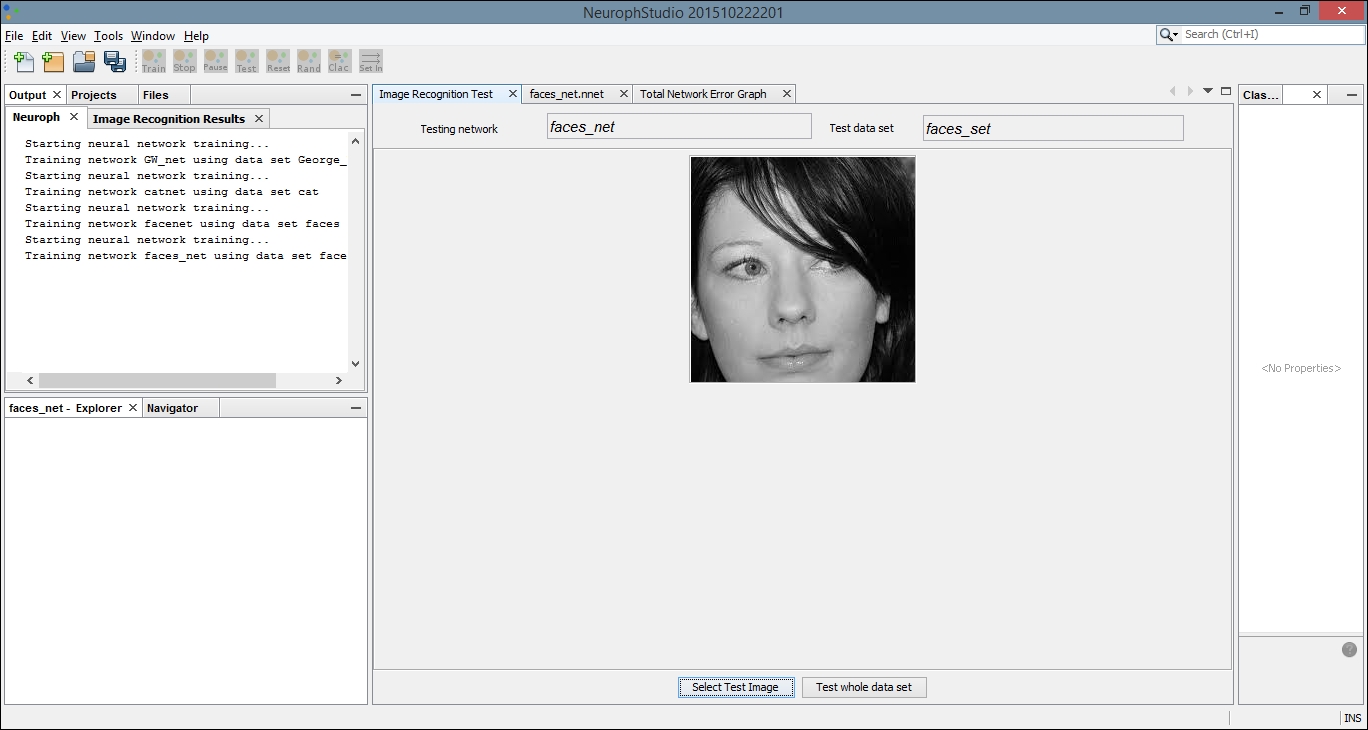

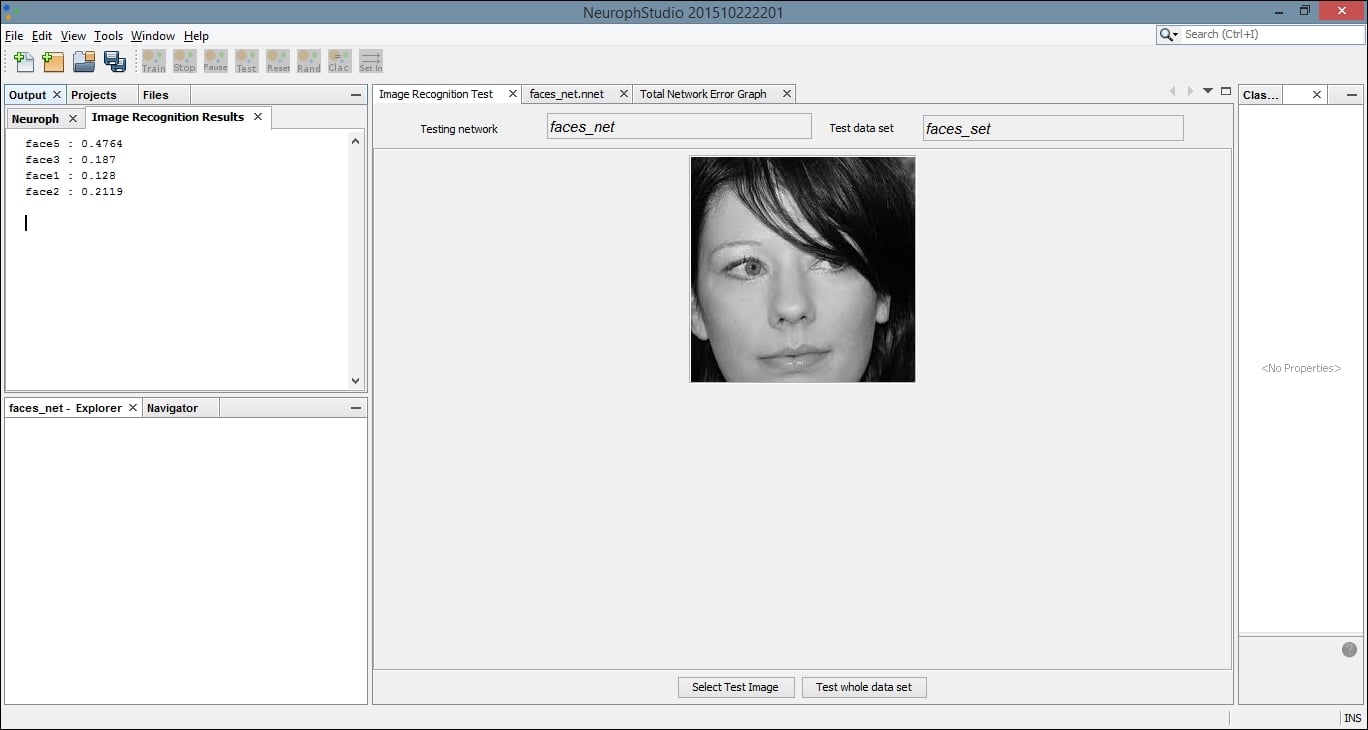

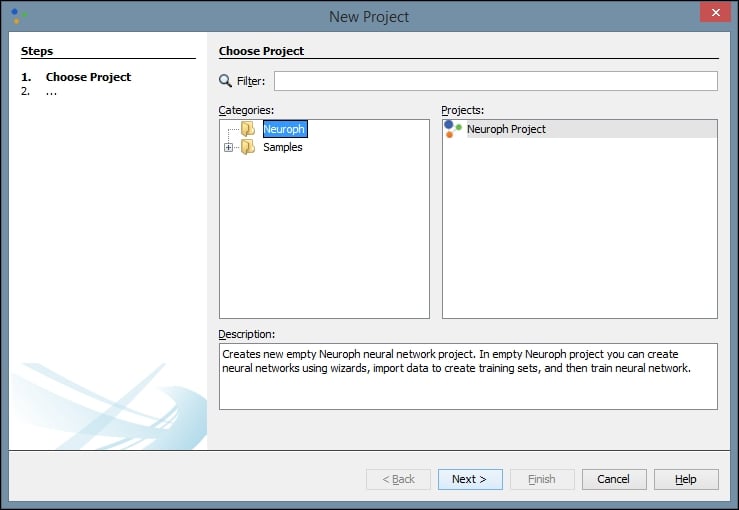

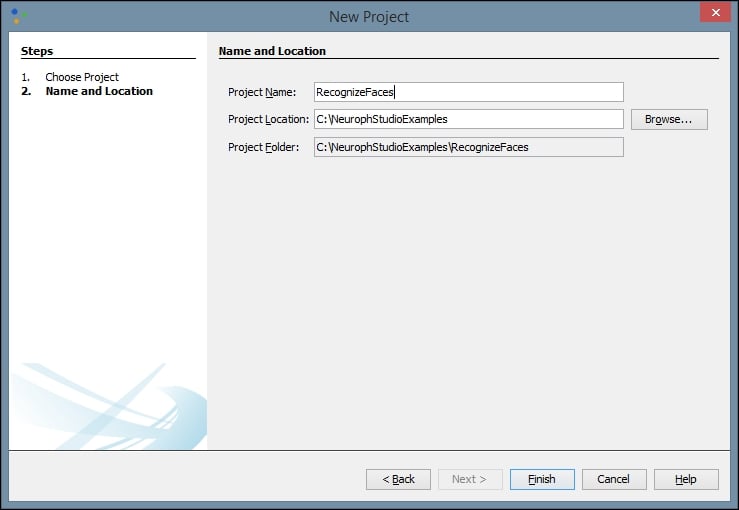

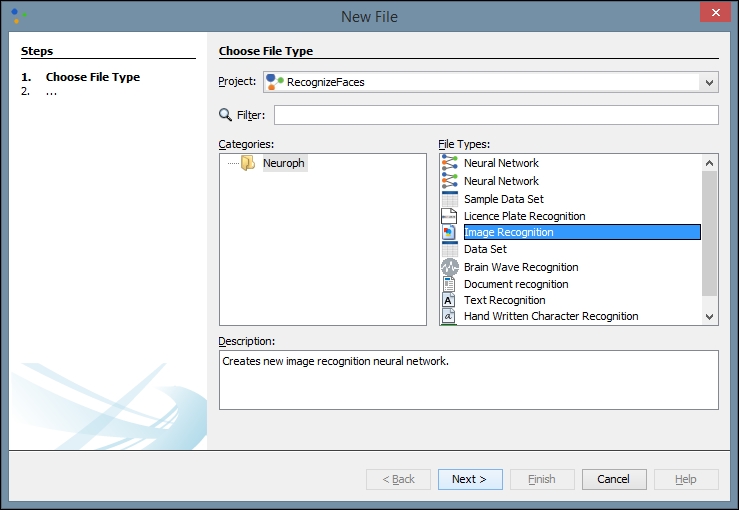

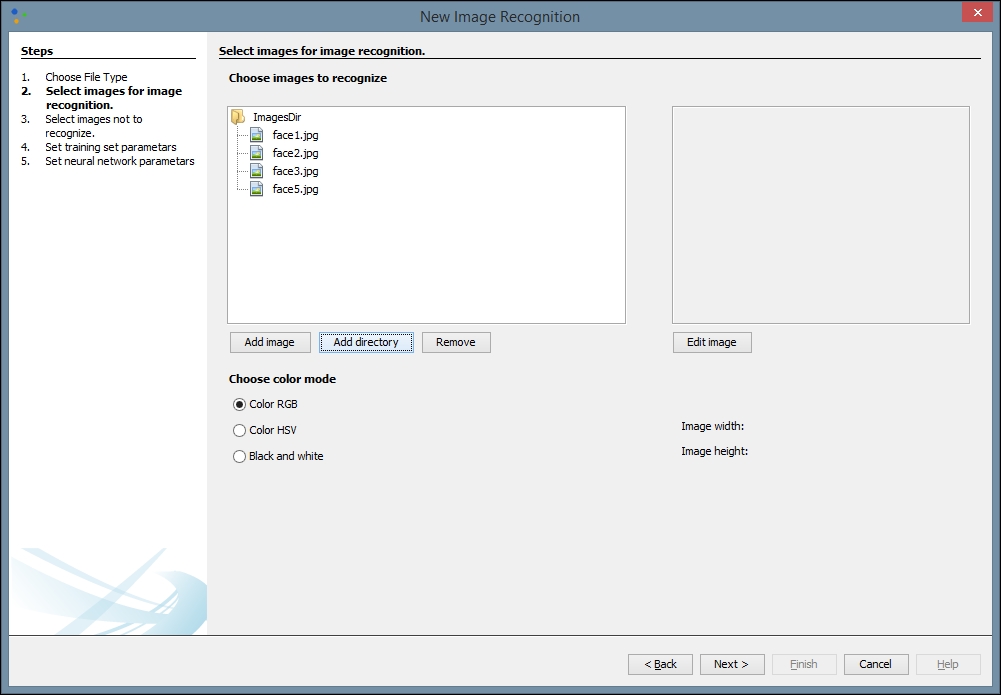

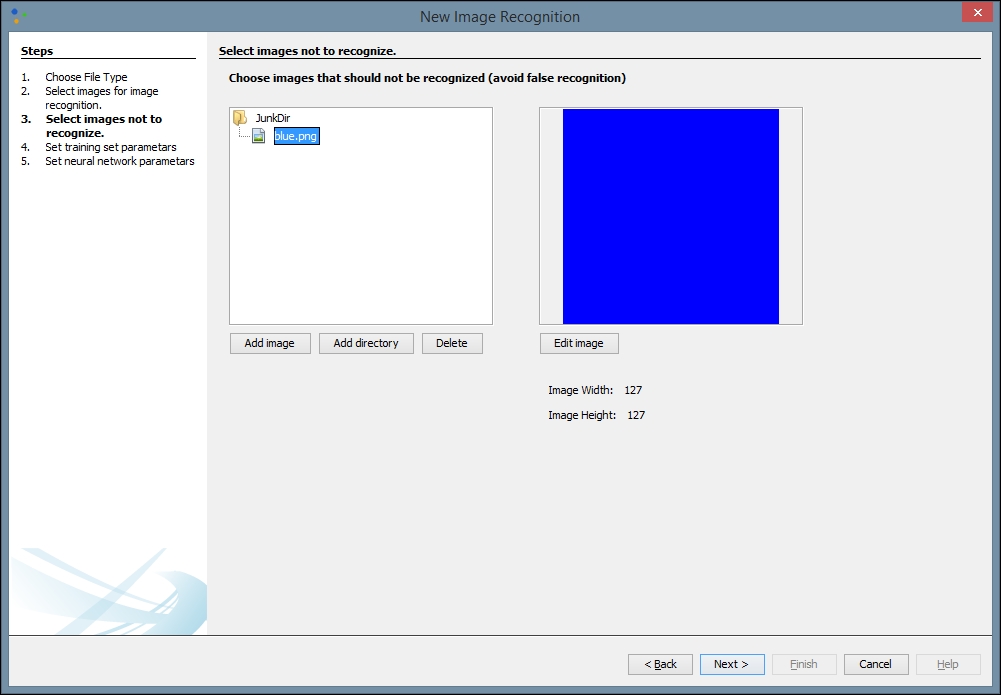

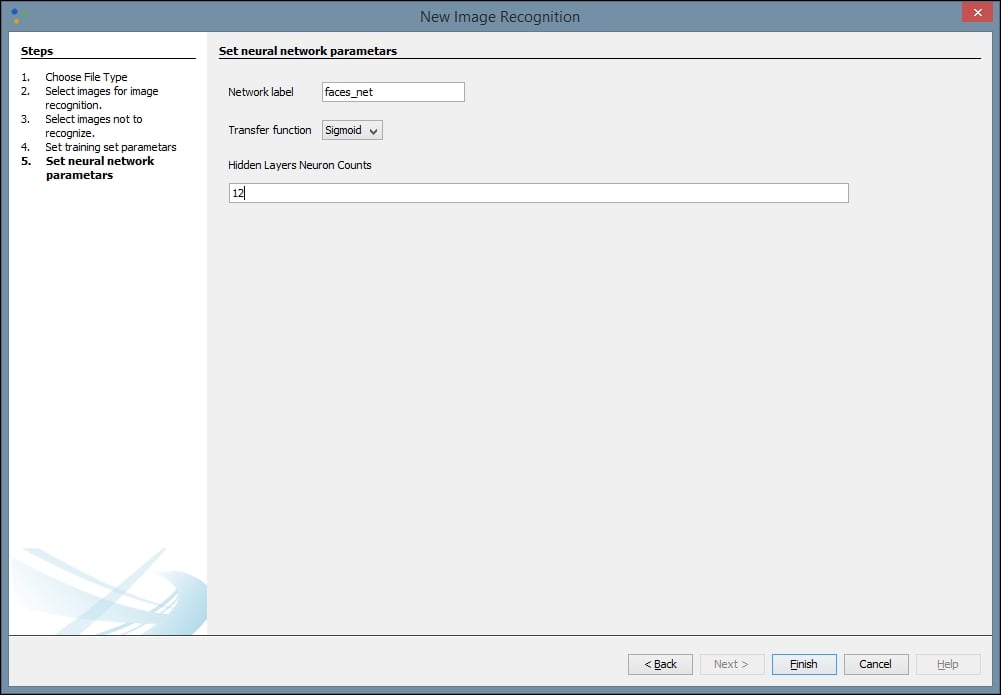

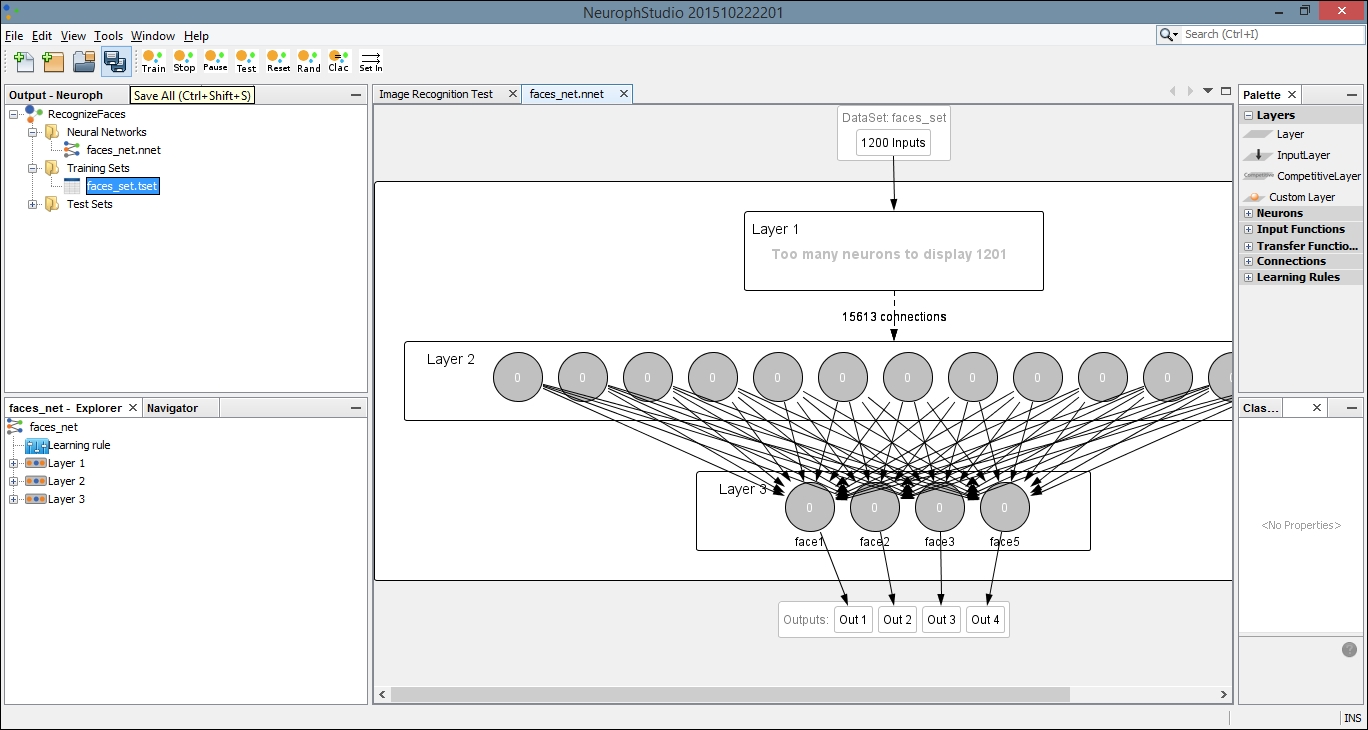

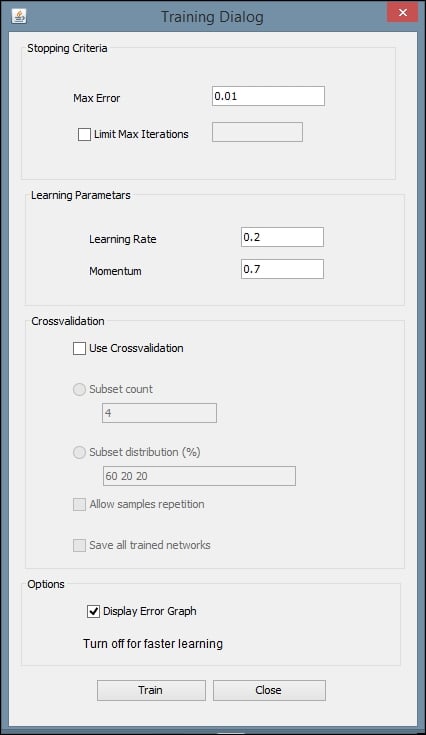

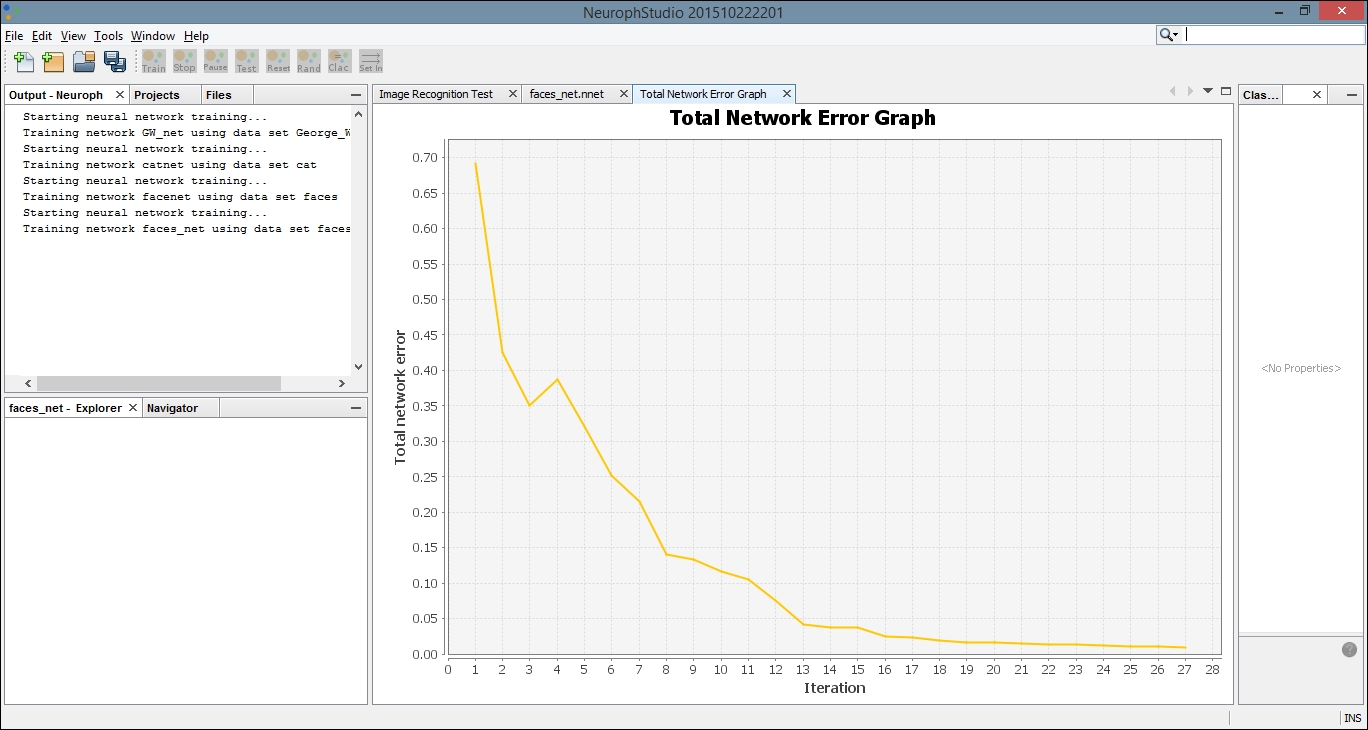

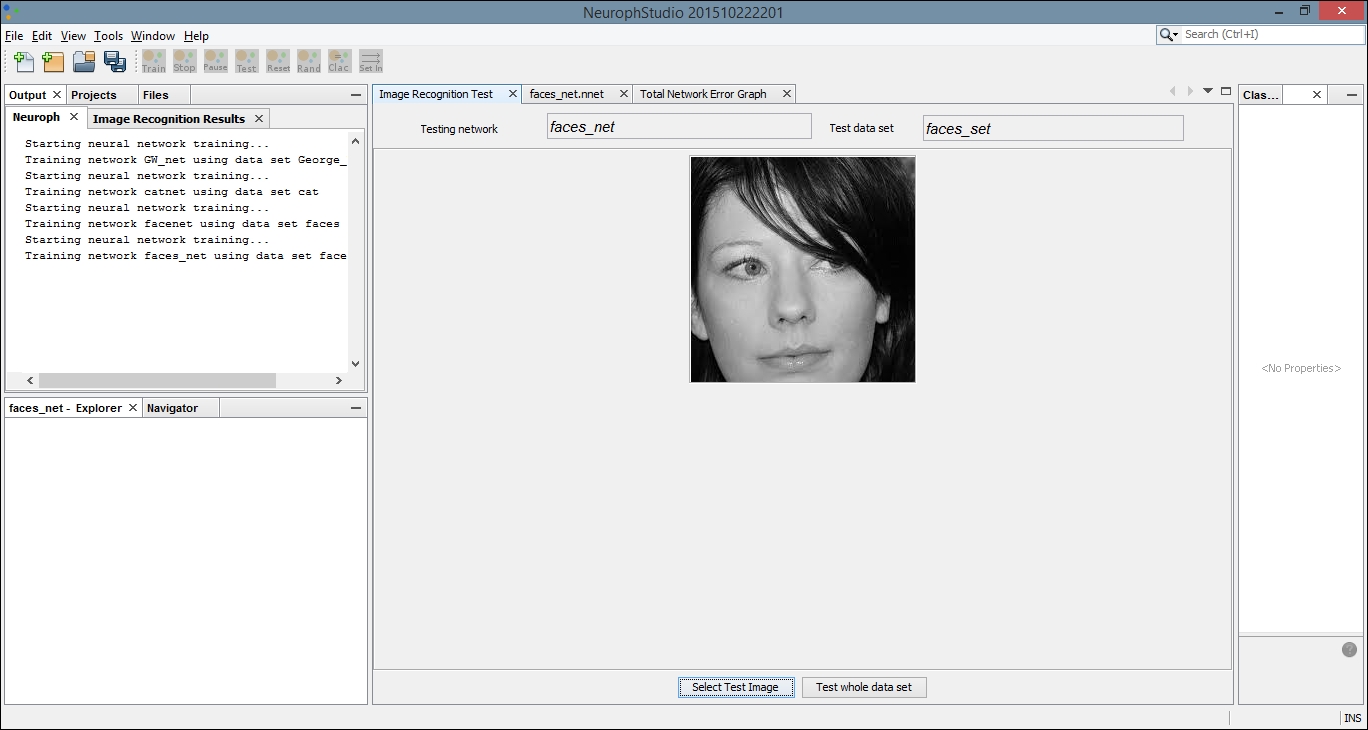

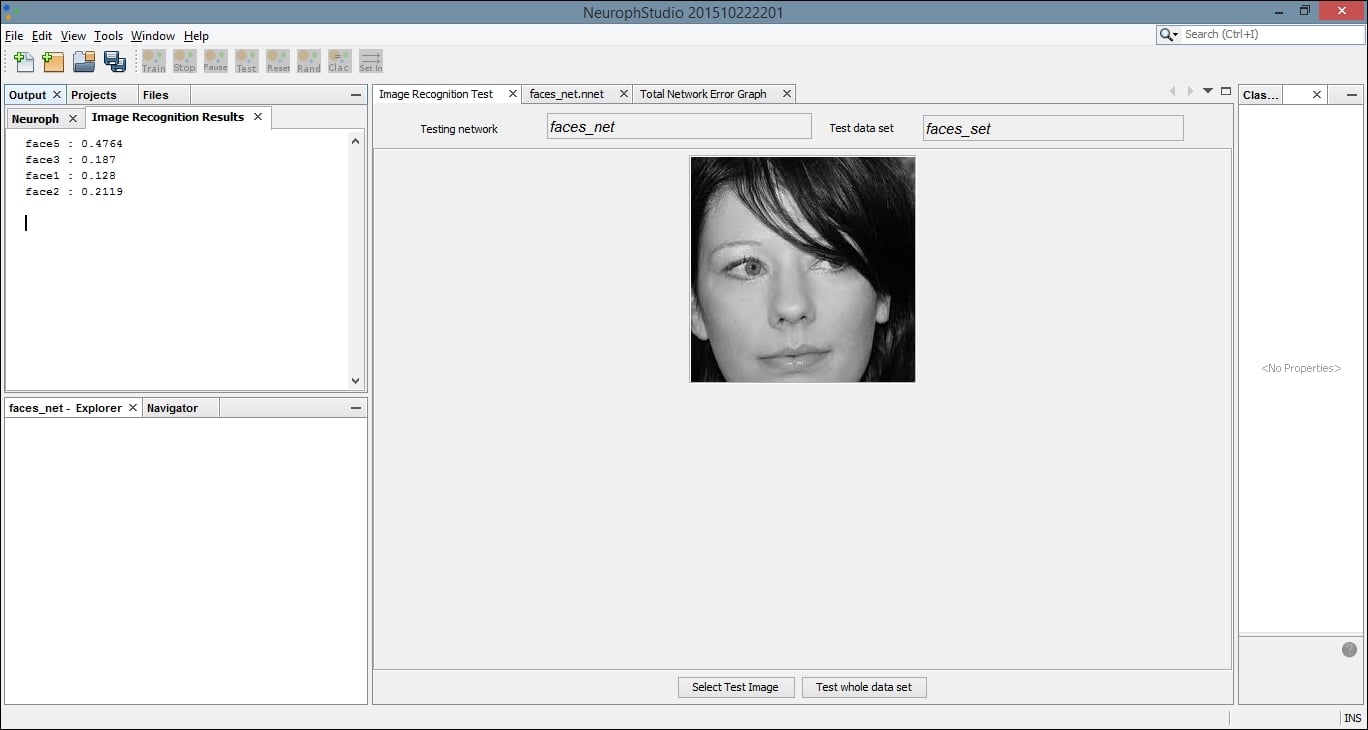

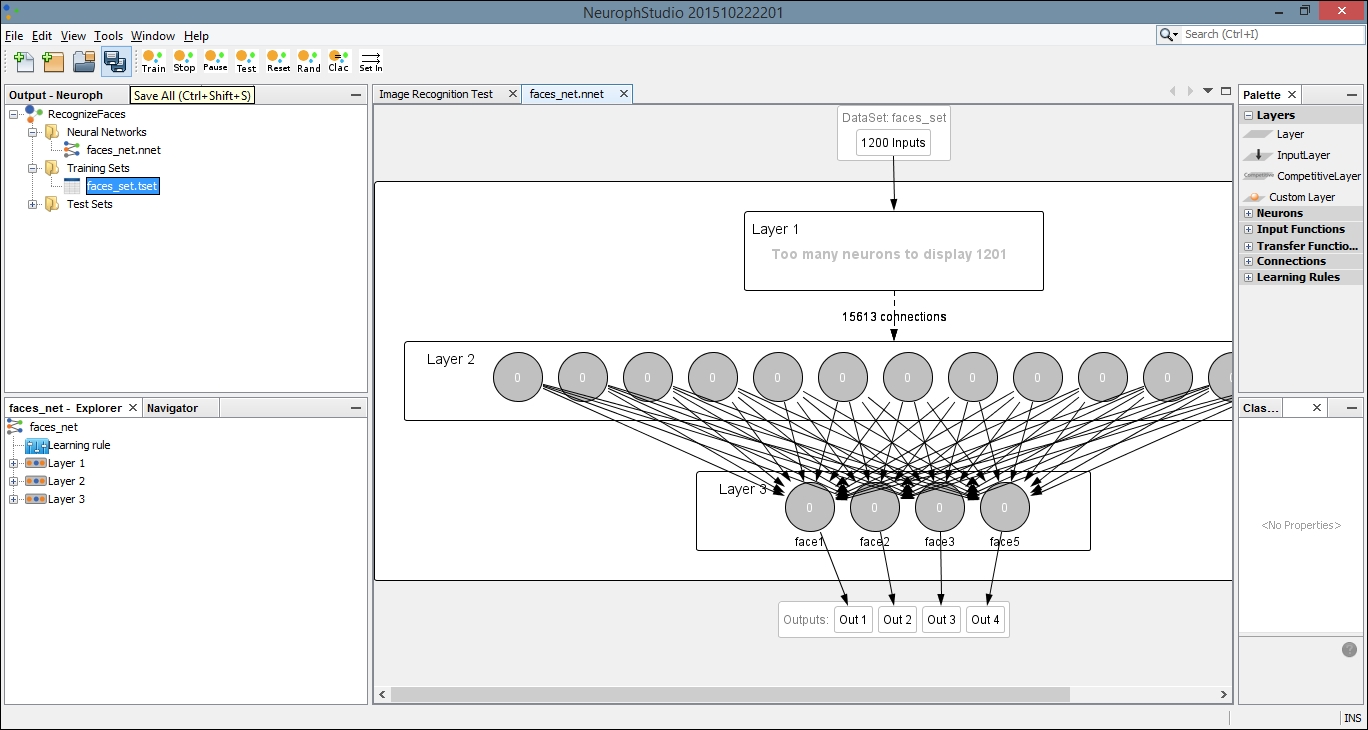

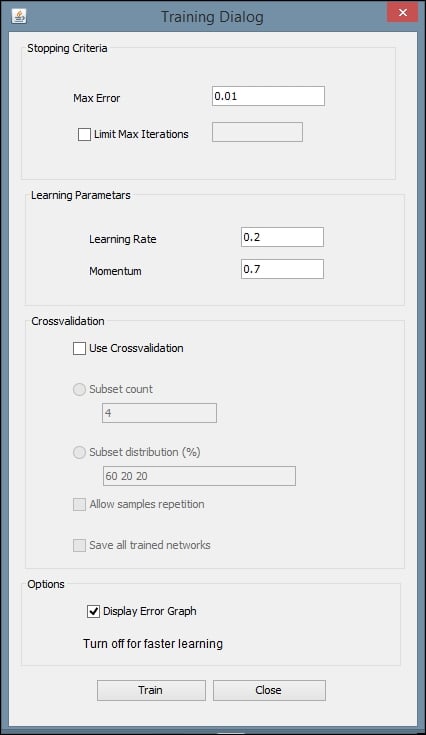

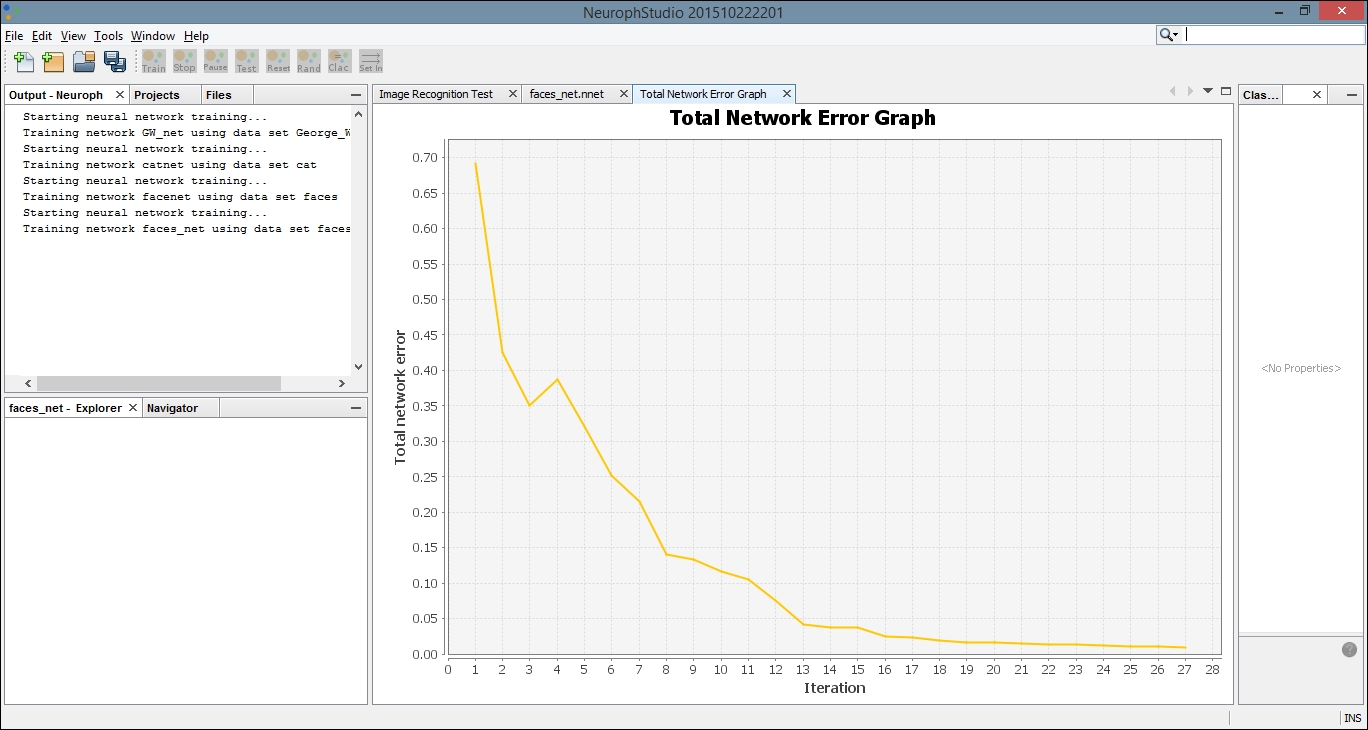

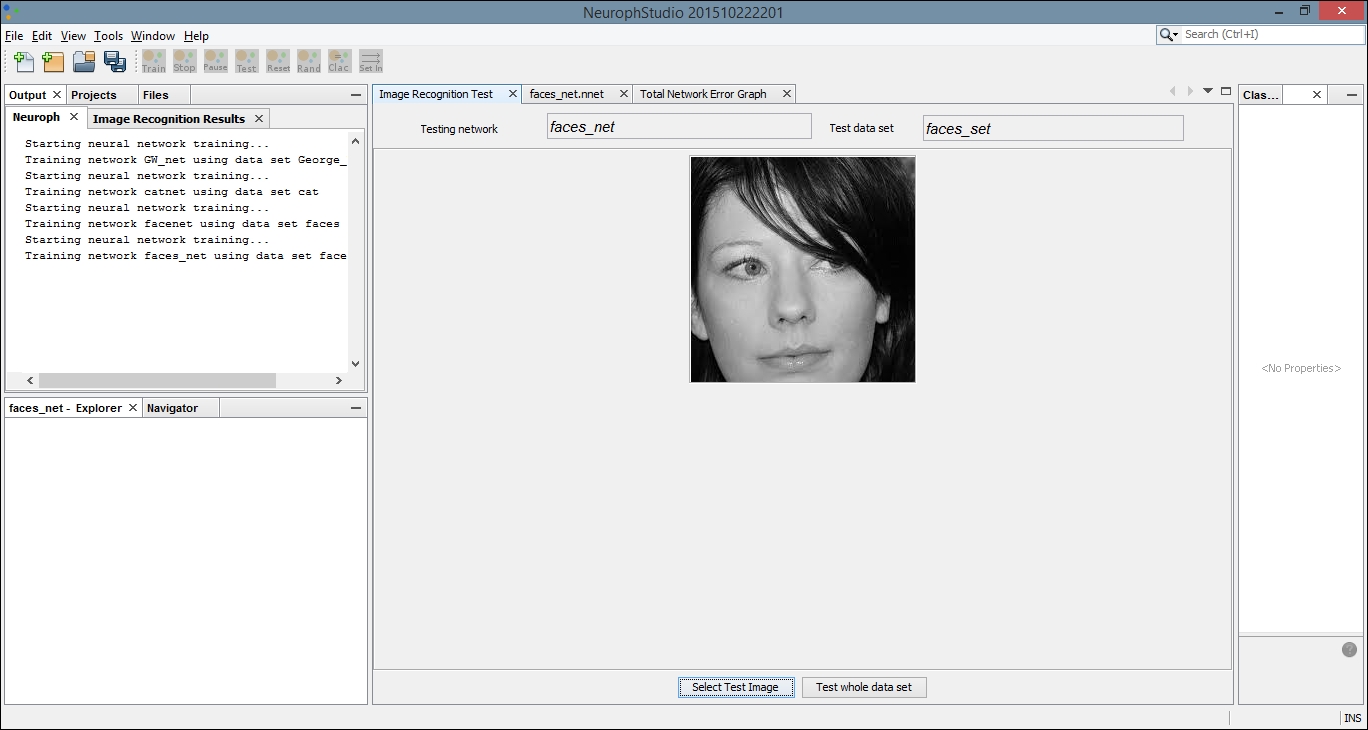

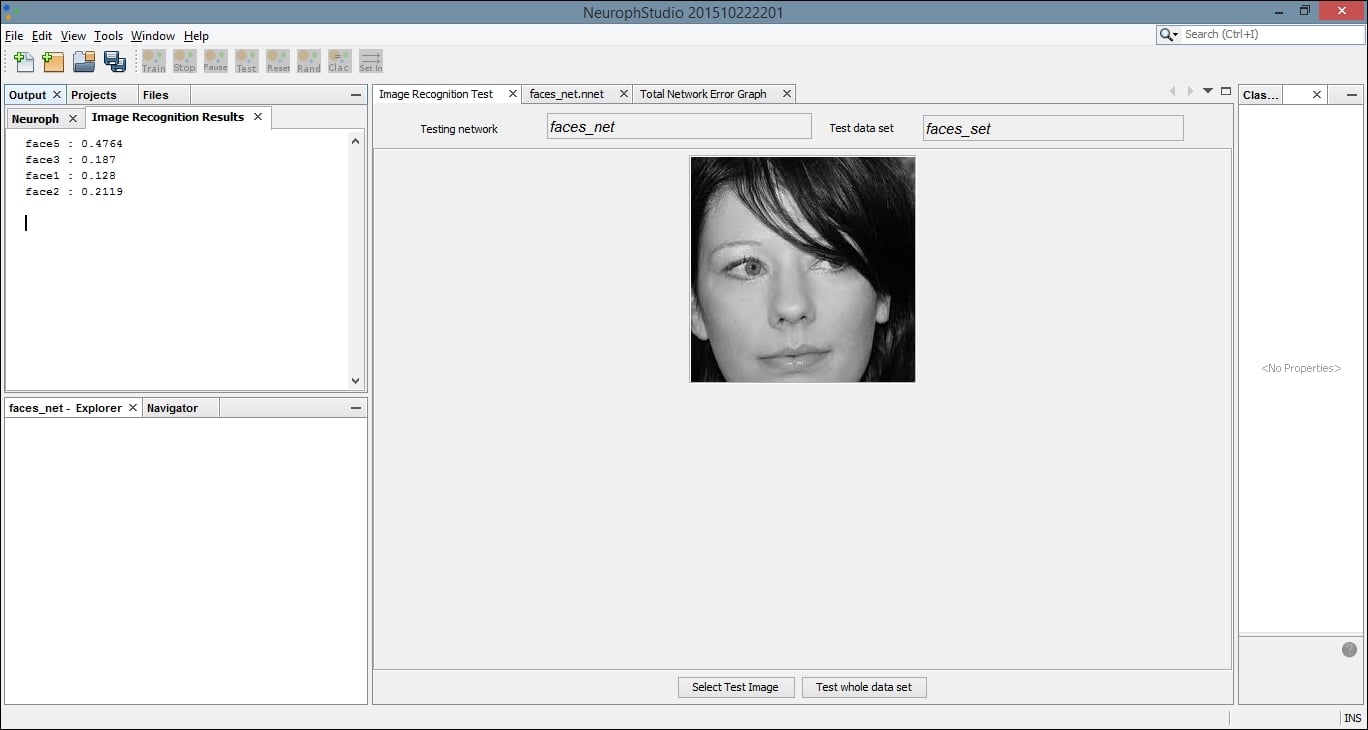

We will conclude the chapter with a discussion of NeurophStudio, a neural network Java-based editor, to classify images and perform image recognition. We train a neural network to recognize and classify faces in this section.

In Chapter 11, Mathematical and Parallel Techniques for Data Analysis, we consider some of the parallel techniques available for data science applications. Concurrent execution of a program can significantly improve performance. In relation to data science, these techniques range from low-level mathematical calculations to higher-level API-specific options.

This chapter includes a discussion of basic performance enhancement considerations. Algorithms and application architecture matter as much as enhanced code, and this should be considered when attempting to integrate parallel techniques. If an application does not behave in the expected or desired manner, any gains from parallel optimization are irrelevant.

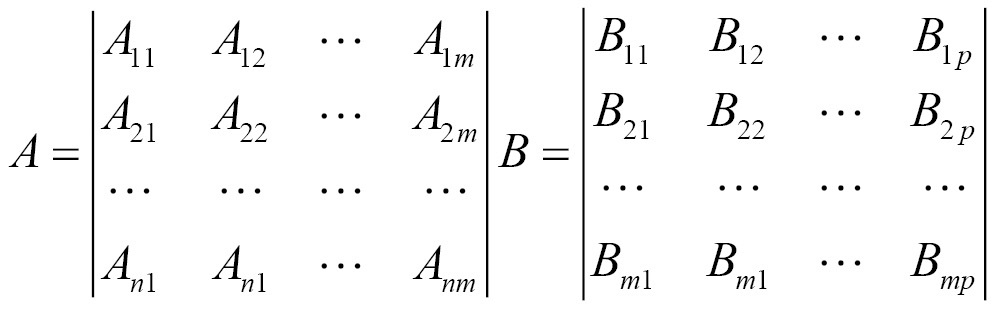

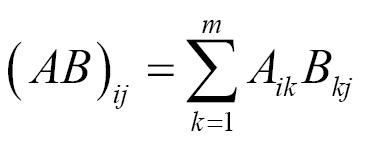

Matrix operations are essential to many data applications and supporting APIs. We will include a discussion in this chapter about matrix multiplication and how it is handled using a variety of approaches. Even though these operations are often hidden within the API, it can be useful to understand how they are supported.

One approach we demonstrate utilizes the Apache Commons Math API (http://commons.apache.org/proper/commons-math/). This API supports a large number of mathematical and statistical operations, including matrix multiplication. The following example illustrates how to perform matrix multiplication.

We first declare and initialize matrices A and B:

double[][] A = {

{0.1950, 0.0311},

{0.3588, 0.2203},

{0.1716, 0.5931},

{0.2105, 0.3242}};

double[][] B = {

{0.0502, 0.9823, 0.9472},

{0.5732, 0.2694, 0.916}};

Apache Commons uses the RealMatrix class to store a matrix. Next, we use the Array2DRowRealMatrix constructor to create the corresponding matrices for A and B:

RealMatrix aRealMatrix = new Array2DRowRealMatrix(A);

RealMatrix bRealMatrix = new Array2DRowRealMatrix(B);

We perform multiplication simply using the multiply method:

RealMatrix cRealMatrix = aRealMatrix.multiply(bRealMatrix);

Finally, we use a for loop to display the results:

for (int i = 0; i < cRealMatrix.getRowDimension(); i++) {

System.out.println(cRealMatrix.getRowVector(i));

}

The output is as follows:

{0.02761552; 0.19992684; 0.2131916}

{0.14428772; 0.41179806; 0.54165016}

{0.34857924; 0.32834382; 0.70581912}

{0.19639854; 0.29411363; 0.4963528}
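To see what the multiply method hides, the same product can be computed with the textbook triple loop in plain Java. This is a sketch for comparison only: the `PlainMatrixMultiply` class is hypothetical, it is not how Apache Commons Math is implemented internally, and it assumes rectangular, non-empty arrays whose dimensions are compatible.

```java
import java.util.Arrays;

class PlainMatrixMultiply {
    // Multiplies an n-by-m matrix by an m-by-p matrix; assumes compatible sizes
    static double[][] multiply(double[][] a, double[][] b) {
        int n = a.length;
        int m = b.length;
        int p = b[0].length;
        double[][] c = new double[n][p];
        for (int i = 0; i < n; i++) {
            for (int j = 0; j < p; j++) {
                double sum = 0.0;
                // Dot product of row i of a with column j of b
                for (int k = 0; k < m; k++) {
                    sum += a[i][k] * b[k][j];
                }
                c[i][j] = sum;
            }
        }
        return c;
    }

    public static void main(String[] args) {
        double[][] A = {
            {0.1950, 0.0311},
            {0.3588, 0.2203},
            {0.1716, 0.5931},
            {0.2105, 0.3242}};
        double[][] B = {
            {0.0502, 0.9823, 0.9472},
            {0.5732, 0.2694, 0.916}};
        for (double[] row : multiply(A, B)) {
            System.out.println(Arrays.toString(row));
        }
    }
}
```

The values agree with the Commons Math output up to floating-point rounding in the last digits.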

Another approach to concurrent processing involves the use of Java threads. Threads are used by APIs such as Aparapi when multiple CPUs or GPUs are not available.

Data science applications often take advantage of the map-reduce algorithm. We will demonstrate parallel processing by using Apache's Hadoop to perform map-reduce. Designed specifically for large datasets, Hadoop reduces processing time for large scale data science projects. We demonstrate a technique for calculating the average value of a large dataset.
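The map-reduce idea behind an average can be sketched on a single machine with Java 8 parallel streams. This is an analogy only and does not use Hadoop: the `ParallelAverage` and `SumCount` names are hypothetical. Each value is mapped to a (sum, count) pair, and the pairs are then reduced by adding them together, which is why partial results from different workers can be combined in any grouping.

```java
import java.util.stream.DoubleStream;

class ParallelAverage {
    // Partial result carried through the reduce phase: a running sum and count
    static final class SumCount {
        final double sum;
        final long count;

        SumCount(double sum, long count) {
            this.sum = sum;
            this.count = count;
        }

        // Combining two partial results is associative, so it parallelizes
        SumCount combine(SumCount other) {
            return new SumCount(sum + other.sum, count + other.count);
        }

        double average() {
            return count == 0 ? 0.0 : sum / count;
        }
    }

    static double average(double[] values) {
        return DoubleStream.of(values)
                .parallel()
                .mapToObj(v -> new SumCount(v, 1))                // map step
                .reduce(new SumCount(0.0, 0), SumCount::combine)  // reduce step
                .average();
    }

    public static void main(String[] args) {
        System.out.println(average(new double[]{1.0, 2.0, 3.0, 4.0}));
    }
}
```

Averaging the averages of each partition would be wrong when partitions differ in size, which is why the count travels with the sum.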

We also include examples of APIs that support multiple processors, including CUDA and OpenCL. CUDA is supported using Java bindings for CUDA (JCuda) (http://jcuda.org/). We also discuss OpenCL and its Java support. The Aparapi API provides high-level support for using multiple CPUs or GPUs and we include a demonstration of Aparapi in support of matrix multiplication.

In the final chapter of this book, we will tie together many of the techniques explored in the previous chapters. We will create a simple console-based application for acquiring data from Twitter and performing various types of data manipulation and analysis. Our goal in this chapter is to demonstrate a simple project exploring a variety of data science concepts and provide insights and considerations for future projects.

Specifically, the application developed in the final chapter performs several high-level tasks, including data acquisition, data cleaning, sentiment analysis, and basic statistical collection. We demonstrate these techniques using Java 8 Streams and focus on Java 8 approaches whenever possible.

Data science is a broad, diverse field of study and it would be impossible to explore exhaustively within this book. We hope to provide a solid understanding of important data science concepts and equip the reader for further study. In particular, this book will provide concrete examples of different techniques for all stages of data science related inquiries. This ranges from data acquisition and cleaning to detailed statistical analysis.

So let's start with a discussion of data acquisition and how Java supports it as illustrated in the next chapter.

It is never much fun to work with code that is not formatted properly or uses variable names that do not convey their intended purpose. The same can be said of data, except that bad data can also produce inaccurate results. Thus, data acquisition is an important step in the analysis of data. Data is available from a variety of sources but must be retrieved and ultimately processed before it can be useful. We can find it in numerous public data sources as simple files, or it may be found in more complex forms across the Internet. In this chapter, we will demonstrate how to acquire data from several of these, including various Internet sites and several social media sites.

We can access data from the Internet by downloading specific files or through a process known as web scraping, which involves extracting the contents of a web page. We also explore a related topic known as web crawling, which involves applications that examine a web site to determine whether it is of interest and then follow embedded links to identify other potentially relevant pages.

We can also extract data from social media sites. These types of sites often hold a treasure trove of data that is readily available if we know how to access it. In this chapter, we will demonstrate how to extract data from several sites, including:

- Wikipedia

- Flickr

- YouTube

When extracting data from a site, many different data formats may be encountered. We will examine three basic types: text, audio, and video. However, even within text, audio, and video data, many formats exist. For audio data alone, there are 45 audio coding formats compared at https://en.wikipedia.org/wiki/Comparison_of_audio_coding_formats. For textual data, there are almost 300 formats listed at http://fileinfo.com/filetypes/text. In this chapter, we will focus on how to download and extract these types of text as plain text for eventual processing.

We will briefly examine different data formats, followed by an examination of possible data sources. We need this knowledge to demonstrate how to obtain data using different data acquisition techniques.

When we discuss data formats, we are referring to content format, as opposed to the underlying file format, which may not even be visible to most developers. We cannot examine all available formats due to the vast number of formats available. Instead, we will tackle several of the more common formats, providing adequate examples to address the most common data retrieval needs. Specifically, we will demonstrate how to retrieve data stored in the following formats:

- HTML

- CSV/TSV

- Spreadsheets

- Databases

- JSON

- XML

Some of these formats are well supported and documented elsewhere. For example, XML has been in use for years and there are several well-established techniques for accessing XML data in Java. For these types of data, we will outline the major techniques available and show a few examples to illustrate how they work. This will provide those readers who are not familiar with the technology some insight into their nature.

The most common data format is binary files. For example, Word, Excel, and PDF documents are all stored in binary. These require special software to extract information from them. Text data is also very common.

Comma Separated Values (CSV) files contain tabular data organized in a row-column format. The data is stored as plain text in rows, also called records. Each record contains fields separated by commas. These files are closely related to other delimited files, most notably Tab-Separated Values (TSV) files. The following is a part of a simple CSV file, and these numbers are not intended to represent any specific type of data:

JURISDICTION NAME,COUNT PARTICIPANTS,COUNT FEMALE,PERCENT FEMALE

10001,44,22,0.5

10002,35,19,0.54

10003,1,1,1

Notice that the first row contains header data to describe the subsequent records. Each value is separated by a comma and corresponds to the header in the same position. In Chapter 3, Data Cleaning, we will discuss CSV files in more depth and examine the support available for different types of delimiters.
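The relationship between the header row and the records can be illustrated with a few lines of plain Java. This is a minimal sketch, assuming no quoted fields or embedded commas; the `SimpleCsvReader` class is hypothetical, and a dedicated CSV library is a better choice for real files.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

class SimpleCsvReader {
    // Maps each record's fields to the header names in the same position.
    // Assumes plain comma-separated text with no quoting or escaped commas.
    static List<Map<String, String>> parse(List<String> lines) {
        List<Map<String, String>> records = new ArrayList<>();
        if (lines.isEmpty()) {
            return records;
        }
        String[] header = lines.get(0).split(",", -1);
        for (String line : lines.subList(1, lines.size())) {
            String[] fields = line.split(",", -1);
            Map<String, String> record = new LinkedHashMap<>();
            for (int i = 0; i < header.length && i < fields.length; i++) {
                record.put(header[i].trim(), fields[i].trim());
            }
            records.add(record);
        }
        return records;
    }

    public static void main(String[] args) {
        List<String> lines = Arrays.asList(
                "JURISDICTION NAME,COUNT PARTICIPANTS,COUNT FEMALE,PERCENT FEMALE",
                "10001,44,22,0.5",
                "10002,35,19,0.54",
                "10003,1,1,1");
        for (Map<String, String> record : parse(lines)) {
            System.out.println(record.get("JURISDICTION NAME")
                    + " -> " + record.get("PERCENT FEMALE"));
        }
    }
}
```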

Spreadsheets are a form of tabular data where information is stored in rows and columns, much like a two-dimensional array. They typically contain numeric and textual information and use formulas to summarize and analyze their contents. Most people are familiar with Excel spreadsheets, but they are also found as part of other product suites, such as OpenOffice.

Spreadsheets are an important data source because they have been used for the past several decades to store information in many industries and applications. Their tabular nature makes them easy to process and analyze. It is important to know how to extract data from this ubiquitous data source so that we can take advantage of the wealth of information that is stored in them.

For some of our examples, we will use a simple Excel spreadsheet that consists of a series of rows containing an ID, along with minimum, maximum, and average values. These numbers are not intended to represent any specific type of data. The spreadsheet looks like this:

| ID | Minimum | Maximum | Average |
|----|---------|---------|---------|

In Chapter 3, Data Cleaning, we will learn how to extract data from spreadsheets.

Data can be found in Database Management Systems (DBMS), which, like spreadsheets, are ubiquitous. Java provides a rich set of options for accessing and processing data in a DBMS. The intent of this section is to provide a basic introduction to database access using Java.

We will demonstrate the essence of connecting to a database, storing information, and retrieving information using JDBC. For this example, we used the MySQL DBMS. However, it will work for other DBMSes as well with a change in the database driver. We created a database called example and a table called URLTABLE using the following command within the MySQL Workbench. There are other tools that can achieve the same results:

CREATE TABLE IF NOT EXISTS `URLTABLE` (

`RecordID` INT(11) NOT NULL AUTO_INCREMENT,

`URL` text NOT NULL,

PRIMARY KEY (`RecordID`)

);

We start with a try block to handle exceptions. A driver is needed to connect to the DBMS. In this example, we used com.mysql.jdbc.Driver. To connect to the database, the getConnection method is used, where the database server location, user ID, and password are passed. These values depend on the DBMS used:

try {

Class.forName("com.mysql.jdbc.Driver");

String url = "jdbc:mysql://localhost:3306/example";

connection = DriverManager.getConnection(url, "user ID",

"password");

...

} catch (SQLException | ClassNotFoundException ex) {

// Handle exceptions

}

Next, we will illustrate how to add information to the database and then how to read it. The SQL INSERT command is constructed in a string. The name of the MySQL database is example. This command will insert values into the URLTABLE table in the database where the question mark is a placeholder for the value to be inserted:

String insertSQL = "INSERT INTO `example`.`URLTABLE` "

+ "(`url`) VALUES " + "(?);";

The PreparedStatement class represents an SQL statement to execute. The prepareStatement method creates an instance of the class using the INSERT SQL statement:

PreparedStatement stmt = connection.prepareStatement(insertSQL);

We then add URLs to the table using the setString method and the execute method. The setString method takes two arguments. The first specifies the 1-based index of the placeholder to fill, and the second is the value to be inserted. The execute method does the actual insertion. We add two URLs in the next sequence:

stmt.setString(1, "https://en.wikipedia.org/wiki/Data_science");

stmt.execute();

stmt.setString(1,

"https://en.wikipedia.org/wiki/Bishop_Rock,_Isles_of_Scilly");

stmt.execute();

To read the data, we use a SQL SELECT statement as declared in the selectSQL string. This will return all the rows and columns from the URLTABLE table. The createStatement method creates an instance of the Statement class, which is suited to SQL statements that take no parameters, such as this SELECT. The executeQuery method executes the query and returns a ResultSet instance that holds the contents of the table:

String selectSQL = "select * from URLTABLE";

Statement statement = connection.createStatement();

ResultSet resultSet = statement.executeQuery(selectSQL);

The following sequence iterates through the table, displaying one row at a time. The argument of the getString method specifies that we want to use the second column of the result set, which corresponds to the URL field:

out.println("List of URLs");

while (resultSet.next()) {

out.println(resultSet.getString(2));

}

The output of this example, when executed, is as follows:

List of URLs

https://en.wikipedia.org/wiki/Data_science

https://en.wikipedia.org/wiki/Bishop_Rock,_Isles_of_Scilly

If you need to empty the contents of the table, use the following sequence:

Statement statement = connection.createStatement();

statement.execute("TRUNCATE URLTABLE;");

This was a brief introduction to database access using Java. There are many resources available that will provide more in-depth coverage of this topic. For example, Oracle provides a more in-depth introduction to this topic at https://docs.oracle.com/javase/tutorial/jdbc/.

The Portable Document Format (PDF) is a format not tied to a specific platform or software application. A PDF document can hold formatted text and images. PDF is an open standard, making it useful in a variety of places.

There are a large number of documents stored as PDF, making it a valuable source of data. There are several Java APIs that allow access to PDF documents, such as Apache PDFBox. Techniques for extracting information from a PDF document are illustrated in Chapter 3, Data Cleaning.

JavaScript Object Notation (JSON) (http://www.JSON.org/) is a data format used to interchange data. It is easy for humans or machines to read and write. JSON is supported by many languages, including Java, which has several JSON libraries listed at http://www.JSON.org/.

A JSON entity is composed of a set of name-value pairs enclosed in curly braces. We will use this format in several of our examples. In handling YouTube, we will use a JSON object, part of which is shown next, representing one result of a YouTube search request:

{

"kind": "youtube#searchResult",

"etag": "etag",

"id": {

"kind": string,

"videoId": string,

"channelId": string,

"playlistId": string

},

...

}

Accessing the fields and values of such a document is not hard and is illustrated in Chapter 3, Data Cleaning.

Extensible Markup Language (XML) is a markup language that specifies a standard document format. Widely used to communicate between applications and across the Internet, XML is popular due to its relative simplicity and flexibility. Documents encoded in XML are character-based and easily read by machines and humans.

XML documents contain markup and content characters. These characters allow parsers to classify the information contained within the document. The document consists of tags, and elements are stored within the tags. Elements may also contain other markup tags and form child elements. Additionally, elements may contain attributes or specific characteristics stored as a name-and-value pair.

An XML document must be well-formed. This means it must follow certain rules such as always using closing tags and only a single root tag. Other rules are discussed at https://en.wikipedia.org/wiki/XML#Well-formedness_and_error-handling.
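One way to test well-formedness in Java is simply to parse the document and see whether the parser reports a fatal error. The following is a minimal sketch using the JDK's built-in DOM parser; the `WellFormedCheck` class name is hypothetical, and the check says nothing about validity against a DTD or schema.

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import javax.xml.parsers.DocumentBuilder;
import javax.xml.parsers.DocumentBuilderFactory;
import javax.xml.parsers.ParserConfigurationException;
import org.xml.sax.SAXException;
import org.xml.sax.helpers.DefaultHandler;

class WellFormedCheck {
    // Returns true if the text parses as XML, false if the parser rejects it
    static boolean isWellFormed(String xml) {
        try {
            DocumentBuilder builder =
                    DocumentBuilderFactory.newInstance().newDocumentBuilder();
            // DefaultHandler rethrows fatal errors without printing to stderr
            builder.setErrorHandler(new DefaultHandler());
            builder.parse(new ByteArrayInputStream(
                    xml.getBytes(StandardCharsets.UTF_8)));
            return true;
        } catch (SAXException ex) {
            return false; // for example, a missing closing tag or two roots
        } catch (ParserConfigurationException | IOException ex) {
            throw new IllegalStateException(ex);
        }
    }

    public static void main(String[] args) {
        System.out.println(isWellFormed("<music><song/></music>"));      // true
        System.out.println(isWellFormed("<music><song></music>"));       // false
        System.out.println(isWellFormed("<music/><library/>"));          // false
    }
}
```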

The Java API for XML Processing (JAXP) consists of three interfaces for parsing XML data. The Document Object Model (DOM) interface parses an XML document and returns a tree structure delineating the structure of the document. The DOM interface parses an entire document as a whole. Alternatively, the Simple API for XML (SAX) parses a document one element at a time. SAX is preferable when memory usage is a concern as DOM requires more resources to construct the tree. DOM, however, offers flexibility over SAX in that any element can be accessed at any time and in any order.

The third Java API is known as Streaming API for XML (StAX). This streaming model was designed to accommodate the best parts of DOM and SAX models by granting flexibility without sacrificing resources. StAX exhibits higher performance, with the trade-off being that access is only available to one location in a document at a time. StAX is the preferred technique if you already know how you want to process the document, but it is also popular for applications with limited available memory.

The following is a simple XML file. Each <text> represents a tag, labelling the element contained within the tags. In this case, the largest node in our file is <music> and contained within it are sets of song data. Each tag within a <song> tag describes another element corresponding to that song. Every tag will eventually have a closing tag, such as </song>. Notice that the first tag contains information about which XML version should be used to parse the file:

<?xml version="1.0"?>

<music>

<song id="1234">

<artist>Patton, Courtney</artist>

<name>So This Is Life</name>

<genre>Country</genre>

<price>2.99</price>

</song>

<song id="5678">

<artist>Eady, Jason</artist>

<name>AM Country Heaven</name>

<genre>Country</genre>

<price>2.99</price>

</song>

</music>
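As an illustration of the DOM approach, the file above can be loaded into a tree and queried by tag name using only classes that ship with the JDK. This is a sketch rather than the technique used later in the book; the `MusicDomExample` class is hypothetical, and the XML is supplied as a string for brevity.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

class MusicDomExample {
    // Returns "id: name" for every <song> element in the document
    static List<String> songNames(String xml) {
        try {
            Document doc = DocumentBuilderFactory.newInstance()
                    .newDocumentBuilder()
                    .parse(new ByteArrayInputStream(
                            xml.getBytes(StandardCharsets.UTF_8)));
            NodeList songs = doc.getElementsByTagName("song");
            List<String> result = new ArrayList<>();
            for (int i = 0; i < songs.getLength(); i++) {
                Element song = (Element) songs.item(i);
                // Attributes and child elements are both reachable from the node
                String id = song.getAttribute("id");
                String name = song.getElementsByTagName("name")
                        .item(0).getTextContent();
                result.add(id + ": " + name);
            }
            return result;
        } catch (Exception ex) {
            throw new IllegalStateException("Could not parse XML", ex);
        }
    }

    public static void main(String[] args) {
        String xml = "<?xml version=\"1.0\"?>"
                + "<music>"
                + "<song id=\"1234\"><artist>Patton, Courtney</artist>"
                + "<name>So This Is Life</name></song>"
                + "<song id=\"5678\"><artist>Eady, Jason</artist>"
                + "<name>AM Country Heaven</name></song>"
                + "</music>";
        songNames(xml).forEach(System.out::println);
    }
}
```

Because the whole tree is held in memory, the songs could be visited in any order, which is the flexibility DOM trades memory for.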

There are numerous other XML-related technologies. For example, we can validate a specific XML document using either a DTD document or an XML schema written specifically for that XML document. XML documents can be transformed into a different format using XSLT.

Streaming data refers to data generated in a continuous stream and accessed in a sequential, piece-by-piece manner. Much of the data the average Internet user accesses is streamed, including video and audio channels, or text and image data on social media sites. Streaming data is the preferred method when the data is new and changing quickly, or when large data collections are sought.

Streamed data is often ideal for data science research because it generally exists in large quantities and raw format. Much public streaming data is available for free and supported by Java APIs. In this chapter, we are going to examine how to acquire data from streaming sources, including Twitter, Flickr, and YouTube. Despite the use of different techniques and APIs, you will notice similarities between the techniques used to pull data from these sites.

There are a large number of formats used to represent images, videos, and audio. This type of data is typically stored in binary format. Analog audio streams are sampled and digitized. Images are often simply collections of bits representing the color of a pixel. The following are links that provide a more in-depth discussion of some of these formats:

Frequently, this type of data can be quite large and must be compressed. When data is compressed two approaches are used. The first is a lossless compression, where less space is used and there is no loss of information. The second is lossy, where information is lost. Losing information is not always a bad thing as sometimes the loss is not noticeable to humans.
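The defining property of lossless compression, that decompression returns exactly the original bytes, can be checked with the JDK's java.util.zip classes. The following is a small sketch using the DEFLATE algorithm; the `LosslessRoundTrip` class is hypothetical, and media formats typically use their own specialized codecs.

```java
import java.io.ByteArrayOutputStream;
import java.util.Arrays;
import java.util.zip.DataFormatException;
import java.util.zip.Deflater;
import java.util.zip.Inflater;

class LosslessRoundTrip {
    static byte[] compress(byte[] input) {
        Deflater deflater = new Deflater();
        deflater.setInput(input);
        deflater.finish();
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        byte[] chunk = new byte[1024];
        while (!deflater.finished()) {
            int n = deflater.deflate(chunk);
            out.write(chunk, 0, n);
        }
        deflater.end();
        return out.toByteArray();
    }

    static byte[] decompress(byte[] compressed) {
        Inflater inflater = new Inflater();
        inflater.setInput(compressed);
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        byte[] chunk = new byte[1024];
        try {
            while (!inflater.finished()) {
                int n = inflater.inflate(chunk);
                // Guard against looping forever on truncated input
                if (n == 0 && (inflater.needsInput() || inflater.needsDictionary())) {
                    throw new IllegalArgumentException("Truncated or unsupported input");
                }
                out.write(chunk, 0, n);
            }
        } catch (DataFormatException ex) {
            throw new IllegalArgumentException(ex);
        } finally {
            inflater.end();
        }
        return out.toByteArray();
    }

    public static void main(String[] args) {
        byte[] original = new byte[10_000];
        Arrays.fill(original, (byte) 'a'); // highly repetitive, so it compresses well
        byte[] packed = compress(original);
        System.out.println(original.length + " bytes -> " + packed.length + " bytes");
        System.out.println("Identical after round trip: "
                + Arrays.equals(original, decompress(packed)));
    }
}
```

A lossy codec would fail the final equality check by design, since it discards information to save more space.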

As we will demonstrate in Chapter 3, Data Cleaning, this type of data is often degraded in ways that are inconvenient to work with and may need to be cleaned. For example, there may be background noise in an audio recording or an image may need to be smoothed before it can be processed. Image smoothing is demonstrated in Chapter 3, Data Cleaning, using the OpenCV library.

In this section, we will illustrate how to acquire data from web pages. Web pages contain a potential bounty of useful information. We will demonstrate how to access web pages using several technologies, starting with a low-level approach supported by the HttpURLConnection class. To find pages, a web crawler application is often used. Once a useful page has been identified, information needs to be extracted from the page. This is often performed using an HTML parser. Extracting this information is important because it is often buried amid a clutter of HTML tags and JavaScript code.

The contents of a web page can be accessed using the HttpURLConnection class. This is a low-level approach that requires the developer to do a lot of footwork to extract relevant content. However, he or she is able to exercise greater control over how the content is handled. In some situations, this approach may be preferable to using other API libraries.

We will demonstrate how to download the content of Wikipedia's data science page using this class. We start with a try/catch block to handle exceptions. A URL object is created using the data science URL string. The openConnection method will create a connection to the Wikipedia server as shown here:

try {

URL url = new URL(

"https://en.wikipedia.org/wiki/Data_science");

HttpURLConnection connection = (HttpURLConnection)

url.openConnection();

...

} catch (MalformedURLException ex) {

// Handle exceptions

} catch (IOException ex) {

// Handle exceptions

}

The connection object is initialized with an HTTP GET command. The connect method is then executed to connect to the server:

connection.setRequestMethod("GET");

connection.connect();

Assuming no errors were encountered, we can determine whether the response was successful using the getResponseCode method. A normal return value is 200. The content of a web page can vary. For example, the getContentType method returns a string describing the page's content. The getContentLength method returns its length, or -1 when the length is not known:

out.println("Response Code: " + connection.getResponseCode());

out.println("Content Type: " + connection.getContentType());

out.println("Content Length: " + connection.getContentLength());

Assuming that we get an HTML formatted page, the next sequence illustrates how to get this content. A BufferedReader instance is created, and one line at a time is read in from the web site and appended to a StringBuilder instance. The buffer is then displayed:

InputStreamReader isr = new InputStreamReader((InputStream)

connection.getContent());

BufferedReader br = new BufferedReader(isr);

StringBuilder buffer = new StringBuilder();

String line;

// Stop at the end of the stream so that a trailing "null" is not appended

while ((line = br.readLine()) != null) {

buffer.append(line).append("\n");

}

out.println(buffer.toString());

The abbreviated output is shown here:

Response Code: 200

Content Type: text/html; charset=UTF-8

Content Length: -1

<!DOCTYPE html>

<html lang="en" dir="ltr" class="client-nojs">

<head>

<meta charset="UTF-8"/>

<title>Data science - Wikipedia, the free encyclopedia</title>

<script>document.documentElement.className = ...

"wgHostname":"mw1251"});});</script>

</body>

</html>
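The reading step does not depend on the network and can be factored into a helper that works on any InputStream. The following is a sketch under stated assumptions: the `StreamText` class is hypothetical, and the content is assumed to be UTF-8, as the Content Type line in the output indicates for this page.

```java
import java.io.BufferedReader;
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.InputStreamReader;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.util.stream.Collectors;

class StreamText {
    // Reads the whole stream as UTF-8 text and closes it when done
    static String readAll(InputStream input) {
        try (BufferedReader reader = new BufferedReader(
                new InputStreamReader(input, StandardCharsets.UTF_8))) {
            return reader.lines().collect(Collectors.joining("\n"));
        } catch (IOException ex) {
            throw new UncheckedIOException(ex);
        }
    }

    public static void main(String[] args) {
        // An in-memory stream stands in for the connection's input stream
        InputStream sample = new ByteArrayInputStream(
                "<html>\n<body>Hello</body>\n</html>"
                        .getBytes(StandardCharsets.UTF_8));
        System.out.println(readAll(sample));
    }
}
```

With a live connection, the helper could be called as `StreamText.readAll(connection.getInputStream())`. The try-with-resources statement ensures the reader is closed even if reading fails.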

While this is feasible, there are easier methods for getting the contents of a web page. One of these techniques is discussed in the next section.

Web crawling is the process of traversing a series of interconnected web pages and extracting relevant information from those pages. It does this by isolating and then following links on a page. While there are many precompiled datasets readily available, it may still be necessary to collect data directly off the Internet. Some sources such as news sites are continually being updated and need to be revisited from time to time.

A web crawler is an application that visits various sites and collects information. The web crawling process consists of a series of steps:

- Select a URL to visit

- Fetch the page

- Parse the page

- Extract relevant content

- Extract relevant URLs to visit

This process is repeated for each URL visited.

There are several issues that need to be considered when fetching and parsing a page such as:

- Page importance: We do not want to process irrelevant pages.

- Exclusively HTML: We will not normally follow links to images, for example.

- Spider traps: We want to bypass sites that may result in an infinite number of requests. This can occur with dynamically generated pages where one request leads to another.

- Repetition: It is important to avoid crawling the same page more than once.

- Politeness: Do not make an excessive number of requests to a website. Observe the robots.txt files; they specify which parts of a site should not be crawled.
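The crawl steps, together with the repetition concern and a page limit, can be sketched without any network access. In the following hypothetical example, an in-memory map from each URL to its outgoing links stands in for fetching and parsing a page, so only the control flow of a crawler is illustrated.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.Deque;
import java.util.HashMap;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

class CrawlLoopSketch {
    // links maps a URL to the URLs found on that page (a stand-in for
    // fetching and parsing); returns the pages visited, in visit order
    static List<String> crawl(String start, Map<String, List<String>> links,
            int pageLimit) {
        Deque<String> frontier = new ArrayDeque<>();
        Set<String> visited = new LinkedHashSet<>();
        frontier.add(start);
        while (!frontier.isEmpty() && visited.size() < pageLimit) {
            String url = frontier.poll();          // select a URL to visit
            if (!visited.add(url)) {
                continue;                          // repetition: already crawled
            }
            List<String> outgoing =                // "fetch" and "parse" the page
                    links.getOrDefault(url, Collections.emptyList());
            for (String next : outgoing) {         // extract URLs to visit
                if (!visited.contains(next)) {
                    frontier.add(next);
                }
            }
        }
        return new ArrayList<>(visited);
    }

    public static void main(String[] args) {
        Map<String, List<String>> links = new HashMap<>();
        links.put("a", Arrays.asList("b", "c"));
        links.put("b", Arrays.asList("a", "c"));
        links.put("c", Arrays.asList("a"));
        System.out.println(crawl("a", links, 10)); // prints [a, b, c]
    }
}
```

Using a queue gives a breadth-first crawl. A production crawler would also add politeness delays and honor robots.txt, which this sketch omits.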

The process of creating a web crawler can be daunting. For all but the simplest needs, it is recommended that one of several open source web crawlers be used. A partial list follows:

- Nutch: http://nutch.apache.org

- crawler4j: https://github.com/yasserg/crawler4j

- JSpider: http://j-spider.sourceforge.net/

- WebSPHINX: http://www.cs.cmu.edu/~rcm/websphinx/

- Heritrix: https://webarchive.jira.com/wiki/display/Heritrix

We can either create our own web crawler or use an existing crawler and in this chapter we will examine both approaches. For specialized processing, it can be desirable to use a custom crawler. We will demonstrate how to create a simple web crawler in Java to provide more insight into how web crawlers work. This will be followed by a brief discussion of other web crawlers.

Now that we have a basic understanding of web crawlers, we are ready to create our own. In this simple web crawler, we will keep track of the pages visited using ArrayList instances. In addition, jsoup will be used to parse a web page and we will limit the number of pages we visit. Jsoup (https://jsoup.org/) is an open source HTML parser. This example demonstrates the basic structure of a web crawler and also highlights some of the issues involved in creating a web crawler.

We will use the SimpleWebCrawler class, as declared here:

public class SimpleWebCrawler {

private String topic;

private String startingURL;

private String urlLimiter;

private final int pageLimit = 20;

private ArrayList<String> visitedList = new ArrayList<>();

private ArrayList<String> pageList = new ArrayList<>();

...

public static void main(String[] args) {

new SimpleWebCrawler();

}

}

The instance variables are detailed here:

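| Variable | Use |
|----------|-----|
| topic | The keyword that needs to be in a page for the page to be accepted |
| startingURL | The URL of the first page |
| urlLimiter | A string that must be contained in a link before it will be followed |
| pageLimit | The maximum number of pages to retrieve |
| visitedList | The ArrayList of URLs that have already been visited |
| pageList | An ArrayList of the URLs of accepted pages |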

In the SimpleWebCrawler constructor, we initialize the instance variables to begin the search from the Wikipedia page for Bishop Rock, a small rock in the Isles of Scilly off the southwest coast of England. This was chosen to minimize the number of pages that might be retrieved. As we will see, there are many more Wikipedia pages dealing with Bishop Rock than one might think.

The urlLimiter variable is set to Bishop_Rock, which will restrict the embedded links to follow to just those containing that string. Each page of interest must contain the value stored in the topic variable. The visitPage method performs the actual crawl:

public SimpleWebCrawler() {

startingURL = "https://en.wikipedia.org/wiki/Bishop_Rock,_"

+ "Isles_of_Scilly";

urlLimiter = "Bishop_Rock";

topic = "shipping route";

visitPage(startingURL);

}

In the visitPage method, the pageList ArrayList is checked to see whether the maximum number of accepted pages has been exceeded. If the limit has been exceeded, then the search terminates:

public void visitPage(String url) {

if (pageList.size() >= pageLimit) {

return;

}

...

}

If the page has already been visited, then we ignore it. Otherwise, it is added to the visited list:

if (visitedList.contains(url)) {

// URL already visited

} else {

visitedList.add(url);

...

}

Jsoup is used to parse the page and return a Document object. Many exceptions and problems can occur, such as a malformed URL, retrieval timeouts, or simply bad links, and the catch block needs to handle them. A more in-depth explanation of jsoup appears in the discussion of web scraping in Java:

try {

Document doc = Jsoup.connect(url).get();

...

} catch (Exception ex) {

// Handle exceptions

}

If the document contains the topic text, then the link is displayed and added to the pageList ArrayList. Each embedded link is obtained, and if the link contains the limiting text, then the visitPage method is called recursively:

if (doc.text().contains(topic)) {

out.println((pageList.size() + 1) + ": [" + url + "]");

pageList.add(url);

// Process page links

Elements links = doc.select("a[href]");

for (Element link : links) {

if (link.attr("href").contains(urlLimiter)) {

visitPage(link.attr("abs:href"));

}

}

}

This approach only examines links in those pages that contain the topic text. Moving the for loop outside of the if statement will test the links for all pages.
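The recursive visitPage method can also be restructured as a loop over a queue of pending URLs, which avoids deep recursion on long link chains. The following is a minimal sketch of that idea, not the crawler shown above. The linksOf and containsTopic parameters are hypothetical stand-ins for the jsoup calls, a HashSet replaces the ArrayList for the visited check, and links are followed for every page rather than only for accepted ones:

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.function.Function;
import java.util.function.Predicate;

// Hypothetical sketch: an iterative variant of the crawl loop.
// Page access is abstracted behind two functions so that the control
// flow can be run without a network connection.
class QueueCrawlSketch {

    static List<String> crawl(String startingURL,
            Function<String, List<String>> linksOf,  // stand-in for link extraction
            Predicate<String> containsTopic,         // stand-in for the topic test
            int pageLimit) {
        List<String> pageList = new ArrayList<>();
        Set<String> visited = new HashSet<>();
        Deque<String> frontier = new ArrayDeque<>();
        frontier.add(startingURL);

        while (!frontier.isEmpty() && pageList.size() < pageLimit) {
            String url = frontier.poll();
            // Set.add returns false when the URL was already present
            if (!visited.add(url)) {
                continue;
            }
            if (containsTopic.test(url)) {
                pageList.add(url);
            }
            // Links are queued for every page, accepted or not
            frontier.addAll(linksOf.apply(url));
        }
        return pageList;
    }

    public static void main(String[] args) {
        // A tiny in-memory "web" used only for illustration
        Map<String, List<String>> web = Map.of(
                "A", List.of("B", "C"),
                "B", List.of("A", "D"),
                "C", List.<String>of(),
                "D", List.of("A"));
        List<String> pages = crawl("A",
                url -> web.getOrDefault(url, List.<String>of()),
                url -> !url.equals("C"),
                20);
        System.out.println(pages);
    }
}
```

Because the frontier is a first-in, first-out queue, pages are visited in breadth-first order, so pages close to the starting URL are examined before the page limit is reached.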

The output follows:

1: [https://en.wikipedia.org/wiki/Bishop_Rock,_Isles_of_Scilly]

2: [https://en.wikipedia.org/wiki/Bishop_Rock_Lighthouse]

3: [https://en.wikipedia.org/w/index.php?title=Bishop_Rock,_Isles_of_Scilly&oldid=717634231#Lighthouse]

4: [https://en.wikipedia.org/w/index.php?title=Bishop_Rock,_Isles_of_Scilly&diff=prev&oldid=717634231]

5: [https://en.wikipedia.org/w/index.php?title=Bishop_Rock,_Isles_of_Scilly&oldid=716622943]

6: [https://en.wikipedia.org/w/index.php?title=Bishop_Rock,_Isles_of_Scilly&diff=prev&oldid=716622943]

7: [https://en.wikipedia.org/w/index.php?title=Bishop_Rock,_Isles_of_Scilly&oldid=716608512]

8: [https://en.wikipedia.org/w/index.php?title=Bishop_Rock,_Isles_of_Scilly&diff=prev&oldid=716608512]

...

20: [https://en.wikipedia.org/w/index.php?title=Bishop_Rock,_Isles_of_Scilly&diff=prev&oldid=716603919]
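One issue this output highlights is that a URL ending in a fragment such as #Lighthouse points to a position inside a page rather than to a different page. A crawler may want to remove the fragment before checking the visited list. The following sketch shows one simple way to do this. The class and method names are our own and are not part of the crawler above:

```java
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;

// Hypothetical helper: treats URLs that differ only by fragment as one page.
class UrlNormalizerSketch {

    // Removes the "#fragment" part, which names a position inside a page
    static String stripFragment(String url) {
        int hash = url.indexOf('#');
        return hash < 0 ? url : url.substring(0, hash);
    }

    // Returns the distinct pages in first-seen order
    static Set<String> distinctPages(List<String> urls) {
        Set<String> pages = new LinkedHashSet<>();
        for (String url : urls) {
            pages.add(stripFragment(url));
        }
        return pages;
    }

    public static void main(String[] args) {
        List<String> urls = List.of(
                "https://en.wikipedia.org/wiki/Bishop_Rock,_Isles_of_Scilly",
                "https://en.wikipedia.org/wiki/Bishop_Rock,_Isles_of_Scilly#Lighthouse");
        // Both entries refer to the same page once the fragment is removed
        System.out.println(distinctPages(urls));
    }
}
```

This does not address the revision and diff pages, which are distinct URLs. Those need a stricter link filter, such as a more specific urlLimiter value.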

In this example, we did not save the results of the crawl to an external source. Normally this is necessary, and the results can be stored in a file or database.
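As a minimal sketch of the file option, the accepted URLs could be written one per line using the standard java.nio.file API. The class name, method names, and temporary file used here are illustrative assumptions and are not part of the crawler above:

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.List;

// Hypothetical sketch: persists the list of accepted URLs to a text file.
class CrawlResultWriterSketch {

    // Writes one URL per line, replacing any existing file content
    static void save(List<String> pageList, Path target) throws IOException {
        Files.write(target, pageList, StandardCharsets.UTF_8);
    }

    // Reads the URLs back, one list entry per line
    static List<String> load(Path source) throws IOException {
        return Files.readAllLines(source, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) throws IOException {
        Path target = Files.createTempFile("crawl-results", ".txt");
        save(List.of(
                "https://en.wikipedia.org/wiki/Bishop_Rock,_Isles_of_Scilly",
                "https://en.wikipedia.org/wiki/Bishop_Rock_Lighthouse"), target);
        System.out.println(load(target));
    }
}
```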

Here we will illustrate the use of the crawler4j (https://github.com/yasserg/crawler4j) web crawler. We will use an adapted version of the basic crawler found at https://github.com/yasserg/crawler4j/tree/master/src/test/java/edu/uci/ics/crawler4j/examples/basic. We will create two classes: CrawlerController and SampleCrawler. The former class sets up the crawler while the latter contains the logic that controls what pages will be processed.

As with our previous crawler, we will crawl the Wikipedia article dealing with Bishop Rock. This crawler will return fewer results because many extraneous pages are ignored.

Let's look at the CrawlerController class first. There are several parameters that are used with the crawler as detailed here:

- Crawl storage folder: The location where crawl data is stored

- Number of crawlers: This controls the number of threads used for the crawl

- Politeness delay: How many milliseconds to pause between requests

- Crawl depth: How deep the crawl will go

- Maximum number of pages to fetch: How many pages to fetch

- Binary data: Whether to crawl binary data such as PDF files
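To make the idea of a politeness delay concrete, the following sketch computes how long a caller should wait so that consecutive requests are separated by at least a fixed number of milliseconds. It is an illustration of the concept only and is not crawler4j's implementation. The class name, the reserve method, and the 500 ms value are assumptions made for this example:

```java
// Hypothetical sketch of a politeness delay between requests.
class PolitenessDelaySketch {

    private final long delayMillis;
    private long nextAllowedMillis = 0;

    PolitenessDelaySketch(long delayMillis) {
        this.delayMillis = delayMillis;
    }

    // Returns how long to wait before a request made at nowMillis may be
    // sent, and records the time at which the following request is allowed.
    long reserve(long nowMillis) {
        long wait = Math.max(0, nextAllowedMillis - nowMillis);
        nextAllowedMillis = nowMillis + wait + delayMillis;
        return wait;
    }

    public static void main(String[] args) throws InterruptedException {
        PolitenessDelaySketch limiter = new PolitenessDelaySketch(500);
        for (int i = 1; i <= 3; i++) {
            long wait = limiter.reserve(System.currentTimeMillis());
            Thread.sleep(wait);
            System.out.println("Request " + i + " sent after waiting " + wait + " ms");
        }
    }
}
```

Passing the current time in as a parameter keeps the calculation separate from the clock, which makes the behavior easy to check with fixed values.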

The basic class is shown here:

public class CrawlerController {

public static void main(String[] args) throws Exception {

int numberOfCrawlers = 2;

CrawlConfig config = new CrawlConfig();

String crawlStorageFolder = "data";

config.setCrawlStorageFolder(crawlStorageFolder);

config.setPolitenessDelay(500);

config.setMaxDepthOfCrawling(2);

config.setMaxPagesToFetch(20);

config.setIncludeBinaryContentInCrawling(false);

...

}

}

Next, the CrawlController class is created and configured. Notice the RobotstxtConfig and RobotstxtServer classes used to handle robots.txt files. These files contain instructions that are intended to be read by a web crawler. They help a crawler do a better job, for example by specifying which parts of a site should not be crawled. This is useful for automatically generated pages:

PageFetcher pageFetcher = new PageFetcher(config);

RobotstxtConfig robotstxtConfig = new RobotstxtConfig();

RobotstxtServer robotstxtServer =

new RobotstxtServer(robotstxtConfig, pageFetcher);

CrawlController controller =

new CrawlController(config, pageFetcher, robotstxtServer);
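To give a sense of what a robots.txt file contains, the following deliberately simplified reader collects the Disallow prefixes that apply to all crawlers and tests a path against them. It is a sketch for illustration, not what RobotstxtServer does internally: Allow rules, wildcards, and agent-specific groups are ignored, and the sample file content is made up:

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;

// Hypothetical, simplified robots.txt reader. Only "User-agent: *" groups
// and "Disallow" path prefixes are handled.
class RobotsTxtSketch {

    static List<String> disallowedForAll(String robotsTxt) {
        List<String> disallowed = new ArrayList<>();
        boolean groupApplies = false;
        boolean previousWasAgent = false;
        for (String rawLine : robotsTxt.split("\\R")) {
            // Drop comments, which start with '#'
            int comment = rawLine.indexOf('#');
            String line = (comment < 0 ? rawLine : rawLine.substring(0, comment)).trim();
            int colon = line.indexOf(':');
            if (colon < 0) {
                continue; // blank or malformed line
            }
            String field = line.substring(0, colon).trim().toLowerCase(Locale.ROOT);
            String value = line.substring(colon + 1).trim();
            if (field.equals("user-agent")) {
                // Consecutive User-agent lines share one group of rules
                if (!previousWasAgent) {
                    groupApplies = false;
                }
                groupApplies |= value.equals("*");
                previousWasAgent = true;
            } else {
                previousWasAgent = false;
                if (field.equals("disallow") && groupApplies && !value.isEmpty()) {
                    disallowed.add(value);
                }
            }
        }
        return disallowed;
    }

    // A path is allowed when it starts with none of the disallowed prefixes
    static boolean isAllowed(String path, List<String> disallowed) {
        for (String prefix : disallowed) {
            if (path.startsWith(prefix)) {
                return false;
            }
        }
        return true;
    }

    public static void main(String[] args) {
        // Made-up file content, used only for illustration
        String robotsTxt = "User-agent: *\nDisallow: /w/\nDisallow: /api/\n";
        List<String> rules = disallowedForAll(robotsTxt);
        System.out.println(rules);
        System.out.println(isAllowed("/wiki/Bishop_Rock", rules));
        System.out.println(isAllowed("/w/index.php", rules));
    }
}
```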

The crawler needs to start at one or more pages. The addSeed method adds the starting pages. While we used the method only once here, it can be used as many times as needed:

controller.addSeed(

"https://en.wikipedia.org/wiki/Bishop_Rock,_Isles_of_Scilly");

The start method will begin the crawling process:

controller.start(SampleCrawler.class, numberOfCrawlers);

The SampleCrawler class contains two methods of interest. The first is the shouldVisit method, which determines whether a page will be visited. The second is the visit method, which actually handles the page. We start with the class declaration and the declaration of a java.util.regex.Pattern object, which provides one way of determining whether a page will be visited. In this declaration, standard image file extensions are specified so that they can be ignored:

public class SampleCrawler extends WebCrawler {

private static final Pattern IMAGE_EXTENSIONS =

Pattern.compile(".*\\.(bmp|gif|jpg|png)$");

...

}

The shouldVisit method is passed a reference to the page where this URL was found along with the URL. If the URL ends with one of the image extensions, the method returns false and the page is ignored. In addition, the URL must start with https://en.wikipedia.org/wiki/. We added this to restrict our searches to the Wikipedia website:

public boolean shouldVisit(Page referringPage, WebURL url) {

String href = url.getURL().toLowerCase();

if (IMAGE_EXTENSIONS.matcher(href).matches()) {

return false;

}

return href.startsWith("https://en.wikipedia.org/wiki/");

}

The visit method is passed a Page object representing the page being visited. In this implementation, only those pages containing the string shipping route will be processed. This further restricts the pages visited. When we find such a page, its URL, text, and text length are displayed:

public void visit(Page page) {

String url = page.getWebURL().getURL();

if (page.getParseData() instanceof HtmlParseData) {

HtmlParseData htmlParseData =

(HtmlParseData) page.getParseData();

String text = htmlParseData.getText();

if (text.contains("shipping route")) {

out.println("\nURL: " + url);

out.println("Text: " + text);

out.println("Text length: " + text.length());

}

}

}

The following is the truncated output of the program when executed:

URL: https://en.wikipedia.org/wiki/Bishop_Rock,_Isles_of_Scilly

Text: Bishop Rock, Isles of Scilly...From Wikipedia, the free encyclopedia ... Jump to: ... navigation, search For the Bishop Rock in the Pacific Ocean, see Cortes Bank. Bishop Rock Bishop Rock Lighthouse (2005) ...

Text length: 14677

Notice that only one page was returned. This web crawler was able to identify and ignore previous versions of the main web page.
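One plausible contributor to this result is the prefix test in shouldVisit: the revision pages found by the first crawler use URLs under /w/index.php rather than /wiki/. The following sketch applies the same filter logic to plain strings so that it can be tried without crawler4j. It does not model the crawl depth, the page limit, or any other crawler4j behavior, and the file URL in main is made up for illustration:

```java
import java.util.regex.Pattern;

// Hypothetical sketch: the shouldVisit filter applied to plain strings.
class VisitFilterSketch {

    private static final Pattern IMAGE_EXTENSIONS =
            Pattern.compile(".*\\.(bmp|gif|jpg|png)$");

    static boolean shouldVisit(String url) {
        String href = url.toLowerCase();
        // Reject URLs that end with a known image extension
        if (IMAGE_EXTENSIONS.matcher(href).matches()) {
            return false;
        }
        // Accept only article URLs on the English Wikipedia
        return href.startsWith("https://en.wikipedia.org/wiki/");
    }

    public static void main(String[] args) {
        String[] candidates = {
            "https://en.wikipedia.org/wiki/Bishop_Rock_Lighthouse",
            "https://en.wikipedia.org/w/index.php?title=Bishop_Rock,_Isles_of_Scilly&oldid=717634231",
            "https://en.wikipedia.org/wiki/File:Example.png"
        };
        for (String url : candidates) {
            System.out.println(shouldVisit(url) + " " + url);
        }
    }
}
```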

We could perform further processing, but this example provides some insight into how the API works. Significant amounts of information can be obtained when visiting a page. In the example, we only used the URL, the text, and the length of the text. The following is a sample of other data that you may be interested in obtaining:

- URL path

- Parent URL

- Anchor

- HTML text

- Outgoing links

- Document ID

Web scraping is the process of extracting information from a web page. The page is typically formatted using a series of HTML tags. An HTML parser is used to navigate through a page or series of pages and to access the page's data or metadata.

Jsoup (https://jsoup.org/) is an open source Java library that facilitates extracting and manipulating HTML documents using an HTML parser. It is used for a number of purposes, including web scraping, extracting specific elements from an HTML page, and cleaning up HTML documents.

There are several ways of obtaining an HTML document that may be useful. The HTML document can be extracted from a:

- URL

- String

- File

The first approach is illustrated next, where the Wikipedia page for data science is loaded into a Document object. This jsoup class represents the HTML document. The connect method connects to the site and the get method retrieves the document:

try {

Document document = Jsoup.connect(

"https://en.wikipedia.org/wiki/Data_science").get();

...

} catch (IOException ex) {

// Handle exception

}

Loading from a file uses the File class as shown next. The overloaded parse method uses the file to create the document object:

try {

File file = new File("Example.html");

Document document = Jsoup.parse(file, "UTF-8", "");

...

} catch (IOException ex) {

// Handle exception

}

The Example.html file follows:

<html>

<head><title>Example Document</title></head>

<body>

<p>The body of the document</p>

Interesting Links:

<br>

<a href="https://en.wikipedia.org/wiki/Data_science">Data Science</a>

<br>

<a href="https://en.wikipedia.org/wiki/Jsoup">Jsoup</a>

<br>

Images:

<br>

<img src="eyechart.jpg" alt="Eye Chart">

</body>

</html>

To create a Document object from a string, we will use the following sequence where the parse method processes the string that duplicates the previous HTML file:

String html = "<html>\n"

+ "<head><title>Example Document</title></head>\n"

+ "<body>\n"

+ "<p>The body of the document</p>\n"

+ "Interesting Links:\n"

+ "<br>\n"

+ "<a href=\"https://en.wikipedia.org/wiki/Data_science\">"

+ "Data Science</a>\n"

+ "<br>\n"

+ "<a href=\"https://en.wikipedia.org/wiki/Jsoup\">"

+ "Jsoup</a>\n"

+ "<br>\n"

+ "Images:\n"

+ "<br>\n"

+ " <img src=\"eyechart.jpg\" alt=\"Eye Chart\"> \n"

+ "</body>\n"

+ "</html>";

Document document = Jsoup.parse(html);

The Document class possesses a number of useful methods. The title method returns the title. To get the text contents of the document, the select method is used. This method uses a string specifying the element of a document to retrieve:

String title = document.title();

out.println("Title: " + title);

Elements element = document.select("body");

out.println(" Text: " + element.text());

The output for the Wikipedia data science page is shown here. It has been shortened to conserve space:

Title: Data science - Wikipedia, the free encyclopedia

Text: Data science From Wikipedia, the free encyclopedia Jump to: navigation, search Not to be confused with information science. Part of a ... policy About Wikipedia Disclaimers Contact Wikipedia Developers Cookie statement Mobile view

The parameter type of the select method is a string. By using a string, the type of information selected is easily changed. Details on how to formulate this string are found in the jsoup Javadocs for the Selector class at https://jsoup.org/apidocs/.

We can use the select method to retrieve the images in a document, as shown here:

Elements images = document.select("img[src$=.png]");

for (Element image : images) {

out.println("\nImage: " + image);

}

The output for the Wikipedia data science page is shown here. It has been shortened to conserve space:

Image: <img alt="Data Visualization" src="//upload.wikimedia.org/...>

Image: <img alt="" src="//upload.wikimedia.org/wikipedia/commons/thumb/b/ba/...>
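Notice that the src values in this output start with // and so omit the scheme. Such references, like relative links, need to be resolved against the URL of the page before they can be fetched. Jsoup can do this through the abs: attribute prefix used earlier with abs:href. The following sketch shows the underlying idea using only the standard java.net.URI class. The class name and the example URLs are our own assumptions:

```java
import java.net.URI;

// Hypothetical sketch: resolves a link found in a page against the page's URL.
class LinkResolverSketch {

    static String resolve(String baseUrl, String reference) {
        return URI.create(baseUrl).resolve(reference).toString();
    }

    public static void main(String[] args) {
        String page = "https://en.wikipedia.org/wiki/Data_science";
        // A reference without a scheme inherits the scheme of the page
        System.out.println(resolve(page, "//upload.wikimedia.org/logo.png"));
        // A reference starting with / replaces the path of the page URL
        System.out.println(resolve(page, "/wiki/Jsoup"));
        // A bare file name is resolved against the directory of the page
        System.out.println(resolve("https://example.com/docs/Example.html", "eyechart.jpg"));
    }
}
```

This is also why the third argument of Jsoup.parse(file, "UTF-8", "") matters: with an empty base URI, a relative reference such as eyechart.jpg has nothing to be resolved against.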

Links can be easily retrieved as shown next:

Elements links = document.select("a[href]");

for (Element link : links) {

out.println("Link: " + link.attr("href")

+ " Text: " + link.text());

}

The output for the Example.html page is shown here:

Link: https://en.wikipedia.org/wiki/Data_science Text: Data Science

Link: https://en.wikipedia.org/wiki/Jsoup Text: Jsoup

Jsoup possesses many additional capabilities, but this example demonstrates the basic web scraping process. There are also other Java HTML parsers available. A comparison of HTML parsers, including those for Java, can be found at https://en.wikipedia.org/wiki/Comparison_of_HTML_parsers.

Social media sites contain a wealth of information that can be processed and used by many data analysis applications. In this section, we will illustrate how to access a few of these sources using their Java APIs. Most of them require some sort of access key, which is normally easy to obtain. We start with a discussion of OAuth, which provides one approach to authenticating access to a data source.

When working with this type of data source, it is important to keep in mind that the data is not always public. While it may be accessible, the owner of the data may be an individual who does not necessarily want the information shared. Most APIs provide a means to determine how the data can be distributed, and these requests should be honored. When private information is used, permission from the author must be obtained.

In addition, these sites have limits on the number of requests that can be made. Keep this in mind when pulling data from a site. If these limits need to be exceeded, then most sites provide a way of doing this.

OAuth is an open standard used to authenticate users to many different websites. A resource owner effectively delegates access to a server resource without having to share their credentials. It works over HTTPS. OAuth 2.0 succeeded OAuth 1.0 and is not backward compatible with it. It gives client developers a simple way of providing authentication. Several companies use OAuth 2.0, including PayPal, Comcast, and Blizzard Entertainment.

A list of OAuth 2.0 providers is found at https://en.wikipedia.org/wiki/List_of_OAuth_providers. We will use several of these in our discussions.
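As an illustration of how delegation starts in the OAuth 2.0 authorization code flow, the client sends the user to the provider's authorization endpoint with a small set of query parameters. The following sketch builds such a URL. The parameter names come from the OAuth 2.0 specification (RFC 6749), while the endpoint, client ID, redirect URI, scope, and state values are made-up placeholders. Each provider documents its own values, and libraries normally handle this step:

```java
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.StringJoiner;

// Hypothetical sketch: builds the URL for an OAuth 2.0 authorization request.
class OAuthAuthorizationUrlSketch {

    static String authorizationUrl(String endpoint, String clientId,
            String redirectUri, String scope, String state) {
        // LinkedHashMap keeps the parameters in insertion order
        Map<String, String> params = new LinkedHashMap<>();
        params.put("response_type", "code"); // requests an authorization code
        params.put("client_id", clientId);
        params.put("redirect_uri", redirectUri);
        params.put("scope", scope);
        params.put("state", state);          // echoed back to the client by the provider

        StringJoiner query = new StringJoiner("&");
        for (Map.Entry<String, String> entry : params.entrySet()) {
            query.add(entry.getKey() + "="
                    + URLEncoder.encode(entry.getValue(), StandardCharsets.UTF_8));
        }
        return endpoint + "?" + query;
    }

    public static void main(String[] args) {
        // All values below are placeholders, not a real provider or client
        System.out.println(authorizationUrl(
                "https://provider.example/oauth2/authorize",
                "my-client-id",
                "https://client.example/callback",
                "read",
                "xyz123"));
    }
}
```

The user's credentials never appear in this request. The user signs in with the provider, which then redirects back to the redirect URI with a code that the client exchanges for an access token.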

Twitter is a popular social media platform that allows users to read and post short messages called tweets. The sheer volume of data and the popularity of the site, among celebrities and the general public alike, make Twitter a valuable resource for mining social media data. Twitter provides API support for posting and pulling tweets, including streaming data from all public users. While there are services available for pulling the entire set of public tweet data, we are going to examine other options that, while limited in the amount of data retrieved at one time, are available at no cost.