Before we get started with Big Data technologies, we will first look at the infrastructure we will be using. This infrastructure lets us focus on implementing solutions to Big Data problems rather than spending time and resources managing the infrastructure needed to run those solutions. Cloud technologies have democratized access to high-scale utility computing, which was earlier available only to large companies. This is where Amazon Web Services comes to our rescue as one of the leading players in the public cloud computing landscape.

As the name suggests, Amazon Web Services (AWS) is a set of cloud computing services provided by Amazon that are accessible over the Internet. Since anybody can sign up and use it, AWS is classified as a public cloud computing provider.

Most businesses depend on applications running on compute and storage resources that need to be reliable and secure and to scale as and when required. The last of these attributes, scaling, is one of the major problems with the traditional data center approach. If a business provisions too many resources in anticipation of heavy usage, it must invest a lot of upfront capital (CAPEX) in IT, and that investment is wasted if the expected traffic never arrives. Conversely, if it provisions fewer resources expecting less traffic and then receives more than expected, it will surely end up with disgruntled customers and a bad user experience.

AWS provides scalable compute services, highly durable storage services, and low-latency database services, among others, so that businesses can quickly provision the infrastructure they need to launch and run applications. Almost everything you can do in a traditional data center can be achieved with AWS. On top of that, AWS lets you add and remove compute resources elastically. You can start with the resources you expect to need and scale up as you go to meet increasing traffic or specific customer requirements. You can also scale down at any time, saving money and keeping the flexibility to make changes quickly. Hence, you need not invest huge capital upfront or worry about capacity planning. With AWS, you also pay only for what you use. For example, if your business needs more resources during a specific time of day, say for a couple of hours, you can configure AWS to add resources for you and then scale down automatically as specified. In this case, you pay for the extra resources only for those couple of hours of usage. Many businesses have leveraged AWS in this fashion to support their requirements and reduce costs.
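To make the pay-per-use example concrete, the following back-of-the-envelope calculation compares sizing capacity for the peak around the clock with adding extra instances only during a two-hour busy period. The hourly rate and instance counts are hypothetical placeholders, not AWS prices.

```python
# Hypothetical figures for illustration only; these are not real AWS prices.
HOURLY_RATE = 0.10     # assumed cost of one instance-hour, in USD
BASELINE = 2           # instances needed for normal traffic
PEAK = 6               # instances needed during the busy period
PEAK_HOURS = 2         # length of the daily busy period, in hours
HOURS_PER_DAY = 24


def cost_fixed_for_peak() -> float:
    """Daily cost when capacity is sized for the peak around the clock."""
    return PEAK * HOURS_PER_DAY * HOURLY_RATE


def cost_elastic() -> float:
    """Daily cost when the extra instances run only during the busy period."""
    baseline_cost = BASELINE * HOURS_PER_DAY * HOURLY_RATE
    extra_cost = (PEAK - BASELINE) * PEAK_HOURS * HOURLY_RATE
    return baseline_cost + extra_cost


if __name__ == "__main__":
    print(f"Sized for peak all day: ${cost_fixed_for_peak():.2f}")
    print(f"Elastic scaling:        ${cost_elastic():.2f}")
```

With these assumed numbers the elastic setup costs well under half as much per day; the ratio obviously depends on how spiky the real traffic is.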

How does AWS provide infrastructure at such low cost and on a pay-per-use basis? The answer lies in AWS having a huge number of customers spread across almost the entire world. This gives AWS economies of scale, which let it offer quality resources to us at a low operational cost.

Experiments and ideas that were once constrained by cost or resources are now very much feasible with AWS, increasing businesses' capacity to innovate and deliver higher quality products to their customers.

Hence, AWS enables businesses around the world to focus on delivering quality experience to their customers, while AWS takes care of the heavy lifting required to launch and keep running those applications at an expected scale, securely and reliably.

In this age of the Internet, businesses cater to customers worldwide. Keeping that in mind, AWS has its resources physically available at multiple geographical locations across the world. Spreading resources across multiple geographical locations is also prudent for recovering data and applications from disasters and natural calamities.

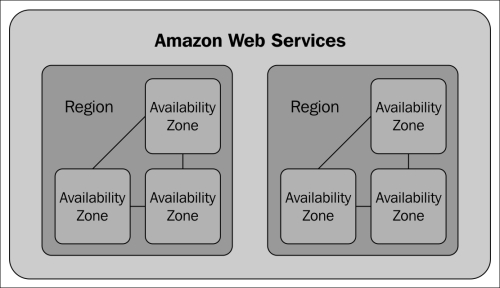

We have two different levels of geographical separation in AWS:

Regions

Availability zones

On AWS, the top-level geographical separation is termed a region. Each region is completely enclosed in a single country. The data generated and uploaded to an AWS resource resides in the region where the resource has been created.

Each region is completely independent of the others. No data or resources are replicated across regions unless the replication is explicitly performed. Any communication between resources in two different regions happens via the public Internet (unless a private network is established by the end user); hence, it's your responsibility to use proper encryption methods to secure your data.

At the time of writing, AWS has nine operational regions across the world, with a tenth one starting soon in Beijing. The following are the available regions of AWS:

| Region code | Region name |
|---|---|
| ap-northeast-1 | Asia Pacific (Tokyo) |
| ap-southeast-1 | Asia Pacific (Singapore) |
| ap-southeast-2 | Asia Pacific (Sydney) |
| eu-west-1 | EU (Ireland) |
| sa-east-1 | South America (Sao Paulo) |
| us-east-1 | US East (Northern Virginia) |
| us-west-1 | US West (Northern California) |
| us-west-2 | US West (Oregon) |

In addition to the aforementioned regions, there are the following two regions:

AWS GovCloud (US): This is available only for the use of the US Government.

China (Beijing): At the time of this writing, this region did not have public access, and you needed to request an account to create infrastructure there. It is officially available at https://www.amazonaws.cn/.

The following world map shows how AWS has its regions spread across the world:

This image has been taken from the AWS website

Each region is composed of one or more availability zones. Availability zones are distinct, isolated locations within a region that are connected to one another via a low-latency network, providing high availability and fault tolerance within the region for AWS services. The core computing resources, such as machines and storage devices, are physically present in one of these availability zones. Availability zones are physically separated so that they can cope with situations where, for example, one physical data center has a power outage, a network issue, or any other location-dependent problem.

Availability zones are designed to be isolated from the failures of other availability zones in the same region. Each availability zone has its own independent infrastructure, including its own independent power supply. Its network and security setups are also separate from those of other availability zones, though there is low-latency, inexpensive connectivity between them.

Basically, you can think of each availability zone as a distinct physical data center (in practice, an availability zone may consist of one or more data centers). So, if there is an overheating problem in one availability zone, the other availability zones in the same region will not be affected.

The following diagram shows the relationship between regions and availability zones:

Customers can benefit from this global infrastructure of AWS in the following ways:

Achieve low latency for application requests by serving them from locations nearer to where the requests originate. For example, if your customers are in Australia, you would want to serve requests from the Sydney region.

Comply with legal requirements. Keeping data within a region helps some customers comply with the requirements of countries where sending users' data out of the country isn't allowed.

Build fault-tolerant, highly available applications that can survive the failure of a data center.

When you launch a machine on AWS, you do so in a selected region; further, you can select the availability zone in which you want the machine to be launched. You can distribute your instances (or machines) across multiple availability zones so that, when a machine in one availability zone fails, your application can serve requests from a machine in another.

You can also use another AWS feature, Elastic IP addresses, to mask the failure of a machine in one availability zone by rapidly remapping the address to a working machine in another availability zone.
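The two ideas above can be sketched together: spread instances across the zones of a region, then, when a zone fails, point the Elastic IP at an instance in a surviving zone. This is a minimal model of the bookkeeping only, assuming hypothetical instance IDs and zone names; in practice the remapping is a request you make to EC2.

```python
from itertools import cycle

# Hypothetical availability zone names in one region, for illustration.
ZONES = ["us-east-1a", "us-east-1b", "us-east-1c"]


def place_instances(count: int, zones: list[str]) -> dict[str, str]:
    """Spread instances round-robin across zones; returns instance -> zone."""
    zone_iter = cycle(zones)
    return {f"i-{n:04d}": next(zone_iter) for n in range(count)}


def remap_elastic_ip(placement: dict[str, str], current: str, failed_zone: str) -> str:
    """Return the instance the Elastic IP should point to after a zone failure.

    Keeps the current target if its zone is healthy; otherwise picks the first
    instance in a different zone (a simplification of a real failover policy).
    """
    if placement[current] != failed_zone:
        return current
    for instance, zone in placement.items():
        if zone != failed_zone:
            return instance
    raise RuntimeError("no healthy instance left in any zone")
```

For example, with four instances the first and fourth land in `us-east-1a`, and losing that zone moves the address to the instance in `us-east-1b`.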

This architecture enables AWS to have a very high level of fault tolerance and, hence, provides a highly available infrastructure for businesses to run their applications on.

AWS provides a wide variety of global services catering to large enterprises as well as smart start-ups. At the time of writing, AWS provides a growing set of over 60 services across various sectors of a cloud infrastructure. All of these services can be accessed via the AWS management console (a web portal) or programmatically via APIs (web services). We will learn about the most popular ones, which are the most widely used across industries.

AWS categorizes its services into the following major groups:

Compute

Storage

Database

Network and CDN

Analytics

Application services

Deployment and management

Let's now discuss each group and list the services available in it.

The compute group of services includes the most basic service provided by AWS: Amazon EC2, which is like a virtual compute machine. AWS provides a wide range of virtual machine types; in AWS lingo, they are called instances.

EC2 stands for Elastic Compute Cloud; the key word is elastic. EC2 is a web service that provides resizable compute capacity in the AWS Cloud. Using this service, you can provision instances of varied capacity in the cloud. You can launch instances within minutes and terminate them when the work is done. You decide on the computing capacity of your instance, that is, the number of CPU cores, the amount of memory, and so on, by choosing from a pool of machine types offered by AWS.

You pay for instances by the number of hours they run. At the time of writing, each partial instance hour consumed is billed as a full hour, so an instance that runs for one hour and a few minutes is billed as 2 hours. We will learn about EC2 in more detail in the next section.
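The rounding rule described above amounts to a ceiling on runtime in hours. The sketch below models only that hourly rule as stated here; AWS has since changed its billing granularity for some instance types, so check the current EC2 pricing page before relying on it.

```python
import math


def billed_hours(runtime_minutes: int) -> int:
    """Instance hours billed under the per-hour model described above,
    where every partial hour is rounded up to a full hour."""
    if runtime_minutes <= 0:
        return 0
    return math.ceil(runtime_minutes / 60)
```

So `billed_hours(65)` is 2, while exactly 60 minutes is billed as a single hour.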

Auto scaling is one of the popular services AWS offers to handle spikes in application load by adding or removing infrastructure capacity. Auto scaling lets you define conditions; when these conditions are met, AWS automatically scales your compute capacity up or down. This service is well suited for applications whose usage depends on the time of day or that have predictable spikes in usage.

Auto scaling also helps in the scenario where you want your application infrastructure to have a fixed number of machines always available to it. You can configure this service to automatically check the health of each of the machines and add capacity as and when required if there are any issues with existing machines. This helps you to ensure that your application receives the compute capacity it requires.

Moreover, this service carries no additional charge; only the EC2 capacity being used is billed.
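The two auto scaling behaviors described above, scaling on a condition and keeping a fixed fleet healthy, can be sketched as plain functions. This is a simplified model, not the Auto Scaling API: the CPU thresholds, the one-instance step, and the health map are hypothetical choices made for illustration.

```python
def desired_capacity(current: int, avg_cpu: float, min_size: int, max_size: int,
                     scale_out_at: float = 70.0, scale_in_at: float = 30.0) -> int:
    """Threshold-based scaling decision (hypothetical thresholds).

    Adds one instance when average CPU is above scale_out_at, removes one
    when it is below scale_in_at, and always stays within [min_size, max_size].
    """
    if avg_cpu > scale_out_at:
        target = current + 1
    elif avg_cpu < scale_in_at:
        target = current - 1
    else:
        target = current
    return max(min_size, min(max_size, target))


def replacements_needed(instances: dict[str, bool]) -> int:
    """Number of replacements needed to keep a fixed-size group healthy;
    `instances` maps an instance name to whether it passed its health check."""
    return sum(1 for healthy in instances.values() if not healthy)
```

Clamping to the minimum and maximum sizes is what keeps a burst of high readings from launching an unbounded number of instances.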

Elastic Load Balancing (ELB) is the load balancing service provided by AWS. ELB automatically distributes incoming application traffic among multiple EC2 instances. By balancing traffic across instances in different availability zones, it helps applications achieve high availability and fault tolerance.

ELB has the capability to automatically scale its capacity to handle requests to match the demands of the application's traffic. It also offers integration with auto scaling, wherein you may configure it to also scale the backend capacity to cater to the varying traffic levels without manual intervention.
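A load balancer's core job, sending each request to the next healthy backend, can be sketched with a simple round-robin over instances in different zones. Treat this as a simplified illustration: the backend names and health flags are hypothetical, and ELB's actual routing algorithm may differ from plain round-robin.

```python
from itertools import cycle

# Hypothetical backend instances, keyed by name, with their zone and health.
BACKENDS = {
    "web-1": {"zone": "us-east-1a", "healthy": True},
    "web-2": {"zone": "us-east-1b", "healthy": True},
    "web-3": {"zone": "us-east-1b", "healthy": False},
}


def round_robin(backends: dict):
    """Return an iterator that hands out healthy backend names in turn.

    Health is read once, when the iterator is created; a real load balancer
    re-checks health continuously.
    """
    healthy = [name for name, info in backends.items() if info["healthy"]]
    if not healthy:
        raise RuntimeError("no healthy backends")
    return cycle(healthy)
```

Because `web-3` fails its health check, requests alternate between `web-1` and `web-2`, which sit in different zones.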

The Amazon WorkSpaces service provides cloud-based desktops for on-demand use by businesses. It is a fully managed desktop computing service in the cloud that lets you access your documents and applications from anywhere, on devices of your choice. You can choose the hardware and software as per your requirements, selecting from bundles that provide different amounts of CPU, memory, and storage.

Amazon WorkSpaces can also integrate securely with your corporate Active Directory.

Storage is another group of essential services. AWS provides low-cost data storage services having high durability and availability. AWS offers storage choices for backup, archiving, and disaster recovery, as well as block, file, and object storage. As is the nature of most of the services on AWS, for storage too, you pay as you go.

S3 stands for Simple Storage Service. S3 provides a simple web service interface with fully redundant data storage infrastructure to store and retrieve any amount of data at any time and from anywhere on the Web. Amazon uses S3 to run its own global network of websites.

As AWS states:

Amazon S3 is cloud storage for the Internet.

Amazon S3 can be used as a storage medium for various purposes. We will learn about it in more detail in the next section.

EBS stands for Elastic Block Store. It is one of the most used services of AWS. It provides block-level storage volumes to be used with EC2 instances. While instance storage data does not persist after the instance has been terminated, EBS volumes let you persist your data independently of the life cycle of the instance they are attached to. EBS is sometimes also termed off-instance storage.

EBS provides consistent and low-latency performance. Its reliability comes from the fact that each EBS volume is automatically replicated within its availability zone to protect you from hardware failures. It also provides the ability to copy snapshots of volumes across AWS regions, which enables you to migrate data and plan for disaster recovery.
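The persistence property described above can be illustrated with two toy classes: instance storage is cleared when the instance is terminated, while a volume is merely detached with its data intact and can be attached to a new instance. These classes are hypothetical stand-ins, not an AWS API; the `delete_on_termination` flag reflects that a volume can be configured to be deleted with the instance (root volumes are typically set up that way by default).

```python
class Volume:
    """Hypothetical stand-in for an EBS volume."""

    def __init__(self, delete_on_termination: bool = False):
        self.blocks = {}                      # block number -> bytes
        self.attached_to = None               # instance ID, if attached
        self.delete_on_termination = delete_on_termination


class Instance:
    """Hypothetical stand-in for an EC2 instance with local instance storage."""

    def __init__(self, instance_id: str):
        self.instance_id = instance_id
        self.instance_store = {}              # lost when the instance goes away
        self.volumes = []

    def attach(self, volume: Volume) -> None:
        if volume.attached_to is not None:
            raise RuntimeError("volume is already attached to another instance")
        volume.attached_to = self.instance_id
        self.volumes.append(volume)

    def terminate(self) -> None:
        self.instance_store.clear()           # instance storage does not survive
        for volume in self.volumes:
            volume.attached_to = None         # detached; its data is untouched
            if volume.delete_on_termination:
                volume.blocks.clear()         # deleted along with the instance
        self.volumes.clear()
```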

Amazon Glacier is an extremely low-cost storage service for data archiving and backup, optimized for infrequently accessed data. You can reliably store data that you do not need to read frequently for as little as $0.01 per GB per month (at the time of writing).

AWS commits to provide average annual durability of 99.999999999 percent for an archive. This is achieved by redundantly storing data in multiple locations and on multiple devices within one location. Glacier automatically performs regular data integrity checks and has automatic self-healing capability.
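To get a feel for what eleven nines of durability means, here is a worked estimate. It assumes a hypothetical collection of N = 10 million archives and treats each archive's loss in a given year as an independent event with the stated probability, which is a simplifying assumption rather than AWS's own model.

```latex
\begin{aligned}
p_{\text{loss}} &= 1 - 0.99999999999 = 10^{-11} \quad \text{(per archive, per year)} \\
\mathbb{E}[\text{archives lost per year}] &= N \, p_{\text{loss}} = 10^{7} \times 10^{-11} = 10^{-4} \\
\text{mean time to lose one archive} &= \frac{1}{10^{-4}} = 10^{4} \text{ years}
\end{aligned}
```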

AWS Storage Gateway is a service that securely and seamlessly connects an on-premise software appliance with AWS's storage infrastructure. It provides low-latency reads by maintaining an on-premise cache of frequently accessed data, while all the data is stored securely on Amazon S3 or Glacier.

In case you need low-latency access to your entire dataset, you can configure this service to store data locally and asynchronously back up point-in-time snapshots of this data to S3.
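The cached arrangement described first, a bounded local cache in front of a remote store that holds everything, can be sketched as a read-through cache. The dictionary standing in for S3 and the least-recently-used eviction policy are assumptions made for this sketch; the source does not say how the gateway chooses what to keep locally.

```python
from collections import OrderedDict


class CachedVolume:
    """Toy read-through cache: a bounded local cache in front of a remote
    store (standing in for S3) that holds every block. Eviction is
    least-recently-used, which is an assumption for this sketch."""

    def __init__(self, remote: dict, capacity: int):
        self.remote = remote              # authoritative copy of every block
        self.capacity = capacity          # how many blocks fit locally
        self.cache = OrderedDict()        # block ID -> data, oldest first
        self.remote_reads = 0

    def read(self, block_id: str) -> bytes:
        if block_id in self.cache:
            self.cache.move_to_end(block_id)   # mark as recently used
            return self.cache[block_id]
        self.remote_reads += 1                 # cache miss: fetch remotely
        data = self.remote[block_id]
        self.cache[block_id] = data
        if len(self.cache) > self.capacity:
            self.cache.popitem(last=False)     # evict least recently used
        return data
```

Repeated reads of hot blocks are served locally, and only misses pay the round trip to the remote store.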

The AWS Import/Export service accelerates moving large amounts of data into and out of AWS by using portable storage devices for transport, since transferring data over the Internet is not always a feasible way to move data to and from AWS's storage services.

Using this service, you can import data into Amazon S3, Glacier, or EBS. It is also helpful in disaster recovery scenarios wherein you need to quickly retrieve a large data backup stored in S3 or Glacier: your data can be transferred to a portable storage device and delivered to your site.
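A quick calculation shows why shipping disks can beat the network. The helper below estimates how long a transfer takes over a given link; the 10 TB dataset and 100 Mbps link are hypothetical example figures, and `efficiency` is an assumed fraction of the link you can actually use.

```python
def transfer_days(size_bytes: float, bandwidth_bps: float, efficiency: float = 1.0) -> float:
    """Days needed to move `size_bytes` over a link of `bandwidth_bps` bits per
    second; `efficiency` (0-1] is an assumed fraction of the link actually usable."""
    seconds = (size_bytes * 8) / (bandwidth_bps * efficiency)
    return seconds / 86_400


# Example: 10 TB over a fully utilized 100 Mbps link.
print(round(transfer_days(10e12, 100e6), 2))  # 9.26
```

That is more than nine days of saturating the link, before accounting for protocol overhead or sharing the connection with other traffic.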

AWS provides fully managed relational and NoSQL database services. It also offers a fully managed in-memory caching service and a fully managed data warehouse service. You can also use Amazon EC2 and EBS to host any database of your choice.

RDS stands for Relational Database Service. With database systems, setup, backup, and upgrades are tedious yet critical tasks. RDS aims to free you of these responsibilities so that you can focus on your application. RDS supports the major databases, namely MySQL, Oracle, SQL Server, and PostgreSQL. It also lets you resize the instances hosting these databases as per the load and add more storage as and when required.

Amazon RDS makes it a matter of a few clicks to use replication to enhance availability and reliability for production workloads. Using its Multi-AZ deployment option, you can run critical applications with high availability and built-in automated failover. RDS synchronously replicates data to a secondary (standby) database in another availability zone; on failure of the primary database, Amazon RDS automatically fails over to the secondary database, which then serves further requests.
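The Multi-AZ idea, writes acknowledged only after both copies have them and automatic promotion of the standby on failure, can be modeled in a few lines. This toy class is hypothetical and models only the concept; RDS performs the replication and failover itself.

```python
class MultiAZDatabase:
    """Toy model of synchronous primary/standby replication with failover."""

    def __init__(self):
        self.nodes = {"primary": {}, "standby": {}}
        self.active = "primary"
        self.failed = set()

    def _replica(self) -> str:
        return "standby" if self.active == "primary" else "primary"

    def write(self, key, value) -> None:
        # Synchronous replication: the write is applied to both copies
        # before the call returns (i.e., before it is acknowledged).
        self.nodes[self.active][key] = value
        replica = self._replica()
        if replica not in self.failed:
            self.nodes[replica][key] = value

    def read(self, key):
        return self.nodes[self.active][key]

    def fail(self, node: str) -> None:
        self.failed.add(node)
        if node == self.active:
            self.active = self._replica()   # automatic failover
```

Because replication is synchronous, a write that was acknowledged before the failure is still readable after the standby takes over.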

Amazon DynamoDB is a fully managed NoSQL database service aimed mainly at applications requiring single-digit millisecond latency. There is no limit to the amount of data you can store in DynamoDB. It uses SSD storage, which helps it deliver very high performance.

DynamoDB is a schemaless database: tables do not need fixed schemas, and each item may have a different number of attributes. Unlike many other nonrelational databases, DynamoDB offers strongly consistent reads, which always return the latest written value. Reads are eventually consistent by default, so you request strong consistency for the reads that need it.

DynamoDB also integrates with Amazon Elastic MapReduce (Amazon EMR). With DynamoDB, it is easy for customers to use Amazon EMR to analyze datasets stored in DynamoDB and archive the results in Amazon S3.

Amazon Redshift is a modern data warehouse system: an enterprise-class relational query and management system. It is based on PostgreSQL, which means you can use most standard SQL commands to query tables in Redshift.

Amazon Redshift achieves efficient storage and great query performance through a combination of techniques: a massively parallel processing infrastructure, columnar data storage, and efficient compression encoding schemes chosen according to each column's data type. It supports automated backups and fast restores, and it has built-in commands to import data directly from S3, DynamoDB, or your on-premise servers into Redshift.
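Why do columnar storage and per-column encodings work well together? Storing each column's values contiguously puts similar values next to each other, so a simple scheme such as run-length encoding (Redshift offers a run-length encoding among its column encodings) collapses them. The sketch below uses hypothetical sales rows and is far simpler than Redshift's actual storage format.

```python
from itertools import groupby

# Hypothetical rows of a sales table: (sale date, country, amount).
rows = [
    ("2014-06-01", "US", 120),
    ("2014-06-01", "US", 80),
    ("2014-06-01", "IN", 95),
    ("2014-06-02", "IN", 60),
]


def to_columns(rows: list[tuple]) -> list[list]:
    """Row layout -> column layout: each column's values are stored together."""
    return [list(col) for col in zip(*rows)]


def run_length_encode(values: list) -> list[tuple]:
    """Collapse runs of repeated values into (value, run_length) pairs."""
    return [(value, len(list(run))) for value, run in groupby(values)]


date_col, country_col, amount_col = to_columns(rows)
print(run_length_encode(date_col))  # [('2014-06-01', 3), ('2014-06-02', 1)]
```

A query that only aggregates `amount` would also read just that one column, which is the other half of the columnar advantage.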

You can configure Redshift to use SSL to secure data transmission. You can also set it up to encrypt data at rest, for which Redshift uses hardware-accelerated AES-256 encryption.

As we will see in Chapter 10, Use Case – Analyzing CloudFront Logs Using Amazon EMR, Redshift can be used as the data store to efficiently analyze all your data using existing business intelligence tools such as Tableau or Jaspersoft. Many of these existing business intelligence tools have in-built capabilities or plugins to work with Redshift.

Amazon ElastiCache is an in-memory cache cluster service in the cloud. It makes life easier for developers by offloading most of the operational tasks. Using this service, your applications can fetch frequently needed information, or data such as counters, from fast in-memory caches.

Amazon ElastiCache supports the two most commonly used open source in-memory caching engines:

Memcached

Redis

As with other AWS services, Amazon ElastiCache is also fully managed, which means it automatically detects and replaces failed nodes.
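A common way for applications to use such a cache is the cache-aside pattern: look in the cache first, and on a miss or an expired entry, load from the database and store the result with a time-to-live. In this sketch a plain dictionary stands in for a Memcached or Redis cluster, and `load_from_db` is a hypothetical callback for the slow data source.

```python
import time


class CacheAside:
    """Cache-aside lookups with a time-to-live; a dict stands in for the
    Memcached or Redis cluster (an assumption for this sketch)."""

    def __init__(self, load_from_db, ttl_seconds: float = 60.0, clock=time.monotonic):
        self.load_from_db = load_from_db   # slow source of truth
        self.ttl = ttl_seconds
        self.clock = clock                 # injectable so expiry is testable
        self.store = {}                    # key -> (value, expiry time)

    def get(self, key):
        entry = self.store.get(key)
        if entry is not None and entry[1] > self.clock():
            return entry[0]                # fresh cache hit
        value = self.load_from_db(key)     # miss or expired: go to the database
        self.store[key] = (value, self.clock() + self.ttl)
        return value
```

The TTL bounds how stale a cached value can get; shorter TTLs mean fresher data but more database reads.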

Networking and CDN services include the networking services that let you create logically isolated networks in cloud, the setup of a private network connection to the AWS cloud, and an easy-to-use DNS service. AWS also has one content delivery network service that lets you deliver content to your users with higher speeds.

VPC stands for Virtual Private Cloud. As the name suggests, AWS allows you to set up an isolated, private section of the AWS cloud. You can launch resources that are available only inside that private network, create subnets, and then create resources within those subnets. EC2 instances launched without a VPC are always assigned one internal and one external IP address, but with a VPC you have control over the IP addresses of your resources; you may choose to give a machine only an internal IP. In effect, that machine is reachable only from within your private network, which gives you a greater level of control over the security of your cloud infrastructure.
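Planning a VPC largely comes down to CIDR arithmetic. The sketch below uses Python's standard `ipaddress` module to carve a VPC range into /24 subnets and to check that an internal-only host falls inside one of them. The range `10.0.0.0/16`, the subnet roles, and the host address are hypothetical choices; a private (RFC 1918) range is the usual pick for a VPC.

```python
import ipaddress

# Hypothetical VPC address range (a private RFC 1918 block).
vpc = ipaddress.ip_network("10.0.0.0/16")

# Carve the VPC into /24 subnets, e.g. one public and one private subnet.
subnets = list(vpc.subnets(new_prefix=24))
public_subnet, private_subnet = subnets[0], subnets[1]

# A machine that keeps only an internal (private) address in the private subnet.
db_host = ipaddress.ip_address("10.0.1.25")

print(len(subnets))                                   # 256
print(public_subnet, private_subnet)                  # 10.0.0.0/24 10.0.1.0/24
print(db_host in private_subnet, db_host.is_private)  # True True
```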

You can further control the security of your cloud infrastructure by using features such as security groups and network access control lists. You can configure inbound and outbound filtering at instance level as well as at subnet level.
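The subnet-level filter mentioned above, the network ACL, differs from a security group: its rules are numbered, can allow or deny, and are checked in ascending order until one matches, with unmatched traffic denied. The sketch below models that evaluation for inbound traffic only; the rule numbers and CIDR ranges are hypothetical, and protocol matching is omitted for brevity.

```python
import ipaddress

# Hypothetical inbound network ACL: (rule number, action, CIDR, port range).
NACL_INBOUND = [
    (100, "allow", "0.0.0.0/0", (80, 80)),
    (110, "deny", "198.51.100.0/24", (22, 22)),
    (120, "allow", "0.0.0.0/0", (22, 22)),
]


def nacl_allows(rules, source_ip: str, port: int) -> bool:
    """Evaluate rules in ascending rule-number order; the first match wins,
    and traffic that matches no rule is denied."""
    addr = ipaddress.ip_address(source_ip)
    for _, action, cidr, (low, high) in sorted(rules):
        if addr in ipaddress.ip_network(cidr) and low <= port <= high:
            return action == "allow"
    return False
```

Rule 110 is checked before rule 120, which is how a network ACL can block one range from SSH while still allowing everyone else.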

You can connect your entire VPC to your on-premise data center.

Amazon Route 53 is simply a Domain Name System (DNS) service that translates names to IP addresses and provides low-latency responses to DNS queries by using its global network of DNS servers.

Amazon CloudFront is a CDN service provided by AWS. CloudFront has a network of delivery centers, called edge locations, all around the world. Static content is cached at the edge locations closest to where it is requested, which lowers latency for subsequent downloads of that content. Requests for your content are automatically routed to the nearest edge location, so content is delivered with the best possible performance.

Analytics is the group of services that includes Amazon EMR, among others. These services help you process and analyze huge volumes of data.

The Amazon EMR service lets you process any amount of data by launching a cluster with the required number of instances and one of the analytics engines predeployed. EMR mainly provides Hadoop and related tools such as Pig, Hive, and HBase. Anyone who has spent hours deploying a Hadoop cluster will understand the value of EMR: within minutes, you can launch a Hadoop cluster with hundreds of instances. You can also resize your cluster on the go with a few simple commands. We will be learning more about EMR throughout this book.
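The CDN behavior described above, routing each request to the nearest edge and going back to the origin only on a cache miss, can be sketched as follows. The edge names, latency figures, and origin function are hypothetical, and real CDN routing and cache expiry are considerably more involved.

```python
# Hypothetical edge locations and measured round-trip times (ms) from one client.
EDGE_LATENCY_MS = {"edge-sydney": 12, "edge-singapore": 95, "edge-tokyo": 120}

edge_caches = {name: {} for name in EDGE_LATENCY_MS}
origin_fetches = []


def fetch_from_origin(path: str) -> str:
    """Stand-in for a request to the origin server (e.g. an S3 bucket)."""
    origin_fetches.append(path)
    return f"<contents of {path}>"


def serve(path: str) -> str:
    """Route to the lowest-latency edge; fetch from the origin only on a miss."""
    edge = min(EDGE_LATENCY_MS, key=EDGE_LATENCY_MS.get)
    cache = edge_caches[edge]
    if path not in cache:
        cache[path] = fetch_from_origin(path)
    return cache[path]
```

Serving the same path twice triggers only one origin fetch; the second request is answered from the Sydney edge's cache.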
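Since EMR's core engine is Hadoop, it helps to see the MapReduce model it runs. The sketch below simulates the classic word count in a single Python process: a map phase emits key/value pairs, a shuffle groups them by key, and a reduce phase aggregates each group. It illustrates the programming model only and does not use any EMR or Hadoop API.

```python
from collections import defaultdict


def map_phase(line: str):
    """Mapper: emit (word, 1) for every word in a line."""
    for word in line.lower().split():
        yield word, 1


def shuffle(pairs):
    """Group intermediate values by key, as the framework does between phases."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    """Reducer: sum the counts for one word."""
    return key, sum(values)


def word_count(lines):
    pairs = (pair for line in lines for pair in map_phase(line))
    return dict(reduce_phase(k, v) for k, v in shuffle(pairs).items())


print(word_count(["big data on EMR", "big clusters"]))
# {'big': 2, 'data': 1, 'on': 1, 'emr': 1, 'clusters': 1}
```

On a cluster, the mappers and reducers run in parallel on different instances, and the shuffle moves data between them over the network.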

Amazon Kinesis is a service for real-time streaming data collection and processing. It can collect and process hundreds of terabytes of data per hour from hundreds of thousands of sources, as claimed by AWS. It allows you to write applications to process data in real time from sources such as log streams, clickstreams, and many more. You can build real-time dashboards showing current trends, recent changes/improvements, failures, and errors.
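A real-time dashboard of errors typically aggregates the stream into fixed time windows. The sketch below counts events of one level per tumbling (non-overlapping) window over a small hypothetical batch of log events; it illustrates the aggregation a stream-processing application might perform and does not use the Kinesis API.

```python
from collections import Counter

# Hypothetical log events: (timestamp in seconds, level).
events = [(0, "INFO"), (3, "ERROR"), (7, "ERROR"), (12, "INFO"), (14, "ERROR")]


def tumbling_window_counts(events, window_seconds: int, level: str) -> dict[int, int]:
    """Count events of `level` per fixed, non-overlapping time window,
    keyed by the window's start time (the kind of figure a dashboard shows)."""
    counts = Counter()
    for ts, lvl in events:
        if lvl == level:
            counts[ts - ts % window_seconds] += 1
    return dict(counts)


print(tumbling_window_counts(events, 10, "ERROR"))  # {0: 2, 10: 1}
```

In a streaming application the same update would run per incoming record rather than over a finished list.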

AWS Data Pipeline is a service for automating data pipelines: you can reliably move data between various AWS resources at scheduled times and when specified preconditions are met. For instance, suppose you receive daily logs in your S3 buckets and need to process them using EMR and move the output to a Redshift table. All of this can be automated using AWS Data Pipeline, so that processed data is moved to Redshift on a daily basis, ready to be queried by your BI tool.
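The daily example above boils down to "check a precondition, then run the activities in order". The sketch below models that flow with hypothetical in-memory stand-ins for the S3 logs, the EMR processing step, and the Redshift table; it is not the Data Pipeline API, which defines pipelines declaratively.

```python
from datetime import date

# Hypothetical stand-ins for the S3, EMR, and Redshift parts of the example.
s3_logs = {date(2014, 6, 1): ["GET /index.html 200", "GET /missing 404"]}
redshift_table = []


def logs_exist(day: date) -> bool:
    """Precondition: the day's log files have landed in S3."""
    return bool(s3_logs.get(day))


def process_logs(day: date) -> list[tuple]:
    """Stand-in for the EMR step: count requests per HTTP status code."""
    counts = {}
    for line in s3_logs[day]:
        status = line.split()[-1]
        counts[status] = counts.get(status, 0) + 1
    return [(day.isoformat(), status, n) for status, n in sorted(counts.items())]


def run_daily_pipeline(day: date) -> bool:
    """Run the activities in order, but only if the precondition holds."""
    if not logs_exist(day):
        return False              # a real scheduler would retry or alert
    redshift_table.extend(process_logs(day))
    return True
```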

Application services include services that you can use within your applications, such as search, queuing, push notifications, and e-mail delivery, among others.

Amazon CloudSearch is a search service that allows you to easily integrate fast and highly scalable search functionality into your applications. At the time of writing, it supports 34 languages. It also supports popular search features such as highlighting, autocomplete, and geospatial search.

SQS stands for Simple Queue Service. It provides a hosted queue to store messages as they are transferred between computers. It ensures that no messages are lost, as all messages are stored redundantly across multiple servers and data centers.

SNS stands for Simple Notification Service. It is basically a push messaging service that allows you to push messages to mobile devices or distributed services. You can seamlessly scale at any time from a few messages a day to thousands of messages per hour.

SES stands for Simple Email Service. It is basically an e-mail service for the cloud. You can use it for sending bulk and transactional e-mails. It provides real-time access to sending statistics and also provides alerts on delivery failures.

Amazon AppStream is a service that helps you to stream heavy applications such as games or videos to your customers.

The deployment and management group includes services that help you deploy and manage your applications on the AWS cloud infrastructure. It also includes services to monitor your applications and keep track of your AWS API activity.

The AWS Identity and Access Management (IAM) service enables you to create fine-grained access control to AWS services and resources for your users.
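Fine-grained access in IAM is expressed as JSON policy documents. The sketch below writes a policy in that format as a Python dictionary and evaluates requests against it with a deliberately simplified rule: an explicit `Deny` wins, otherwise a matching `Allow` grants access, and anything else is implicitly denied. The bucket name is hypothetical, and `fnmatchcase` only approximates IAM's wildcard matching (for example, IAM action names are case-insensitive).

```python
from fnmatch import fnmatchcase

# An identity-based policy in the IAM JSON policy format. The bucket name
# "example-logs-bucket" is hypothetical.
POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {"Effect": "Allow", "Action": ["s3:GetObject", "s3:ListBucket"],
         "Resource": ["arn:aws:s3:::example-logs-bucket",
                      "arn:aws:s3:::example-logs-bucket/*"]},
        {"Effect": "Deny", "Action": "s3:DeleteObject",
         "Resource": "arn:aws:s3:::example-logs-bucket/*"},
    ],
}


def _as_list(value):
    return value if isinstance(value, list) else [value]


def is_allowed(policy: dict, action: str, resource: str) -> bool:
    """Simplified evaluation: an explicit Deny wins, otherwise any matching
    Allow grants access, and everything else is implicitly denied."""
    allowed = False
    for stmt in policy["Statement"]:
        matches = (any(fnmatchcase(action, a) for a in _as_list(stmt["Action"]))
                   and any(fnmatchcase(resource, r) for r in _as_list(stmt["Resource"])))
        if matches and stmt["Effect"] == "Deny":
            return False
        if matches and stmt["Effect"] == "Allow":
            allowed = True
    return allowed
```

A user with this policy can read and list the bucket but cannot delete from it, and any action not mentioned at all, such as `s3:PutObject`, is denied by default.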

Amazon CloudWatch is a web service that provides monitoring for various AWS cloud resources. It collects metrics specific to the resource. It also allows you to programmatically access your monitoring data and build graphs or set alarms to help you better manage your infrastructure. Basic monitoring metrics (at 5-minute frequency) for Amazon EC2 instances are free of charge. It will cost you if you opt for detailed monitoring. For pricing, you can refer to http://aws.amazon.com/cloudwatch/pricing/.
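A CloudWatch alarm compares a metric against a threshold over a number of consecutive periods (for basic monitoring, 5-minute datapoints). The function below is a simplified model of that evaluation, returning the alarm states `OK`, `ALARM`, or `INSUFFICIENT_DATA`; the threshold and period count are parameters you would choose, and CloudWatch's real evaluation has more options than shown here.

```python
def alarm_state(datapoints: list[float], threshold: float, periods: int) -> str:
    """Return "ALARM" if each of the last `periods` datapoints (e.g. 5-minute
    averages) exceeds `threshold`, "INSUFFICIENT_DATA" if there are too few
    datapoints, and "OK" otherwise."""
    if periods < 1:
        raise ValueError("periods must be at least 1")
    if len(datapoints) < periods:
        return "INSUFFICIENT_DATA"
    recent = datapoints[-periods:]
    return "ALARM" if all(value > threshold for value in recent) else "OK"
```

Requiring several consecutive breaches keeps a single noisy datapoint from triggering the alarm.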

AWS Elastic Beanstalk is a service that helps you to easily deploy web applications and services built on popular programming languages such as Java, .NET, PHP, Node.js, Python, and Ruby. There is no additional charge for this service; you only pay for the underlying AWS infrastructure that you create for your application.

AWS CloudFormation is a service that provides you with an easy way to create a set of related AWS resources and provision them in an orderly and predictable fashion. This service makes it easier to replicate a working cloud infrastructure. There are various templates provided by AWS; you may use any one of them as it is or you can create your own.
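The "orderly and predictable" provisioning comes from dependencies between resources in a template: a resource that refers to another with `{"Ref": ...}` must be created after it. The sketch below writes a small template in CloudFormation's JSON structure as a Python dictionary and derives a creation order with the standard library's `graphlib`. The AMI ID is a placeholder, and this only illustrates the idea; CloudFormation works out the order itself.

```python
from graphlib import TopologicalSorter

# A small template in CloudFormation's JSON structure. "ami-xxxxxxxx" is a placeholder.
TEMPLATE = {
    "AWSTemplateFormatVersion": "2010-09-09",
    "Resources": {
        "WebServerIP": {"Type": "AWS::EC2::EIP",
                        "Properties": {"InstanceId": {"Ref": "WebServer"}}},
        "WebServer": {"Type": "AWS::EC2::Instance",
                      "Properties": {"ImageId": "ami-xxxxxxxx",
                                     "InstanceType": "t1.micro",
                                     "SecurityGroups": [{"Ref": "WebServerSG"}]}},
        "WebServerSG": {"Type": "AWS::EC2::SecurityGroup",
                        "Properties": {"GroupDescription": "Allow HTTP"}},
    },
}


def find_refs(node) -> set[str]:
    """Collect the logical IDs referenced with {"Ref": ...} anywhere in `node`."""
    if isinstance(node, dict):
        if set(node) == {"Ref"}:
            return {node["Ref"]}
        return set().union(*(find_refs(v) for v in node.values()))
    if isinstance(node, list):
        return set().union(*(find_refs(v) for v in node))
    return set()


def creation_order(template: dict) -> list[str]:
    """Order resources so that every resource comes after those it references."""
    resources = template["Resources"]
    graph = {name: find_refs(body) & set(resources) for name, body in resources.items()}
    return list(TopologicalSorter(graph).static_order())


print(creation_order(TEMPLATE))  # ['WebServerSG', 'WebServer', 'WebServerIP']
```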

AWS OpsWorks is a service built for DevOps. It is an application management service that makes it easy to manage an entire application stack from load balancers to databases.

The AWS CloudHSM service allows you to use dedicated Hardware Security Module (HSM) appliances within the AWS Cloud. You may need to meet some corporate, contractual, or regulatory compliance requirements for data security, which you can achieve by using CloudHSM.

AWS CloudTrail is simply a service that logs API requests to AWS from your account. It logs API requests to AWS from all the available sources such as AWS Management Console, various AWS SDKs, and command-line tools.

AWS keeps adding useful and innovative products to its already vast set of services. AWS is clearly the leader among cloud infrastructure providers.

Amazon provides a Free Tier across AWS products and services to help you get started and gain hands-on experience before you build your solutions on AWS. Using the Free Tier, you can test your applications and gain the confidence required before full-fledged use.

The following table lists some of the common services and what you can get in the Free Tier for them:

The Free Tier is available to new customers only, for the first 12 months after sign-up. When your 12 months expire, or if your usage exceeds the Free Tier limits, you pay standard rates, which AWS calls pay-as-you-go service rates. You can refer to each service's page for pricing details. For example, to get the pricing details for EC2, refer to http://aws.amazon.com/ec2/pricing/.
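The two rules above, a 12-month eligibility window and billing only for usage beyond the free allowance, can be combined into a small estimate. The 750-hour monthly allowance and the hourly rate below are assumed figures for illustration only; take the real numbers from the Free Tier page and the service's pricing page.

```python
from datetime import date

# Assumed figures for illustration: check the Free Tier page and the service's
# pricing page for the real allowance and rate.
FREE_TIER_MONTHS = 12
FREE_HOURS_PER_MONTH = 750      # assumed monthly allowance of micro-instance hours
HOURLY_RATE = 0.02              # hypothetical pay-as-you-go rate in USD


def months_since(signup: date, today: date) -> int:
    """Whole months elapsed between sign-up and `today`."""
    months = (today.year - signup.year) * 12 + (today.month - signup.month)
    if today.day < signup.day:
        months -= 1
    return months


def monthly_charge(hours_used: float, signup: date, today: date) -> float:
    """Charge for a month's usage: hours beyond the free allowance are billed,
    and once the first 12 months are over every hour is billed."""
    in_free_tier = months_since(signup, today) < FREE_TIER_MONTHS
    free_hours = FREE_HOURS_PER_MONTH if in_free_tier else 0
    return max(0.0, hours_used - free_hours) * HOURLY_RATE
```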

Tip

You should keep tabs on your usage and use a service only after confirming that its pricing and your expected usage fit your budget. To track your AWS usage, sign in to the AWS management console and open the Billing and Cost Management console at https://console.aws.amazon.com/billing/home#/.

Signing up for AWS is very simple and straightforward. The following is a step-by-step guide for you to create an account on AWS and launch the AWS management console.

Go to http://aws.amazon.com and click on Sign Up.

This will take you to a page saying Sign In or Create an AWS Account. If you already have an Amazon.com account, you can use this to start using AWS; or you can create a new account by selecting I am a new user and clicking on Sign in using our secure server:

Further, you will need to key in the basic login information such as password, contact information, and other account details and create an Amazon.com account and continue.

You will need to provide your payment information to AWS. You will not be charged up front, but you will be charged for the AWS resources you use.

To complete the sign-up process, AWS needs to verify your identity. After you provide a phone number where you can be reached, you will immediately receive a call from an automated system and will be prompted to enter a PIN over the phone. Only when this is done will you be able to proceed further.

Go to https://console.aws.amazon.com and sign in using the account you just created. This will take you to a screen displaying a list of AWS services. After you start using AWS more and more, you can configure any particular service page to be your landing page:

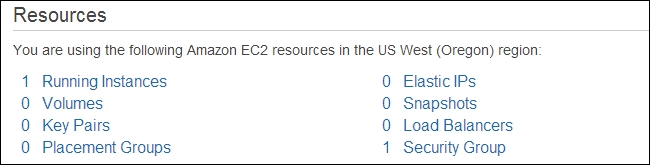

Resources are listed on a per-region basis: you first select a region, and only then can you view the resources tied to that region. AWS resources are either global, tied to a region, or tied to an availability zone.

EC2 is the most basic web service provided by AWS. It allows you to launch instances of various capacities. You get complete control over the lifetime of each instance, and you also have root access.

After you sign in to your AWS console, you can start a machine in a few steps. Go to the EC2-specific console view from your AWS console. Select the region in which you want to launch your instance. This can be selected from the top-right corner of the page.

Click on Launch Instance. Let's walk through the simple steps you need to follow after this.

An Amazon Machine Image (AMI) is a predefined configuration of software and applications. It is basically a template that contains details of the operating system, application server, and initial set of applications required to launch your instance. There are standard AMIs provided by AWS, AMIs contributed by the user community, and AMIs available in the AWS Marketplace. You can select an AMI from among them; if you are unsure, select one of the AMIs from the Quick Start section.

EC2 provides various instance types optimized for different use cases (recall that a virtual machine launched on AWS is called an instance). The instance types have varying combinations of CPU, memory, storage, and networking capacity, giving you the liberty to decide on the right set of computing resources for your applications.

Choose the instance type that fits your needs and budget. If you are just trying things out, you may go for t1.micro, which is available under the Free Tier. We will discuss instance types in more detail in the next section.
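Choosing an instance type is essentially finding the smallest type that meets your CPU and memory needs. The sketch below does that over a short list; the vCPU and memory figures are the ones published for these previous-generation types as we recall them, so verify them against the current instance type list, and the assumption that list order tracks cost is a simplification.

```python
from typing import Optional

# (type, vCPUs, memory in GiB), ordered from smallest to largest. Verify these
# figures against AWS's current instance type documentation before relying on them.
INSTANCE_TYPES = [
    ("t1.micro", 1, 0.613),
    ("m3.medium", 1, 3.75),
    ("m3.large", 2, 7.5),
    ("m3.xlarge", 4, 15.0),
]


def smallest_fit(vcpus: int, memory_gib: float) -> Optional[str]:
    """Return the first (smallest) listed type meeting both requirements,
    or None if nothing in the list is big enough."""
    for name, cpu, mem in INSTANCE_TYPES:
        if cpu >= vcpus and mem >= memory_gib:
            return name
    return None
```

For instance, a workload needing 2 vCPUs and 4 GiB would land on `m3.large` in this list.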

At this stage, you could skip the remaining steps and launch your instance. However, that is not recommended, as your machine would be open to the world, that is, publicly accessible. AWS provides security groups, in which you create inbound and outbound rules that restrict unwanted traffic and allow only trusted IPs to connect to your instance.

In this step, you may instruct AWS to launch multiple instances of the same type with the same AMI. You may also choose to request Spot Instances. Additionally, you can add the following configurations to your instance:

The network your instance will belong to. Here, you choose the VPC you want your instance to be part of. After selecting a VPC, you may also let AWS automatically assign a public IP address to your instance. This IP stays associated with your instance only until it is stopped or terminated.

The availability zone your instance will belong to. This can be set if you do not select a VPC and go with the default network, that is, EC2-Classic.

The IAM role, if any, you want to assign to your instance.

The instance behavior when an OS-level shutdown is performed: the instance can be either stopped or terminated. It is recommended to set this to Stop.

You can also enable protection against accidental termination of the instance. Once this is enabled, you cannot terminate the instance from the AWS Management Console or through the AWS APIs until you disable it. You can also enable detailed CloudWatch monitoring for this instance.

Every instance type comes with a fixed amount of instance storage. You can attach more instance store volumes or add EBS volumes to your instance; EBS volumes can also be attached after the instance is launched. You can also edit the configuration of your instance's root volume.

For bookkeeping purposes, it is good practice to give your instance a name, for example, MyApplicationWebserverBox. You can also create custom tags to suit your needs.

You can create a new security group for your instance or use an existing one. For example, if you already have a few web servers and you are just adding another instance to that group of servers, you would not want to create a separate security group for it; instead, you can reuse the existing security group that was created for those web servers.

While creating a new security group, you will see that one rule is prefilled to allow remote login to the machine via SSH from anywhere. If you want, you can constrain that rule to allow SSH traffic only from fixed IPs or IP ranges. Similarly, you can add rules for other protocols. For example, if you have a web server running, you may want to open HTTP traffic to the world. If you have a MySQL database running on this machine, you would select MySQL as the type while adding a new rule and set the Source to the machines from which your MySQL server should be accessible.
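Conceptually, an inbound rule whose Source is a CIDR block such as 203.0.113.0/24 admits traffic only from addresses inside that block, and 0.0.0.0/0 means "anywhere". The following bash sketch models that IPv4 membership test. It is only an illustration of the matching logic, not how AWS evaluates security groups internally, and the addresses used are hypothetical examples.

```bash
#!/usr/bin/env bash
# Model of IPv4 CIDR matching, as used by a security group rule's Source field.
# All addresses below are hypothetical examples.

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=.
  local a b c d
  read -r a b c d <<< "$1"
  # 10# forces base 10 so octets such as "08" are not read as octal.
  echo $(( (10#$a << 24) | (10#$b << 16) | (10#$c << 8) | 10#$d ))
}

# Succeed (exit status 0) if IP $1 lies inside CIDR block $2, e.g. 203.0.113.0/24.
# Assumes well-formed input of the form a.b.c.d/bits.
ip_in_cidr() {
  local ip=$1 cidr=$2
  local net=${cidr%/*} bits=${cidr#*/}
  # /0 matches everything; otherwise keep only the top $bits bits.
  local mask=$(( bits == 0 ? 0 : (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  (( ($(ip_to_int "$ip") & mask) == ($(ip_to_int "$net") & mask) ))
}

# Example: an SSH rule restricted to one office range (hypothetical).
if ip_in_cidr 203.0.113.42 203.0.113.0/24; then
  echo "allowed"   # this branch runs: .42 is inside the /24
else
  echo "denied"
fi
```

With the AWS CLI, a rule like this is created with `aws ec2 authorize-security-group-ingress`, passing `--group-id`, `--protocol tcp`, `--port 22`, and `--cidr` with the allowed range.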

You can now review your configuration and settings and launch your instance. There is one small thing to do before your instance is launched: you need to specify a key pair in order to access this instance remotely. You can choose an existing key pair or create a new one. If you create a new key pair, you must download the private key file (*.pem) and keep it secure. You will use this file to SSH into the instance.

Note

It is very important to note that if this private key file is lost, there is no way to log in to the instance after it is launched. AWS does not keep a copy of the private key, so store it securely.

That's all. Click on Launch Instances. Your instance should be up and running within minutes.
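If you prefer scripting to the console, the wizard settings described above correspond to parameters of the AWS CLI command `aws ec2 run-instances`: the key pair maps to `--key-name`, the security group to `--security-group-ids`, the shutdown behavior to `--instance-initiated-shutdown-behavior`, termination protection to `--disable-api-termination`, and detailed monitoring to `--monitoring Enabled=true`. This is a minimal sketch, assuming the AWS CLI is installed and configured with your credentials. The AMI ID, security group ID, and key pair name are hypothetical placeholders, and `PRINT_ONLY` is a convenience variable of this script, not an AWS option.

```bash
#!/usr/bin/env bash
# Sketch: launch one instance from the CLI with settings similar to the console wizard.
# AMI_ID, SG_ID, KEY_NAME, and INSTANCE_TYPE defaults are hypothetical placeholders;
# override them via environment variables. Pick an instance type your AMI supports.
# PRINT_ONLY=1 prints the command instead of running it (a feature of this script only).
launch_instance() {
  # Shutdown behavior Stop, termination protection on, detailed CloudWatch monitoring on.
  local cmd=(
    aws ec2 run-instances
    --image-id "${AMI_ID:-ami-0123456789abcdef0}"
    --count 1
    --instance-type "${INSTANCE_TYPE:-t1.micro}"
    --key-name "${KEY_NAME:-my-private-key}"
    --security-group-ids "${SG_ID:-sg-0123456789abcdef0}"
    --instance-initiated-shutdown-behavior stop
    --disable-api-termination
    --monitoring Enabled=true
  )
  if [[ ${PRINT_ONLY:-0} == 1 ]]; then
    echo "${cmd[*]}"
  else
    "${cmd[@]}"
  fi
}

# Example: inspect the command first, then run it for real.
# PRINT_ONLY=1 launch_instance
# AMI_ID=ami-... SG_ID=sg-... KEY_NAME=... launch_instance
```

Printing the command before running it is a cheap way to double-check placeholders, since a real launch starts billing immediately.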

If you go back to the EC2 dashboard of your AWS Management Console, you will see that your instance has been added to the count of running instances. Your EC2 dashboard view will look as follows:

After launching your instance, click the link that says n Running Instances (where n is the number of running instances) to open a page listing all running instances. Select the instance you launched; you can identify it by the name you gave it while launching. In the bottom pane, you can see the Public DNS and Public IP values for the selected instance (assuming that you configured your instance to receive a public IP while launching). You will use either of these values to SSH into your instance.

Let's assume the following before moving ahead:

Public IP of your machine is 51.215.203.111 (this is a random IP used just for the sake of explanation)

Public DNS of your machine is ec2-51-215-203-111.ap-southeast-1.compute.amazonaws.com (your instance's public DNS will look like this, given the above IP and that your instance was launched in the Singapore region)

Private key file path on the machine from which you want to connect to the newly launched instance is /home/awesomeuser/secretkeys/my-private-key.pem
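The relationship between the public IP and the public DNS name above follows EC2's default naming pattern: the dots of the IPv4 address become dashes, followed by the region and `compute.amazonaws.com`, except that us-east-1 uses `compute-1.amazonaws.com`. The bash sketch below derives the name under that assumption; always read the actual value from the console, since VPC settings can change or disable public DNS names.

```bash
#!/usr/bin/env bash
# Sketch: derive EC2's default public DNS name from a public IPv4 address and region.
# Pattern assumed: us-east-1 -> ec2-a-b-c-d.compute-1.amazonaws.com,
#                  others    -> ec2-a-b-c-d.<region>.compute.amazonaws.com
ec2_public_dns() {
  local ip=$1 region=$2
  local dashed=${ip//./-}   # 51.215.203.111 -> 51-215-203-111
  if [[ $region == us-east-1 ]]; then
    echo "ec2-${dashed}.compute-1.amazonaws.com"
  else
    echo "ec2-${dashed}.${region}.compute.amazonaws.com"
  fi
}

ec2_public_dns 51.215.203.111 ap-southeast-1
# prints ec2-51-215-203-111.ap-southeast-1.compute.amazonaws.com
```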

Now that you have all the information about your instance, connecting to the instance is only a matter of one SSH command. You should ensure that you have an SSH client installed on the machine from where you will connect to your AWS instance. For Linux-based machines, a command-line SSH client is readily available.

As the private key file is critical from a security point of view, it is important to set appropriate access controls on it so that it isn't publicly viewable. You can use the chmod command to make the .pem file readable only by its owner:

chmod 400 /home/awesomeuser/secretkeys/my-private-key.pem
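OpenSSH refuses to use a private key whose permissions grant any access to group or others, reporting that the permissions are too open. The following bash sketch performs the same check before you connect. It assumes GNU coreutils `stat` (standard on Linux); on macOS or BSD, `stat -f '%Lp'` prints the equivalent mode. The key path is the example path assumed earlier.

```bash
#!/usr/bin/env bash
# Sketch: succeed only if the key file exists and its group/other permission bits are 0.
# Assumes GNU coreutils stat; on macOS/BSD use: stat -f '%Lp' "$key"
key_perms_ok() {
  local key=$1 mode
  [[ -f $key ]] || return 1
  mode=$(stat -c '%a' "$key") || return 1
  # Read the mode as octal and require the group/other bits (mask 077) to be zero.
  (( (8#$mode & 077) == 0 ))
}

key=/home/awesomeuser/secretkeys/my-private-key.pem
if key_perms_ok "$key"; then
  echo "permissions OK"
else
  echo "missing or too open; run: chmod 400 $key"
fi
```

Both 400 and 600 pass this check; 400 additionally protects the file against accidental overwrites.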

You can connect to your instance by executing the following command from the command line:

$ ssh -i /home/awesomeuser/secretkeys/my-private-key.pem ec2-user@ec2-51-215-203-111.ap-southeast-1.compute.amazonaws.com

Alternatively, you can also connect using the public IP:

$ ssh -i /home/awesomeuser/secretkeys/my-private-key.pem ec2-user@51.215.203.111
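Typing the full command each time gets tedious. OpenSSH reads per-host shortcuts from `~/.ssh/config`, where `Host`, `HostName`, `User`, and `IdentityFile` are standard `ssh_config` keywords. The bash sketch below prints such an entry for the example instance; the alias `myec2` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Sketch: print an ~/.ssh/config entry so that `ssh <name>` replaces the long command.
# Arguments: alias name, host (public DNS or IP), key path, optional user (default ec2-user).
ssh_config_entry() {
  local name=$1 host=$2 key=$3 user=${4:-ec2-user}
  cat <<EOF
Host $name
    HostName $host
    User $user
    IdentityFile $key
EOF
}

# Review the output, then append it yourself with: >> ~/.ssh/config
ssh_config_entry myec2 ec2-51-215-203-111.ap-southeast-1.compute.amazonaws.com \
  /home/awesomeuser/secretkeys/my-private-key.pem
```

After appending the entry, `ssh myec2` opens the same session as the full command shown above.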

Note that the username used to log in is ec2-user (the default user on Amazon Linux AMIs). You can get root access by switching user with the following command; you won't be prompted for a password:

$ sudo su

EC2 has several predefined capacity packages that you can choose to launch an instance with. Instance types are defined and categorized based on the following parameters:

CPU

Memory

Storage

Network Capacity

Each instance type in turn offers multiple instance sizes to choose from. The three most commonly used instance types are:

General purpose: M3

Memory optimized: R3

Compute optimized: C3

The general-purpose set of instances consists of M3 instance types. These instances offer a balance of compute, memory, and network resources, and they have SSD-based instance storage.

The memory-optimized set of instances consists of R3 instance types. These instances are best suited to memory-intensive applications. R3 instances have the lowest cost per GB of RAM among all EC2 instance types.

These types of instances are suitable for in-memory analytics, distributed-memory-based caching engines, and many other similar memory-intensive applications.

The compute-optimized set of instances consists of C3 instance types. These instances are best suited to compute-intensive applications. At the time of writing, C3 instances have the highest-performing processors and the lowest price/compute performance ratio available in EC2.

These types of instances are suitable for high performance applications such as on-demand batch-processing, video encoding, high-end gaming, and many other similar compute-intensive applications.

The following table lists the instance sizes available for the C3 instance type:

| Instance size | vCPU | Memory (GB) | Instance storage (GB, SSD) |
|---|---|---|---|
| c3.large | 2 | 3.75 | 2 x 16 |
| c3.xlarge | 4 | 7.5 | 2 x 40 |
| c3.2xlarge | 8 | 15 | 2 x 80 |
| c3.4xlarge | 16 | 30 | 2 x 160 |
| c3.8xlarge | 32 | 60 | 2 x 320 |
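Because each C3 size doubles the resources of the previous one, choosing a size amounts to finding the first row that satisfies your requirements. The bash sketch below encodes the vCPU and memory figures from the C3 table, using the standard C3 size names (c3.large through c3.8xlarge), and returns the smallest size that fits. It ignores pricing and storage, which you would also weigh in practice.

```bash
#!/usr/bin/env bash
# Sketch: pick the smallest C3 size with at least $1 vCPUs and $2 GB of memory.
# Rows are ordered from smallest to largest, so the first match is the smallest fit.
# Returns exit status 1 if no C3 size is large enough.
pick_c3_size() {
  local need_vcpu=$1 need_mem_gb=$2
  awk -v v="$need_vcpu" -v m="$need_mem_gb" '
    $2 + 0 >= v + 0 && $3 + 0 >= m + 0 { print $1; found = 1; exit }
    END { if (!found) exit 1 }
  ' <<'EOF'
c3.large    2   3.75
c3.xlarge   4   7.5
c3.2xlarge  8   15
c3.4xlarge  16  30
c3.8xlarge  32  60
EOF
}

pick_c3_size 4 10   # prints c3.2xlarge (c3.xlarge has 4 vCPUs but only 7.5 GB)
```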

There are other instance types as well: GPU instances, used for workloads such as game streaming, and storage-optimized instances, used to build large NoSQL database clusters and to host data warehousing engines. Low-end micro instance types are also available.

Amazon Simple Storage Service (S3) frees developers and businesses from worrying about having enough storage available. It is a robust and reliable service that lets you store any amount of data and ensures that your data is available when you need it.

Creating an S3 bucket takes just a few clicks and a few parameters, such as the name of the bucket. Let's walk through the simple steps required to create an S3 bucket from the AWS Management Console:

Go to the S3 dashboard and click on Create Bucket.

Enter a bucket name of your choice and select the AWS region in which you want to create your bucket.

That's all: click Create and you are done.

The bucket name you choose must be unique across all existing bucket names in Amazon S3. Because the bucket name forms part of the URL used to access its objects over HTTP, it must follow DNS naming conventions.

The DNS naming conventions include the following rules:

It must be at least three and no more than 63 characters long.

It must be a series of one or more labels. Adjacent labels are separated by a single period (.).

It can contain lowercase letters, numbers, and hyphens.

Each individual label within a name must start and end with a lowercase letter or a number.

It must not be formatted as an IP address.
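The rules above can be checked mechanically before you try to create a bucket. The following bash sketch tests a candidate name against exactly those rules; S3 has further restrictions that it does not model, so treat a passing result as necessary rather than sufficient. The example names in the loop are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: check a bucket name against the DNS-style naming rules listed above.
# Only those rules are modeled; S3 imposes additional restrictions.
is_valid_bucket_name() {
  local name=$1
  # Rule: 3 to 63 characters long.
  (( ${#name} >= 3 && ${#name} <= 63 )) || return 1
  # Rule: only lowercase ASCII letters, digits, hyphens, and periods (the label separator).
  [[ -z $(printf '%s' "$name" | LC_ALL=C tr -d 'a-z0-9.-') ]] || return 1
  # Rules: labels separated by single periods; each label starts and ends
  # with a lowercase letter or a digit.
  local label='[a-z0-9]([a-z0-9-]*[a-z0-9])?'
  local re="^${label}(\\.${label})*\$"
  [[ $name =~ $re ]] || return 1
  # Rule: must not be formatted as an IPv4 address.
  local ip_re='^[0-9]+\.[0-9]+\.[0-9]+\.[0-9]+$'
  [[ $name =~ $ip_re ]] && return 1
  return 0
}

for name in my-bucket.logs My-Bucket my..bucket 192.168.5.4; do
  if is_valid_bucket_name "$name"; then
    echo "valid:   $name"
  else
    echo "invalid: $name"
  fi
done
```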

Some examples of valid and invalid bucket names are listed in the following table:

| Invalid bucket name | Valid bucket name |
|---|---|

Now you can upload files into this bucket by clicking the bucket name and then clicking Upload. You can also create folders inside the bucket.

S3cmd is a free command-line tool for uploading, retrieving, and managing data on Amazon S3. It offers advanced features such as multipart uploads, encryption, incremental backup, and S3 sync, among others. You can use S3cmd to automate your S3-related tasks.

You can download the latest version of S3cmd from http://s3tools.org and follow the installation instructions on that website. S3cmd is a separate open source tool; it is not developed by Amazon.

In order to use S3cmd, you will need to first configure your S3 credentials. To configure credentials, you need to execute the following command:

s3cmd --configure

You will be prompted for two keys: Access Key and Secret Key. You can get these keys from the IAM dashboard of your AWS management console. You may leave default values for other configurations.

Now, by using very intuitive commands, you may access and manage your S3 buckets. These commands are mentioned in the following table:

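| Task | Command |
|---|---|
| List all the buckets | s3cmd ls |
| Create a bucket | s3cmd mb s3://BUCKET |
| List the contents of a bucket | s3cmd ls s3://BUCKET |
| Upload a file into a bucket | s3cmd put FILE s3://BUCKET/OBJECT |
| Download a file | s3cmd get s3://BUCKET/OBJECT LOCAL_FILE |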
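As an example of automating S3-related tasks, the bash sketch below uploads a file to a date-stamped location with `s3cmd put FILE s3://BUCKET/OBJECT`. It assumes s3cmd is installed and already configured with `s3cmd --configure`; the bucket name, the `backups/` prefix, and the file path are hypothetical choices for illustration.

```bash
#!/usr/bin/env bash
# Sketch: dated uploads with s3cmd. Assumes s3cmd is installed and configured.
# Bucket, prefix, and file names are hypothetical.

# Build a destination such as s3://my-backup-bucket/backups/2024-01-31/app.log
# Optional third argument overrides the date (defaults to today, YYYY-MM-DD).
s3_backup_uri() {
  local bucket=$1 file=$2 day=${3:-$(date +%F)}
  printf 's3://%s/backups/%s/%s\n' "$bucket" "$day" "$(basename "$file")"
}

# Upload one file to its dated location.
backup_to_s3() {
  local bucket=$1 file=$2
  command -v s3cmd >/dev/null || { echo "s3cmd not found" >&2; return 1; }
  s3cmd put "$file" "$(s3_backup_uri "$bucket" "$file")"
}

# Example (hypothetical names):
# backup_to_s3 my-backup-bucket /var/log/app.log
```

Scheduling `backup_to_s3` from cron is one simple way to turn this into a recurring backup.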

We learned about the world of cloud computing infrastructure and got a quick introduction to AWS. We created an AWS account and discussed how to launch a machine and set up storage on S3.

In the next chapter, we will dive into the distributed programming paradigm called MapReduce. The following chapters will help you understand how AWS has made it easier for businesses and developers to build and operate Big Data applications.