The word cloud has been commonplace in the industry and marketplace for over a decade. In its modern usage, it was first used in August 2006, when Eric Schmidt of Google used it to describe an emergent new model (Source: Technology Review). However, it was a then little-known company called Amazon Web Services (AWS) that made the term immensely famous.

Note

Did you know? Amazon started work on its cloud in the year 2000; the key years in its development were 2003, 2004, and 2006. In 2004, AWS (simply called web services at the time) was a group of disparate APIs and not the full-blown IaaS/PaaS offering that it is today. The first service to be launched, in 2004, was the Simple Queue Service (SQS); S3 and EC2 were added later. In 2006, the cloud as we know it today gained popularity.

Once the term cloud computing became a part of common IT parlance, there was no dearth of definitions. Almost everyone had something to sell, and added their own spin on the terminology.

In this chapter, we will attempt to decipher this different terminology in relation to the definitions of the different clouds.

If you are wondering why this is important, it is to make and maintain the clarity of context in future chapters, as new concepts emerge and are commingled in the grand scheme of architecting the hybrid cloud.

Note

Did you know? The term cloud computing was first used in 1996, by a group of executives at Compaq to describe the future of the internet business. - Technology Review

In the remaining part of the chapter, we take a look at different definitions of the cloud and the different products used.

In trying to navigate the maze of available definitions, it becomes clear that there are various ways in which we can look at clouds. We will focus on the main ones and simplify them for our understanding.

As a first step, let us define what could pass as cloud computing. The Wikipedia definition is as follows:

"Cloud computing is an(IT) paradigm, a model for enabling ubiquitous access to shared pools of configurable resources (such as computer networks, servers, storage, applications and services), which can be rapidly provisioned with minimal management effort, often over the Internet"

If we look at that statement from a technical standpoint, it would be fair to say that in order for something to be referred to as cloud computing, it must at least possess the following characteristics:

- Self-service (reduces wait time to get resources provisioned)

- Shared, standard, consistent (shared pools of configurable resources)

- Cross-domain automation (rapid provisioning)

- Consumption-based chargeback and billing

The three main ways in which we can take a look at dissecting the clouds are as follows:

- Based on abstraction

- Based on the services offered

- Based on the consumers of the services

The underlying principle of cloud is abstraction; how it is abstracted determines a lot of its feature sets and behavior. However, this aspect of the cloud is little-known and often ignored. It only becomes evident when dealing with different kinds of clouds from different providers.

We shall delve into the details and nuances shortly. For starters, there are two kinds of abstraction:

- Service down clouds

- Infrastructure up clouds

To understand these better, let's take a look at the following stack, which is used to run an application. The stack assumes that a virtualized infrastructure is being used to run the application.

In the event of an application running on bare-metal, the Virtual Machine and the Hypervisor layers will be absent, but the remainder of the stack will still be in play.

In traditional IT businesses, different teams manage different aspects of this stack. For example, the Infrastructure management team manages the underlying hardware and its configuration, the Virtualization team manages the Virtual Machine and the Hypervisor, the Operating System team manages the Operating System, the Platform team manages the Middleware, and finally the Application team manages the Application and the data on top of the stack.

Now, the Infrastructure management team sees that the application runs on the Virtual Machine, whereas the Application developers simply see that the Infrastructure team is providing a combination of three services, namely Network, Compute, and Storage. This is the essence of the split.

The service down approach of building clouds was pioneered by AWS. This approach was created for developers, by developers. The salient feature of this kind of cloud is the fact that everything is a Lego block, which can be combined in different ways in order to achieve a desired function.

In the service down approach, the Create, Read, Update, and Delete (CRUD) operations on these Lego blocks are normally done using API calls, so that developers can request the resources they need programmatically rather than by raising a request with an operations team.

In the service down cloud, everything (compute, meaning RAM and CPU, storage, network, and so on) is a separate service, and these services can be combined to give us a Virtual Machine. The following diagram shows the three blocks coming together in order to create the traditional equivalent of a virtual machine (the service names used are AWS services; however, all service down clouds will have equivalents):

The Lego block idea also works on a second level, which means you are free to move these blocks between different virtual machines. In the following diagram, as an example, you can see that Storage 1 of Virtual Machine 1 is being remapped to Virtual Machine 2 using API calls, which is unheard of in traditional infrastructure:

Examples of this kind of abstraction are seen in hyperscale clouds such as AWS, Azure, and Google Cloud Platform. However, OpenStack is also designed as a service down cloud.
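The Lego block idea described above can be illustrated with a minimal sketch. The `ToyCloud` class and its methods are hypothetical, in-memory stand-ins and do not correspond to the API of AWS, OpenStack, or any other real provider; the point is only that compute and storage are separate resources, each created by its own call, and that a volume can be remapped from one instance to another.

```python
from dataclasses import dataclass
from itertools import count
from typing import Dict, Optional


@dataclass
class Volume:
    volume_id: str
    size_gb: int
    attached_to: Optional[str] = None  # instance id, or None when detached


@dataclass
class Instance:
    instance_id: str
    vcpus: int
    ram_gb: int


class ToyCloud:
    """Hypothetical in-memory stand-in for a service down cloud API."""

    def __init__(self) -> None:
        self._ids = count(1)
        self.instances: Dict[str, Instance] = {}
        self.volumes: Dict[str, Volume] = {}

    def create_instance(self, vcpus: int, ram_gb: int) -> Instance:
        # Compute is requested on its own, without any storage.
        inst = Instance(f"i-{next(self._ids)}", vcpus, ram_gb)
        self.instances[inst.instance_id] = inst
        return inst

    def create_volume(self, size_gb: int) -> Volume:
        # Storage is a separate block with its own life cycle.
        vol = Volume(f"vol-{next(self._ids)}", size_gb)
        self.volumes[vol.volume_id] = vol
        return vol

    def attach_volume(self, volume_id: str, instance_id: str) -> None:
        vol = self.volumes[volume_id]
        if vol.attached_to is not None:
            raise RuntimeError(f"{volume_id} is already attached to {vol.attached_to}")
        if instance_id not in self.instances:
            raise KeyError(instance_id)
        vol.attached_to = instance_id

    def detach_volume(self, volume_id: str) -> None:
        self.volumes[volume_id].attached_to = None


cloud = ToyCloud()
vm1 = cloud.create_instance(vcpus=2, ram_gb=4)
vm2 = cloud.create_instance(vcpus=2, ram_gb=4)
storage1 = cloud.create_volume(size_gb=100)

# First level: combine the blocks into the equivalent of a virtual machine.
cloud.attach_volume(storage1.volume_id, vm1.instance_id)

# Second level: remap Storage 1 from Virtual Machine 1 to Virtual Machine 2.
cloud.detach_volume(storage1.volume_id)
cloud.attach_volume(storage1.volume_id, vm2.instance_id)
print(storage1.attached_to)
```

In a real service down cloud, each of these method calls would be an authenticated API request, but the shape of the interaction is the same.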

Having understood the service down cloud, it is clear that it is this concept of Lego blocks that has enabled us to treat our infrastructure as cattle rather than pets. This means that if one of your servers is sick, you can rip it out and replace it rather than spend time troubleshooting it. You may even choose to keep the same IP address and the same disk.

Note

Pets versus cattle: This analogy came up some time between 2011 and 2012, and describes the difference between how infrastructure is treated in the cloud-based world and in the traditional world. Read more by googling the term Pets vs Cattle in Cloud, or at http://cloudscaling.com/blog/cloud-computing/the-history-of-pets-vs-cattle/. In brief:

- Traditionally, infrastructure was treated as pets: we named the servers and nurtured them, and if they fell sick, we treated and cared for them (troubleshot them) and nursed them back to health.

- These days, cloud infrastructure is treated as cattle: we number the servers and don't get attached to them, and if they fall sick, we shoot them, take their remains, and get a new one in their place.

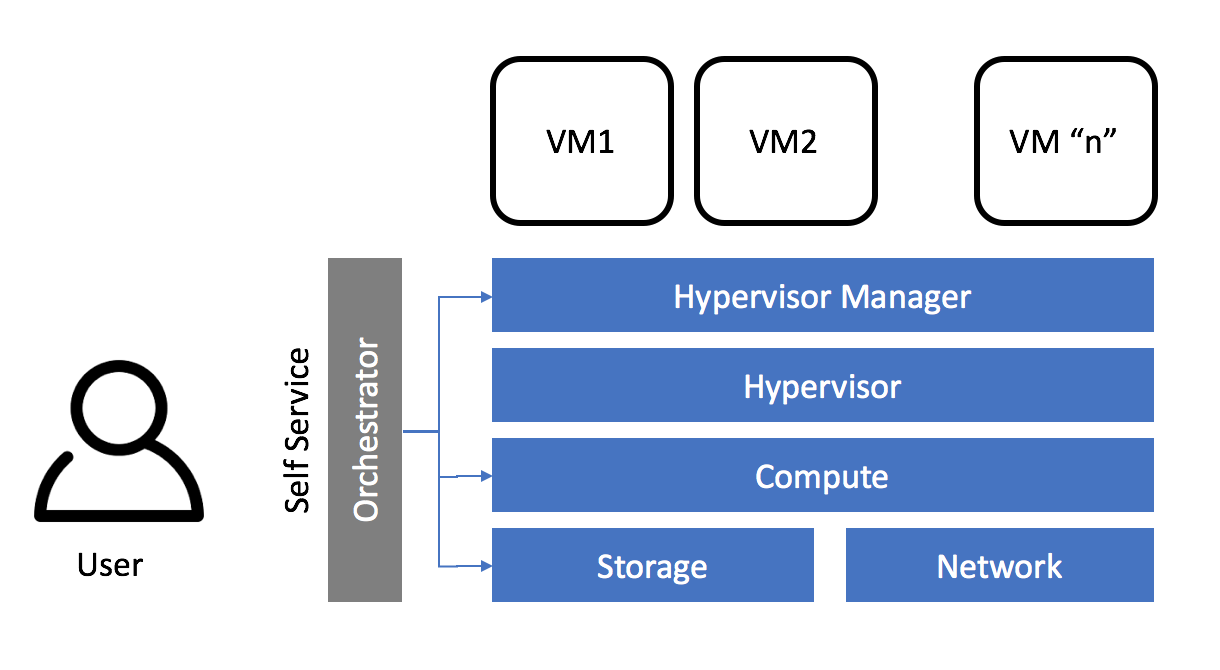
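The cattle approach can be sketched in a few lines. The `Server` class and the `launch` and `replace_if_sick` helpers below are hypothetical names used only for illustration; the sketch assumes that the IP address and the disk are independent blocks that can be handed over to a freshly launched server.

```python
from dataclasses import dataclass
from itertools import count

_ids = count(1)  # servers are numbered, not named


@dataclass
class Server:
    server_id: str
    ip_address: str
    disk_id: str
    healthy: bool = True


def launch(ip_address: str, disk_id: str) -> Server:
    """Start a new, numbered server with the given IP address and disk."""
    return Server(f"srv-{next(_ids)}", ip_address, disk_id)


def replace_if_sick(server: Server) -> Server:
    """Return the server unchanged if healthy; otherwise return a fresh one.

    No troubleshooting happens here. The replacement reuses the same
    IP address and the same disk, as described in the text.
    """
    if server.healthy:
        return server
    return launch(server.ip_address, server.disk_id)


web = launch("10.0.0.5", "disk-a")
web.healthy = False            # the server falls sick
replacement = replace_if_sick(web)
print(replacement)
```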

Infrastructure up, as a concept, simply means adding an orchestrator to the existing virtualization stack that we saw before, thereby enabling self-service and increasing agility through automation.

The cloud purists would not even consider these to be clouds, but there is no denying that they exist. This concept was created to bridge the chasm that was created by the radical shift in how applications were built in the service down cloud.

In this kind of cloud, the smallest unit that a user can request and get is a virtual machine. There are several Orchestrators that help provide these functionalities. Some of the notable ones include VMware vRealize Suite (https://www.vmware.com/in/products/vrealize-automation.html), Cisco CIAC (https://www.cisco.com/c/en/us/products/cloud-systems-management/intelligent-automation-cloud/index.html), and BMC Atrium (http://www.bmcsoftware.in/it-solutions/atrium-orchestrator.html), to name a few.

The way this is created is by adding a Cloud Orchestrator solution on top of an existing virtualization environment. This provides features such as self-service and billing/chargeback/showback.

The Orchestrator then performs cross-domain automation in order to provision virtual machines for the user. As you can see, in this case the life cycle management of the VM is automated, but the idea behind the provisioning has not changed much. In the event that you decide to delete the VM, more likely than not, all the associated resources also get deleted.

An infra-up cloud is normally characterized by the presence of a workflow engine, which allows integration with different enterprise systems. It should be no surprise that most infra-up clouds are private. There are some exceptions, for example the Vodafone Secure Cloud, which is a public cloud that runs on an infra-up approach.
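To make the infra-up model more concrete, here is a minimal sketch of a workflow engine that provisions a virtual machine by running one step per domain. The step functions, the `ToyOrchestrator` class, and the field names are all hypothetical; they are not taken from vRealize, CIAC, Atrium, or any other product.

```python
from typing import Callable, Dict, List

Request = Dict[str, str]


def reserve_ip(req: Request) -> None:
    # Network domain (for example, an IPAM system); placeholder address
    # taken from the range reserved for documentation.
    req["ip"] = "192.0.2.10"


def allocate_disk(req: Request) -> None:
    # Storage domain.
    req["disk"] = f"{req['name']}-disk0"


def create_vm(req: Request) -> None:
    # Virtualization domain.
    req["state"] = "running"


def register_in_cmdb(req: Request) -> None:
    # Enterprise tooling integration (for example, a CMDB).
    req["cmdb_record"] = f"ci-{req['name']}"


# The workflow is an ordered list of steps that cuts across the domains.
PROVISION_WORKFLOW: List[Callable[[Request], None]] = [
    reserve_ip,
    allocate_disk,
    create_vm,
    register_in_cmdb,
]


class ToyOrchestrator:
    """Hypothetical infra-up orchestrator; the unit it hands out is a whole VM."""

    def __init__(self) -> None:
        self.inventory: Dict[str, Request] = {}

    def provision(self, name: str) -> Request:
        req: Request = {"name": name}
        for step in PROVISION_WORKFLOW:
            step(req)
        self.inventory[name] = req
        return req

    def delete(self, name: str) -> None:
        # Deleting the VM removes its IP, disk, and CMDB record with it;
        # the blocks have no life cycle of their own.
        del self.inventory[name]


orchestrator = ToyOrchestrator()
vm = orchestrator.provision("app01")
print(vm)
orchestrator.delete("app01")
print(orchestrator.inventory)
```

Note the contrast with the service down model: the user never handles the disk or the IP address as separate resources, so they cannot be detached and reused once the VM is gone.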

Since this might be a new concept for some of us, let's look at a comparison between service down and infra-up and the features they provide by default:

Note

The following table shows only what is offered by default; most capabilities that are not present can be added through automation or customization in both kinds of cloud.

| Features | Infra-up | Service down |
| --- | --- | --- |
| Workflow engine | Present | Not present |
| Infrastructure as code | Not present | Present |
| Self-service | Present | Present |
| API endpoints | Present | Present |
| Smallest unit that can be consumed | Virtual machine | Compute as a Service, Network as a Service, Storage as a Service, and so on |
| Chargeback/billing | Not very well-developed/monthly | Hourly, per-minute, or per-second |
| Integration ability with existing enterprise tools (for example, IPAM, CMDB, and so on) | Present | Not present |
| PaaS services (DBaaS, Containers as a Service) | Not present | Present |
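The chargeback row of the table is easier to appreciate with a small worked example. The rate of 0.10 per hour and the 95-minute runtime below are made-up numbers used purely for illustration; the sketch compares a meter that rounds up to whole hours with one that bills per second.

```python
import math

HOURLY_RATE = 0.10  # hypothetical price per hour, not a real provider's rate


def charge_hourly(runtime_seconds: int, hourly_rate: float) -> float:
    """Bill in whole hours, rounding any started hour up."""
    hours = math.ceil(runtime_seconds / 3600)
    return round(hours * hourly_rate, 4)


def charge_per_second(runtime_seconds: int, hourly_rate: float) -> float:
    """Bill only for the seconds actually consumed."""
    return round(runtime_seconds * hourly_rate / 3600, 4)


runtime = 95 * 60  # a VM that ran for 95 minutes
print("hourly meter:    ", charge_hourly(runtime, HOURLY_RATE))
print("per-second meter:", charge_per_second(runtime, HOURLY_RATE))
```

With these assumed numbers, the hourly meter bills two full hours while the per-second meter bills roughly 1.58 hours, which is why finer-grained billing matters for short-lived workloads.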

This is a very well-known piece of the cloud. Based on the services that a cloud offers, it could be divided into the following:

- Infrastructure as a Service (IaaS)

- Platform as a Service (PaaS)

- Software as a Service (SaaS)

While I am sure that we are familiar with these demographics of the cloud, let us take a look at the differences:

As we move from the on-premises model to IaaS, PaaS, and SaaS, the ability to customize the software decreases and standardization increases. This has led a lot of independent software vendors (ISVs) to rewrite their applications in a multi-tenanted model and provide them to customers in an as-a-service model.

When developing bespoke applications, organizations are choosing PaaS and IaaS instead of the traditional model, which is helping them increase agility and reduce the time to market.

Some examples of this cut of data are as follows:

| Cloud Type | Examples |
| --- | --- |
| IaaS | OpenStack, AWS, Azure, GCP, and so on |
| PaaS | Cloud Foundry, AWS, Azure, GCP, and so on |
| SaaS | ServiceNow, Force.com, and so on |

Yes, you read that right. AWS, Azure, and GCP all have IaaS and PaaS services (and arguably some SaaS services also, but more on that later).

This demographic is also extremely well known. Depending on who the cloud is created for, or who is allowed to use the services from a cloud, they can be categorized into three types:

- Public: Anyone is allowed to access

- Private: A certain set of users are allowed to access

- Community: A group of similar enterprises are allowed to access

This is easily understood by using a road analogy. A highway, for example, can be used by everyone, thereby making it public. A road inside the grounds of a palace would be considered a private road. A road inside a gated community would be considered a community road.

Now, since we have that out of the way, let us take a look at a few examples:

- Public cloud: AWS, Azure, GCP, RackSpace (OpenStack), and so on

- Private cloud: Company X's vRealize Environment

- Community cloud: AWS government clouds and so on

As you will have realized, the three demographics are not mutually exclusive, which means we can use all three terms in order to describe the type of cloud.

Now that we know the different combinations, let's try and answer the following questions:

- Are all the infra-up clouds private?

- Conversely, are all the service down clouds public?

- Can infrastructure up clouds be used only to serve IaaS?

You get the idea! Now, let's take a close look at the answers to these questions, and then try to decipher what circumstances might impact our decision of which cloud to use.

As a statement of fact, not all infra-up clouds are private, but most of them are. As an exception to this rule, a public cloud provided by Vodafone runs on VMware vRealize Suite, thereby making it an infrastructure up cloud.

The same applies to service down clouds. They are mostly used as public clouds; however, a private OpenStack deployment is still a service down cloud. As an example, Cisco, SAP, Intel, AT&T, and several other companies have massively scalable private clouds running on OpenStack (thereby making them service down clouds).

While infrastructure up cloud orchestrators technically provide IaaS by default, there have been some who take it to the next level by providing Database as a Service (DBaaS) and so on.
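Because the three demographics are independent axes, each cloud can be described by one value per axis, and the three questions above become simple queries. The following sketch uses the examples named in this section; the last catalogue entry is a hypothetical infra-up cloud extended with DBaaS, and the service sets assigned to each entry are simplifying assumptions.

```python
from dataclasses import dataclass
from enum import Enum
from typing import FrozenSet, List


class Abstraction(Enum):
    SERVICE_DOWN = "service down"
    INFRA_UP = "infra-up"


class Consumer(Enum):
    PUBLIC = "public"
    PRIVATE = "private"
    COMMUNITY = "community"


@dataclass(frozen=True)
class Cloud:
    name: str
    abstraction: Abstraction
    consumer: Consumer
    services: FrozenSet[str]  # subset of {"IaaS", "PaaS", "SaaS"}


CLOUDS: List[Cloud] = [
    Cloud("AWS", Abstraction.SERVICE_DOWN, Consumer.PUBLIC,
          frozenset({"IaaS", "PaaS"})),
    Cloud("Private OpenStack deployment", Abstraction.SERVICE_DOWN,
          Consumer.PRIVATE, frozenset({"IaaS"})),
    Cloud("Vodafone public cloud on vRealize", Abstraction.INFRA_UP,
          Consumer.PUBLIC, frozenset({"IaaS"})),
    Cloud("Company X's vRealize environment", Abstraction.INFRA_UP,
          Consumer.PRIVATE, frozenset({"IaaS"})),
    # Hypothetical entry: an infra-up cloud customized to offer DBaaS.
    Cloud("Infra-up cloud with DBaaS add-on", Abstraction.INFRA_UP,
          Consumer.PRIVATE, frozenset({"IaaS", "PaaS"})),
]

infra_up = [c for c in CLOUDS if c.abstraction is Abstraction.INFRA_UP]
service_down = [c for c in CLOUDS if c.abstraction is Abstraction.SERVICE_DOWN]

all_infra_up_private = all(c.consumer is Consumer.PRIVATE for c in infra_up)
all_service_down_public = all(c.consumer is Consumer.PUBLIC for c in service_down)
infra_up_only_iaas = all(c.services == {"IaaS"} for c in infra_up)

print(all_infra_up_private, all_service_down_public, infra_up_only_iaas)
```

All three queries evaluate to false for this catalogue, which matches the answers given in the text.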

The following section attempts to provide a few circumstances and some points you should consider when choosing the right kind of cloud:

- DevOps/NoOps:

  - When you want to give more control to the development team than to the infra team, choose a service down cloud

  - Depending on your current data center footprint, cost requirements, compliance requirements, scaling requirements, and so on, choose a public or a private cloud

  - Depending on how much you need to customize the platform, use IaaS (more customization) or PaaS (less customization)

- Self-service:

  - Depending on complexity, choose infra-up (less complex) or service down (more complex)

- Integration of enterprise tools:

  - If this is your primary motive, then infra-up is the most likely choice

  - A private cloud is then effectively the only option, because public infra-up clouds are few and don't allow very much customization

- Moving to next-generation/advanced architectures:

  - If you intend to move to next-generation architectures, including the likes of containerization, cognitive services, machine learning, artificial intelligence, and so on, choose a service down, hyperscale public cloud (for example, AWS, Azure, or GCP)
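The considerations above can be condensed into a small decision helper. This is only a sketch: the `Requirements` fields, the `recommend` function, and especially the order in which the rules are checked are assumptions made for illustration, and a real decision would weigh cost, compliance, and scale as well.

```python
from dataclasses import dataclass
from typing import NamedTuple


@dataclass
class Requirements:
    developer_control: bool = False            # DevOps/NoOps
    enterprise_tool_integration: bool = False  # IPAM, CMDB, and so on
    next_gen_architectures: bool = False       # containers, ML, AI, and so on


class Recommendation(NamedTuple):
    abstraction: str
    consumer: str


def recommend(req: Requirements) -> Recommendation:
    """Map a primary motive to a kind of cloud (rule order is an assumption)."""
    if req.enterprise_tool_integration:
        return Recommendation("infra-up", "private")
    if req.next_gen_architectures:
        return Recommendation("service down", "public (hyperscale)")
    if req.developer_control:
        # Public versus private depends on footprint, cost, and compliance.
        return Recommendation("service down", "public or private")
    # Plain, low-complexity self-service.
    return Recommendation("infra-up", "public or private")


print(recommend(Requirements(enterprise_tool_integration=True)))
print(recommend(Requirements(next_gen_architectures=True)))
print(recommend(Requirements(developer_control=True)))
```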

We should try and escape the biases posed by the Law of the instrument, as stated in the following, and design clouds as per the needs and strategies of the organization, rather than what we know of them:

I suppose it is tempting, if the only tool you have is a hammer, to treat everything as if it were a nail.

- Abraham Maslow, 1966

We can now appreciate that the different demographics are not mutually exclusive, and therefore neither are the products in the field.

The following image shows different products in the field, and the area that they predominantly play in. We will take a look at these in detail in the next chapter, including the alliances that these products have formed in order to compete in the hybrid cloud world:

Now, to answer the question that we were asking in this chapter, the simplest definition of the hybrid cloud is an environment that works with any combination of two or more of these different demographics:

The most widely accepted definition is that the hybrid cloud is an environment comprising a private cloud component (on-premises) and a public cloud component (third party).
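The two definitions can be contrasted in code. The function names below are hypothetical, and the sketch assumes that an environment is described simply by the set of cloud types it contains.

```python
from typing import Set


def is_hybrid_simple(cloud_types: Set[str]) -> bool:
    """Simplest definition: any combination of two or more different types."""
    return len(cloud_types) >= 2


def is_hybrid_common(cloud_types: Set[str]) -> bool:
    """Most widely accepted definition: a private and a public component."""
    return {"private", "public"} <= cloud_types


on_prem_plus_public = {"private", "public"}
public_plus_community = {"public", "community"}

print(is_hybrid_simple(on_prem_plus_public), is_hybrid_common(on_prem_plus_public))
print(is_hybrid_simple(public_plus_community), is_hybrid_common(public_plus_community))
```

The second environment shows where the definitions diverge: it combines two types, but it has no private component.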

In this chapter, we took a look at the different ways clouds were organized, their characteristics, and the use cases.

In the remainder of this book, we will learn to architect hybrid clouds in a variety of ways, such as using a cloud management platform, using containers, and so on. For most of the book, we will be using AWS as the public cloud and OpenStack as our private cloud. We will also look at different concepts of architecting these components and samples for OpenStack and AWS.
