Introduction to DevOps

Over the past few years, the software delivery cycle has been moving increasingly fast, while at the same time application deployment has become more and more complicated. This increases the workload of all roles involved in the release cycle, including software developers, Quality Assurance (QA) teams, and IT operators. In order to deal with rapidly-changing software systems, a new concept called DevOps was introduced in 2009, which is dedicated to helping the whole software delivery pipeline evolve in order to make it faster and more robust.

This chapter covers the following topics:

- How has the software delivery methodology changed?

- What is a microservices architecture? Why do people choose to adopt this architecture?

- What is DevOps? How can it make software systems more resilient?

Software delivery challenges

The Software Development Life Cycle (SDLC), or the way in which we build applications and deliver them to the market, has evolved significantly over time. In this section, we'll focus on the changes made and why.

Waterfall and static delivery

Back in the 1990s, software was delivered in a static way—using a physical floppy disk or CD-ROM. The SDLC always took years per cycle, because it wasn't easy to (re)deliver applications to the market.

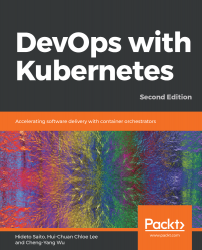

At that time, one of the major software development methodologies was the waterfall model. This is made up of various phases, as shown in the following diagram:

Once one phase was started, it was hard to go back to the previous phase. For example, after starting the Implementation phase, we wouldn't be able to go back to the Design phase to fix a technical expandability issue, because any changes would impact the overall schedule and cost. Everything was hard to change, so new designs would be relegated to the next release cycle.

The waterfall method had to coordinate precisely with every department, including development, logistics, marketing, and distributors. The waterfall model and static delivery sometimes took several years and required tremendous effort.

Agile and digital delivery

A few years later, when the internet became more widely used, the software delivery method changed from physical to digital, using methods such as online downloads. For this reason, many software companies (also known as dot-com companies) tried to figure out how to shorten the SDLC process in order to deliver software that was capable of beating their competitors.

Many developers started to adopt new methodologies, such as incremental, iterative, or agile models, in the hope that these could help shorten the time to market. This meant that if new bugs were found, these new methods could deliver patches to customers via electronic delivery. From Windows 98, Microsoft Windows updates were also introduced in this manner.

In agile or digital models, software developers write relatively small modules, instead of the entire application. Each module is delivered to a QA team, while the developers continue to work on new modules. When the desired modules or functions are ready, they will be released as shown in the following diagram:

This model makes the SDLC cycle and software delivery faster and easily adjustable. The cycle ranges from a few weeks to a few months, which is short enough to make quick changes if necessary.

Although this model was favored by the majority at the time, application software delivery meant that software binaries, often in the form of an EXE program, had to be installed and run on the customer's PC. The infrastructure (such as the server or the network), however, was very static and had to be set up beforehand. Therefore, this model didn't tend to include the infrastructure in the SDLC.

Software delivery on the cloud

A few years later, smartphones (such as the iPhone) and wireless technology (such as Wi-Fi and 4G networks) became popular and widely used. Application software was transformed from binaries to online services. The web browser became the interface of application software, which meant that it no longer required installation. The infrastructure became very dynamic: in order to accommodate rapidly-changing application requirements, it now had to be able to grow in both capacity and performance.

This is made possible through virtualization technology and a Software Defined Network (SDN). Now, cloud services, such as Amazon Web Services (AWS), Google Cloud Platform (GCP), and Microsoft Azure, are often used. These can create and manage on-demand infrastructures easily.

The infrastructure is now one of the most important components within the scope of the SDLC. Because applications are installed and operated on the server side, rather than on a client-side PC, the software and service delivery cycle takes between just a few days and a few weeks.

Continuous integration

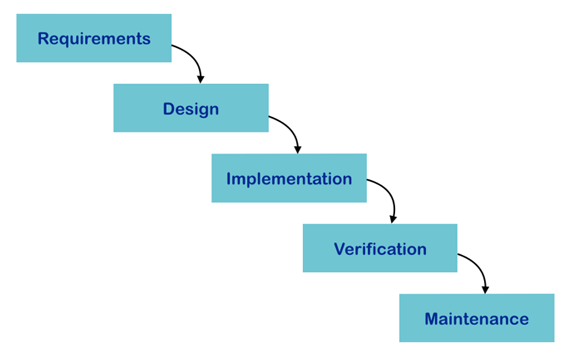

As mentioned previously, the software delivery environment is constantly changing, while the delivery cycle is getting increasingly shorter. In order to achieve this rapid delivery with a higher quality, developers and QA teams have recently started to adopt automation technologies. One of these is Continuous Integration (CI). This includes various tools, such as Version Control Systems (VCSs), build servers, and testing automation tools.

VCSs help developers keep track of the software source code changes in central servers. They preserve code revisions and prevent the source code from being overwritten by different developers. This makes it easier to keep the source code consistent and manageable for every release. Centralized build servers connect to VCSs to retrieve the source code, either periodically or automatically whenever a developer pushes new code to the VCS. They then trigger a new build. If the build fails, the build server notifies the developer rapidly, which helps when someone adds broken code to the VCS. Testing automation tools are also integrated with the build server. These invoke the unit test program after the build succeeds, then notify the developer and QA team of the result. This helps to identify whether somebody has written buggy code and stored it in the VCS.
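The build-then-test flow described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not how any particular build server works: `run_pipeline`, the step names, and the `notify` callback are hypothetical, and each step is a plain Python callable standing in for a real build or unit test command.

```python
from typing import Callable, List, Tuple

# A step is a (name, action) pair. The action returns True on success.
# In a real pipeline the action would invoke a build or test command.
Step = Tuple[str, Callable[[], bool]]


def run_pipeline(steps: List[Step], notify: Callable[[str], None]) -> bool:
    """Run the steps in order and stop at the first failure.

    Every outcome is passed to notify, which stands in for the message
    a build server would send to the developer and the QA team.
    """
    for name, action in steps:
        if not action():
            notify(f"{name} failed")
            return False
        notify(f"{name} succeeded")
    return True
```

Calling `run_pipeline([("build", build), ("unit tests", test)], notify=print)` with two hypothetical callables would report each result in order and skip the tests if the build fails.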

The entire CI flow is shown in the following diagram:

CI helps both developers and QA teams to not only increase the quality, but also shorten the cycle of packaging an application or a module. In the age of electronic delivery to the customer, CI was more than enough. Today, however, delivery to the customer means deploying the application to the server.

Continuous delivery

CI plus deployment automation is an ideal process for server-side applications to provide a service to customers. However, there are some technical challenges that need to be resolved, such as how to deploy the software to the server; how to gracefully shut down the existing application; how to replace and roll back the application; how to upgrade or replace system libraries that also need to be updated; and how to modify the user and group settings in the OS if necessary.

An infrastructure includes servers and networks. We normally have different environments for different software release stages, such as development, QA, staging, and production. Each environment has its own server configuration and IP ranges.

Continuous Delivery (CD) is a common way of resolving the previously mentioned challenges. This is a combination of CI, configuration management, and orchestration tools:

Configuration management

Configuration management tools help to configure OS settings, such as creating a user or group, or installing system libraries. They also act as an orchestrator, which keeps multiple managed servers consistent with our desired state.

It's not a programming script, because a script is not necessarily idempotent. This means that if we execute a script twice, we might get an error, such as if we are trying to create the same user twice. Configuration management tools, however, watch the state, so if a user is created already, a configuration management tool wouldn't do anything. If we delete a user accidentally or even intentionally, the configuration management tool would create the user again.
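The difference between a one-off script and an idempotent, state-watching step can be illustrated with a small Python sketch. It assumes a plain in-memory set in place of the real OS user database, and the function names are hypothetical; it does not reflect the API of any actual configuration management tool.

```python
from typing import Set


def create_user_script(users: Set[str], name: str) -> None:
    """Script-style step: it fails if it is executed a second time."""
    if name in users:
        raise ValueError(f"user {name!r} already exists")
    users.add(name)


def ensure_user(users: Set[str], name: str) -> bool:
    """Idempotent step: compare the current state with the desired state.

    Returns True if a change was needed, False if the user already existed.
    """
    if name in users:
        return False  # desired state already reached, so nothing to do
    users.add(name)
    return True
```

Running `ensure_user` repeatedly is safe: the first call creates the user, later calls do nothing, and a call made after the user has been deleted creates it again.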

Configuration management tools also support the deployment or installation of software to the server. We simply describe what kind of software package we need to install, then the configuration management tool will trigger the appropriate command to install the software package accordingly.

As well as this, if you tell a configuration management tool to stop your application, to download and replace it with a new package (if applicable), and restart the application, it'll always be up-to-date with the latest software version. Via the configuration management tool, you can also perform blue-green deployments easily.

Infrastructure as code

The configuration management tool supports not only a bare metal environment or a VM, but also cloud infrastructure. If you need to create and configure the network, storage, and VM on the cloud, the configuration management tool helps to set up the cloud infrastructure from the configuration file, as shown in the following diagram:

Configuration management has some advantages compared to a Standard Operation Procedure (SOP). It helps to maintain a configuration file via VCS, which can trace the history of all of the revisions.

It also helps to replicate the environment. For example, let's say we want to create a disaster recovery site in the cloud. If you follow the traditional approach, which involves using the SOP to build the environment manually, it's hard to predict and detect human or operational errors. On the other hand, if we use the configuration management tool, we can build an environment in the cloud quickly and automatically.

Orchestration

The orchestration tool is part of the configuration management tool set. However, this tool is more intelligent and dynamic with regard to configuring and allocating cloud resources. The orchestration tool manages several server resources and networks. Whenever the administrator wants to increase the application and network capacity, the orchestration tool can determine whether a server is available and can then deploy and configure the application and the network automatically. Although the orchestration tool is not included in SDLC, it helps the capacity management in the CD pipeline.

To conclude, the SDLC has evolved significantly such that we can now achieve rapid delivery using various processes, tools, and methodologies. Now, software delivery takes place anywhere and anytime, and software architecture and design is capable of producing large and rich applications.

The microservices trend

As mentioned previously, software architecture and design has continued to evolve based on the target environment and the volume of the application. This section will discuss the history and evolution of software design.

Modular programming

As the size of applications increases, the job of developers is to try to divide it into several modules. Each module aims to be independent and reusable, and each is maintained by different developer teams. The main application simply initializes, imports, and uses these modules. This makes the process of building a larger application more efficient.

The following example shows the dependencies for nginx (https://www.nginx.com) on CentOS 7. It indicates that nginx uses OpenSSL (libssl.so.10, libcrypto.so.10), the POSIX thread library (libpthread.so.0), the PCRE regular expression library (libpcre.so.1), the zlib compression library (libz.so.1), the GNU C library (libc.so.6), and so on:

    $ /usr/bin/ldd /usr/sbin/nginx
        linux-vdso.so.1 => (0x00007ffd96d79000)
        libdl.so.2 => /lib64/libdl.so.2 (0x00007fd96d61c000)
        libpthread.so.0 => /lib64/libpthread.so.0 (0x00007fd96d400000)
        libcrypt.so.1 => /lib64/libcrypt.so.1 (0x00007fd96d1c8000)
        libpcre.so.1 => /lib64/libpcre.so.1 (0x00007fd96cf67000)
        libssl.so.10 => /lib64/libssl.so.10 (0x00007fd96ccf9000)
        libcrypto.so.10 => /lib64/libcrypto.so.10 (0x00007fd96c90e000)
        libz.so.1 => /lib64/libz.so.1 (0x00007fd96c6f8000)
        libprofiler.so.0 => /lib64/libprofiler.so.0 (0x00007fd96c4e4000)
        libc.so.6 => /lib64/libc.so.6 (0x00007fd96c122000)
        ...

Package management

The Java programming language, and several scripting languages such as Python, Ruby, and JavaScript, have their own module or package management tools. Java, for example, has Maven (http://maven.apache.org), Python uses pip (https://pip.pypa.io), RubyGems (https://rubygems.org) is used for Ruby, and npm (https://www.npmjs.com) is used for JavaScript.

Package management tools not only allow you to download the necessary packages, but can also register the module or package that you implement. The following screenshot shows the Maven repository for the AWS SDK:

When you add dependencies to your application, Maven downloads the necessary packages. The following screenshot is the result you get when you add the aws-java-sdk dependency to your application:

Modular programming helps you to accelerate software development speed. However, applications nowadays have become more sophisticated. They require an ever-increasing number of modules, packages, and frameworks, and new features and logic are continuously added. Typical server-side applications usually use authentication methods such as LDAP, connect to a centralized database such as RDBMS, and then return the result to the user. Developers have recently found themselves required to utilize software design patterns in order to accommodate a bunch of modules in an application.

The MVC design pattern

One of the most popular application design patterns is Model-View-Controller (MVC). This defines three layers: the Model layer is in charge of data queries and persistence, such as loading and storing data to a database; the View layer is in charge of the User Interface (UI) and the Input/Output (I/O); and the Controller layer is in charge of business logic, which lies in between the View and the Model:

There are some frameworks that help developers to make MVC easier, such as Struts (https://struts.apache.org/), Spring MVC (https://projects.spring.io/spring-framework/), Ruby on Rails (http://rubyonrails.org/), and Django (https://www.djangoproject.com/). MVC is one of the most successful software design patterns, and is used as the foundation of modern web applications and services.
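The three MVC layers can be sketched without any framework. The following Python example is a minimal illustration only: the class names are hypothetical, and a dictionary stands in for the database that a real Model layer would query.

```python
from typing import Dict, Optional


class UserModel:
    """Model: data queries and persistence (a dict stands in for a database)."""

    def __init__(self) -> None:
        self._rows: Dict[int, str] = {}

    def save(self, user_id: int, name: str) -> None:
        self._rows[user_id] = name

    def find(self, user_id: int) -> Optional[str]:
        return self._rows.get(user_id)


class UserView:
    """View: turns data into the output that is shown to the user."""

    def render(self, name: Optional[str]) -> str:
        if name is None:
            return "User not found"
        return f"Hello, {name}!"


class UserController:
    """Controller: business logic that sits between the View and the Model."""

    def __init__(self, model: UserModel, view: UserView) -> None:
        self.model = model
        self.view = view

    def show(self, user_id: int) -> str:
        return self.view.render(self.model.find(user_id))
```

Because each layer only talks to its neighbor through a small interface, different developers can work on the Model, the View, and the Controller at the same time.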

MVC defines a borderline between every layer, which allows several developers to jointly develop the same application. However, it also causes some negative side effects. The size of the source code within the application keeps getting bigger. This is because the database code (the Model), the presentation code (the View), and the business logic (the Controller) are all within the same VCS repository. This eventually has an impact on the software development cycle. This type of application is called a monolithic application. It contains a lot of code that builds a giant EXE or WAR file.

Monolithic applications

There's no concrete measurement that we can use to define an application as monolithic, but a typical monolithic app tends to have more than 50 modules or packages, more than 50 database tables, and requires more than 30 minutes to build. If we need to add or modify one of those modules, the changes made might affect a lot of code. Therefore, developers try to minimize code changes within the application. However, this reluctance can make developers hesitant to maintain the application code, so problems aren't dealt with in a timely manner. For this reason, developers now tend to divide monolithic applications into smaller pieces and connect them over the network.

Remote procedure call

In fact, dividing an application into small pieces and connecting them via a network was first attempted back in the 1990s, when Sun Microsystems introduced the Sun Remote Procedure Call (SunRPC). This allows you to use a module remotely. One of the most popular implementations is the Network File System (NFS). The NFS client and the NFS server can communicate over the network, even if the server and the client use different CPUs and OSes.

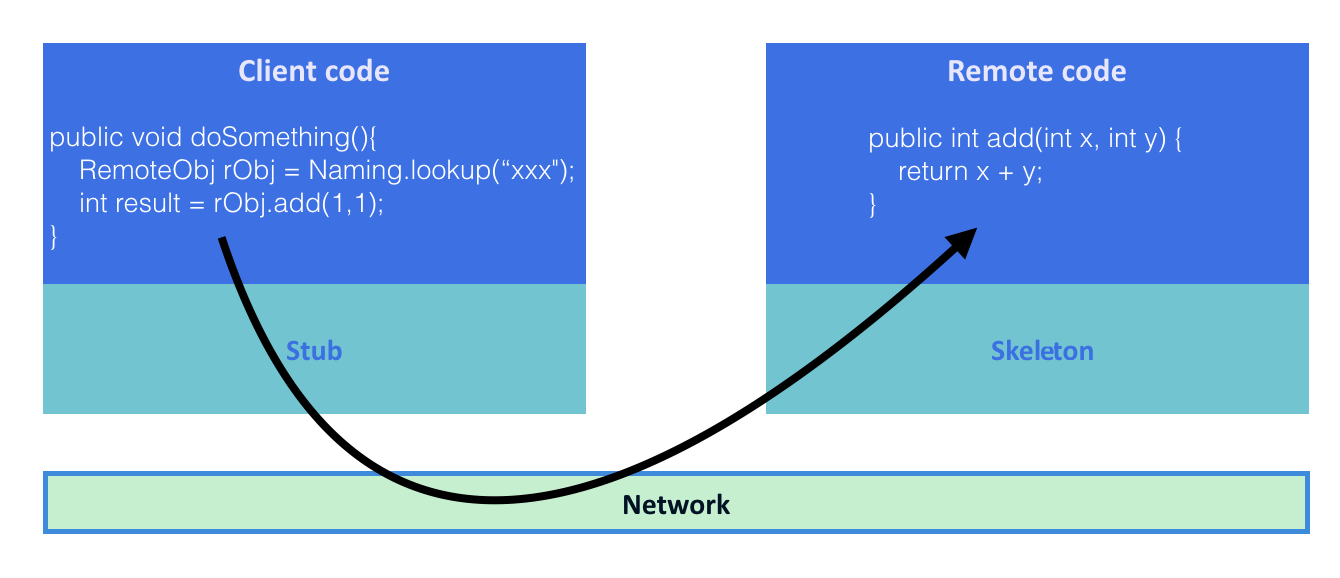

Some programming languages also support RPC-style functionality. UNIX and the C language have the rpcgen tool, which generates a stub code that contains some complicated network communication code. The developer can use this over the network to avoid difficult network-layer programming.
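To show what a stub hides from the caller, here is a small in-process Python sketch. It is not rpcgen output and does not use the SunRPC wire format: JSON is used for marshalling, a direct function call stands in for the network, and the names `RpcServer` and `Stub` are hypothetical.

```python
import json
from typing import Any, Callable, Dict


class RpcServer:
    """Server side: unmarshals a request, runs the procedure, marshals the result."""

    def __init__(self) -> None:
        self._procedures: Dict[str, Callable[..., Any]] = {}

    def register(self, name: str, func: Callable[..., Any]) -> None:
        self._procedures[name] = func

    def handle(self, request: bytes) -> bytes:
        call = json.loads(request.decode("utf-8"))
        result = self._procedures[call["procedure"]](*call["args"])
        return json.dumps({"result": result}).encode("utf-8")


class Stub:
    """Client side: makes a remote procedure look like a local method call."""

    def __init__(self, transport: Callable[[bytes], bytes]) -> None:
        # The transport takes request bytes and returns response bytes.
        # Here it is a direct call; a real stub would use a network socket.
        self._transport = transport

    def __getattr__(self, name: str) -> Callable[..., Any]:
        def remote_call(*args: Any) -> Any:
            request = json.dumps({"procedure": name, "args": list(args)})
            response = self._transport(request.encode("utf-8"))
            return json.loads(response.decode("utf-8"))["result"]

        return remote_call
```

After `server.register("add", lambda a, b: a + b)`, a client holding `Stub(server.handle)` can simply call `stub.add(2, 3)`; the marshalling and transport stay out of the caller's code.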

Java has the Java Remote Method Invocation (RMI), which is similar to the Sun RPC, but specific to the Java language. The RMI Compiler (RMIC) generates the stub code that connects remote Java processes to invoke the method and return a result. The following diagram shows the procedure flow of the Java RMI:

Objective-C has distributed objects and .NET has remoting, both of which work in a similar fashion. Most modern programming languages have RPC capabilities out of the box. These RPC designs are capable of dividing a single application into multiple processes (programs). Individual programs can have separate source code repositories. While the RPC designs worked well, machine resources (CPU and memory) were limited during the 1990s and early 2000s. Another disadvantage was that these designs assumed the same programming language throughout and targeted a client/server architecture rather than a distributed architecture. In addition, security received little consideration when these designs were developed, so they are not recommended for use over a public network.

In the early 2000s, the first web services that used SOAP (over HTTP/SSL) as the data transport were developed. These used XML for data presentation and the Web Services Description Language (WSDL) to define services. Universal Description, Discovery, and Integration (UDDI) was then used as the service registry to look up a web services application. However, because machine resources were not plentiful at the time and web services were complex to program and maintain, they were not widely accepted by developers.

RESTful design

In the 2010s, machines and even smartphones were able to access plenty of CPU resources, and network bandwidths of a few hundred Mbps were everywhere. Developers started to utilize these resources to make application code and system structures as easy as possible, making the software development cycle quicker.

Nowadays, there are sufficient hardware resources available, so it makes sense to use HTTP/SSL as the RPC transport. In addition, from experience, developers choose to make this process easier as follows:

- By making HTTP and SSL/TLS the standard transport

- By using HTTP methods, such as POST, GET, PUT, or DELETE, for Create/Read/Update/Delete (CRUD) operations

- By using the URI as the resource identifier; for example, the user with the ID 123 would have the URI /user/123/

- By using JSON for standard data presentation
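The conventions in this list can be illustrated with a small Python sketch that maps an HTTP method and a URI to an operation on a user resource and exchanges JSON. It is a simplified assumption rather than a real web server: `handle` is a hypothetical function, no network is involved, and an in-memory dictionary replaces the data store. Creating a resource with POST directly on /user/123/ is a simplification made for this example.

```python
import json
from typing import Dict, Optional, Tuple

# In-memory resource store, keyed by the user ID taken from the URI.
users: Dict[str, dict] = {}


def handle(method: str, uri: str, body: Optional[str] = None) -> Tuple[int, str]:
    """Return an (HTTP status code, JSON body) pair for a request."""
    parts = uri.strip("/").split("/")
    if len(parts) != 2 or parts[0] != "user":
        return 404, json.dumps({"error": "not found"})
    user_id = parts[1]

    if method == "POST":  # create
        if user_id in users:
            return 409, json.dumps({"error": "already exists"})
        users[user_id] = json.loads(body or "{}")
        return 201, json.dumps(users[user_id])

    if user_id not in users:
        return 404, json.dumps({"error": "not found"})
    if method == "GET":  # read
        return 200, json.dumps(users[user_id])
    if method == "PUT":  # update
        users[user_id] = json.loads(body or "{}")
        return 200, json.dumps(users[user_id])
    if method == "DELETE":  # delete
        del users[user_id]
        return 204, ""
    return 405, json.dumps({"error": "method not allowed"})
```

Because the contract consists only of HTTP methods, URIs, and JSON, the client that calls such a service can be written in any language.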

These concepts are known as Representational State Transfer (REST), or RESTful, design. They have been widely accepted by developers and have become the de facto standard of distributed applications. RESTful applications allow the use of any programming language, as they are HTTP-based. It is possible to have, for example, Java as the RESTful server and Python as the client.

RESTful design brings freedom and opportunities to the developer. It makes it easy to perform code refactoring, to upgrade a library, and even to switch to another programming language. It also encourages the developer to build a distributed modular design made up of multiple RESTful applications, which are called microservices.

If you have multiple RESTful applications, you might be wondering how to manage multiple source code repositories in a VCS and how to deploy multiple RESTful servers. However, CI and CD automation makes it easier to build and deploy multiple RESTful server applications. For this reason, the microservices design is becoming increasingly popular among web application developers.

Microservices

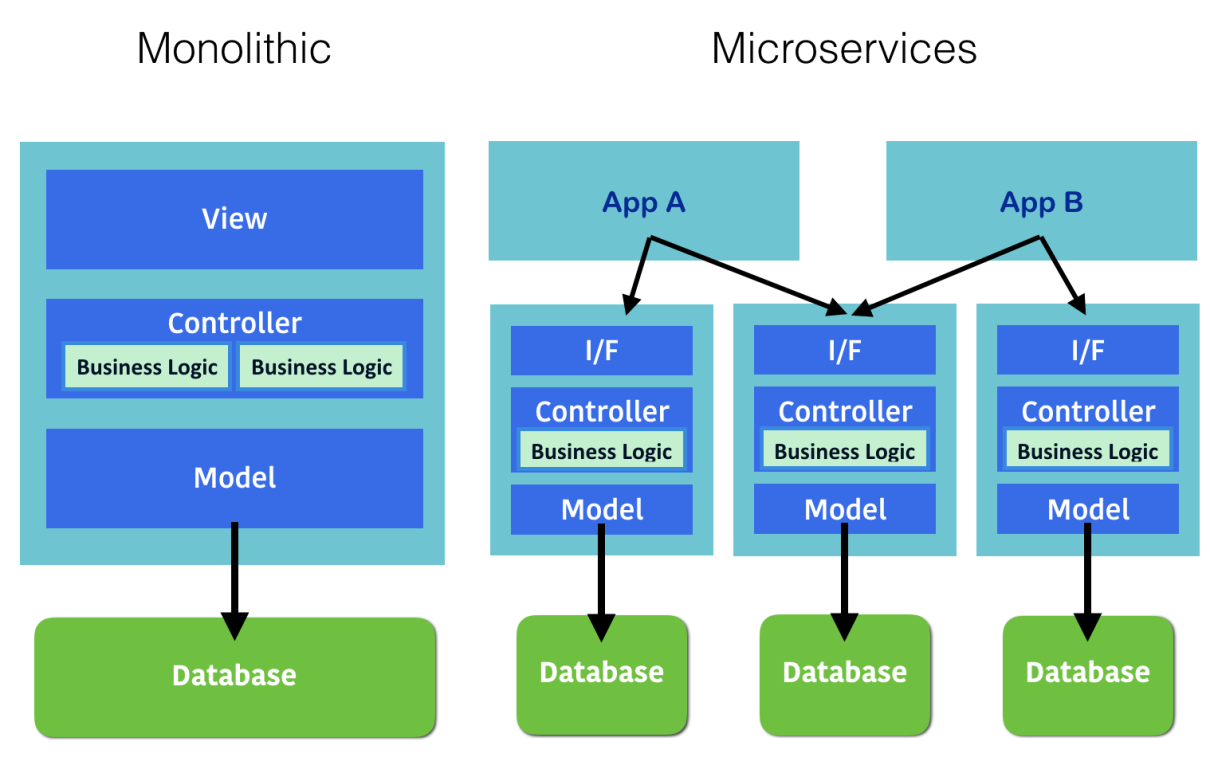

Although microservices have the word micro in their name, they are actually pretty heavy compared to applications from the 1990s or early 2000s. They use full stack HTTP/SSL servers and contain entire MVC layers.

The microservices design has the following advantages:

- Stateless: They don't store user sessions to the system, which helps to scale the application.

- No shared data store: Microservices should have their own data stores, such as databases. They shouldn't share these with other applications. This helps to encapsulate the backend database so that it is easier to refactor and update the database schema within a single microservice.

- Versioning and compatibility: Microservices may change and update the API, but they should define versions, such as /api/v1 and /api/v2, that have backward compatibility. This helps to decouple other microservices and applications.

- Integrate CI/CD: The microservice should adopt the CI and CD process to eliminate management effort.
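The versioning point in this list can be sketched as follows. This is a hypothetical example, not taken from any framework: the paths /api/v1/user and /api/v2/user, the handler names, and the returned fields are all assumptions. The idea is that v2 only adds fields, while v1 keeps serving the shape that existing clients rely on.

```python
from typing import Callable, Dict


def get_user_v1(user_id: int) -> dict:
    """Original response shape that existing clients depend on."""
    return {"id": user_id, "name": "Alice Smith"}


def get_user_v2(user_id: int) -> dict:
    """Newer response shape: keeps every v1 field and only adds new ones."""
    return {**get_user_v1(user_id), "first_name": "Alice", "last_name": "Smith"}


# Both versions stay routable, so old clients are not forced to upgrade.
ROUTES: Dict[str, Callable[[int], dict]] = {
    "/api/v1/user": get_user_v1,
    "/api/v2/user": get_user_v2,
}


def dispatch(path: str, user_id: int) -> dict:
    return ROUTES[path](user_id)
```

A client written against /api/v1 continues to work unchanged after /api/v2 is released, which is what decouples the release schedules of different microservices.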

There are some frameworks that can help to build microservice-based applications, such as Spring Boot (https://projects.spring.io/spring-boot/) and Flask (http://flask.pocoo.org). However, there are a lot of HTTP-based frameworks, so developers can feel free to choose any preferred framework or programming language. This is the beauty of the microservice design.

The following diagram is a comparison between the monolithic application design and the microservices design. It indicates that a microservice has the same structure as a monolithic application; they both contain an interface layer, a business logic layer, a model layer, and a data store. The difference, however, is that the application is constructed of multiple microservices. Different applications can share the same microservices:

The developer can add the necessary microservices and modify existing microservices with a rapid software delivery method that won't affect an existing application or service. This is an important breakthrough. It represents an entire software development environment and methodology that's widely accepted by developers.

Although CI and CD automation processes help to develop and deploy microservices, the number of resources involved, such as VMs, OSes, libraries, disk volumes, and networks, is far greater than for monolithic applications. There are some tools that can support these large automation environments on the cloud.

Automation and tools

As discussed previously, automation is the best way to achieve rapid software delivery. It solves the issue of managing microservices. However, automation tools aren't ordinary IT or infrastructure applications such as Active Directory, BIND (DNS), or Sendmail (MTA). In order to achieve automation, we need engineers who have both a developer skill set to write code, particularly in scripting languages, and an infrastructure operator skill set with knowledge related to VMs, networks, and storage operations.

DevOps is short for development and operations. It refers to the ability to build automation processes such as CI, infrastructure as code, and CD, and it relies on a set of DevOps tools for these automation processes.

Continuous integration tools

One of the popular VCS tools is Git (https://git-scm.com). A developer uses Git to check in and check out code all the time. There are various Git hosting services, including GitHub (https://github.com) and Bitbucket (https://bitbucket.org). These allow you to create and save your Git repositories and collaborate with other users over the internet. The following screenshot shows a sample pull request on GitHub:

There are many build servers to choose from. Jenkins (https://jenkins.io) is one of the most well-established applications, along with TeamCity (https://www.jetbrains.com/teamcity/). As well as build servers, you also have hosted services, otherwise known as Software as a Service (SaaS), such as Codeship (https://codeship.com) and Travis CI (https://travis-ci.org). SaaS can integrate with other SaaS tools. The build server is capable of invoking external commands, such as unit test programs. This makes the build server a key tool within the CI pipeline.

The following screenshot shows a sample build using Codeship. We check out the code from GitHub and invoke Maven for building (mvn compile) and unit testing (mvn test) our sample application:

Configuration management tools

There are a variety of configuration management tools available. The most popular ones include Puppet (https://puppet.com), Chef (https://www.chef.io), and Ansible (https://www.ansible.com).

AWS OpsWorks (https://aws.amazon.com/opsworks/) provides a managed Chef platform on AWS Cloud. The following screenshot shows a Chef recipe (configuration) of an installation of the Amazon CloudWatch Log agent using AWS OpsWorks. AWS OpsWorks automates the installation of the CloudWatch Log agent when launching an EC2 instance:

AWS CloudFormation (https://aws.amazon.com/cloudformation/) helps to achieve infrastructure as code. It supports the automation of AWS operations, so that we can perform the following functions:

- Creating a VPC

- Creating a subnet on VPC

- Creating an internet gateway on VPC

- Creating a routing table to associate a subnet to the internet gateway

- Creating a security group

- Creating a VM instance

- Associating a security group to a VM instance

The configuration of CloudFormation is written in JSON, as shown in the following screenshot:

CloudFormation supports parameterizing, so it's easy to create an additional environment with different parameters (such as VPC and CIDR) using a JSON file with the same configuration. It also supports the update operation. If we need to change a part of the infrastructure, there's no need to recreate the whole thing. CloudFormation can identify a delta of configuration and perform only the necessary infrastructure operations on your behalf.
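As a rough idea of what such a parameterized template might contain, the following Python sketch builds a template as a dictionary and serializes it to JSON. The resource types are standard CloudFormation types, but the logical names, the default CIDR ranges, and the instance type are placeholders chosen for this example, and the template has not been validated against AWS.

```python
import json


def build_template() -> dict:
    """Return a CloudFormation-style template covering the steps listed above."""
    ref = lambda name: {"Ref": name}  # shorthand for a CloudFormation reference
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        # Parameters let the same template create different environments.
        "Parameters": {
            "VpcCidr": {"Type": "String", "Default": "10.0.0.0/16"},
            "SubnetCidr": {"Type": "String", "Default": "10.0.1.0/24"},
            "ImageId": {"Type": "String"},
        },
        "Resources": {
            "Vpc": {"Type": "AWS::EC2::VPC",
                    "Properties": {"CidrBlock": ref("VpcCidr")}},
            "Subnet": {"Type": "AWS::EC2::Subnet",
                       "Properties": {"VpcId": ref("Vpc"),
                                      "CidrBlock": ref("SubnetCidr")}},
            "InternetGateway": {"Type": "AWS::EC2::InternetGateway"},
            "GatewayAttachment": {
                "Type": "AWS::EC2::VPCGatewayAttachment",
                "Properties": {"VpcId": ref("Vpc"),
                               "InternetGatewayId": ref("InternetGateway")}},
            "RouteTable": {"Type": "AWS::EC2::RouteTable",
                           "Properties": {"VpcId": ref("Vpc")}},
            "DefaultRoute": {
                "Type": "AWS::EC2::Route",
                "DependsOn": "GatewayAttachment",
                "Properties": {"RouteTableId": ref("RouteTable"),
                               "DestinationCidrBlock": "0.0.0.0/0",
                               "GatewayId": ref("InternetGateway")}},
            "SubnetRoute": {
                "Type": "AWS::EC2::SubnetRouteTableAssociation",
                "Properties": {"SubnetId": ref("Subnet"),
                               "RouteTableId": ref("RouteTable")}},
            "SecurityGroup": {
                "Type": "AWS::EC2::SecurityGroup",
                "Properties": {"GroupDescription": "Example security group",
                               "VpcId": ref("Vpc")}},
            "Instance": {
                "Type": "AWS::EC2::Instance",
                "Properties": {
                    "ImageId": ref("ImageId"),
                    "InstanceType": "t2.micro",  # placeholder instance type
                    "SubnetId": ref("Subnet"),
                    "SecurityGroupIds": [
                        {"Fn::GetAtt": ["SecurityGroup", "GroupId"]}],
                }},
        },
    }


template_json = json.dumps(build_template(), indent=2)
```

Supplying different values for `VpcCidr` and `SubnetCidr` when the stack is created would produce a second environment from the same file, which is the parameterization benefit described above.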

AWS CodeDeploy (https://aws.amazon.com/codedeploy/) is another useful automation tool that focuses on software deployment. It allows the user to define the deployment steps. You can carry out the following actions in the YAML file:

- Specify where to download and install the application

- Specify how to stop the application

- Specify how to install the application

- Specify how to start and configure an application

The following screenshot is an example of the AWS CodeDeploy configuration file, appspec.yml:

Monitoring and logging tools

Once you start to manage microservices using a cloud infrastructure, there are various monitoring tools that can help you to manage your servers.

Amazon CloudWatch is the built-in monitoring tool for AWS. No agent installation is needed; it automatically gathers metrics from AWS instances and allows the user to visualize these in order to carry out DevOps tasks. It also supports the ability to set an alert based on the criteria that you set. The following screenshot shows the Amazon CloudWatch metrics for an EC2 instance:

Amazon CloudWatch also supports the gathering of an application log. This requires us to install an agent on an EC2 instance. Centralized log management is useful when you need to start managing multiple microservice instances.

ELK is a popular stack whose name stands for Elasticsearch (https://www.elastic.co/products/elasticsearch), Logstash (https://www.elastic.co/products/logstash), and Kibana (https://www.elastic.co/products/kibana). Logstash aggregates the application log, transforms it to JSON format, and then sends it to Elasticsearch. Elasticsearch is a distributed JSON database. Kibana can visualize the data that's stored on Elasticsearch. The following Kibana example shows an nginx access log:
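To make the log-to-JSON transformation step more concrete, the following Python sketch parses one line in nginx's default combined access log format and emits a JSON document. It only illustrates the idea and is not how Logstash is implemented or configured; the function name `to_json` is hypothetical.

```python
import json
import re
from typing import Optional

# Field names follow the variables of nginx's "combined" log format.
LOG_PATTERN = re.compile(
    r'(?P<remote_addr>\S+) - (?P<remote_user>\S+) \[(?P<time_local>[^\]]+)\] '
    r'"(?P<request>[^"]*)" (?P<status>\d{3}) (?P<body_bytes_sent>\d+) '
    r'"(?P<http_referer>[^"]*)" "(?P<http_user_agent>[^"]*)"'
)


def to_json(line: str) -> Optional[str]:
    """Convert one access log line to a JSON string, or None if it doesn't match."""
    match = LOG_PATTERN.match(line)
    if match is None:
        return None
    record = match.groupdict()
    # Store numeric fields as numbers so that they can be aggregated later.
    record["status"] = int(record["status"])
    record["body_bytes_sent"] = int(record["body_bytes_sent"])
    return json.dumps(record)
```

Once each line is a structured JSON document, a store such as Elasticsearch can index fields like `status` individually, which is what makes per-field visualization possible.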

Grafana (https://grafana.com) is another popular visualization tool. It is commonly connected to time series databases such as Graphite (https://graphiteapp.org) or InfluxDB (https://www.influxdata.com). A time series database is designed to store data that's flat, de-normalized, and numeric, such as CPU usage or network traffic. Unlike an RDBMS, a time series database is optimized to save data space and to carry out faster queries on historical numeric data. Most DevOps monitoring tools use time series databases in the backend.

The following Grafana screenshot shows some Message Queue Server statistics:

Communication tools

When you start to use several DevOps tools, you need to go back and forth to visit several consoles to check whether the CI and CD pipelines work properly or not. In particular, the following events need to be monitored:

- Merging the source code to GitHub

- Triggering the new build on Jenkins

- Triggering AWS CodeDeploy to deploy the new version of the application

These events need to be tracked. If there's any trouble, DevOps teams need to discuss this with the developers and the QA team. However, communication can be a problem here, because DevOps teams are required to capture each event one by one and then pass it on as appropriate. This is inefficient.

There are some communication tools that help to integrate these different teams. They allow anyone to join to look at the events and communicate. Slack (https://slack.com) and HipChat (https://www.hipchat.com) are the most popular communication tools.

These tools also support integration with SaaS services so that DevOps teams can see events in a single chat room. The following screenshot is a Slack chat room that integrates with Jenkins:
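As a rough idea of how a pipeline event could reach a chat room, the following Python sketch prepares an HTTP request in the style of a Slack incoming webhook, which accepts a JSON payload containing a `text` field. The function name and the webhook URL in the usage note are placeholders, and the request is only built here, not sent.

```python
import json
import urllib.request


def build_notification(webhook_url: str, event: str) -> urllib.request.Request:
    """Prepare a POST request that carries a pipeline event as a chat message."""
    payload = json.dumps({"text": event}).encode("utf-8")
    return urllib.request.Request(
        webhook_url,
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
```

A build job could call `urllib.request.urlopen(build_notification(url, "Build #42 succeeded"))`, where `url` is the webhook address issued for the team's channel, so that everyone sees the event without opening the build console.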

The public cloud

CI, CD, and automation work can be achieved easily when used with cloud technology. In particular, public cloud APIs help DevOps teams to build many CI and CD tools. Public clouds such as Amazon Web Services (https://aws.amazon.com), Google Cloud Platform (https://cloud.google.com), and Microsoft Azure (https://azure.microsoft.com) provide APIs for DevOps teams to control cloud infrastructure. DevOps teams can also reduce wasted resources, because they pay as they go, only when resources are needed. The public cloud will continue to grow in the same way as the software development cycle and the architecture design. These are all essential in order to carry your application or service to success.

The following screenshot shows the web console for Amazon Web Services:

Google Cloud Platform also has a web console, as shown here:

Here's a screenshot of the Microsoft Azure console as well:

All three cloud services have a free trial period that a DevOps engineer can use to try and understand the benefits of cloud infrastructure.

Summary

In this chapter, we've discussed the history of software development methodology, programming evolution, and DevOps tools. These methodologies and tools support a faster software delivery cycle. The microservices design also helps to produce continuous software updates. However, microservices increase the complexity of the management of an environment.

In Chapter 2, DevOps with Containers, we will describe the Docker container technology, which helps to compose microservice applications and manage them in a more efficient and automated way.
