Before we dive deep into the concept of Control-M, let's relax a little bit by beginning with a brief lesson on the history of batch processing. In this first chapter, we will be looking at the fundamentals of batch processing and the ever-growing technical and business requirements, as well as the related challenges people are facing in today's IT environment. Based on that, we will look at how we can overcome these difficulties by using centralized enterprise scheduling platforms, and discuss the features and benefits of those platforms. Finally, we will get into the most exciting part of the chapter, talking about a brand new concept, that is, workload automation.

By keeping these key knowledge points in mind, you will find it easy to understand the purpose of each Control-M feature later on in the book. More importantly, adopting the correct batch concepts will help you build an efficient centralized batch environment and be able to use Control-M in the most effective way in the future.

By the end of this chapter, you will be able to:

Explain the meaning of batch processing and understand why batch processing is needed

Describe the two major types of batch processing

List the challenges of batch processing in today's IT environment

Outline the benefits of having a centralized batch scheduling tool

Name different job roles and responsibilities in a centralized batch environment

Understand why workload automation is the next step for batch scheduling

We hear about hot IT topics every day, everywhere. Pick up a tech magazine, visit an IT website, or subscribe to a weekly newsletter and you will see topics about cloud computing, SOA, BPM/BPEL, data warehouse, ERP, you name it! Even on TV and at the cinemas, you may see something such as an "Iron Man 2 in theatres soon + Sun/Oracle in data centre now" commercial. In recent years, IT has become a fashion more than simply a technology, but how often do you hear the words "batch processing" mentioned in any of the articles or IT road shows?

Batch processing is not a new IT buzz word. In fact, it has been a major IT concept since the very early stage of electronic computing. Unlike today, where we can run programs on a personal computer whenever required and expect an instant result, the early mainframe computers could handle non-interactive processing only.

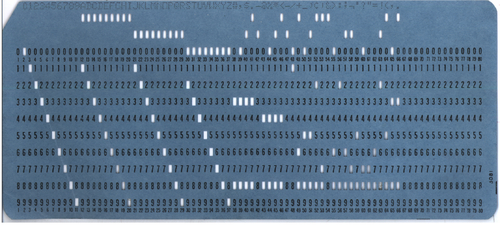

In the beginning, punched cards were used as the media for storing data (see the accompanying image). Mainframe system programmers were required to store their program and input data onto these cards by punching a series of dots and pass them to the system operators for processing. Each time, the system operators had to stack up the program cards followed by the input data cards onto a special card reader so the mainframe computer could load the program into memory and process the data. The execution could run for hours or days and it would stop only when the entire process was complete or when an error occurred. Computer processing power was expensive at that time. In order to minimize a computer's idle time and improve efficiency, the input data for each program was normally accumulated in large quantities and then queued up for processing. In this manner, lots of data could be processed at once, rather than re-stacking the program cards again and again for each small amount of input data. Therefore, the process was called batch processing.

This is a file from the Wikimedia Commons. Commons is a freely licensed media file repository (http://en.wikipedia.org/wiki/File:Blue-punch-card-front-horiz.png).

With time, punched card technology lost its glory, became obsolete, and was replaced by much more advanced storage technologies. However, the batch mode processing method amazingly survived and continued to play a major role in the computing world to handle critical business tasks. Although the surrounding technology has changed significantly, the underlying batch concept is still the same. In order to increase efficiency, programs written for batch-mode processing (a.k.a. batch jobs) are normally set to run when large amounts of input data are accumulated and ready for processing. Besides, a lot of routine procedure-type business processes naturally need to be processed in batch mode. For example, monthly billing and fortnightly payrolls are typical batch-oriented business processes.

Note

The dictionary definition of batch processing: (Computer Science) A set of data to be processed in a single program run.

Traditionally, batch jobs are known to process large amounts of data at once. Therefore, it is not practical to expect the output to be given immediately. But there is still a predefined deadline for every batch process, whether it is set by the business requirement (also known as an SLA, or Service Level Agreement) or simply because it needs to finish so that the dependent batch processing tasks can start. For example, a group of batch jobs of an organization need to generate the payroll data and send it to the bank by Monday 5am, so the bank can have enough time to process it and ensure the funds get transferred to each of the organization's employees' accounts by 8am Tuesday morning.

The rules and requirements of those business processes that require batch processing are becoming more and more sophisticated. This makes batch processing not only very time-consuming, but also task intensive, that is, the business process is required to be achieved in more than one step or even by many related jobs one after another (also known as job flow). In order for the computer system to execute the job or a job flow without human interaction, relevant steps within a job or jobs within a job flow need to be prearranged in the required logical order and the input data needs to be ready prior to the runtime of the job's step.

As time went by, computer systems got another major improvement by gaining the ability to handle users' action-driven interactive processing (also called transaction processing or online processing). This was a milestone in computing history because it changed the way human minds work with computers forever, that is, for certain types of requests, users no longer need to wait for the processing to happen in batch mode during a certain period of time. Users can send a request to the computer for immediate processing. Such requests can be data retrieval or modification requests, for example, someone checking his or her bank account balance at an ATM or someone placing a buy or sell order for a stock through an online broking website. In contrast to batch processing, computer systems handle each user request individually at the time it is submitted. CICS (Customer Information Control System) is a typical mainframe application designed for handling high-volume online transactions. Meanwhile, personal computers, which are designed and optimised to work primarily in interactive mode, started to gain popularity.

In reality, we often see that batch processing and transaction processing share the same computing facility and data source in an enterprise-class computing environment. Interactive processing aims at providing a fast response for user requests generated on a random basis. To ensure that there are sufficient resources available on the system for processing such requests, the resource-intensive batch jobs that used to occupy the entire computing facility 24/7 had to be set to run only during a time frame when user activity is low, which back then was most likely during the night. This is why we often hear seasoned IT people with a mainframe background call it the nightly batch.

Here's an example of a typical scenario in a batch processing and transaction processing shared environment for an online shopping site:

7:00am: This is usually the time the site starts to get online traffic, but the volume is small.

10:00am: Traffic starts to increase, but is still relatively small. User requests come from the Internet, such as browsing a product catalog, placing an order, or tracking an existing order.

12:00pm: Transaction peak hours start. The system is dedicated for handling online user requests. A lot of orders get generated at this point of time.

10:00pm: Online traffic starts to slow down.

11:30pm: A daily backup job starts to back up the database and filesystem.

12:00am: A batch job starts to perform daily sales reconciliations.

12:30am: Another batch job kicks in to process orders generated during the last 24 hours.

2:00am: A multi-step batch job starts for processing back orders and sending the shop's order to suppliers.

3:00am: As all outstanding orders have been processed, a backup job is started for backing up the database and filesystem.

5:00am: A batch job generates and sends yesterday's sales report to the accounting department.

5:15am: Another batch job generates and sends a stock on hand report to the warehouse and purchasing department.

5:30am: A script gets triggered to clean up old log files and temporary files.

7:00am: The system starts to get hit by online traffic again.

In this example, programs for batch mode processing are set to run only when online transactions are low. This allows online processing to have the maximum system resources during its peak hours. During online processing's off-peak hours, batch jobs can use up the entire system to perform resource-intensive processing such as sales reconciliation or reporting.

In addition, because during the night time there are fewer changes to the data, batch jobs can have more freedom when manipulating the data and it allows the system to perform the backup tasks.

What we have discussed so far, batch processing defined to run during a certain time, is traditional time-based scheduling. Depending on the user's requirements, it could be a daily run, a monthly run, or a quarterly run, such as:

Retail stores doing a daily sales consolidation

Electricity companies generating monthly bills

Banks producing quarterly statements

The timeframe allocated for batch processing is called a batch window. The concept sounds simple, but there are many factors that need to be taken into consideration before defining the batch window. Those factors include what time the resources and input data will be available for batch processing, how much data needs to be processed each time, and how long the batch jobs will take to process it. If the batch processing fails to complete within the defined time window, not only will the expected batch output not be delivered on time, but the next day's online processing may also be affected. Here are some of the scenarios:

Online requests start to come in at their usual time, but the backend batch processing is still running. As system resources such as CPU, memory, and IO are still occupied by the over-running batch jobs, the resource availability and system response time for online processing are significantly impacted. As a result, online users see slow responses and get timeout errors.

Some batch processing needs to occupy the resource exclusively. Online processing can interrupt the batch processing and cause it to fail. In such cases, if the batch window is missed, either the batch jobs have to wait to run during the next batch window or online processing needs to wait until the batch processing is completed.

In extreme cases, online transactions are based on the data processed by the previously run batch. Therefore, the online transactions cannot start at all unless the previous batch processing is completed. This happens with banks, as you often hear them say the bank cannot open tomorrow morning if the overnight batch fails.
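
Checking whether a set of jobs fits the batch window comes down to simple arithmetic. The following is a minimal sketch, assuming the jobs run strictly one after another. The window times and job durations are made-up illustration values, and `fits_batch_window` is a hypothetical helper, not part of any scheduling product.

```python
from datetime import datetime, timedelta

def fits_batch_window(window_start, window_end, job_durations):
    """Return (fits, finish_time) for jobs run back to back from window_start."""
    finish_time = window_start + sum(job_durations, timedelta())
    return finish_time <= window_end, finish_time

# Hypothetical window: 11:30pm until online traffic resumes at 7:00am.
window_start = datetime(2024, 1, 1, 23, 30)
window_end = datetime(2024, 1, 2, 7, 0)

# Hypothetical estimated run times for the nightly jobs.
durations = [timedelta(minutes=30), timedelta(hours=2), timedelta(hours=3)]

fits, finish_time = fits_batch_window(window_start, window_end, durations)
print(fits, finish_time)
```

In practice the estimates would come from historical run times, and a growing data volume would push the finish time closer to the end of the window.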

A concept called event-triggered scheduling was introduced during the modern computing age to meet the growing business demand. An event can be as follows:

A customer submitted an order online

A new mobile phone SIM card was purchased

A file from a remote server arrived for further processing

Rather than accumulating these events and processing them during the traditional nightly batch window, a mechanism has been designed within the batch processing space to detect such events in real time and process them immediately. By doing so, the event initiators are able to receive an immediate response, where in the past they had to wait until the end of the next batch window to get the response or output. Take the online shopping example again. Without event-triggered processing, orders generated by online users during the day are accumulated on the system and wait to be processed against the actual stock during the predefined batch window, so customers have to wait until the next morning to receive the order committed e-mail and back order items report. With event-triggered batch processing, the online business is able to offer a customer an instant response on their order status, and therefore, provide a better shopping experience.

On the other hand, as a noticeable amount of processing work is already handled when each event occurs, the workload for batch processing during a batch window (for example, a nightly batch) is likely to be reduced.
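
The difference between the two approaches can be sketched in a few lines. This is only an illustration under simple assumptions: `process_order`, `NightlyBatch`, and `EventTriggered` are hypothetical names, and a real system would detect events through files, queues, or messages rather than direct method calls.

```python
from collections import deque

def process_order(order_id):
    """Hypothetical order handler: returns the status sent to the customer."""
    return f"order {order_id} confirmed"

class NightlyBatch:
    """Time-based: orders accumulate and are processed when the window opens."""

    def __init__(self):
        self.pending = deque()

    def on_order(self, order_id):
        self.pending.append(order_id)  # the customer gets no result yet
        return None

    def run_window(self):
        results = [process_order(order_id) for order_id in self.pending]
        self.pending.clear()
        return results

class EventTriggered:
    """Event-based: each order is processed as soon as it is detected."""

    def on_order(self, order_id):
        return process_order(order_id)
```

With `NightlyBatch`, the response only exists after `run_window()` has run, whereas `EventTriggered.on_order()` returns it straight away and leaves nothing queued for the night.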

There have been talks about totally replacing time-based batch processing with real-time event-driven processing to build a so-called real-time enterprise. A group of people argue that batch processing causes latency to business processes and, as event-driven solutions are becoming affordable, businesses should be looking at completely shifting to event-driven real-time processing. This approach has been discussed for years. However, it has yet to completely replace batch processing.

Shifting the business process into real-time can allow businesses to have quicker reaction to changes and problems by making decisions based on live data feeds rather than historical data produced by batch processing. For example, an online computer store can use a real-time system to automatically adjust their retail price for exchange rate sensitive computer components according to live feed currency exchange rate.

The business may also become more competitive and gain extra profit by having each individual event handled in real time. For example, mobile phone companies would rather provision each SIM card as soon as it is purchased than let the customers wait until the next day (that is, when the overnight batch processing finishes processing the data) and lose the potential calls that could be charged during the waiting time.

Note

Case study: Commonwealth Bank of Australia shifting into real-time banking

"In October 2010, Commonwealth Bank of Australia announced their future strategy in IT, that is, progressively upgrading their banking platforms to provide real-time banking for customers. Real-time banking is the ability to open and close an account, complete credit and debit transactions, and change features immediately. Real-time banking removes the delay we experience now from batch processing, where transactions are not completed until the next working day. Real-time banking will gradually replace batch processing, as our new banking platform replaces existing systems over the next few years."

Reference from Commonwealth Bank of Australia Website.

However, real-time processing is not a silver bullet. Although purchasing real-time solutions is becoming cheaper, moving the organization's entire batch processing into real-time processing can be an extremely costly and risky project. Also, we may find that current computing technology is still not powerful enough to handle everything in real time. Some IT professionals did a test during a project. They designed the system to process everything in real time and when the workload increased, the entire system just collapsed. We know hardware is becoming cheaper these days, but machine processing power is not the only limitation. When we talk about limited computing resources, it also can be the number of established sessions to a database or the availability of information for access. In case of a large request, such as generating a report, it may require exclusive access to the database. During the processing of data within the table, it is not available for other requests to modify. Therefore, such requests should be considered as batch processing and configured to run at an appropriate time.

The bottom line is that not all business processes need to be completed in real time. For example, a retail store only needs to generate purchase orders once a week. The store has no reason to move this business process into real-time processing, because it wants to accumulate individual stock requests over a period of time and send them to the supplier in a single order. In this way, the shop can receive goods in bulk and save on shipping costs, and at the same time it may receive special offers that are given for large order quantities.

Note

ZapThink's Ron Schmelzer wrote:

Batch processing, often thought of as an artifact left over from the legacy days, plays a vital role in systems that may have real-time processing upfront. As he observed, "behind the real-time systems that power the real-time enterprise are regularly-updated back office business systems. Batch processes remain essential for one key reason: it is simply not efficient to regenerate a complete forecast or business plan every time the business processes a single event such as an incoming customer order."

Reference from ZapThink: http://www.zdnet.com/blog/serviceoriented/soas-final-frontier-can-we-should-we-serviceorient-batch-processing/1439

Whether running the business process in real time or in batches, the system designer should take many factors into consideration, such as:

Is the business process required to be running in real time?

What will the cost be to run the business process in real time?

What are the negative impacts on others, if running the business process in real time?

Will the benefit justify the cost and impact?

Moving IT into real time should be driven by actual business needs rather than the technology. The person who is going to design the system needs to carefully consider what needs to be processed in real time and what can be processed in batch to meet the business requirements and balance the system utilization. In fact, large organisations today are still investing in batch processing and continuously trying to figure out how to make it run better.

To understand batch processing further, we need to begin with its father, the mainframe computer. Job Control Language (JCL) was introduced as a basic tool on mainframe computers for defining how a batch program should be executed. A JCL is considered to be a job, also called a job card (the name is inherited from punched cards). A job can have a single step or multiple steps (up to 255 steps in each JCL), and each step is an executable program or a JCL procedure (frequently used JCL statements are defined as procedures for reusability). In JCL, a user needs to specify the name of the job, the program or procedure to be executed during each step of the job, as well as the input and output of the step. Once the job is submitted for execution, the Job Entry Subsystem (JES) will interpret the JCL and send it to the mainframe operating system (MVS or z/OS) for processing (refer to the next diagram).

The system will read the submitted JCL to figure out what application to run, the location of the input, and where the output should go to.

Batch processing can be a standalone task, but in common cases business processes require many steps to deliver. Technically, all these steps can be combined within one processing unit (for example, steps within a JCL), but if a step fails during the processing, rerunning that specific step can be challenging. There are third-party products provided on mainframe computers just for managing the rerun of JCL steps.

On the distributed systems, it is up to the program designer to design their own way in the program to detect the error and handle such rerun action. In most cases, we have to restart the entire process from the beginning. In order to have flexibility and ease of management in batch processing, these steps are normally broken down into individual processing units (also known as jobs) and are set to be executed in sequence. By doing so, when a step fails in the middle of the processing or towards the end of the entire processing, a rerun can be allowed from the point of failure easily by rerunning the problem job.

Here is a Hello World edition of batch processing. imagamingpc.com is an online computer shop specialized in build-to-order gaming computers. The owner implemented his own order processing application to handle online orders. The system works in the following way:

1. During the day, customers visit the site. They choose each PC component to create a customized machine specification.

2. Once the customer submits the completed customized machine specification, a flat file gets generated in the system and the customer is told that the order has been received.

3. By 12:00am, let's say there are ten orders generated in total. A program is set to trigger around this time of the day to process these orders. For each order, the program will first check that the parts the customer selected can be built into a complete working computer. The order gets rejected if the components are incompatible with each other or some essential parts are missing, and an e-mail is sent asking the customer to correct the order. For orders that pass the check, the program will scan the inventory to make sure each item is in stock, and send an order confirmation e-mail to the customer. The program also generates and prints a daily build list. Sometimes there are orders that cannot be built due to missing parts, such as a specific graphics card being out of stock. In this case, the program will generate and print a backorder list and send the pending build e-mail to the customer to confirm that their parts are being ordered and the system build is pending.

4. The next morning, the technician comes in and builds each machine according to the printed daily build list. At the same time, the person in charge of purchasing and procurement will place orders with each supplier according to the backorder list.

This is a very simple batch processing example. It's not hard to figure out that step 2 is interactive processing and step 3 is batch processing.

One of the very early challenges was that the order processing program often failed somewhere during processing. Sometimes it failed while generating the daily build list and sometimes it failed to send out e-mails to customers for pending build notification. No matter at which step the program failed, it needed to be rerun right from the beginning, even if the problem was only at the last stage. As a result, every time a rerun happened, customers were likely to get two confirmation e-mails. Also, when the number of orders was large on a given day, rerunning the entire thing could take a lot of time.

The owner of the business realized the problem and asked an IT person to come up with a better way to solve it. Pretty quickly, the IT person came up with an idea, that is, to break down each processing step into a separate program. In this case, if any stage failed, only the failed task would need to be rerun. As an outcome, the batch processing became the following:

By 12:00am, there are ten orders generated in total. Program PROCESS_ORDER is set to trigger at this time of the day to process them. For each order, the program scans the inventory to make sure each item on the order is in stock and creates the file called daily build list.

According to the daily build list, the program MAIL_CONFIRMED_ORDER sends out an e-mail to each customer to confirm that his or her order is ready to be built.

Another program called PRINT_DAILY_BUILD_LIST prints the daily build list for the technician who is going to build the machines on the following day.

Program GENERATE_BACKORDER_LIST will create a list of orders that cannot be built due to missing parts. The list also gets stored in a file called backorder list.

According to the backorder list, the program MAIL_BACKORDER sends out an e-mail to each customer to notify them that their order has been backordered.

Another program called PRINT_BACKORDER_LIST prints the backorder list for the purchasing and procurement officer.

By now, we have a series of programs linked together and executed in sequence to handle the business process, as opposed to having one program that does everything. In this case, if the processing stopped half way through, the IT person can easily re-run that particular step followed by the rest, rather than executing the entire process from the beginning. Therefore, duplicate e-mails are no longer sent and the time required for re-processing is significantly reduced.
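
Rerunning from the point of failure can be sketched as follows. This is a minimal illustration, not how any particular scheduler implements restarts: the `run_flow` helper, the placeholder job functions, and the simulated printer fault are all hypothetical.

```python
def run_flow(jobs, completed=None):
    """Run jobs in order, skipping those already completed in an earlier run.

    jobs: list of (name, callable) pairs; a callable raises on failure.
    Returns (completed_names, failed_name_or_None).
    """
    completed = list(completed or [])
    for name, action in jobs:
        if name in completed:
            continue  # finished earlier, so do not repeat it
        try:
            action()
        except Exception:
            return completed, name  # stop the flow at the failed job
        completed.append(name)
    return completed, None

emails_sent = []
printer = {"online": False}  # simulated fault for the demonstration

def process_order():
    pass  # placeholder for the real inventory check

def mail_confirmed_order():
    emails_sent.append("confirmation")

def print_daily_build_list():
    if not printer["online"]:
        raise RuntimeError("printer offline")

flow = [
    ("PROCESS_ORDER", process_order),
    ("MAIL_CONFIRMED_ORDER", mail_confirmed_order),
    ("PRINT_DAILY_BUILD_LIST", print_daily_build_list),
]

done, failed_job = run_flow(flow)        # first run stops at the print job
printer["online"] = True                 # the problem gets fixed
done, failed_job = run_flow(flow, done)  # rerun resumes at the failed job
```

Because the list of completed jobs is carried into the second call, the confirmation e-mail step is skipped on the rerun and each customer receives only one e-mail.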

Batch processing is about making the best use of computing resources to process data and deliver results on time. The traditional approach is to schedule jobs so that they run immediately one after another, keeping the computing resource occupied without idle time. But this may not be considered highly efficient on today's high processing power machines, which are able to handle multi-tasking. Dividing steps into jobs makes parallel processing possible for job steps that are not inter-dependent, which maximizes system resource utilization and shortens the end-to-end processing time. In our online computer shop example, by breaking down processing into tasks and running tasks that are not interdependent in parallel, we can significantly shorten the processing time.
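
To put rough numbers on this, the sketch below compares running the shop's jobs one after another with starting each job as soon as its parent jobs have finished. The durations are invented for illustration, and the dependency map is an assumption (in particular, that GENERATE_BACKORDER_LIST waits for PROCESS_ORDER). It also assumes the machine can run any number of jobs at once.

```python
from functools import lru_cache

# Hypothetical durations in minutes; the example above gives no timings.
duration = {
    "PROCESS_ORDER": 20,
    "MAIL_CONFIRMED_ORDER": 10,
    "PRINT_DAILY_BUILD_LIST": 5,
    "GENERATE_BACKORDER_LIST": 15,
    "MAIL_BACKORDER": 10,
    "PRINT_BACKORDER_LIST": 5,
}

# Assumed dependencies: each job lists the jobs that must finish first.
depends_on = {
    "PROCESS_ORDER": [],
    "MAIL_CONFIRMED_ORDER": ["PROCESS_ORDER"],
    "PRINT_DAILY_BUILD_LIST": ["PROCESS_ORDER"],
    "GENERATE_BACKORDER_LIST": ["PROCESS_ORDER"],
    "MAIL_BACKORDER": ["GENERATE_BACKORDER_LIST"],
    "PRINT_BACKORDER_LIST": ["GENERATE_BACKORDER_LIST"],
}

@lru_cache(maxsize=None)
def earliest_finish(job):
    """Finish time of a job that starts as soon as all its parents are done."""
    start = max((earliest_finish(parent) for parent in depends_on[job]), default=0)
    return start + duration[job]

sequential_minutes = sum(duration.values())
parallel_minutes = max(earliest_finish(job) for job in duration)
print(sequential_minutes, parallel_minutes)
```

Under these assumptions the end-to-end time is set by the longest chain of dependent jobs rather than by the total of all job durations.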

Another objective of batch processing is delivering the processing result with minimal manual intervention. Technically, batch processing can be initiated by humans. In fact, back in the old days, companies employed operators on shifts to submit job streams at a set time of the day, check each job's execution result, and initiate restarts. However, running batch manually can be extremely challenging because batch processing is by nature complicated and detailed. The number of tasks for a business process can be large, and each task may require a complex input argument to run. Depending on the characteristics of the task, an execution can take anything from minutes to hours.

In an environment that requires hundreds or thousands of jobs to be scheduled each day, people who run the batch jobs not only need to work long hours to monitor each execution and ensure the right batch job gets triggered with correct input arguments at the correct time, but they also need to react to job failures, handle the complicated logic between jobs to decide which job to run next, and at the same time, keep in mind parallel processing, as well as ensure that each job is triggered as soon as its parent job(s) is/are completed to minimize machine idle time. Making mistakes is an unavoidable part of human nature, especially in a complex environment where everything is time-constrained. Businesses would rather invest in a batch automation tool than take the risk of having critical business problems due to batch processing delays and failures caused by human mistakes.

Batch processing in modern computing is far more complicated than simply feeding punched cards in sequence into the mainframe, as it was in the old days. Because of its time-consuming and task-intensive nature, a lot more factors need to be taken into consideration when running it. Batch scheduling tools were born to automate such processing tasks, thus reducing the possibility of human mistakes and security concerns.

There were home-grown toolsets developed on mainframe computers for automating JCL scripts. Modern distributed computer systems also came with some general ability to automate batch processing tasks. On a Unix or Linux computer, cron is a utility provided as part of the operating system for automating the triggering of executables. The equivalent tool on Windows is called Task Scheduler. With these tools, the user can define programs or scripts to run at a certain time or at various time intervals. These tools are mainly used for basic scheduling needs such as automating backups at a given time or system maintenance.

These tools do not have the ability to execute tasks according to prerequisites other than time. Due to their limited features and unfriendly user interfaces, users normally find it challenging to use these tools for complex scheduling scenarios, such as when there is a predefined execution sequence for a group of related program tasks.

Over the years, major software vendors developed dedicated commercial batch scheduling tools such as BMC Control-M to meet the growing needs in batch processing. These tools are designed to automate complicated batch processing requirements by offering the ability to trigger task executions according to the logical dependencies between them.

As with cron, users are first required to define each processing task in the batch scheduling tool together with its triggering conditions. Such definitions are commonly known as "Job Definitions", which get stored and managed by the scheduling tool. The three essential elements within each job definition are:

What to trigger — The executable program's physical location on the file system

When to trigger — the job's scheduling criteria

Dependencies — the job's predecessors and dependents
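
As a rough picture of these three elements, a job definition can be modelled as a small record. This is a generic sketch, not Control-M's actual definition format: the `JobDefinition` class, the script paths, and the free-form schedule strings are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List

@dataclass
class JobDefinition:
    name: str
    command: str   # what to trigger: location of the executable
    schedule: str  # when to trigger: scheduling criteria (free-form text here)
    predecessors: List[str] = field(default_factory=list)  # dependencies

# Hypothetical definitions for two jobs from the computer shop example.
jobs = [
    JobDefinition("PROCESS_ORDER", "/opt/shop/bin/process_order.sh", "daily at 00:00"),
    JobDefinition(
        "MAIL_CONFIRMED_ORDER",
        "/opt/shop/bin/mail_confirmed_order.sh",
        "daily",
        predecessors=["PROCESS_ORDER"],
    ),
]

def dependents_of(name, definitions):
    """List the jobs that wait for the named job to complete."""
    return [job.name for job in definitions if name in job.predecessors]
```

Storing only the predecessors is enough here, because the dependents of a job can be derived from the other definitions, as `dependents_of` shows.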

From a batch scheduling tool point of view, it needs to know which object is to be triggered. It can be a JCL on the mainframe, a Unix shell script, a Perl program, or a Windows executable file. A job also can be a database query, a stored procedure that performs data lookup or update, or even a file transfer task. There are also application-specific execution objects, such as SAP or PeopleSoft tasks.

Each job has its own scheduling criteria, which tell the batch scheduling tool when the job should be submitted for execution. Job scheduling criteria contain the job's execution date and time. A job can be a daily, monthly, or quarterly job, or set to run on a particular date (for example, at the end of each month when it is not a weekend or public holiday). The job can also be set to run at set intervals (running cyclic). In such cases, the job definition needs to indicate how often the job should run and optionally the start time for its first occurrence and end time for its last occurrence (for example, between 3pm and 9pm, run the job every five minutes). Most job schedulers also allow users to specify the job's priority, how to handle the job's output, and what action to take if the job fails.
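
The cyclic case can be made concrete with a short sketch that lists the submission times for the "every five minutes between 3pm and 9pm" example. The `cyclic_run_times` helper is hypothetical, the date is arbitrary, and treating the end time as inclusive is an assumption, since scheduling tools may differ on that point.

```python
from datetime import datetime, timedelta

def cyclic_run_times(first_start, last_start, interval):
    """Yield every submission time from first_start to last_start inclusive."""
    current = first_start
    while current <= last_start:
        yield current
        current += interval

runs = list(
    cyclic_run_times(
        datetime(2024, 1, 1, 15, 0),  # first occurrence at 3pm
        datetime(2024, 1, 1, 21, 0),  # last occurrence at 9pm
        timedelta(minutes=5),
    )
)
print(len(runs), runs[0], runs[-1])
```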

Job dependency is the logic between jobs that tells which jobs are inter-related. According to the job dependency information, the batch scheduling tool groups the individual, but inter-related jobs together into a batch flow. Depending on the business and technical requirements, they can be defined to run one after another or run in parallel. The common inter-job relationships are:

One to one

One to many (with or without if-then, else)

Many to one (AND/OR)

A one to one relationship simply means the jobs run one after another, for example, when Job A is completed, then Job B starts.

A one to many relationship means many child jobs depend on the parent job. Once the parent job is completed, the child jobs will execute. Sometimes there's a degree of decision making within it, such as if Job A's return code is 1, then run Job B, or if the return code of Job A is greater than 1, then run Job C and Job D.

A many to one relationship refers to one child job that depends on many parent jobs. The dependency on the parent jobs' completion can be an AND relationship, an OR relationship, or a mix of AND and OR. For example, in an AND scenario, Job D will run only if Job A, Job B, and Job C are all completed. In an OR scenario, Job D will run if any of Job A, Job B, or Job C is completed. In a mixed scenario, Job D will run if Job A or Job B and Job C are completed.
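
These relationships translate directly into simple conditions. The sketch below is illustrative only and uses hypothetical function names. The mixed case is written as "(Job A or Job B) and Job C", which is just one possible reading of the example, and returning no jobs for a return code below 1 is an assumption because the text does not cover that case.

```python
def and_ready(completed, parents):
    """AND: the child runs only when every parent has completed."""
    return all(parent in completed for parent in parents)

def or_ready(completed, parents):
    """OR: the child runs when at least one parent has completed."""
    return any(parent in completed for parent in parents)

def mixed_ready(completed):
    """Mixed, read here as (Job A or Job B) and Job C."""
    return or_ready(completed, ["A", "B"]) and "C" in completed

def jobs_after_a(return_code):
    """One to many with decision making, following the Job A example."""
    if return_code == 1:
        return ["B"]
    if return_code > 1:
        return ["C", "D"]
    return []  # assumption: nothing is triggered for other return codes
```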

During normal running, the batch scheduling tool constantly looks at its record of jobs to find out which jobs are eligible to be triggered according to their scheduling criteria, and then automatically submits those jobs to the operating system for execution. In most cases, the batch scheduling tool can control the total number of parallel running jobs to keep the machine from being overloaded. The execution sequence among parallel jobs can be based on each job's predefined priority, that is, the higher priority jobs can be triggered before the lower priority ones. After each execution, the job scheduling tool gets immediate feedback (such as an operating system return code) from the job's execution. Based on the feedback, the job scheduling tool decides the next action, such as running the next job according to the predefined inter-job dependency or rerunning the current job if it is cyclic. Batch scheduling tools may also provide a user interface for the system operator to monitor and manage batch jobs, which gives the user the ability to manually pause, rerun, or edit a batch job.
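The selection step described above can be sketched as follows: given the eligible jobs, the number of jobs already running, and a limit on parallel jobs, pick the highest priority jobs that fit. The function name, the dictionary fields, and the convention that a larger number means a higher priority are all assumptions for illustration.

```python
def pick_jobs_to_submit(eligible, running_count, max_parallel):
    """Return the names of the jobs to submit now, highest priority first."""
    free_slots = max(0, max_parallel - running_count)
    ranked = sorted(eligible, key=lambda job: job["priority"], reverse=True)
    return [job["name"] for job in ranked[:free_slots]]

# Hypothetical eligible jobs; a larger number means a higher priority here.
eligible = [
    {"name": "REPORT", "priority": 1},
    {"name": "BILLING", "priority": 9},
    {"name": "CLEANUP", "priority": 3},
]
print(pick_jobs_to_submit(eligible, running_count=2, max_parallel=4))
# ['BILLING', 'CLEANUP']
```

Jobs that do not fit stay eligible and are considered again on the next pass.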

Driven by business and user demand, modern scheduling tools provide more sophisticated features beyond automating the execution of batch jobs, such as:

The ability to generate an alert message for error events

The ability to handle external event-driven batch

Intelligent scheduling: decision making based on pre-defined conditions

Security features

Additional reporting, auditing, and history tracking features

Because notifications can be generated on specified events, operators are freed from continuous 24x7 monitoring and only need to monitor jobs by exception. Users can set up rules so that a notification is sent out when a defined event occurs, such as when a job fails, starts late, or runs longer than expected. Depending on the ability of the scheduling tool, the destination for the notification can be an alert console, an e-mail inbox, or an SMS to a mobile phone. Some scheduling tools also have the ability to integrate with third-party IT Service Management (ITSM) tools to automatically generate an IT incident ticket. Job-related information can be included in the alert message, for example, the name of the job, the location of the job, the reason for failure, and the time of the failure.
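As a rough illustration of monitoring by exception, the sketch below checks one job record against the three events mentioned above (failure, late start, long run). The record fields and the function name `alerts_for` are hypothetical; a real tool would route the resulting messages to a console, e-mail, SMS, or an ITSM ticket.

```python
from datetime import datetime, timedelta

def alerts_for(job, now):
    """Return alert messages for one job record (field names are illustrative)."""
    where = f"{job['name']} on {job['host']}"
    alerts = []
    if job["status"] == "failed":
        alerts.append(f"{where} failed: {job['reason']}")
    if job["status"] == "waiting" and now > job["expected_start"]:
        alerts.append(f"{where} is starting late")
    if job["status"] == "running" and now - job["started"] > job["max_duration"]:
        alerts.append(f"{where} is running longer than expected")
    return alerts

now = datetime(2024, 1, 15, 2, 30)
failed_job = {"name": "PROCESS_ORDER", "host": "machine-c",
              "status": "failed", "reason": "return code 8"}
slow_job = {"name": "RUN_BACKUP", "host": "machine-d", "status": "running",
            "started": datetime(2024, 1, 15, 1, 0),
            "max_duration": timedelta(hours=1)}
print(alerts_for(failed_job, now))
print(alerts_for(slow_job, now))
```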

Event-driven batch jobs are defined to run only when the expected external event has occurred. In order to have this capability, special interfaces are developed within a batch scheduling tool to detect such an event. Detecting a file's creation or arrival is a typical interface for event-triggered batch. Some batch schedulers also provide their own application programming interface (API), or have the ability to act as a web service or use a message queue to accept an external request. The user needs to specify in advance which event triggers which job or action within the batch scheduling tool, and during what time frame. During the defined time frame, the scheduler listens for the event and triggers the corresponding batch job or action accordingly. External events can be unpredictable and may happen at any time, so batch scheduling tools also need the ability to limit the number of concurrently running event-triggered batch jobs to prevent the machine from overloading during peak periods.
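The combination of a listening time frame and a concurrency limit can be sketched like this. The class `EventTrigger` and its method names are hypothetical, and the choice to queue excess events rather than reject them is an assumption of the sketch.

```python
from collections import deque
from datetime import time

class EventTrigger:
    """Accept events only inside a time frame and cap concurrent jobs."""

    def __init__(self, window_start, window_end, max_concurrent):
        self.window_start = window_start
        self.window_end = window_end
        self.max_concurrent = max_concurrent
        self.running = 0
        self.queue = deque()

    def on_event(self, name, at):
        """Handle one external event arriving at clock time `at`."""
        if not (self.window_start <= at <= self.window_end):
            return "ignored"   # outside the defined time frame
        if self.running >= self.max_concurrent:
            self.queue.append(name)
            return "queued"    # limit reached, wait for a free slot
        self.running += 1
        return "started"

    def on_job_finished(self):
        """Free a slot and start the next queued event, if any."""
        self.running -= 1
        if self.queue:
            self.running += 1
            return self.queue.popleft()
        return None

trigger = EventTrigger(time(9, 0), time(17, 0), max_concurrent=2)
results = [
    trigger.on_event("file1", time(10, 0)),
    trigger.on_event("file2", time(10, 1)),
    trigger.on_event("file3", time(10, 2)),
    trigger.on_event("file4", time(18, 0)),
]
print(results)                    # ['started', 'started', 'queued', 'ignored']
print(trigger.on_job_finished())  # file3
```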

Besides generating notifications for events, most of the advanced batch scheduling tools also have the ability to perform intelligent scheduling by automatically deciding which action will be performed next, based on a given condition. With this feature, a lot of the repetitive manual actions for handling events can be automated, for example:

Automatically rerun a failed job

Trigger Job B when Job A's output contains the message "Processing completed", or trigger Job C when Job A's output contains the message "Processing ended with warning"

Skip job C if job B is not finished by 5pm

This feature removes the human response time for handling such tasks and minimizes possible human mistakes. It significantly contributes to shortening the overall batch processing time. However, this is not a one-size-fits-all approach, as some events still need a human decision. This approach can free the user from repetitive tasks, but it can also increase maintenance overhead: each time the output of a program changes, the condition for the automatic reaction will most likely need to be changed accordingly.
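The rules listed above can be expressed as a small decision function. This is only a sketch under stated assumptions: the function name `next_action`, the rerun limit, and the fallback to an operator alert are hypothetical, while the two message strings come from the example rules.

```python
def next_action(job_output, return_code, attempts, max_reruns=1):
    """Decide what to do after Job A ends, based on its return code and output."""
    if return_code != 0:
        # Automatically rerun a failed job, but only a limited number of times.
        return "rerun job A" if attempts <= max_reruns else "alert operator"
    if "Processing ended with warning" in job_output:
        return "trigger job C"
    if "Processing completed" in job_output:
        return "trigger job B"
    # Unrecognized output: leave the decision to a human.
    return "alert operator"

print(next_action("Processing completed", 0, attempts=1))           # trigger job B
print(next_action("Processing ended with warning", 0, attempts=1))  # trigger job C
print(next_action("", 8, attempts=1))                               # rerun job A
print(next_action("", 8, attempts=2))                               # alert operator
```

The sketch also shows the maintenance cost mentioned above: if the program changes its message text, the matching strings must change with it.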

For information security reasons, files that reside on the computer system are normally protected with permissions. A script or executable file needs to run under the corresponding user or group in order to read files as its inputs and write to a file as its output. In the case of a database or FTP job, the login information needs to be recorded in the script for authentication during runtime. The people who manage the batch processing require full access to the user accounts to trigger the script or executable, which means they will also have access to data that they are not allowed to see. There is also a risk that people with user access rights may modify and execute the executables without authorization. Batch scheduling tools address this concern by providing additional security features from the batch processing perspective, that is, they provide user authentication for accessing the batch scheduling console, and group users into different levels of privileges according to their job role. For example, users in the application development group are allowed to define and modify jobs, users in the operation group are allowed to trigger or rerun jobs, and some third-party users may only have the rights to monitor a certain group of jobs.
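A role-based privilege check of this kind can be sketched in a few lines. The role names, the action names, and the idea of restricting third-party users to named job groups are illustrative assumptions; granting "monitor" to developers and operators is also an assumption, not something the text states.

```python
# Hypothetical mapping of job roles to the actions they may perform.
ROLE_PERMISSIONS = {
    "developer": {"define", "modify", "monitor"},
    "operator": {"trigger", "rerun", "monitor"},
    "third_party": {"monitor"},
}

def is_allowed(role, action, job_group=None, visible_groups=None):
    """Check whether a role may perform an action, optionally limited to job groups."""
    if action not in ROLE_PERMISSIONS.get(role, set()):
        return False
    if visible_groups is not None and job_group not in visible_groups:
        return False
    return True

print(is_allowed("developer", "modify"))   # True
print(is_allowed("operator", "modify"))    # False
print(is_allowed("third_party", "monitor", job_group="BILLING",
                 visible_groups={"REPORTS"}))  # False
```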

Besides providing great scheduling capability and user-friendly features, the batch scheduling tool can also track historical job executions and audit user actions. This is possible because all jobs running on the same machine are managed from a central location. By using the reports, rather than getting logs from each job's output directory and searching for relevant system logs, the user can directly track problematic jobs, find out when a job failed and who triggered what job at what time, or create a series of reports to review the job execution history trend. Apart from being handy for troubleshooting and optimizing batch runs, the information can also help the organization meet IT-related regulatory compliance requirements.

Looking back 20 years, technology has grown beyond imagination, but the need for batch processing has not diminished. According to Gartner's Magic Quadrant for Job Scheduling 2009 report, 70 percent of business processes are performed in batch. In the same report, Gartner forecasted a 6.5 percent future annual growth in the job scheduling market.

In recent years, IT has become more and more sophisticated to meet ever-growing business requirements. The amount of information to be processed in batch is increasing at an alarming rate, while at the same time the batch window is shrinking. As a consequence, a system's built-in scheduling functionality or a homegrown scheduling tool can no longer handle the dramatically increasing complexity. Let's first have a look at the evolution of surrounding technologies, which has affected the way batch processing runs today:

The mixture of platforms within IT environment

Different machines and applications are inter-related. They often have to work together to accomplish common business goals

From running batch on a single machine and single application, we ended up with an IT environment with hundreds or thousands of machines. Some of these machines are acting as a database server or data warehouse running Oracle, Sybase, and MS SQL Server. Some other machines may purely be used for running ETL jobs from Informatica or Datastage. There are also machines that are dedicated file servers for sending and receiving files according to a specific event. Then there are backup applications running data archiving tasks across the entire IT environment. Besides these, we still have mainframe computers running legacy applications that need to integrate with applications in the distributed environment.

Most of these machines will have their own batch jobs running to serve a particular need. Not only that, applications that specialize in a particular area may also require batch processing. Some of these applications, such as PeopleSoft Finance and SAP R/3, had to come with a built-in batch scheduling feature to meet their own batch processing requirements.

These platforms and applications can rely on a built-in scheduling feature to handle basic batch processing requirements without a problem. Issues arise when business processes require cross-platform and cross-application batch flows. These islands of automation are becoming silos of information. Without a proper methodology, a job on one platform simply doesn't know when its parent job on another platform will finish or what its finishing status is, and therefore doesn't know when it should start. There are different approaches to allow each step of a cross-platform job flow to execute in the correct order. The most common one is the time matching method.

With the time matching approach, we first need to know roughly how long a given job takes to run in order to allocate a reasonable time frame for it to finish before the next job starts. The time allocated for each job has to be longer than its normal execution time in case the processing takes longer than normal.

Let's revisit the imagamingpc.com example:

As the batch processing got broken down into individual tasks, the quality of customer service began to improve and the site became busier and busier. After six months, the average number of orders per day increased to 300! The business owner was happy, but the IT person was a bit worried; he summarized the following issues to the business owner:

Currently everything is running from one machine, which is presenting a performance bottleneck and some degree of security concern.

Sometimes if there are too many orders generated, the batch jobs cannot complete execution within the designed batch window. In an extreme case, it will finish the last step at 11:00am the next day. During this time, the CPU is constantly hitting 100 percent, thus the system cannot process new order requests coming from the web.

At the moment, the IT person only finds out that the batch flow failed in the morning after he gets into the office. It was ok when the amount of data was small and he could just re-run the failed step and run the rest of the flow. But as the number of daily orders starts to increase, re-running some of the stages can take a lot of time. Sometimes it takes the whole morning to re-run the PROCESS_ORDER step, so the technician cannot build any machines until the daily_build_list is finally generated. During this time, the rerun will also take up most of the CPU resources, which again affects the system processing real-time customer requests from the web.

After research and consulting with other similar businesses, the IT person came up with the following solution:

Move the inventory database into a new machine (machine B), separate from the web server (machine A) to reduce its resource utilization.

Instead of populating an individual build list into flat files on the webserver, create a new database on a separate machine (machine C) dedicated for storing an individual build list. In this case, PROCESS_ORDER can run quicker and cost less disk IO. Therefore, hopefully it can complete within the designed batch window and not affect the online processing during business hours.

To keep the data secure, once all the processing is completed, backup all data onto tape.

The business owner agrees on the approach. During the implementation of the new environment, the IT guy ran into a new problem. Now the batch jobs are divided to run on different machines. There's a synchronization issue, that is, when inter-related jobs are not on the same machine, how do the downflow jobs know their parent job(s) is finished? The IT guy took the time matching approach, that is, defined a timeframe for each step to run. The sequence of the job's execution is as follows:

12:00am to 1:00am: FTP the orders generated during the day from Machine A to Machine C.

1:00am to 1:30am: Machine C populates a build list into the database.

1:30am to 2:00am: Run PROCESS_ORDER on Machine C.

2:00am to 2:15am: MAIL_CONFRIMED_ORDER gets executed from Machine C.

2:15am to 2:30am: Machine C runs PRINT_DAILY_BUILD_LIST.

2:30am to 3:00am: UPDATE_INVENTORY gets triggered by Machine C.

3:00am to 3:30am: Machine B triggers GENERATE_BACKORDER_LIST.

3:30am to 4:00am: Machine B runs MAIL_BACKORDER.

4:00am to 5:00am: Machine D runs RUN_BACKUP to backup data on machines B and C.

In this example, each processing step is spread across different machines and applications, rather than running off a standalone server (refer to the previous diagram). Each step depends on the previous one to finish before it can start, so it can continue on the work based on what the previous step has done. Obviously, there would be a problem if the confirmed order e-mails got sent out before the order data is fully generated. Sometimes the job may take longer to run due to the increased amount of input data or insufficient amount of computer processing power. Therefore, an extra time window needs to be allocated for each job by taking into consideration the worst case scenario to avoid overlap.

The time matching approach can allow a cross-platform and cross-application batch flow to run in its designed order, but challenges remain in the following areas:

With the time matching approach, the entire batch flow will take longer to run due to the time gap between job executions. Child job(s) will not trigger until the scheduled time comes, even if the parent job(s) finished early or at the average finishing time. In extreme cases, a parent job may run over its allocated time, which means the child job(s) will get triggered at the predefined time while the parent job is still running. This can cause a serious failure and may require a data rollback and a reset of the overlapping job(s) to their initial state. As a consequence, the total duration of the batch flow execution will increase, with the risk of running longer than the pre-agreed batch window. This is extremely unfavorable under the current trend, where processing time is increasing and the batch window is shrinking.
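Both effects, idle gaps and overruns, can be quantified with a small sketch. The slots below follow the first three steps of the schedule above, expressed as minutes after midnight; the job labels `FTP_ORDERS` and `POPULATE_BUILD_LIST` and the actual run times are made-up values for illustration.

```python
def analyze_time_matching(slots, actual_minutes):
    """Return (total idle minutes, jobs that overran their fixed slot).

    slots: list of (job, slot_start, slot_end) in minutes after midnight.
    actual_minutes: how long each job actually ran, in the same order.
    """
    idle = 0
    overruns = []
    for (job, start, end), actual in zip(slots, actual_minutes):
        finish = start + actual
        if finish > end:
            overruns.append(job)   # the child job starts while this one still runs
        else:
            idle += end - finish   # time wasted waiting for the next slot
    return idle, overruns

slots = [
    ("FTP_ORDERS", 0, 60),
    ("POPULATE_BUILD_LIST", 60, 90),
    ("PROCESS_ORDER", 90, 120),
]
idle, overruns = analyze_time_matching(slots, actual_minutes=[40, 20, 45])
print(idle, overruns)  # 30 ['PROCESS_ORDER']
```

In this made-up run, 30 minutes are lost to waiting, and yet PROCESS_ORDER still collides with the step scheduled after it.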

In a traditional scenario, the batch window is allocated at night when online activity is low. The system has plenty of time to run the batch jobs and recover from errors before online activity picks up again the next morning. As the Internet became popular, organizations became able to expand their businesses by offering products and services globally. This requires the computer system to be available almost 24 hours a day for processing online requests from different time zones, and therefore leaves very little room for batch processing.

When jobs are running on different platforms, they can be monitored on a per-machine basis only. The user can see which job completed and which job failed, but is unable to see everything as a complete business process flow. Many business processes today require thousands of jobs to complete, and these jobs may be spread across hundreds of machines. It is not practical for operators to track each step of the batch flow by logging on to every machine involved in the processing, not only because this approach is labor intensive, but also because different skill sets are required of the people in charge of batch jobs running in each different environment. It is also difficult to find out what the consequences would be if one job needs to be started late or if some jobs need to be disabled for a given day.

With globalization, it is common to see a business with operations set up in many different countries: sales offices in North America and Europe, manufacturing offices in Asia, and customer support centers in South America. None of these locations operates on its own, and it is more than likely that they need to share large amounts of data. Consequently, there will be business processes that require batch processing in different regions to be executed one after another. Due to the different operating hours and geography, cross-time zone scheduling is extremely hard to achieve with the time-matching approach. It also increases the challenge of batch monitoring and troubleshooting.

In the time-matching approach, if some batch jobs often exceed their allocated time frame, the application owners either have to resolve the long running job problem or delay the next job's start time to allow more execution time for the problematic job. This will ensure that the long running job completes without overlap issues, but will also unnecessarily increase the overall execution time of the batch flow when the problematic job does not overrun. This time gap leaves the system idle and makes batch processing more difficult when the entire batch window is already small.

In a multi-platform environment, each system or application is likely to be managed by an individual team that specializes in its own area. As the batch jobs reside on different machines across departments, it can take hours to track down the failure point. For example, at 2:00am, a reporting job gets triggered and fails immediately. The person in charge of reporting quickly checks the cause of the problem, and discovers that the parent job also failed, at 1:50am. The parent job was a database script that inserts data from CSV files, which were meant to arrive at 1:30am. So the DBA checks with the person in charge of creating the files. It goes on and on, and it may even turn out that the job failure was caused by someone on the other side of the world. By the time they find the original problem, it is already too late for the rerun to complete within the SLA.

Think about it from the maintenance point of view as well: suppose all these failures were caused by a rename of the CSV files. Without seeing the whole picture, the person who made the modification did not know that there is a downflow reporting job, or that many other parties outside his department may rely on these files for further processing.

Batch run reports provide important information for analyzing the behavior of the batch flow. Job execution information collected on each machine may not represent a cross-platform business process because the individual machine is only running a portion of the entire business process. To report on the job execution status of a cross-platform batch flow, we need to collect data from each involved machine and filter out any job information that is not related to the batch flow definition. This process can be complicated and time-consuming and may require modification each time the batch flow is changed.

The business environment does not stay the same all the time. Changes made to the business can dramatically affect how IT works. Think about situations such as company mergers. Without an overall view of the entire batch environment from a business process point of view, and with a lack of standardization and documentation, IT will become an obstacle to the business transition. Even with business events as small as a marketing campaign, batch jobs may take longer than normal to run in order to process the extra amount of data. For example, when a national retail store is open 24 hours a day during the Christmas period, the machine needs more resources to handle the online transactions. With batch jobs residing across many platforms, a lot of manual modifications will be needed to cater to the temporary change.

Costs for computer hardware are falling, but adding more machines and technical staff may not be enough to effectively face the challenges we have talked about so far, and can even complicate the situation further. If the IT components do not work together very well, the business will face serious problems. Just think about suffering from currency exchange rate increases due to a failure to process an order on time, penalties for batch processing missing its service-level agreement, and security risks. IT risks can cost the business a huge amount of profit and even potentially affect the company's share price and public image.

The computer networking technology allows machines to communicate with each other freely. Based on this technology, batch scheduling tools are able to expand their ability to schedule jobs on multiple platforms and provide users with a single point of control instead of running a standalone batch scheduling tool on each individual machine and using the time-matching method to schedule cross-platform batch flow.

During runtime, the centralized scheduling platform examines each job's scheduling criteria to decide which job should run next. Each time, it sends a job execution request to the remote host that was predefined in the selected job's definition. A mechanism on the remote host needs to be established to communicate with the centralized scheduling platform, as well as to handle the job submission request by interacting with its own operating system. Once the job is submitted, the centralized scheduling platform will wait for the response from the remote host and, in the meantime, submit other jobs that meet their scheduling criteria. Upon the completion of each job, the centralized scheduling tool will get an acknowledgment from the remote host and decide what to do next based on the execution outcome of the completed job (for example, rerun the current job or progress to the next job).
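The request and acknowledgment exchange can be illustrated with a deliberately simplified, single-threaded sketch. Each remote host's mechanism is stood in for by a plain Python callable that takes a command and returns a return code; the names `run_flow` and `agents`, the commands, and the stop-on-failure policy are all assumptions, and a real platform would submit other eligible jobs while waiting.

```python
def run_flow(jobs, agents):
    """Submit jobs in order to their hosts and stop at the first failure.

    jobs: list of dicts with "name", "host", and "command".
    agents: maps a host name to a callable(command) -> return code.
    """
    log = []
    for job in jobs:
        # Send the request to the host named in the job definition
        # and wait for its acknowledgment (the return code).
        return_code = agents[job["host"]](job["command"])
        log.append((job["name"], job["host"], return_code))
        if return_code != 0:
            break  # do not trigger child jobs after a failure
    return log

# Stand-in agents: machine-c always succeeds, machine-b always fails.
agents = {"machine-c": lambda command: 0, "machine-b": lambda command: 8}
jobs = [
    {"name": "PROCESS_ORDER", "host": "machine-c", "command": "process_order.sh"},
    {"name": "GENERATE_BACKORDER_LIST", "host": "machine-b", "command": "backorder.sh"},
    {"name": "MAIL_BACKORDER", "host": "machine-b", "command": "mail_backorder.sh"},
]
log = run_flow(jobs, agents)
print(log)
```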

Let's re-visit some of the challenges mentioned earlier that are related to cross-platform batch processing and analyze how to overcome them by using the centralized scheduling approach:

Processing time and resource utilization

Batch monitoring and management

Cross-time zone scheduling

Maintenance and troubleshooting

Reporting

Reacting to changes

The centralized scheduling approach effectively minimizes the time gap between the executions of jobs, thus potentially shortening the batch flow's total execution time and reducing system idle time. Cross-platform jobs are built into a logical job flow according to the predefined dependencies, and the centralized scheduling platform controls the execution of each job by reviewing the status of the job's parent job(s) and the job's own scheduling criteria. In this case, a job can be triggered immediately when its parent job(s) are completed. If the parent job(s) take longer to run, the child job will start later. If the parent job(s) complete early, the child job will also start earlier. This effectively avoids job overrun, that is, the child job will not get triggered unless the centralized scheduling platform has received a completion acknowledgement from the parent job(s).
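A minimal sketch of dependency-driven timing: each job starts at the moment its last parent finishes, so there are no fixed slots to wait for or to overrun. The job labels and durations are made-up values, and the function assumes the dictionary lists parents before their children.

```python
def dependency_driven_finish(jobs):
    """Return the finish time (in minutes) of each job when it starts
    as soon as all of its parents are completed.

    jobs: dict of name -> (duration_minutes, [parent names]),
    listed so that parents appear before their children.
    """
    finish = {}
    for name, (duration, parents) in jobs.items():
        start = max((finish[p] for p in parents), default=0)
        finish[name] = start + duration
    return finish

jobs = {
    "FTP_ORDERS": (40, []),
    "POPULATE_BUILD_LIST": (20, ["FTP_ORDERS"]),
    "PROCESS_ORDER": (45, ["POPULATE_BUILD_LIST"]),
}
print(dependency_driven_finish(jobs))
# {'FTP_ORDERS': 40, 'POPULATE_BUILD_LIST': 60, 'PROCESS_ORDER': 105}
```

With these durations, the three-step chain finishes 105 minutes after it starts, with no idle gaps between the steps.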

We often hear more senior IT people talking about their "good old days": batch operators had a list of all jobs expected to be executed on the day, together with a list of machines where these jobs were located. They had to manually log on to each machine to check the previous job's completion state, then log on to another machine to execute the next job. Centralized scheduling allows users to monitor and manage cross-platform batch jobs from a single point of control. Users no longer need to estimate which job should run next by looking at their spreadsheet, because jobs that belong to a single business process are grouped into a visualized batch flow. Users can see exactly where the execution is up to in the batch flow. Centralized scheduling also provides a uniform job management interface. The people in charge of managing the batch jobs no longer need to have in-depth knowledge of the job's running environment to be able to perform simple tasks such as rerunning a job, delaying a job's execution, or deploying a new job.

The time-matching approach requires the jobs to be defined so that their scheduling times match each other. For example, suppose a job is located in Sydney, Australia (GMT +10), and its child jobs are located in Hong Kong (GMT +8), Bangkok, Thailand (GMT +7), and Los Angeles, USA (GMT -8). If the parent job is set to run between 2pm and 3:30pm Sydney local time, the child jobs need to start at 1:30pm Hong Kong local time, 12:30pm Bangkok local time, and 9:30pm (on the previous day) Los Angeles local time. The schedule of each child job needs to be changed every time the parent job's scheduling is changed or when daylight saving time begins or ends. It is much easier to manage cross-time zone batch flows when job scheduling does not rely on the time-matching approach. Jobs without additional time requirements are defined to run immediately once the parent jobs are completed, regardless of which machine they are on and what time zone the machine is in.
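The arithmetic behind a cross-time zone schedule can be checked with Python's standard `datetime` module. This sketch uses fixed standard-time UTC offsets (Sydney +10, Hong Kong +8, Bangkok +7, Los Angeles -8) and an arbitrary date; these values and the name `local_times` are assumptions of the example, and daylight saving time is deliberately ignored.

```python
from datetime import datetime, timedelta, timezone

# Assumed standard-time offsets from GMT, in hours (daylight saving ignored).
OFFSETS = {"Sydney": 10, "Hong Kong": 8, "Bangkok": 7, "Los Angeles": -8}

def local_times(parent_end, parent_city):
    """Convert the parent job's local end time into each other city's local time."""
    parent_tz = timezone(timedelta(hours=OFFSETS[parent_city]))
    instant = parent_end.replace(tzinfo=parent_tz)
    return {
        city: instant.astimezone(timezone(timedelta(hours=hours))).strftime("%Y-%m-%d %H:%M")
        for city, hours in OFFSETS.items()
        if city != parent_city
    }

# Parent job ends at 3:30pm Sydney local time.
result = local_times(datetime(2024, 6, 10, 15, 30), "Sydney")
for city, when in result.items():
    print(city, when)
```

Every one of these values would have to be recalculated by hand whenever the parent's schedule or a region's daylight saving rule changes, which is exactly the maintenance burden of time matching.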

When an exception occurs, a centralized batch scheduling platform allows users to clearly see where the problematic jobs are located in the business process. Therefore, it is easier for them to estimate how it is impacting the downflow jobs. Operators who manage the batch processing can easily take action against the problematic jobs from the central management console, without needing to log on to the job's machine as the job owner to perform tasks such as rerunning or killing the job. In case a failure needs to be handled by the application owner, the operators can easily identify the job's owner and escalation instructions by looking up the job's run book, which can also be recorded within the scheduling platform. From a maintenance point of view, before a job's scheduling criteria (such as its execution time) are modified, the user can clearly see the job's child jobs from the central management console to find out the impact of such a change.
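Once all dependencies are recorded in one place, finding the impact of a failure or a change becomes a simple graph walk. The sketch below lists every job that directly or indirectly depends on a given job; the job names and the `children` mapping are hypothetical.

```python
from collections import deque

def downstream_jobs(children, job):
    """Return every job that directly or indirectly depends on `job`,
    in breadth-first order."""
    seen = set()
    order = []
    queue = deque([job])
    while queue:
        current = queue.popleft()
        for child in children.get(current, []):
            if child not in seen:
                seen.add(child)
                order.append(child)
                queue.append(child)
    return order

# Hypothetical flow: a CSV file feeds a database load, which feeds reports.
children = {
    "CREATE_CSV": ["LOAD_DB"],
    "LOAD_DB": ["REPORT", "ARCHIVE"],
    "REPORT": ["MAIL_REPORT"],
}
print(downstream_jobs(children, "CREATE_CSV"))
# ['LOAD_DB', 'REPORT', 'ARCHIVE', 'MAIL_REPORT']
```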

As jobs are managed and scheduled from a central location, it is easier for the centralized scheduling platform to capture each job's scheduling details, such as its start time, end time, duration, and execution outcome. The user can extract the information into a report format to analyze the batch execution from the business process point of view. It saves the need for collecting data from each involved machine and filtering the data against the batch flow definition.
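Because the execution records already sit in one place, a per-flow report reduces to a filter and a sum. The record fields, the flow names, and the function `flow_report` below are illustrative assumptions rather than any product's report format.

```python
from datetime import datetime

def flow_report(runs, flow):
    """Summarize the execution records that belong to one batch flow."""
    records = [r for r in runs if r["flow"] == flow]
    total = sum((r["end"] - r["start"]).total_seconds() for r in records) / 60
    failed = [r["job"] for r in records if r["outcome"] != "ok"]
    return {"jobs": len(records), "total_minutes": total, "failed": failed}

# Hypothetical records captured centrally, regardless of the job's host.
runs = [
    {"flow": "ORDERS", "job": "PROCESS_ORDER", "outcome": "ok",
     "start": datetime(2024, 1, 15, 1, 30), "end": datetime(2024, 1, 15, 2, 10)},
    {"flow": "ORDERS", "job": "UPDATE_INVENTORY", "outcome": "failed",
     "start": datetime(2024, 1, 15, 2, 10), "end": datetime(2024, 1, 15, 2, 25)},
    {"flow": "BACKUP", "job": "RUN_BACKUP", "outcome": "ok",
     "start": datetime(2024, 1, 15, 4, 0), "end": datetime(2024, 1, 15, 5, 0)},
]
print(flow_report(runs, "ORDERS"))
# {'jobs': 2, 'total_minutes': 55.0, 'failed': ['UPDATE_INVENTORY']}
```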

A centralized batch scheduling approach provides IT with the ability to react to business changes. In a centralized batch scheduling environment, batch jobs are managed from a single location and are more likely to follow the same procedure for deployment, monitoring, troubleshooting, maintenance, retirement, and documentation. During a company merger, it is much easier to consolidate two batch platforms into one than to deal with each machine on a per-application basis.

The centralized scheduling approach speeds up batch processing and improves computing resource utilization by overcoming the cross platform and multi-time zone challenge in today's batch processing. From the user point of view, batch jobs are managed according to business processes rather than focusing on job execution within each individual machine or application. As a result, the cost and risk to the business is reduced. IT itself becomes more flexible and able to react to the shifting of business requirements, helping to improve the agility of the business.

A centralized enterprise scheduling platform would have the following common characteristics (a given commercial product may not have all of them, and may offer others beyond this list):

It can schedule jobs on different system platforms and provide a centralized GUI monitoring and management console. Such system platforms may include mainframe, AS/400, Tandem, Unix, Linux, and Microsoft Windows.

It can schedule jobs based on their scheduling criteria, such as date and time.

It has the ability to execute job flows according to predefined inter-job logic, regardless of the operating system each job runs on.

It is able to automatically carry out the next action according to the current job's execution status. The job's execution status can be its operating system return code or a particular part of its job output (for example, an error message).

It has the ability to decide which job to schedule by reference to its priority and the current resource utilization (that is, it limits the number of concurrently running jobs and allows jobs with higher priorities to be triggered first).

Handle event-based real-time scheduling.

Some degree of integration with applications (for example, ERP, Finance applications).

Automated notification when a job fails or a pre-defined event occurs.

Security and auditing features.

Today batch scheduling has evolved from single platform automation to cross-platform and cross-application scheduling. The rich features provided by the tools have effectively reduced the complexity of batch monitoring and management. It seems that everything needed for batch scheduling is available and that users are satisfied. However, history tells us that nothing stays the same in IT; technology needs to grow and improve, otherwise it gets replaced by newer and better technologies, and batch scheduling is no exception.

Note

Gartner's Magic Quadrant for Job Scheduling 2009 edition stated right at the beginning: "Job scheduling, a mature market, is undergoing a transformation toward IT Workload Automation Broker technology."

Reference: Gartner's Magic Quadrant for Job Scheduling 2009.

Based on what we know so far, in this section we will look at where batch scheduling is going in the near future.

As discussed earlier in this chapter, for all these years, batch scheduling was primarily static scheduling. Even in an advanced centralized batch scheduling platform, jobs are set to run on a certain day according to the calendar definition, during a certain time, and on a predefined destination host. This is absolutely fine if the computing resources are available for batch processing every time and the workload stays within capacity. But that seems too good to be true given the trend of shrinking batch windows and increasing demand for event-triggered scheduling.

During a defined batch window, machines are working extremely hard to run batch jobs one after another. It is common to see these machines fully utilized during the entire batch window, but still unable to complete the processing on time. At the same time, there are other machines that are not part of the processing and are simply sitting idle. Even if these idle machines are allowed to be used for temporary batch job execution, it is not worth the effort to reconfigure the batch jobs to run on these hosts as a one-off.

Recall what we have discussed about event-triggered scheduling. With this type of scheduling, job flows wait for an external event to happen by listening on the event interface, such as a file's arrival or a web service request. Once the request arrives, the job flow is triggered on a predefined host. These job flows are normally defined to allow some degree of parallel processing so that multiple user requests can be processed at the same time. This feature is largely limited by the resources of the physical machine. In some extreme cases, a large number of requests are triggered within a short period but cannot be processed straightaway due to limited computing resources, so new requests have to queue until the processing of previous requests completes. This issue ultimately degrades the end-user experience and causes business losses.

Simply allocating more computing resources to handle the additional workload is not the most effective solution for this problem, because:

Most of these workload peaks or activity spikes are unplanned. It is neither cost-efficient nor environmentally friendly to have the additional IT resources sit idle during off-peak hours.

Due to the static nature of batch jobs, routing job execution onto additional processing nodes requires modification to the job definition prior to the job's runtime. For a large number of jobs, this is not practical, and human errors may occur during frequent job modifications.

It is hard to pre-allocate the workload evenly among all available processing nodes, especially for event-based scheduling. Dispatching workload prior to execution can be an option, but requires some degree of human decision, which defeats the original purpose of batch processing automation.

Adding more computing resources will complicate the already overwhelmed IT environment even further.

More computing resources may temporarily accommodate the growing batch processing demand, but in the long term they will increase IT's total cost of ownership (TCO). The business will also get a lower return on investment (ROI) from IT due to the expense of the additional computing resources and the increasing number of technical staff required to manage the environment.

IT Workload Automation Broker (ITWAB) technology was originally introduced by Gartner in 2005. It was born to overcome the static nature of job scheduling by allowing batch jobs to be managed as workloads according to business policies and service agreements. By following this standard, batch scheduling should become more flexible and resource aware, so that it can take advantage of virtualization technology and dynamically assign workload based on runtime resource utilization. Batch processing should also build on existing event-triggered batch processing by adopting the service-oriented architecture (SOA) approach for reusability and by offering a standard integration interface for external systems.

Virtualization technology has become common in organizations' IT environments in recent years. With this technology, users are able to convert underutilized physical machines into virtual machines and consolidate them onto one or more larger physical servers. This technology transformed the traditional way of running and managing IT. It improves the utilization of existing hardware resources, while at the same time providing more flexibility and saving physical space in the datacenter. Because virtual machine images can be easily replicated and redistributed, system administrators are able to perform system maintenance without interrupting production by simply shifting the running virtual machine onto another physical machine.

Cloud computing took virtualization technology one step further by adding the ability to dynamically manage virtual resources according to real-time demand. When IT moved towards cloud computing, the workload automation approach enabled the batch scheduling tool to tap into the "unlimited supply" of computing resources. With this approach, instead of defining jobs to run on a static host, jobs are grouped into a workload that can be assigned to any job execution node without the need to modify the original job definition. By doing so, the batch scheduling platform can freely distribute processing work onto virtual resources in the cloud according to runtime workload. When existing job execution nodes are hitting their usage limit, the scheduling tool can simply request new virtual resources from the cloud and route the additional workload to them. For time-based batch processing, this approach is intended to ensure that sufficient computing resources are available for the batch processing to complete within its batch window. For event-based batch processing, batch requests generated by random external events can be processed immediately without queuing.
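The routing idea above can be sketched in a few lines. This is a minimal illustration and not how any particular scheduling product works: the `Node` and `Dispatcher` classes, the `provision` callback that stands in for a request to a cloud management tool, and the utilization `limit` are all assumptions made for this example.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    capacity: int  # maximum number of jobs this node may run at once
    running: list = field(default_factory=list)

    @property
    def utilization(self):
        return len(self.running) / self.capacity


class Dispatcher:
    """Routes each job to the least utilized node at dispatch time."""

    def __init__(self, nodes, provision, limit=1.0):
        self.nodes = list(nodes)
        self.provision = provision  # hypothetical hook: returns a new Node
        self.limit = limit          # utilization at which a node is "full"

    def dispatch(self, job):
        # The job definition names no host; the node is chosen at runtime.
        node = min(self.nodes, key=lambda n: n.utilization, default=None)
        if node is None or node.utilization >= self.limit:
            # Every node is at its limit: request a new virtual resource.
            node = self.provision(len(self.nodes))
            self.nodes.append(node)
        node.running.append(job)
        return node.name
```

The point of the design is that the job itself carries no host name. The decision is deferred to dispatch time, so adding capacity never requires touching a job definition.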

The benefit is significant when batch processing needs to handle unexpected workload peaks or unplanned activity spikes, because computing resources are no longer a bottleneck. IT people are also able to manage processing spikes caused by temporary business changes without physically rearranging computing resources.

Energy saving is another major benefit of the workload automation approach. During off-peak time, batch processing workload is automatically routed and consolidated onto fewer machines. The unutilized virtual resources are released back to the cloud. The cloud management tool then decides how to consolidate the remaining running virtual machines onto fewer physical servers and shuts down the physical machines that are left idle. With traditional physical resources, by contrast, it is not practical to shut machines down each time utilization is low, and once a machine's role is defined, it cannot easily be reused for other purposes.

In order to achieve this so-called end-to-end workload automation, the scheduling tool needs to be aware of real-time resource utilization on each job execution host and should be able to integrate with the cloud management tool.

In the earlier part of this chapter, we discussed whether or not a real-time system could completely replace batch processing. Batch processing can justify its existence because of its high performance when it comes to processing large amounts of data. There has always been a gap between batch processing and real-time processing. Event-triggered scheduling was designed to bridge this gap so that batch processing can be triggered by real-time requests. But due to its static nature, event-triggered scheduling is struggling to keep up with today's dynamic IT demands.

Organizations often see batch processing's static nature as their biggest roadblock when integrating with real-time systems, because real-time systems deal directly with business rules, and business rules change all the time. As a workaround, they try to avoid batch processing as much as possible, implementing the entire solution on the real-time system and achieving cross-platform processing by wrapping processing steps in a standard interface such as web services. Such a system may well be flexible enough to allow rapid changes, but users are more likely to suffer poor performance when it comes to processing large amounts of data at once.

In the workload automation approach, batch processing is defined to be policy-driven, becoming a dynamic component of an application to serve real-time business needs. By adopting the service-oriented architecture (SOA) design principles, a batch flow can be triggered on-demand as a single reusable service and loosely coupled with its consumers.

Note

SOA is a flexible set of design principles used during the phases of system development and integration in computing. A system based on SOA architecture will provide a loosely-coupled suite of services that can be used within multiple separate systems from several business domains.

Reference: Wikipedia, Service-oriented architecture.

With good batch design practice, programs for individual jobs can be reused by other business processes. For example, convert an FTP file transfer processing method into a standalone job, and then convert its hardcoded source and destination values into runtime input arguments of the job. This allows the program to be reused in different file transfer scenarios. The same idea can also be applied to a data backup process. Once it is converted into a job, it can be used to back up data from different sources or to perform the same backup in different scenarios. This approach can effectively reduce the number of jobs with duplicated functionality that need to be defined and managed.
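As an illustration of turning hardcoded values into runtime arguments, the following sketch wraps an FTP upload in a small command-line program using Python's standard `argparse` and `ftplib` modules. The option names (`--host`, `--source`, `--destination`, and so on) are assumptions chosen for this example, and a real job would take the password from a secure store rather than from the command line.

```python
import argparse
from ftplib import FTP


def build_parser():
    """Define the job's runtime arguments instead of hardcoding them."""
    parser = argparse.ArgumentParser(description="Reusable file transfer job")
    parser.add_argument("--host", required=True, help="FTP server name")
    parser.add_argument("--user", default="anonymous")
    parser.add_argument("--password", default="")
    parser.add_argument("--source", required=True, help="local file to send")
    parser.add_argument("--destination", required=True, help="remote path")
    return parser


def transfer(host, user, password, source, destination):
    """Upload one local file to the given path on the FTP server."""
    with FTP(host) as ftp, open(source, "rb") as handle:
        ftp.login(user=user, passwd=password)
        ftp.storbinary(f"STOR {destination}", handle)


def main(argv=None):
    """Entry point: parse the arguments, then run the transfer."""
    args = build_parser().parse_args(argv)
    transfer(args.host, args.user, args.password, args.source, args.destination)
    return 0
```

The same program can then be defined once and scheduled many times, with each job definition supplying its own argument values.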

By defining batch flows as reusable services, the details of batch processing are encapsulated. A request can be sent through web services or a message queue to trigger the batch processing when needed. The batch scheduler then runs the batch processing as a black box and returns the desired outcome to the requester.
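The request and reply pattern described above can be sketched with an in-process queue standing in for a real message queue. Everything named here is hypothetical: the `FLOWS` registry, the `daily_totals` flow, and the `serve` and `call_flow` functions exist only for this illustration, and a real scheduler would run far more than a single function per request.

```python
import queue

# Hypothetical registry of batch flows exposed as services.
FLOWS = {
    "daily_totals": lambda params: sum(params["amounts"]),
}


def serve(requests):
    """Consume requests until a None message arrives.

    Each message is a tuple (flow_name, params, reply_to), where
    reply_to is the queue on which the requester expects the outcome.
    """
    while True:
        message = requests.get()
        if message is None:
            break
        flow_name, params, reply_to = message
        try:
            reply_to.put(("ok", FLOWS[flow_name](params)))
        except Exception as exc:
            # Report the failure to the requester instead of crashing.
            reply_to.put(("error", repr(exc)))


def call_flow(requests, flow_name, params, timeout=5):
    """Send one request and block until the outcome comes back."""
    reply_to = queue.Queue(maxsize=1)
    requests.put((flow_name, params, reply_to))
    return reply_to.get(timeout=timeout)
```

The requester only knows the flow's name and parameters. To use the sketch, `serve` would run in a background thread (for example, one started with `threading.Thread`) while requesters call `call_flow`.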

With minimal changes, batch processing built this way can be reused in a number of real-time processing scenarios. System designers do not need to carry the risk of reinventing the wheel, and at the same time the processing performance is maintained.

Well done. We have completed the first chapter! This has been an interesting trip through history back to the future, that is, from the beginning of batch processing all the way to today's latest technology. As people always say, for everything there is a reason, and the best way to understand its purpose is to understand its history. By now, you should have a good understanding of the concept of batch processing and centralized enterprise scheduling: what the challenges were and why they still exist today. On top of that, we also had an overall view of the latest concept, workload automation.

Later on in this book, you will see how these challenges are addressed and how the ideal workload automation concept is achieved in the amazing features of Control-M. Stay tuned! We are just about to let the real game begin!