High-Performance Computing Fundamentals

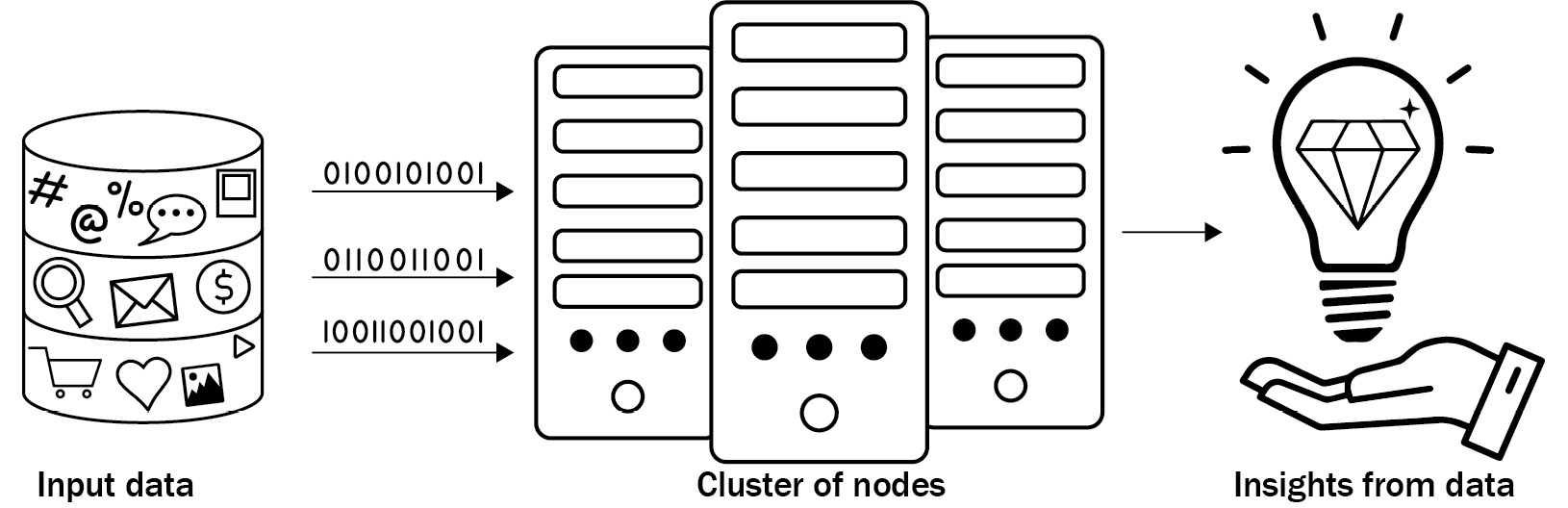

High-Performance Computing (HPC) impacts every aspect of your life, from your morning coffee to driving to the office, checking the weather forecast, your vaccinations, the movies that you watch, the flights that you take, the games that you play, and much more. Many of our actions leave a digital footprint, leading to the generation of massive amounts of data. In order to process such data, we need a large amount of processing power. HPC, also known as accelerated computing, aggregates the computing power of a cluster of nodes and divides the work among various interconnected processors to achieve much higher performance than a single computer or machine could deliver, as shown in Figure 1.1. This helps in solving complex scientific and engineering problems and in running critical business applications such as drug discovery, flight simulations, supply chain optimization, financial risk analysis, and so on:

Figure 1.1 – HPC
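To make the idea of dividing work among processors concrete, here is a minimal sketch of the scatter-and-gather pattern on a single machine. It assumes a toy workload (summing squares). The function names `split_range`, `partial_sum_of_squares`, and `scatter_gather` are illustrative, and Python threads merely stand in for the nodes of a cluster. A real HPC job would typically distribute the chunks across separate processes or machines.

```python
from concurrent.futures import ThreadPoolExecutor


def split_range(n, parts):
    """Split the integers 0..n-1 into `parts` contiguous, near-equal chunks."""
    base, extra = divmod(n, parts)
    chunks, start = [], 0
    for i in range(parts):
        size = base + (1 if i < extra else 0)
        chunks.append(range(start, start + size))
        start += size
    return chunks


def partial_sum_of_squares(chunk):
    """Work done by one (hypothetical) worker on its share of the data."""
    return sum(x * x for x in chunk)


def scatter_gather(n, workers):
    """Scatter chunks to workers, then gather and combine the partial results."""
    chunks = split_range(n, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(partial_sum_of_squares, chunks))
    return sum(partials)


if __name__ == "__main__":
    print(scatter_gather(1_000_000, workers=4))
```

The combined result is the same as computing the sum on one worker. What changes on a real cluster is that each chunk is processed at the same time on different hardware.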

For example, drug discovery is a data-intensive process, which involves computationally heavy calculations to simulate how a virus protein binds with a human protein. This is an extremely expensive process and may take weeks or months to finish. With the unification of Machine Learning (ML) with accelerated computing, researchers can simulate drug interactions with proteins with higher speed and accuracy. This leads to faster experimentation and significantly reduces the time to market.

In this chapter, we will learn the fundamentals and importance of HPC, followed by technological advancements in the area. We will understand the constraints and how developers can benefit from the elasticity of the cloud while still optimizing costs to innovate faster to gain a competitive business advantage.

In this chapter, we will cover the following topics:

- Why do we need HPC?

- Limitations of on-premises HPC

- Benefits of doing HPC on the cloud

- Driving innovation across industries with HPC

Why do we need HPC?

According to Statista, the volume of data created globally is forecast to keep growing rapidly; it reached 64.2 zettabytes in 2020. By 2025, the volume of data is estimated to grow to more than 180 zettabytes. Due to the COVID-19 pandemic, data growth in 2020 reached a new high as more people were learning online and working remotely from home. As data continuously increases, so does the need to analyze and process it. This is where HPC is a useful mechanism. It helps organizations to think beyond their existing capabilities and explore possibilities with advanced computing technologies. Today, HPC applications, which were once confined to large enterprises and academia, are trending across a wide range of industries and domains. Some of these include material sciences, manufacturing, product quality improvement, genomics, numerical optimization, computational fluid dynamics, and many more. The list of applications for HPC will continue to grow, as cloud infrastructure is making it accessible to more organizations irrespective of their size, while still optimizing cost, helping them to innovate faster and gain a competitive advantage.
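As a quick check on what these figures imply, the following sketch computes the compound annual growth rate between the two data points quoted above. The function name `compound_annual_growth_rate` is illustrative. Because the 2025 estimate is stated as "more than 180 zettabytes", the result should be read as a lower bound.

```python
def compound_annual_growth_rate(start_value, end_value, years):
    """Return the constant yearly growth rate that turns start_value into end_value."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted in the text (zettabytes); 180 is a lower bound for 2025.
volume_2020 = 64.2
volume_2025 = 180.0

rate = compound_annual_growth_rate(volume_2020, volume_2025, years=2025 - 2020)
print(f"Implied growth rate: at least {rate:.1%} per year")
```

This works out to roughly 23% per year, which means the volume of data nearly triples over the five-year period.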

Before we take a deeper look into doing HPC on the cloud, let’s understand the limitations of running HPC applications on-premises, and how we can overcome them by using specialized HPC services provided by the cloud.

Limitations of on-premises HPC

HPC applications are often based on complex models trained on a large amount of data, which require high-performing hardware such as Graphics Processing Units (GPUs) and software for distributing the workload among different machines. Some applications may need parallel processing while others may require low-latency and high-throughput networking. Similarly, applications such as gaming and video analysis may need performance acceleration using a fast input/output (I/O) subsystem and GPUs. Catering to all of the different types of HPC applications on-premises might be daunting in terms of cost and maintenance.

Some of the well-known challenges include, but are not limited to, the following:

- High upfront capital investment

- Long procurement cycles

- Maintaining the infrastructure over its life cycle

- Technology refreshes

- Forecasting the annual budget and capacity requirement

Due to the above-mentioned constraints, planning for an HPC system can be a grueling process, and its Return On Investment (ROI) might be difficult to justify. This can be a barrier to innovation, with slow growth, reduced efficiency, lost opportunities, and limited scalability and elasticity. Let’s understand the impact of each of these in detail.

Barrier to innovation

The constraints of on-premises infrastructure can limit the system design, which will be more focused on the availability of the hardware instead of the business use case. You might not consider some new ideas if they are not supported by the existing infrastructure, thus obstructing your creativity and hindering innovation within the organization.

Reduced efficiency

Once you finish developing the various components of the system, you might have to wait in long prioritized queues to test your jobs. The wait might take weeks, even if a job takes only a few hours to run. On-premises infrastructure is designed to maximize the utilization of expensive hardware, often resulting in very convoluted policies for prioritizing the execution of jobs, thus decreasing your productivity and ability to innovate.

Lost opportunities

In order to take full advantage of the latest technology, organizations have to refresh their hardware. Earlier, the typical refresh cycle of three years was enough to stay current and meet the demands of HPC workloads. However, due to fast technological advancements and a faster pace of innovation, organizations need to refresh their infrastructure more often. Otherwise, falling behind might have a larger downstream business impact in terms of revenue. For example, technologies such as Artificial Intelligence (AI), ML, data visualization, risk analysis of financial markets, and so on, are pushing the limits of on-premises infrastructure. Moreover, due to the advent of the cloud, a lot of these technologies are cloud native and deliver higher performance on large datasets when running in the cloud, especially with workloads that use transient data.

Limited scalability and elasticity

HPC applications rely heavily on infrastructure elements such as containers, GPUs, and serverless technologies, which are not readily available in an on-premises environment and often have a long procurement and budget approval process. Moreover, maintaining these environments, making sure they are fully utilized, and even upgrading the OS or software packages require skills and dedicated resources. Deploying different types of HPC applications on the same hardware is very limiting in terms of scalability and flexibility and does not provide you with the right tools for the job.

Now that we understand the limitations of doing HPC on-premises, let’s see how we can overcome them by running HPC workloads on the cloud.

Benefits of doing HPC on the cloud

With virtually unlimited capacity on the cloud, you can move beyond the constraints of on-premises HPC. You can reimagine new approaches based on the business use case, experiment faster, and gain insights from large amounts of data, without the need for costly on-premises upgrades and long procurement cycles. You can run complex simulations and deep learning models in the cloud and quickly move from idea to market using scalable compute capacity, high-performance storage, and high-throughput networking. In summary, it enables you to drive innovation, collaborate among distributed teams, improve operational efficiency, and optimize performance and cost. Let’s take a deeper look into each of these benefits.

Drives innovation

Moving HPC workloads to the cloud helps you break barriers to innovation and opens the door to unlimited possibilities. You can quickly fail forward, try out thousands of experiments, and make business decisions based on data. The benefit that I really like is that, once you solve the problem, it remains solved and you don’t have to revisit it after a system upgrade or a technology refresh. It eliminates reworking and the maintenance of hardware, lets you focus on the business use case, and enables you to quickly design, develop, and test new products. The elasticity offered by the cloud allows you to grow and shrink the infrastructure as per the requirements. Additionally, cloud-based services offer native features, which remove the heavy lifting and let you adopt tested and verified HPC applications, without having to write and manage all the utility libraries on your own.

Enables secure collaboration among distributed teams

HPC workloads on the cloud allow you to share designs, data, visualizations, and other artifacts globally with your teams, without the need to duplicate or proliferate sensitive data. For example, building a digital twin (a real-time digital counterpart of a physical object) can help in predictive maintenance. It captures the state of the object in real time, and monitors and diagnoses the object (asset) to optimize its performance and utilization. Building a digital twin requires a cross-team skill set, often spread across remote locations, to capture data from various IoT sensors, perform extensive what-if analysis, and meticulously build a simulation model that accurately represents the physical object. The cloud provides a collaboration platform where different teams can interact with a simulation model in near real time, without moving or copying data to different locations, while ensuring compliance with rapidly changing industry regulations. Moreover, you can use native features and services offered by the cloud, for example, AWS IoT TwinMaker, which can use the existing data from multiple sources, create virtual replicas of physical systems, and combine 3D models to give you a holistic view of your operations faster and with less effort. With the broad global presence of HPC technologies on the cloud, you can work together with your remote teams across different geographies without trading off security and cost.

Amplifies operational efficiency

Operational efficiency means that you are able to support the development and execution of workloads, gain insights, and continuously improve the processes that are supporting your applications. The design principles and best practices include automating processes, making frequent and reversible changes, refining your operations frequently, and being able to anticipate and recover from failures. Having your HPC applications on the cloud enables you to do that, as you can version control your infrastructure as code, similar to your application code, and integrate it with your Continuous Integration and Continuous Delivery (CI/CD) pipelines. Additionally, with on-demand access to virtually unlimited compute capacity, you will no longer have to wait in long queues for your jobs to run. You can skip the wait, pick the right tools for the job, and focus on solving business-critical problems.

Optimizes performance

Performance optimization involves the ability to use resources efficiently and to maintain that efficiency as the application changes or evolves. Some of the best practices include making the implementation easier for your team, using serverless architectures where possible, and being able to experiment faster. For example, developing ML models and integrating them into your application requires special expertise, a need that can be alleviated by using out-of-the-box models provided by cloud vendors, such as services in the AI and ML stack by AWS. Moreover, you can leverage the compute, storage, and networking services specially designed for HPC and eliminate long procurement cycles for specialized hardware. You can quickly carry out benchmarking or load testing and use that data to optimize your workloads without worrying about cost, as you only pay for the amount of time you are using the resources on the cloud. We will understand this concept more in Chapter 5, Data Analysis, and Chapter 6, Distributed Training of Machine Learning Models.

Optimizes cost

Cost optimization is a continuous process of monitoring and improving resource utilization over an application’s life cycle. By adopting the pay-as-you-go consumption model and increasing or decreasing the usage depending on the business needs, you can achieve potential cost savings. You can quickly commission and decommission HPC clusters in minutes, instead of days or weeks. This lets you gain access to resources rapidly, as and when needed. You can measure the overall efficiency by comparing the business value achieved with the cost of delivery. With this data, you can make informed decisions and understand the gains from increasing the application’s functionality and reducing cost.
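The pay-as-you-go reasoning can be sketched with a few lines of arithmetic. Every number below is a hypothetical placeholder rather than a real price, and the helper names (`on_demand_cost`, `owned_cost_per_year`, `efficiency`) are illustrative. The sketch assumes a bursty workload that needs 100 nodes for only 200 hours a year.

```python
def on_demand_cost(nodes, hours, hourly_rate):
    """Pay-as-you-go: you are billed only for the node-hours actually used."""
    return nodes * hours * hourly_rate


def owned_cost_per_year(purchase_price, lifetime_years, yearly_upkeep):
    """Owned cluster: amortized purchase price plus upkeep, whether used or idle."""
    return purchase_price / lifetime_years + yearly_upkeep


def efficiency(business_value, delivery_cost):
    """Business value achieved per unit of delivery cost."""
    return business_value / delivery_cost


# Hypothetical inputs, chosen only to illustrate the calculation.
NODES = 100
HOURLY_RATE = 3.0            # assumed price per node-hour
BUSINESS_VALUE = 900_000     # assumed yearly value delivered by the workload

cloud = on_demand_cost(NODES, hours=200, hourly_rate=HOURLY_RATE)
owned = owned_cost_per_year(purchase_price=1_500_000, lifetime_years=3,
                            yearly_upkeep=100_000)

# Yearly usage above which owning would become cheaper than paying on demand.
break_even_hours = owned / (NODES * HOURLY_RATE)

print(f"Cloud: {cloud:,.0f}  Owned: {owned:,.0f}")
print(f"Efficiency (cloud): {efficiency(BUSINESS_VALUE, cloud):.1f}")
print(f"Efficiency (owned): {efficiency(BUSINESS_VALUE, owned):.1f}")
print(f"Break-even usage: {break_even_hours:,.0f} hours per year")
```

With these made-up inputs, the on-demand option is far cheaper because the cluster would sit idle most of the year. The break-even figure shows the other side of the trade-off: at sustained high utilization, the comparison can tip the other way, which is why the monitoring has to be continuous.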

Running HPC in the cloud helps you overcome the limitations associated with traditional on-premises infrastructure: fixed capacity, long procurement cycles, technology obsolescence, high upfront capital investment, maintaining the hardware, and applying regular Operating System (OS) and software updates. The cloud gives you virtually unlimited HPC capacity, with the latest technology to promote innovation, which helps you design your architecture based on business needs instead of available hardware, minimizes the need for job queues, and improves operational and performance efficiency while still optimizing cost.

Next, let’s see how different industries such as Autonomous Vehicles (AVs), manufacturing, media and entertainment, life sciences, and financial services are driving innovation with HPC workloads.

Driving innovation across industries with HPC

Every industry and type of HPC application poses different kinds of challenges. The HPC solutions provided by cloud vendors such as AWS help companies of all sizes address these challenges, which has led to emerging HPC applications such as reinforcement learning, digital twins, supply chain optimization, and AVs.

Let’s take a look at some of the use cases in life sciences and healthcare, AVs, and supply chain optimization.

Life sciences and healthcare

In the life sciences and healthcare domain, a large amount of sensitive and meaningful data is captured almost every minute of the day. Using HPC technology, we can harness this data to gain meaningful insights into critical diseases and save lives by reducing the time taken for testing lab samples, drug discovery, and much more, while meeting the core security and compliance requirements.

The following are some of the emerging applications in the healthcare and life sciences domain.

Genomics

You can use cloud services provided by AWS to store and share genomic data securely, which helps you build and run predictive or real-time applications to accelerate the journey from genomic data to genomic insights. This helps to reduce data processing times significantly and perform causal analysis of critical diseases such as cancer and Alzheimer’s.

Imaging

Using computer vision and data integration services, you can elevate image analysis and facilitate long-term data retention. For example, by using ML to analyze MRI or X-ray scans, radiology companies can improve operational efficiency and quickly generate lab reports for their patients. Some of the technologies provided by AWS for imaging include Amazon EC2 GPU instances, AWS Batch, AWS ParallelCluster, AWS DataSync, and Amazon SageMaker, which we will discuss in detail in subsequent chapters.

Computational chemistry and structure-based drug design

Combining state-of-the-art deep learning models for protein classification, advancements in protein structure solutions, and algorithms for describing 3D molecular models with HPC computing resources allows you to scale up and drastically reduce the time to market. For example, in a project that Novartis ran on the AWS cloud, they were able to screen 10 million compounds against a common cancer target in less than a week. Based on their internal calculations, performing a similar experiment in-house would have required an investment of about $40 million. By running this experiment on the cloud using AWS services and features, they were able to use their 39 years of computational chemistry data and knowledge. Moreover, it only took 9 hours and $4,232 to conduct the experiment, hence increasing their pace of innovation and experimentation. They successfully identified three out of the ten million compounds that were screened.
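To put the figures from the Novartis example in perspective, the short calculation below derives the cost per compound, the screening throughput, and the ratio between the in-house estimate and the cloud run. It uses only the numbers quoted above, and the variable names are illustrative. Note that the ratio compares an estimated infrastructure investment with the cost of a single run, so it is indicative rather than a like-for-like comparison.

```python
# Figures quoted in the text above.
compounds_screened = 10_000_000
run_hours = 9
cloud_cost_usd = 4_232
in_house_estimate_usd = 40_000_000

cost_per_compound = cloud_cost_usd / compounds_screened
throughput_per_second = compounds_screened / (run_hours * 3600)
cost_ratio = in_house_estimate_usd / cloud_cost_usd

print(f"Cost per compound: ${cost_per_compound:.6f}")
print(f"Throughput: {throughput_per_second:.0f} compounds per second")
print(f"In-house estimate vs. cloud run: about {cost_ratio:,.0f}x")
```

In other words, each compound cost a small fraction of a cent to screen, at a rate of roughly 300 compounds per second.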

Now that we understand some of the applications in the life sciences and healthcare domain, let us discuss how the automobile and transport industry is using HPC for building AVs.

AVs

Advances in deep learning techniques such as reinforcement learning, object detection, and image segmentation, as well as advancements in compute technology and in deploying models on edge devices, have paved the way for AVs. In order to design and build an AV, all the components of the system have to work in tandem, including planning, perception, and control systems. It also requires collecting and processing massive amounts of data and using it to create a feedback loop, so that vehicles can adjust their state in real time based on the changing traffic conditions on the road. This entails having high I/O performance, networking, specialized hardware coprocessors such as GPUs or Field Programmable Gate Arrays (FPGAs), as well as analytics and deep learning frameworks. Moreover, before an AV can even start testing on actual roads, it has to undergo millions of miles of simulation to demonstrate safety performance. Because of the high dimensionality of the environment, this is complex and time-consuming. By using the AWS cloud’s virtually unlimited compute and storage capacity, support for advanced deep learning frameworks, and purpose-built services, you can drive a faster time to market. For example, in 2017, Lyft, an American transportation company, launched its AV division. To enhance the performance and safety of its system, it uses petabytes of data collected from its AV fleet to execute millions of simulations every year, which requires a lot of compute power. To run these simulations at a lower cost, the company decided to take advantage of unused compute capacity on the AWS cloud by using Amazon EC2 Spot Instances, which also helped it increase its capacity to run simulations at this magnitude.
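Spare capacity such as Spot Instances can be reclaimed by the provider while a job is running, so long-running simulations are usually written to tolerate interruptions. The sketch below illustrates one common way to do that, checkpoint and resume, using a simulated interruption schedule. It is not a description of Lyft's implementation and makes no cloud API calls. The function `run_with_checkpoints` and its parameters are hypothetical, and saving a checkpoint after every step is a simplifying assumption.

```python
def run_with_checkpoints(total_steps, interrupted_at):
    """Run a job of `total_steps` steps, resuming from the last checkpoint
    whenever a simulated interruption occurs.

    `interrupted_at` holds the step numbers at which the (hypothetical)
    instance is reclaimed before the step completes. Each interruption
    happens only once.
    """
    pending = set(interrupted_at)
    checkpoint = 0   # last step whose result was saved durably
    restarts = 0
    while checkpoint < total_steps:
        step = checkpoint + 1
        if step in pending:
            # Instance reclaimed: the in-flight step is lost, and a
            # replacement instance picks up from `checkpoint`.
            pending.discard(step)
            restarts += 1
            continue
        # ... one unit of simulation work would run here ...
        checkpoint = step   # persist progress before moving on
    return checkpoint, restarts


completed, restarts = run_with_checkpoints(total_steps=10, interrupted_at={3, 7})
print(f"Completed {completed} steps with {restarts} restarts")
```

Because progress is saved after each step, an interruption costs at most one step of repeated work, which is what makes lower-priced interruptible capacity practical for large simulation batches.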

Next, let us understand supply chain optimization and its processes!

Supply chain optimization

Supply chains are worldwide networks of manufacturers, distributors, suppliers, logistics providers, and e-commerce retailers that work together to get products from the factory to the customer’s door without delays or damage. By enabling information flow through these networks, you can automate decisions without any human intervention. The key attributes to consider are real-time inventory forecasts, end-to-end visibility, and the ability to track and trace the entire production process with unparalleled efficiency. Your teams will no longer have to handle the minute details associated with supply chain decisions. With automation and ML, you can resolve bottlenecks in product movement. For example, in the event of a pandemic or natural disaster, you can quickly divert goods to alternative shipping routes without affecting their on-time delivery.

Here are some examples of using ML to improve supply chain processes:

- Demand Forecasting: You can combine a time series with additional correlated data, such as holidays, weather, and demographic events, and use deep learning models such as DeepAR to get more accurate results. This will help you to meet variable demand and avoid over-provisioning.

- Inventory Management: You can automate inventory management using ML models to determine stock levels and reduce costs by preventing excess inventory. Moreover, you can use ML models for anomaly detection in your supply chain processes, which can help you optimize inventory management and address potential issues proactively, for example, by transferring stock to the right location using optimized routing ahead of time.

- Boost Efficiency with Automated Product Quality Inspection: By using computer vision models, you can identify product defects faster with improved consistency and accuracy at an early stage so that customers receive high-quality products in a timely fashion. This reduces the number of customer returns and insurance claims that are filed due to issues in product quality, thus saving costs and time.
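As a small illustration of the anomaly detection idea from the list above, the following sketch flags unusual values in a series of daily shipment counts. It deliberately uses a simple statistical rule (the modified z-score based on the median and the median absolute deviation) as a stand-in for a trained ML model. The data, the function name `find_anomalies`, and the threshold of 3.5 are assumptions chosen for the example.

```python
from statistics import median


def find_anomalies(values, threshold=3.5):
    """Return indices of values whose modified z-score exceeds `threshold`."""
    med = median(values)
    # Median absolute deviation: a spread measure that outliers barely affect.
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        return []   # no spread to measure deviations against
    return [
        i for i, v in enumerate(values)
        if 0.6745 * abs(v - med) / mad > threshold
    ]


# Hypothetical daily units shipped from one warehouse.
daily_units_shipped = [102, 98, 105, 99, 101, 97, 240, 100, 103, 96]
print(find_anomalies(daily_units_shipped))  # prints [6]
```

The spike of 240 units at index 6 is flagged while normal day-to-day variation is not. A production system would typically replace this rule with a model that also accounts for seasonality and correlated signals such as holidays or weather.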

All the components of supply chain optimization discussed above need to work together as part of the workflow and therefore require low latency and high throughput in order to meet the goal of delivering an optimal quality product to a customer’s doorstep in a timely fashion. Using cloud services to build the workflow provides you with greater elasticity and scalability at an optimized cost. Moreover, with native purpose-built services, you can eliminate the heavy lifting and reduce the time to market.

Summary

In this chapter, we started by understanding HPC fundamentals and its importance in processing massive amounts of data to gain meaningful insights. We then discussed the limitations of running HPC workloads on-premises, as different types of HPC applications have different hardware and software requirements, which are time-consuming and costly to procure in-house. Moreover, this hinders innovation, as developers and engineers are limited by the availability of resources instead of being guided by the application requirements. Then, we talked about how having HPC workloads on the cloud can help in overcoming these limitations and foster collaboration across global teams, break barriers to innovation, improve architecture design, and optimize performance and cost. Cloud infrastructure has made the specialized hardware needed for HPC applications more accessible, which has led to innovation in this space across a wide range of industries. Therefore, in the last section, we discussed some emerging workloads in HPC, such as in life sciences and healthcare, supply chain optimization, and AVs, along with real-world examples.

In the next chapter, we will dive into data management and transfer, which is the first step to running HPC workloads on the cloud.

Further reading

You can check out the following links for additional information regarding this chapter’s topics:

- https://www.statista.com/statistics/871513/worldwide-data-created/

- https://d1.awsstatic.com/whitepapers/Intro_to_HPC_on_AWS.pdf

- https://aws.amazon.com/solutions/case-studies/novartis/

- https://aws.amazon.com/solutions/case-studies/Lyft-level-5-spot/

- https://d1.awsstatic.com/psc-digital/2021/gc-700/supply-chain-ebook/Supply-Chain-eBook.pdf

- https://d1.awsstatic.com/HCLS%20Whitepaper%20.pdf

Download code from GitHub