Basics of Agile Systems Modeling

For the most part, this book is about systems modeling with SysML, but doing it in an agile way. Before we get into the detailed practices of systems modeling with that focus, however, we’re going to spend some time discussing important project-related agile practices that will serve as a backdrop for the modeling work.

Almost all of the agile literature focuses on the “three people in a garage developing a simple application” scope. The basic assumptions of such projects include:

- The end result is software that runs on a general-purpose computing platform (i.e., it is not embedded).

- Software is the only truly important work product. Others may be developed but they are of secondary concern. Working software is the measure of success.

- The software isn’t performance, safety, reliability, or security-critical.

- It isn’t necessary to meet regulatory standards.

- The development team is small and co-located.

- The development is time-and-effort, not fixed-price cost.

- The development is fundamentally code-based and not model- (or design)-based.

- Any developer can do any task (no specialized skills are necessary).

- Formalized requirements are not necessary.

Yes, of course, there is much made about extensions to agile practices to account for projects that don’t exactly meet these criteria. For example, some authors will talk about a “scrum of scrums” as a way to scale up to larger teams. That works to a point, but it fails when you get to much larger development teams and projects. I want to be clear – I’m not saying that agile methods aren’t applicable to projects that don’t fall within these basic guidelines – only that the literature doesn’t address how they will do so in a coherent, consistent fashion. The further away your project strays from these assumptions, the less you will find in the literature for agile ways to address your needs.

In this book, we’ll address a domain that is significantly different than the prototypical agile project. Our concerns will be projects that:

- Are systems-oriented, which may contain software but will typically also contain electronic and mechanical aspects. It’s about the system and not the software.

- Employ a Model-Based Systems Engineering (MBSE) approach using the SysML language.

- May range from small- to very large-scale.

- Must develop a number of different work products. These include, but are not limited to:

  - Requirements specification

  - Analysis of requirements, whether it is done with use cases or user stories

  - System architectural specification

  - System interface specification

  - Trace relations between the elements of the different work products

  - Safety, reliability, and security (and resulting requirements) analyses

  - Architectural design trade studies

- Have a handoff to downstream engineering that includes interdisciplinary subsystem teams containing team members who specialize in software, electronics, mechanical, and other design aspects.

But at its core, the fundamental difference between this book and other agile books is that the outcome of systems engineering isn’t software, it’s system specification. Downstream engineering will ultimately do low-level design and implementation of those specifications. Systems engineering provides the road map that enables different engineers with different skill sets, working in different engineering disciplines, to collaborate to create an integrated system, combining all their work into a cohesive whole.

The International Council on Systems Engineering (INCOSE) defines systems engineering as “a transdisciplinary and integrative approach to enable the successful realization, use, and retirement of engineered systems, using systems principles and concepts, and scientific, technological, and management methods” (https://www.incose.org/about-systems-engineering/system-and-se-definition/systems-engineering-definition). This book will not provide a big overarching process that ties all the workflows and work products together, although it is certainly based on one. That process – should you be interested in exploring it – is detailed in the author’s Agile Systems Engineering book; a detailed example is provided with the author’s Harmony aMBSE Deskbook, available at www.bruce-douglass.com. Of course, these recipes will work with any other reasonable MBSE process. It is important to remember that:

The outcome of software development is implementation;

The outcome of systems engineering is specification.

What’s agile all about?

Agile methods are – first and foremost – a means for improving the quality of your engineering work products. This is achieved through the application of a number of practices meant to continuously identify quality issues and immediately address them. Secondarily, agile is about improving engineering efficiency and reducing rework. Let’s talk about some basic concepts of agility.

Incremental development

This is a key aspect of agile development. Take a big problem and develop it as a series of small increments, each of which is verified to be correct (even if incomplete).

Continuous verification

The best way to have high-quality work products is to continuously develop and verify their quality. In other books, such as Real-Time Agility or the aforementioned Agile Systems Engineering book, I talk about how verification takes place in three timeframes:

- Nanocycle: 30 minutes to 1 day

- Microcycle: 1–4 weeks

- Macrocycle: Project length

Further, this verification is best done via the execution and testing of computable models. We will see in later chapters how this can be accomplished.

Continuous integration

Few non-trivial systems are created by a single person. Integration is the task of putting together work products from different engineers into a coherent whole and demonstrating that, as a unit, it achieves its desired purpose. This integration is often done daily, but some teams do this truly continuously, absorbing work as engineers complete it and instantly verifying that it works in tandem with the other bits.

Avoid big design up front

The concept of incremental development means that one thing that we don’t do is develop big work products over long periods of time and only then try to demonstrate their correctness. Instead, we develop and verify the design work we need right now, and defer design work that we won’t need until later. This simplifies the verification work and also means much less rework later in a project.

Working with stakeholders

A key focus of the Agilista is the needs of the stakeholders. The Agilista understands that there is an “air gap” between what the requirements say and what the stakeholder actually needs. By working with the stakeholder, and frequently offering them versions of the running system to try, the Agilista is more likely to actually meet the stakeholder’s needs. Additionally, user stories – a way to organize requirements into short stakeholder-system usage scenarios – are a way to work with the stakeholder to understand what they actually need.

Model-Based Systems Engineering (MBSE)

Systems engineering is an independent engineering discipline that focuses on system properties – including functionality, structure, performance, safety, reliability, and security. MBSE is a model-centric approach to performing systems engineering. Systems engineering is largely independent of the engineering disciplines used to implement these properties. Systems engineering is an interdisciplinary activity that focuses more on this integrated set of system properties than on the contributions of the individual engineering disciplines. It is an approach to developing complex and technologically diverse systems. It is normally thought of in terms of a V-style process (see Figure 1.1). The “left side of the V” emphasizes the specification of the system properties (requirements, architecture, interfaces, and overall dependability), the “lower part of the V” has to do with the discipline-specific engineering and design work, and the “right side of the V” has to do with the verification of the system against the specifications developed on the left side:

Figure 1.1: Standard V model life cycle

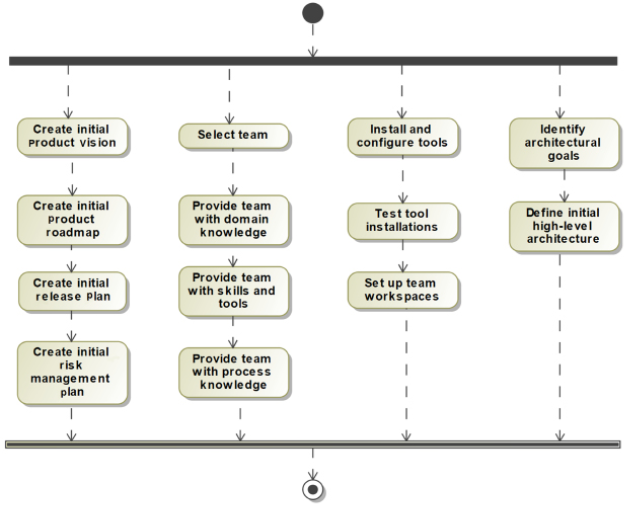

Of course, we’ll be doing things in a more agile way (Figure 1.2). Mostly, we’ll focus on incrementally creating the specification work products and handing them off to downstream engineering in an agile way:

Figure 1.2: Basic Agile systems engineering workflow

The basis of most of the work products developed in MBSE is, naturally enough, the model. For the most part, this refers to the set of engineering data relevant to the system captured in a SysML model. The main model is likely to be supplemented with models in other languages, such as performance, safety, and reliability (although you can use SysML for that too – we’ll discuss that in Chapter 2, System Specification—Functional, Safety and Security Analysis). The other primary work product will be textual requirements. While they are imprecise, vague, ambiguous, and hard to verify, they have the advantage of being easy to communicate. Our models will cluster these requirements into usage chunks – epics, use cases, and user stories – but we’ll still need requirements. These may be managed either as text or in text-based requirements management tools, such as IBM DOORS™, or they can be managed as model elements within a SysML specification model.

Our models will consist of formal representations of our engineering data as model elements and the relationships among them. These elements may appear in one or more views, including diagrams, tables, or matrices. The model is, then, a coherent collection of model elements that represent the important engineering data around our system of interest.

In this book, we assume you already know SysML. If you don’t, there are many books around for that. This book is a collection of short, highly focused workflows, each of which creates one or a small set of engineering work products that contain relevant model elements.

Now, let’s talk about some basic agile recipes and how they can be done in a model-centric environment.

Managing your backlog

The backlog is a prioritized set of work items that identify work to be done. There are generally two such backlogs. The project backlog is a prioritized list of all work to be done in the current project. A subset of these is selected for the current increment, forming the iteration backlog. Since engineers usually work on the tasks relevant to the current iteration, that is where they will go to get their tasks. Figure 1.3 shows the basic idea of backlogs:

Figure 1.3: Backlogs

The work to be done, nominally referred to as work items, is identified. Work items can be application work items (producing work that will be directly delivered) or technical work items (doing work that enables technical aspects of the product or project). Work items identify work to do such as:

- Analyzing, designing, or implementing an epic, use case, or user story, to ensure a solid understanding of the need and the adequacy of its requirements

- Creating or modifying a work product, such as a requirements specification or a safety analysis

- Arranging for an outcome, such as certification approval

- Addressing a risk, such as determining the adequacy of the bus bandwidth

- Removing an identified defect

- Supporting a target platform, such as an increment with hand-built mechanical parts, lab-constructed wire wrap boards, and partial software

The work items go through an acceptance process, and if approved, are put into the project backlog. Once there, they can be allocated to an iteration backlog.

Purpose

The purpose of managing your backlog is to provide clear direction for the engineering activities, to push the project forward in a coherent, collaborative way.

Inputs and preconditions

The inputs are the work items. The functionality-based work items originate with one or more stakeholders, but other work items might come from discovery, planning, or analysis.

Outputs and postconditions

The primary outputs are the managed project and iteration backlogs. Each backlog consists of a set of work items around a common purpose, or mission. The mission of an iteration is the set of work products and outcomes desired at the end of the iteration. An iteration mission is defined as shown in Figure 1.4:

Figure 1.4: Iteration mission

In a modeling tool, this information can be captured as metadata associated with tags.

The term “metadata” literally means “data about data”; in this context, we add metadata to elements using tags.

How to do it

There are two workflows to this recipe. The first, shown in Figure 1.5, adds a work item to the backlog. The second, shown in Figure 1.6, removes it:

Figure 1.5: Add work item

Figure 1.6: Resolve work item

Create work item

From the work to be done, a work item is created to put into the backlog. The work item should include the properties shown in Figure 1.7:

Figure 1.7: Work item

- Name.

- Description of the work to be done, the work product to be created, or the risk to be addressed.

- The acceptance criteria – how the adequacy of the work performed, the work product created, or the outcome produced will be determined.

- The work item classification identifies the kind of work item it is, as shown on the left side of Figure 1.3.

- The work item’s priority is an indication of how soon this work item should be addressed. This is discussed in the Prioritize work item step of this recipe.

- The estimated effort is how much effort it will take to perform the task. This can be stated in absolute terms (such as hours) or relative terms (such as user story points). This topic is addressed in the Estimating effort recipe later in this chapter.

- Links to important related information, such as standards that must be met, or sources of information that will be helpful for the performance of the work.
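As a minimal sketch, the properties listed above could be captured in a record such as the following Python data class. The class and field names (`WorkItem`, `WorkItemKind`, `approved`, `iteration`) are assumptions made for illustration and are not taken from SysML or from any particular tool; in a modeling tool, the same information would more likely be held as tags on a stereotype. The classification values shown are only a partial, hypothetical set.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Optional


class WorkItemKind(Enum):
    # Hypothetical, partial set of classifications; Figure 1.3 defines the real one.
    USE_CASE = "Use Case"
    USER_STORY = "User Story"
    TECHNICAL = "Technical Work Item"


@dataclass
class WorkItem:
    name: str
    description: str
    acceptance_criteria: str
    classification: WorkItemKind
    priority: Optional[float] = None   # filled in by the prioritization step
    effort: Optional[float] = None     # hours or story points, filled in by estimation
    related: List[str] = field(default_factory=list)  # links to standards, sources, etc.
    approved: bool = False             # set by the approval step
    iteration: Optional[int] = None    # set when allocated to an iteration backlog


# One of the user stories from the example later in this recipe
item = WorkItem(
    name="Adjust handlebar reach",
    description="As a rider, I want to replicate the handlebar reach "
                "on my fitted road bike.",
    acceptance_criteria="Standard riders can replicate their handlebar reach "
                        "from their fitted road bikes.",
    classification=WorkItemKind.USER_STORY,
)
```

Leaving priority, effort, and iteration empty at creation mirrors the workflow: those values are supplied by the later steps of the recipe.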

Approve work item

Before a work item can be added, it should be approved by the team or the project leader, whoever is granted that responsibility.

Prioritize work item

The priority of a work item determines in what iteration the work will be performed. Priority is determined by a number of factors, including the work item’s criticality (how important it is), its urgency (when it is needed), the availability of specialized resources needed to perform it, usefulness to the mission of the iteration, and risk. The general rule is that high-priority tasks are performed before lower-priority tasks. This topic is covered in the Work item prioritization recipe later in this chapter.

Estimate effort

An initial estimate of the cost of addressing the work item is important because as work items are allocated to iterations, the overall effort budget must be balanced. If the effort to address a work item is too high, it may not be possible to complete it in the iteration with all of its other work items. The agile practice of work item estimation is covered in the Estimating effort recipe later in this chapter.

Place work item in project backlog

Once approved and characterized, the work item can then be put into the project backlog. The backlog is priority-ordered so that higher-priority work items are “on top” and lower-priority work items are “below”.
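Keeping the backlog priority-ordered can be sketched as an ordered insertion. This is an illustration only: the names `BacklogEntry` and `place_in_backlog` are hypothetical, and the sketch assumes that a larger number means a higher priority, which depends on the prioritization scheme you adopt.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class BacklogEntry:
    name: str
    priority: float  # assumption: larger value = higher priority


def place_in_backlog(backlog: List[BacklogEntry], entry: BacklogEntry) -> None:
    """Insert entry so the backlog stays ordered, highest priority first.

    Entries of equal priority keep their arrival order.
    """
    index = 0
    while index < len(backlog) and backlog[index].priority >= entry.priority:
        index += 1
    backlog.insert(index, entry)


project_backlog: List[BacklogEntry] = []
for name, priority in [
    ("Adjust handlebar reach", 3.33),
    ("Adjust seat height", 13.33),
    ("Select crank length", 1.2),
    ("Adjust seat reach", 11.67),
]:
    place_in_backlog(project_backlog, BacklogEntry(name, priority))

ordered_names = [entry.name for entry in project_backlog]
```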

Allocate work item to iteration backlog

Initial planning includes the definition of a planned set of iterations, each of which has a mission, as defined above. Consistent with that mission, work items are then allocated to the planned iterations. Of course, this plan is volatile, and later work or information can cause replanning and a reallocation of work items to iterations. Iteration planning is the topic of the Iteration plan recipe later in this chapter.
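One simple way to balance the effort budget while allocating is a greedy pass over the priority-ordered backlog. The sketch below is a deliberate simplification and not the planning method of this book: it ignores the iteration mission, and the function name `allocate` and the capacity figures are hypothetical.

```python
from typing import Dict, List, Optional, Tuple


def allocate(
    backlog: List[Tuple[str, float]], capacities: List[float]
) -> Dict[str, Optional[int]]:
    """Assign each work item to the first iteration with enough effort left.

    backlog:    (name, effort) pairs, already in priority order.
    capacities: effort budget of each planned iteration (index 0 = iteration 1).
    Returns a mapping of name to iteration number, or None if the item fits nowhere.
    """
    remaining = list(capacities)
    plan: Dict[str, Optional[int]] = {}
    for name, effort in backlog:
        plan[name] = None
        for index, free in enumerate(remaining):
            if effort <= free:
                remaining[index] -= effort
                plan[name] = index + 1
                break
    return plan


backlog = [
    ("Adjust seat height", 3),
    ("Adjust seat reach", 3),
    ("Adjust handlebar height", 3),
    ("Adjust handlebar reach", 3),
    ("Select crank length", 1),
]
plan = allocate(backlog, capacities=[8, 8])  # two iterations of 8 points each
```

An item that maps to `None` is too large for any planned iteration, which is a signal to split the work item or to replan.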

In the second workflow of this recipe, the work is actually being done. Of relevance here is how the completion of the work affects the backlog (Figure 1.6).

Perform work item

This action is where the team member actually performs the work to address the work item, whether it is to analyze a use case, create a bit of architecture, or perform a safety analysis.

Review work performed

The output and/or outcome of the work item is evaluated with respect to its acceptance criteria and is accepted or rejected on that basis.

Reject work performed

If the output and/or outcome does not meet the acceptance criteria, the work is rejected and the work item remains on the backlog.

Remove resolved work item

If the output and/or outcome does meet the acceptance criteria, the work is accepted and the work item is removed from the project and iteration to-do backlog. This usually means that it is moved to a “to-done” backlog, so that there is a history of the work performed.
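The review, reject, and remove steps can be sketched as a single function. The names `review_work`, `to_do`, and `to_done` are hypothetical, and the acceptance decision is passed in as a Boolean because evaluating the criteria is the engineering work itself.

```python
from typing import List


def review_work(
    item: str, to_do: List[str], to_done: List[str], meets_criteria: bool
) -> str:
    """Apply the outcome of a review to the backlogs.

    Accepted work moves from the to-do backlog to the history of completed
    work; rejected work stays on the to-do backlog.
    """
    if meets_criteria:
        to_do.remove(item)
        to_done.append(item)
        return "accepted"
    return "rejected"


to_do = ["Adjust handlebar reach", "Adjust seat height"]
to_done: List[str] = []

first = review_work("Adjust handlebar reach", to_do, to_done, meets_criteria=True)
second = review_work("Adjust seat height", to_do, to_done, meets_criteria=False)
```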

Review backlog

It is important that as work progresses, the backlog is maintained. Often, valuable information is discovered that affects work item effort, priority, or value during project work. When this occurs, other affected work items must be reassessed and their location within the backlogs may be adjusted.

Reorganize backlog

Based on the review of the work items in the backlog, the set of work items, and their prioritized positions within those backlogs, may require adjustment.

Example

Consider a couple of use cases for the sample problem, the Pegasus Bike Trainer summarized in Appendix A (see Figure 1.8):

Figure 1.8: Example use case work items in backlog

You can also show at least high-level backlog allocation to an iteration on a use case diagram, as shown in Figure 1.9. You may, of course, manage backlogs in generic agile tools such as Rational Team Concert, Jira, or even with Post-It notes:

Figure 1.9: Use case diagram for iteration backlog

Let’s apply the workflow shown in Figure 1.5 to add the use cases and user stories from Figure 1.8 and Figure 1.9.

Create work item

In Figure 1.8 and Figure 1.9, we see a total of seven use cases and eight user stories. For our purpose, we will just represent the use case data in tabular form and will concentrate only on the two use cases and their contained user stories from Figure 1.9. The description of the user stories is provided in the canonical form of a user story (see the chapter introduction in Chapter 2, System Specification: Functional, Safety, and Security Analysis for more details).

Figure 1.10: Initial work item list

For the work item list, I created a stereotype work item that has the tag definitions shown as columns in the table and then applied it to the use cases and user stories.

Approve work item

Working with the team and the stakeholders, we get approval for the work items in Figure 1.11.

As we get approval, we mark the Approved column in the table.

Prioritize work item

Using the techniques from the Work item prioritization recipe later in this chapter, we add the priorities to the work items.

Estimate effort

Using the techniques from the Estimating effort recipe later in this chapter, we add the estimated effort to the work items.

Our final set of work items from this effort is shown in Table 1.1:

| Name | OK | Description | Acceptance | Classification | Priority | Effort | Iteration | Related |
|---|---|---|---|---|---|---|---|---|
| Setup bike fit | | Enable rider to adjust bike fit prior to ride | Standard riders* can replicate their road bike fit on the Pegasus. | Use Case | 4.38 | 13 | | *Standard riders include five riders of heights 60, 65, 70, 75, and 76 inches. |
| Adjust handlebar reach | | As a rider, I want to replicate the handlebar reach on my fitted road bike. | Standard riders* can replicate their handlebar reach from their fitted road bikes. | User Story | 3.33 | 3 | | |
| Adjust handlebar height | | As a rider, I want to replicate the handlebar height on my fitted road bike. | Standard riders* can replicate their handlebar height from their fitted road bikes. | User Story | 4.33 | 3 | | |
| Adjust seat reach | | As a rider, I want to replicate the seat reach on my fitted road bike. | Standard riders* can replicate their seat reach from their fitted road bikes. | User Story | 11.67 | 3 | | |
| Adjust seat height | | As a rider, I want to replicate the seat height on my fitted road bike. | Standard riders* can replicate their seat height from their fitted road bikes. | User Story | 13.33 | 3 | | |
| Select crank length | | As a rider, I want to replicate the crank arm length on my road bike. | Support crank lengths of 165, 167.5, 170, 172.5, and 175 mm. | User Story | 1.2 | 1 | | |
| Control resistance | | Control the resistance to pedaling in a steady and well-controlled fashion within the limits of normal terrain road riding. | Replicate pedal resistance to within 1% of measured pedal torque under the standard ride set*. | Use Case | 2 | 115 | | *Standard ride set includes rides of all combinations of rider weights (50, 75, and 100 kg), inclines (-10, 0, 5, 10, and 20%), and cadences (50, 70, 80, 90, and 110). |
| Provide basic resistance | | As a rider, I want basic resistance provided to the pedals so I can get a workout with an on-road feel in Resistance Mode. | Control resistance by setting the pedal resistance to 0–2000W in 50-watt increments for the standard ride set.* | User Story | 1.42 | 55 | | |
| Set resistance under user control | | As a rider, I want to set the resistance level provided to the pedals to increase or decrease the effort for a given gearing, cadence, and incline. | Control resistance via user input by manually setting incline, gearing, and cadence for the standard ride set.* | User Story | 1.00 | 21 | | |
| Set resistance under external control | | As a rider, I want the external training app to set the resistance to follow the app’s workout protocol to get the desired workout. | Control resistance via app control, manually setting incline, gearing, and allow the user to supply cadence for the standard ride set.* | User Story | 0.30 | 39 | | |

Table 1.1: Final work item list

Place WI in project backlog

As we complete the effort, we put all the approved work items into the project backlog, along with other previously identified use cases, user stories, technical work items, and spikes. The backlog can be managed within the modeling tool, but usually external tools – such as Jira or Team Concert – are used.

Allocate WI to iteration backlog

Using the technique from the Iteration plan recipe later in this chapter, we put relevant work items from the project backlog into the backlog for the upcoming iteration. In Table 1.1, this would be done by filling in the Iteration column with the number of the iteration in which the work item is performed.

With regard to the second workflow from Figure 1.6, we can illustrate how the workflow might unfold as we perform the work in the current iteration.

Perform work item

As we work in the iterations, we detail the requirements, and create and implement the technical design. For example, we might perform the mechanical design of the handlebar reach adjust or the delivery of basic resistance to the pedals with an electric motor.

Review work performed

As the work on the use case and user stories completes, we apply the acceptance criteria via verification testing and validation. In the example we are considering, for the set of riders of heights 60, 65, 70, 75, and 76 inches, we would measure the handlebar height from their fitted road bikes and ensure that all these conditions can be replicated on the bike. For the Provide Basic Resistance user story, we would verify that we can create a pedal resistance of [0, 50, 100, 150, … 2000] watts at pedal cadences of 50, 70, 80, 90, and 110 RPM ± 1%.
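To get a feel for the size of the verification task for the Provide Basic Resistance user story, the test points can be enumerated. This is a sketch under the assumption that every resistance level is checked at every listed cadence; the variable names are illustrative only.

```python
from itertools import product

# Resistance settings from 0 to 2000 W in 50-watt increments
power_levels_w = list(range(0, 2001, 50))

# Pedal cadences named in the acceptance criteria, in RPM
cadences_rpm = [50, 70, 80, 90, 110]

# Every (resistance, cadence) combination to be verified
test_points = list(product(power_levels_w, cadences_rpm))

print(len(power_levels_w), "resistance levels")
print(len(test_points), "test points")
```

With 41 resistance levels and 5 cadences, this yields 205 test points, which is a strong argument for automating the verification rather than performing it by hand.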

Measuring your success

One of the core concepts of effective agile methods is to continuously improve how you perform your work. This can be done to improve quality or to get something done more quickly. In order to improve how you work, you need to know how well you’re doing now. That means applying metrics to identify opportunities for improvement and then changing what you do or how you do it. Metrics are a general measurement of success in either achieving business goals or compliance with a standard or process. A related concept – a Key Performance Indicator (KPI) – is a quantifiable measurement of accomplishment against a crucial goal or objective. The best KPIs measure achievement of goals rather than compliance with a plan. The problem with metrics is that they measure something that you believe correlates to your objective, but not the objective itself. Some examples from software development:

| Objective | Metric | Issues |
|---|---|---|
| Software size | Lines of code | Lines of code for simple, linear software aren’t really the same as lines of code for complex algorithms |
| Productivity | Shipping velocity | Ignores the complexity of the shipped features, penalizing systems that address complex problems |
| Accurate planning | Compliance with schedule | This metric rewards people who comply with even a bad plan |
| Efficiency | Cost per defect | Penalizes quality and makes buggy software look cheap |
| Quality | Defect density | Treats all defects the same whether they are using the wrong-sized font or something that brings aircraft down |

Table 1.2: Examples from software development

See The Mess of Metrics by Capers Jones (2017) at http://namcook.com/articles/The%20Mess%20of%20Software%20Metrics%202017.pdf
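The cost per defect row can be made concrete with a small worked calculation. The figures below are invented purely for illustration, and the split of cost into a fixed part (preparing and running the tests) and a per-defect part (fixing what is found) is an assumption of this sketch, as is the function name `cost_per_defect`.

```python
def cost_per_defect(fixed_cost: float, cost_per_fix: float, defects_found: int):
    """Return (total cost, cost per defect) for one release.

    fixed_cost:    cost incurred regardless of how many defects are found
    cost_per_fix:  cost of repairing a single defect
    """
    total_cost = fixed_cost + cost_per_fix * defects_found
    return total_cost, total_cost / defects_found


# Hypothetical releases: same test effort, very different quality
buggy_total, buggy_cpd = cost_per_defect(10_000, 200, defects_found=50)
clean_total, clean_cpd = cost_per_defect(10_000, 200, defects_found=5)

print(f"buggy release: total {buggy_total}, per defect {buggy_cpd}")
print(f"clean release: total {clean_total}, per defect {clean_cpd}")
```

Under these assumed numbers, the buggy release costs 20,000 in total but only 400 per defect, while the cleaner release costs 11,000 in total but 2,200 per defect. The metric makes the cheaper, higher-quality release look worse.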

Consider a common metric for high-quality design, cyclomatic complexity. It has been observed that highly complex designs contain more defects than designs of low complexity. Cyclomatic complexity is a software metric that computes complexity by counting the number of linearly independent paths through some unit of software. Some companies have gone so far as to require all software to not exceed some arbitrary cyclomatic complexity value to be considered acceptable. This approach disregards the fact that some problems are harder than others and any design addressing such problems must be more complex. A better application of cyclomatic complexity is to use the metric as a guide. It can identify those portions of a design that are more complex so that they can be subjected to additional testing. Ultimately, the problem with this metric is that complexity correlates only loosely to quality. A better metric for the goal of improving quality might be the ability to successfully pass tests that traverse all possible paths of the software.
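Cyclomatic complexity is commonly computed from a control-flow graph with McCabe's formula M = E − N + 2P, where E is the number of edges, N the number of nodes, and P the number of connected components. A minimal sketch follows; the graph is that of a hypothetical function containing a loop with an if/else inside it, and the node names are made up.

```python
from typing import Iterable, Tuple


def cyclomatic_complexity(
    edges: Iterable[Tuple[str, str]], components: int = 1
) -> int:
    """McCabe's M = E - N + 2P for a control-flow graph given as an edge list."""
    edge_list = list(edges)
    nodes = {node for edge in edge_list for node in edge}
    return len(edge_list) - len(nodes) + 2 * components


# Hypothetical control-flow graph: a loop whose body is an if/else
cfg = [
    ("entry", "loop_test"),
    ("loop_test", "if_test"),  # loop body entered
    ("loop_test", "exit"),     # loop finished
    ("if_test", "then"),
    ("if_test", "else"),
    ("then", "loop_test"),
    ("else", "loop_test"),
]

complexity = cyclomatic_complexity(cfg)
```

Here E = 7 and N = 6, so M = 3: one for the straight-line path plus one for each of the two decision points.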

Good metrics are easy to measure, and, ideally, easy to automate. Creating test cases for all possible paths can be tedious, but it is possible to automate with appropriate tools. Metrics that require additional work by engineering staff will be resented and achieving compliance with the use of the metric may be difficult.

While coming up with good metrics may be difficult, the fact remains that you can’t improve what you don’t measure. Without measurements, you’re guessing where problems are and your solutions are likely to be ineffective or solve the wrong problem. By measuring how you’re doing against your goals, you can improve your team’s effectiveness and your product quality. However, it is important that metrics are used as indicators rather than as performance standards because, ultimately, the world is more complex than a single, easily computed measure.

Metrics should be used for guidance, not as goals for strict compliance.

Purpose

The purpose of metrics is to measure, rather than guess, how your work is proceeding with respect to important qualities so that you can improve.

Inputs and preconditions

The only preconditions for this workflow are the desire, ability, and authority to improve.

Outputs and postconditions

The primary output of this recipe is objective measurements of how well your work is proceeding or the quality of one or more work products. The primary outcome is the identification of some aspect of your project work to improve.

How to do it

Metrics can be applied to any work activity for which there is an important output or outcome (which should really be all work activities). The workflow is fairly straightforward, as shown in Figure 1.12:

Figure 1.12: Measuring success

Identify work or work product property important to success

One way to identify a property of interest is to look where your projects have problems or where the output work products fail. For engineering projects, work efficiency being too low is a common problem. For work products, the most common problem is the presence of defects.

Define how you will measure the property (success metric)

Just as important as identifying what you want to measure is coming up with a quantifiable measurement that is simultaneously easy to apply, easy to measure, easy to automate, and accurately captures the property of interest. It’s one thing to say “the system should be fast” but quite another to define a way to measure the speed in a fashion that can be compared to other work items and iterations.

Frequently measure the success metric

It is common to gather metrics for a review at the end of a project. This review is commonly called a project post-mortem. I prefer to do frequent retrospectives, at least one per iteration, which I refer to as a celebration of ongoing success. To be applied in a timely way, you must measure frequently. This means that the measurements must require low effort and be quick to compute. In the best case, the environment or tool can automate the gathering and analysis of the information without any ongoing effort by the engineering staff. For example, time spent on work items can be captured automatically by tools that check out and check in work products.

Update the success metric history

For long-term organizational success, recorded performance history is crucial. I’ve seen far too many organizations miss their project schedules by 100% or more, only to do the very same thing on the next project, and for exactly the same reasons. A metric history allows the identification of longer-term trends and improvements. That enables the reinforcement of positive aspects and the discarding of approaches that fail.

Determine how to improve performance against the success metric

If the metric result is unacceptable, then you must perform a root cause analysis to uncover what can be done to improve it. If you discover that you have too many defects in your requirements, for example, you may consider changing how requirements are identified, captured, represented, analyzed, or assessed.

Make timely adjustments to how the activity is performed

Just as important as measuring how you’re doing against your project and organizational goals is acting on that information. This may be changing a project schedule to be more accurate, performing more testing, creating some process automation, or even getting training on some technology.

Assess the effectiveness of the success metric application

Every so often, it is important to look at whether applying a metric is generating project value. A common place to do this is the project retrospective held at the end of each iteration. Metrics that are adding insufficient value may be dropped or replaced with other metrics that will add more value.

Some commonly applied metrics are shown in Figure 1.13:

Figure 1.13: Some common success metrics

It all comes back to this: you can’t improve what you don’t measure. First, you must understand how well you are achieving your goals now. Then you must decide how you can improve and make the adjustment. Repeat. It’s a simple idea.

Velocity is often visualized as a velocity chart or a burn down chart. The former shows the planned velocity in work items per unit time, such as use cases or user stories per iteration. The latter shows the rate of progress of handling the work items over time. It is common to show planned values alongside actual values. A typical velocity chart is shown in Figure 1.14.

Velocity is the amount of work done per time unit, such as the number of user stories implemented per iteration. A burn down chart is a graph showing the decreasing number of work items during a project.

Figure 1.14: Velocity chart
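The two definitions above can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed tool: the function name `burn_down` and the sample numbers (20 work items, four iterations) are hypothetical and chosen only to show how the two views relate.

```python
def burn_down(total_work_items, completed_per_iteration):
    """Return the remaining work items after each iteration.

    The first entry is the starting total; each later entry subtracts
    the work completed in that iteration (never dropping below zero).
    """
    remaining = [total_work_items]
    for done in completed_per_iteration:
        remaining.append(max(remaining[-1] - done, 0))
    return remaining


# Hypothetical data: work items completed in each of four iterations.
completed = [3, 2, 4, 3]

# Velocity is the work done per iteration; here we take the average.
average_velocity = sum(completed) / len(completed)

# The burn down series is what would be plotted against iteration number.
remaining = burn_down(20, completed)

print(average_velocity)  # 3.0
print(remaining)         # [20, 17, 15, 11, 8]
```

Plotting `completed` against the iteration number gives a velocity chart, while plotting `remaining` gives a burn down chart. Adding the planned values as a second series makes the variance visible.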

Example

Let’s look at an example of the use of metrics in our project:

Identify work or work product property important to success

Let’s consider a common metric used in agile software development and apply it to systems engineering: velocity. Velocity underpins all schedules because it represents how much functionality is delivered per unit time. Velocity is generally measured as the number of completed user stories delivered per iteration. In our scope, we are not delivering implemented functionality, but we are incrementally delivering a hand-off to downstream engineering. Let’s call this SE Velocity, which is “specified use cases per iteration” and includes the requirements and all related SE work products.

This might not provide the granularity we desire, so let’s also define a second metric, SE Fine-Grained Velocity, which is the number of story points specified in the iteration.

Define how you will measure the property (success metric)

We will measure the number of use cases delivered, but we need a “definition of done” to ensure consistency of measurement. SE Velocity will include:

- Use case with:

  - Full description identifying purpose, pre-conditions, post-conditions, and invariants

  - Normative behavioral specification in which all requirements traced to and from the use case are represented in the behavior. This is a “minimal spanning set” of scenarios in which all paths in the normative behavior are represented in at least one scenario

  - Trace links to all related functional requirements and quality of service (performance, safety, reliability, security, etc.) requirements

- Architecture into which the implementation of the use cases and user stories will be placed

- System interfaces with a physical data schema to support the necessary interactions of the use cases and user stories

- Logical test cases to verify the use cases and user stories

- Logical validation cases to ensure the implementation of the use cases and user stories meets the stakeholder needs

SE Velocity will be simply the number of such use cases delivered per iteration. SE Fine-Grained Velocity will be the estimated effort of those use cases, measured in story points per iteration (see the Estimating effort recipe).

Frequently measure the success metric

We will measure this metric each iteration. If our project has 35 use cases, our project heartbeat is 4 weeks, and the project is expected to take one year (treated here as 12 iterations), then our SE Velocity should be 35/12 or about 3. If the average use case is 37 story points, then our SE Fine-Grained Velocity should be about 108 story points per iteration.
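The arithmetic behind these planned values can be checked with a short sketch. The function name `planned_velocities` is hypothetical, and the iteration count of 12 is taken from the 35/12 division used in the text.

```python
def planned_velocities(use_cases, iterations, avg_points_per_use_case):
    """Return (SE Velocity, SE Fine-Grained Velocity) for a plan.

    SE Velocity is use cases per iteration; SE Fine-Grained Velocity is
    story points per iteration.
    """
    se_velocity = use_cases / iterations
    fine_grained = use_cases * avg_points_per_use_case / iterations
    return se_velocity, fine_grained


# Values from the example: 35 use cases, 12 iterations, 37 points each.
se_velocity, fine_grained = planned_velocities(35, 12, 37)

print(round(se_velocity, 2))  # 2.92, i.e. about 3 use cases per iteration
print(round(fine_grained))    # 108 story points per iteration
```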

Update the success metric history

As we run the project, we will get measured SE Velocity and SE Fine-Grained Velocity. We can plot those values over time to get velocity charts:

Figure 1.15: SE velocity charts

Determine how to improve performance against the success metric

Our plan calls for 3 use cases and 108 story points per iteration; we can see that we are underperforming. This could be either because 1) we overestimated the planned velocity, or 2) we need to improve our work efficiency in some way. We can, therefore, simultaneously attack the problem on both fronts.

To start, we should replan based on our measured velocity, which is averaging 2.25 use cases and 81 story points per iteration, as compared to the planned 3 use cases and 108 story points. This will result in a longer but hopefully more realistic project plan. If the measured velocity holds, 35 use cases at 2.25 per iteration need about 16 iterations rather than the planned 12, so the planned project extends by roughly four iterations.

In addition, we can analyze why the specification effort is taking too long and perhaps implement changes in process or tooling to improve.

Make timely adjustments to how the activity is performed

As we discover variance between our plan and our reality, we must adjust either the plan or how we work, or both. This should happen at least every iteration, as the metrics are gathered and analyzed. The iteration retrospective that takes place at the end of the iteration performs this service.

Assess the effectiveness of the success metric application

Lastly, are the metrics helping the project? It might be reasonable to conclude that the fine-grained metric provides more value than the more general SE Velocity metric, so we abandon the latter.

Some considerations

I have seen metrics fail in a number of organizations trying to improve. Broadly speaking, the reasons for failure are one of the following:

Measuring the wrong thing

Many qualities of interest are hard to identify precisely (think of “code smell”) or difficult to measure directly. Metrics are usually project qualities that are easy to measure, but they capture only imprecisely what you actually want to know. The classic measure of progress – lines of code per day – turns out to be a horrible measure because it doesn’t measure the quality of the code, so it cannot take into account the rework required when fast code production results in low code quality. Nor is refactoring code “negative work” just because it results in fewer lines of code. A better measure would be velocity, which is a measure of tested and verified features released per unit of time.

Another often abused measure is “hours worked.” I have seen companies require detailed reporting on hours spent per project only to also levy the requirement that any hours worked over 40 hours per week should not be reported. This constrained metric does not actually measure the effort expended on project tasks.

Ignoring the metrics

I have seen many companies spend a lot of time gathering metric data (and yes, it does require some effort and does cost some time, even when mostly automated), only to make the very same mistake time after time. This is because while these companies capture the data, they never actually use the data to improve.

No authority to initiate change

Gathering and analyzing metrics is often seen as less valuable than “real work” and so personnel tasked with these activities have little or no authority.

Lack of willingness to follow through

I have seen companies pay for detailed, quantified project performance data only to ignore it because there was little willingness to follow through with needed changes. This lack of willingness can come from management being unwilling to pay for organizational improvement, or from technical staff being afraid of trying something different.

Metrics should always be attempting to measure an objective rather than a means. Rather than “lines of code per day,” it is better to measure “delivered functionality per day.”

Managing risk

In my experience, most unsuccessful projects fail because they don’t properly deal with project risk. Project risk refers to the chance that a team will fail to meet some or all of a project’s objectives. Risk is defined to be the product of an event’s likelihood of occurrence and its severity. Risk is always about the unknown. There are many different kinds of project risk. For example:

- Resource risk

- Technical risk

- Schedule risk

- Business risk

Risks are always about the unknown and risk mitigation activities – known as spikes in agile literature – are work undertaken to uncover information to reduce risk. For example, a technical risk might be that the selected bus architecture might not have sufficient bandwidth to meet the system performance requirements. A spike to address the risk might measure the bus under stress similar to what is expected for the product. Another technical risk might be the introduction of new development technology, such as SysML, to a project. A resulting spike might be to bring in an outside trainer and mentor for the project.

The most important thing you want to avoid is ignoring risk. It is common, for example, for projects to have “aggressive schedules” (that is to say, “unachievable”) and for project leaders and members to ignore obvious signs of impending doom. It is far better to address the schedule risk by identifying and addressing likely causes of schedule slippage and replanning the schedule.

Purpose

The purpose of the Managing risk recipe is to improve the likelihood of project success.

Inputs and preconditions

Project risk management begins early and should be an ongoing activity throughout the project. Initially, a project vision, preliminary plan, or roadmap serves as the starting point for risk management.

Outputs and postconditions

Intermediate outputs include a risk management plan (sometimes called a risk list) and the work effort resulting from it, allocated into the release and iteration plans. The risk management plan provides not only the name of the risk but also important information about it. Longer-term results include a (more) successful project outcome than one that did not include risk management.

How to do it

Figure 1.16 shows how risks are identified, put into the risk management plan, and result in spikes. Figure 1.17 shows how, as spikes are performed in the iterations, the risk management plan is updated:

Figure 1.16: Managing risk

Figure 1.17: Reducing risk

Identify a potential source of risk

This is how it starts, but risk identification shouldn’t just be done at the outset of the project. At least once per iteration, typically during the project retrospective activity, the team should look for new risks that have arisen as the project has progressed. Thus, the workflow in Figure 1.16 isn’t performed just once but many times during the execution of the project. In addition, it sometimes happens that risks disappear if their underlying causes are removed, so you might end up removing risk items, or at least marking them as avoided, during these risk reassessments.

Characterize risk

The name of the risk isn’t enough. We certainly need a description of how the risk might manifest and what it means. We also need to know how likely the negative outcome is to manifest (likelihood) and how bad it is should that occur (severity). Some outcomes have a minor impact, while others may be show-stoppers.

Add to risk list in priority order

The risk management plan maintains the list in order sorted by risk magnitude. If you have quantified both the risk’s likelihood and severity, then risk magnitude is the product of those two values. The idea is that the higher-priority risks should have more attention and be addressed earlier than the lower-priority risks.
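As a minimal sketch of this step, the following Python keeps a risk list ordered by magnitude. The `Risk` class, the `add_in_priority_order` helper, and the three sample risks are hypothetical illustrations; likelihood and severity are assumed to be expressed as fractions between 0 and 1.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    headline: str
    likelihood: float  # chance of the negative outcome, 0.0 to 1.0
    severity: float    # how bad the outcome is, 0.0 to 1.0

    @property
    def magnitude(self) -> float:
        # Risk magnitude is the product of likelihood and severity.
        return self.likelihood * self.severity


def add_in_priority_order(risk_list, risk):
    """Add a risk and keep the list sorted by descending magnitude."""
    risk_list.append(risk)
    risk_list.sort(key=lambda r: r.magnitude, reverse=True)
    return risk_list


# Hypothetical risks, added in arbitrary order.
risks = []
add_in_priority_order(risks, Risk("Training gap", 0.3, 0.4))
add_in_priority_order(risks, Risk("Vendor delay", 0.9, 0.6))
add_in_priority_order(risks, Risk("Bus bandwidth", 0.5, 0.8))

for risk in risks:
    print(f"{risk.headline}: {risk.magnitude:.2f}")
```

The highest-magnitude risk ends up at the head of the list, which is where attention should go first.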

Identify a spike to address risk

A spike is work that is done to reduce either the likelihood or the severity of the risk outcome, generally the former. We can address knowledge gaps with training; we can address bus performance problems with a faster bus; we can solve schedule risks with featurecide. Featurecide is the removal of features of low or questionable stakeholder value, or work items that you just don’t have the bandwidth to address. Whatever the approach, a spike seeks to reduce risk, so it is important that the spike uncovers or addresses the risk’s underlying cause.

Create a work item for a spike

Work items come in many flavors. Usually, we think of use cases or user stories (functionality) as work items. But work items can refer to any work activity, as we discussed in the earlier recipe for backlog management. Specifically, in this case, spikes are important work items to be put into the product backlog.

Allocate a spike work item to an iteration plan

As previously discussed, work items must be allocated to iterations to result in a release plan.

Perform a spike

This action means performing the identified experiment or activity. If the activity is to get training, then complete the training session. If it is to perform a lab-based throughput test, then do that.

Assess the outcome

Following the spike, it is important to assess the outcome. Was the risk reduced? Is a change in the plan, approach, or technology warranted?

Update the risk management plan

The risk management plan must be updated with the outcome of the spike.

Replan

If appropriate, adjust the plan in accordance with the outcome of the spike. For example, if a proposed technology cannot meet the project needs, then a new technology or approach must be selected and the plan must be updated to reflect that.

Example

Here is an example risk management plan, captured as a spreadsheet of information. Rather than show the increasing level of detail in the table step by step, we’ll just show the end state (Table 1.3) to illustrate a typical outcome from the workflow shown in Figure 1.16.

It can be sorted by the State and Risk Magnitude columns to simplify its use:

Risk Management Plan (Risk List)

| Risk ID | Headline | Description | Type | Impact | Probability | Risk magnitude | State | Precision | Raised on | Iteration # | Impacted stakeholder | Owner | Mitigation strategy (spike) |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| 1 | Robustness of the main motor | The system must be able to maintain 2,000 W for up to 5 minutes and sustain 1,000 W for 4 hours, with an MTBF of 20,000 hours. The current motor is unsuitable. | Technical | 80% | 90% | 72% | Open | High | 1/5/2020 | 1 | Maintainer, user | Sam | Meet with motor vendors to see if 1) they have an existing motor that meets our needs, or 2) they can design a motor within budget to meet the need. |
| 2 | Agile MBSE impact | The team is using both agile and MBSE for the first time. The concern is that this may lead to poor technical choices. | Technical | 80% | 80% | 64% | Open | Medium | 1/4/2020 | 0 | User, buyer, product owner | Jill | Bring in a consultant from aPriori Systems for training and mentoring |
| 3 | Robustness of USB connection | Users will be inserting and removing the USB while under movement stress, so it is likely to break. | Technical | 40% | 80% | 32% | Open | Medium | 2/16/2020 | 3 | User, manufacturing | Joe | Standard USB connectors are too weak. We need to mock up a more robust physical design. |
| 4 | Aggressive schedule | Customer schedule is optimistic. We need to address this either by changing the expectations or figuring out how to satisfy the schedule. | Schedule | 40% | 100% | 40% | Mitigated | Low | 12/5/2019 | 0 | Buyer | Susan | Iteration 0, work with the customer to see if the project can be delivered in phases, or if ambitious features can be cut. |
| 5 | Motor response lag time | To simulate short high-intensity efforts, the change in resistance must be fast enough to simulate the riding experience. | Technical | 20% | 20% | 4% | Open | High | 12/19/2019 | 6 | User | Sam | Do a response time study with professional riders to evaluate the acceptability of the current solution. |
| 6 | Team availability | Key team members have yet to come off the Aerobike project and are delayed by an estimated 6 months. | Resource | 60% | 75% | 45% | Obsolete | Low | 3/1/2020 | 0 | Product owner, buyer | | See if the existing project can be sped up. If not, work on a contingency plan to either hire more or delay the project start. |

Table 1.3: Example risk list

For an example of the risk mitigation workflow in Figure 1.17, let’s consider the first two risks in Table 1.3.

Perform a spike

For Risk 2, “Agile MBSE impact,” the identified spike is “Bring in a consultant from A Priori Systems for training and mentoring.” We hire a consultant from A Priori Systems. They then train the team on agile MBSE, give them each a copy of their book Agile Systems Engineering, and mentor the team through the first three iterations. This spike is initiated in Iteration 0, and the mentoring lasts through Iteration 3.

For Risk 1, “Robustness of the main motor,” the identified spike is “Meet with motor vendors to see if 1) they have an existing motor that meets our needs, or 2) they can design a motor within our budget to meet the need.” Working with our team, the application engineer from the vendor assesses the horsepower, torque, and reliability needs and then finds a version of the motor that is available within our cost envelope. The problem is resolved.

Assess outcome

The outcome of the spike for Risk 2 is assessed in four steps. First, the engineers attending the agile MBSE workshop provide an evaluation of the effectiveness of the workshop. While not giving universally high marks, the team is very satisfied overall with their understanding of the approach and how to perform the work. Then, the iteration retrospectives for the next three iterations look at expected versus actual outcomes and find that the team is performing well. The assessment of the risk is that it has been successfully mitigated.

For Risk 1, the assessment of the outcome is done by the lead electronics engineer. He obtains five instances of the suggested motor variant and stress-tests them in the lab. He is satisfied that the risk has been successfully mitigated and that the engineering can proceed.

Update the risk management plan

The risk management plan is updated to reflect the outcomes as they occur. In Table 1.4, we can see that the State field of the two risks has been updated to Mitigated:

Risk Management Plan (Risk List)

| Risk ID | Headline | Description | Type | Impact | Probability | Risk magnitude | State | Precision | Raised on | Iteration # | Impacted stakeholder | Owner | Mitigation strategy (spike) |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| 1 | Robustness of the main motor | The system must be able to maintain 2,000 W for up to 5 minutes and sustain 1,000 W for 4 hours, with an MTBF of 20,000 hours. The current motor is unsuitable. | Technical | 80% | 90% | 72% | Mitigated and updated motor selection to the appropriate variant | High | 1/5/2020 | 1 | Maintainer, user | Sam | Meet with the motor vendors to see if 1) they have an existing motor that meets our needs, or 2) they can design a motor within our budget to meet the need. |
| 2 | Agile MBSE impact | The team is using both agile and MBSE for the first time. The concern is that this may lead to poor technical choices. | Technical | 80% | 80% | 64% | Mitigated, updated modeling tool for Rhapsody, and MBSE workflows updated. | Medium | 1/4/2020 | 0 | User, buyer, product owner | Jill | Bring in a consultant from A Priori Systems for training and mentoring. |

Table 1.4: Updated risk plan (Partial)
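The update step can be sketched in Python using the IDs, impact, probability, and state values from Table 1.3. The helper names (`record_spike_outcome`, `sorted_for_review`) and the `STATE_ORDER` ranking that puts open risks first are assumptions made for illustration; the text only says the list can be sorted by the State and Risk Magnitude columns.

```python
# Risk data taken from Table 1.3 (impact and probability as fractions).
risks = [
    {"id": 1, "impact": 0.80, "probability": 0.90, "state": "Open"},
    {"id": 2, "impact": 0.80, "probability": 0.80, "state": "Open"},
    {"id": 3, "impact": 0.40, "probability": 0.80, "state": "Open"},
    {"id": 4, "impact": 0.40, "probability": 1.00, "state": "Mitigated"},
    {"id": 5, "impact": 0.20, "probability": 0.20, "state": "Open"},
    {"id": 6, "impact": 0.60, "probability": 0.75, "state": "Obsolete"},
]

# Assumed ordering: open risks first, then mitigated, then obsolete.
STATE_ORDER = {"Open": 0, "Mitigated": 1, "Obsolete": 2}


def magnitude(risk):
    """Risk magnitude is impact multiplied by probability."""
    return risk["impact"] * risk["probability"]


def record_spike_outcome(risk_list, risk_id, new_state):
    """Update the State field of one risk after its spike is assessed."""
    for risk in risk_list:
        if risk["id"] == risk_id:
            risk["state"] = new_state
            return risk
    raise KeyError(f"No risk with ID {risk_id}")


def sorted_for_review(risk_list):
    """Sort by state, then by descending magnitude within each state."""
    return sorted(
        risk_list,
        key=lambda r: (STATE_ORDER[r["state"]], -magnitude(r)),
    )


# The spikes for Risks 1 and 2 succeeded, so both become Mitigated.
record_spike_outcome(risks, 1, "Mitigated")
record_spike_outcome(risks, 2, "Mitigated")

review_order = [risk["id"] for risk in sorted_for_review(risks)]
print(review_order)  # [3, 5, 1, 2, 4, 6]
```

After the update, the remaining open risks (3 and 5) rise to the top of the review order, which is where the team's attention should go next.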

Replan

In this example, the risks are successfully mitigated and the changes are noted in the State field. For Risk 1, a more appropriate motor is selected with help from the motor vendor. For Risk 2, the tooling was updated to better reflect the modeling needs of the project, and minor tweaks were made to the detailed MBSE workflows.

Product roadmap

A product roadmap is a plan of action for how a product will be introduced and evolved over time. It is developed by the product owner, an agile role responsible for managing the product backlog and feature set. The product roadmap is a high-level strategic view of the series of delivered systems mapped to capabilities and customer needs. The product roadmap takes into account the market trajectories, value propositions, and engineering constraints. It is ultimately expressed as a set of initiatives and capabilities delivered over time.

Purpose

The purpose of the product roadmap is to plan and provide visibility into the capabilities released to customers over time. The roadmap is initially developed in Iteration 0, but as in all things agile, the roadmap is updated over time. A typical roadmap has a 12–24 month planning horizon, but for long-lived systems, the horizon may be much longer.

Inputs and preconditions

A product vision has been established which includes the business aspects (such as market and broad customer needs) and technical aspects (the broad technical approach and its feasibility).

Outputs and postconditions

The primary work product is the product roadmap, a time-based view of capability releases of the system.

How to do it

The product roadmap is organized around larger-scale activities (epics) for the most part, but can contain more detail if desired. An epic is a capability whose delivery spans multiple iterations. Business epics provide visible value to the stakeholders, while technical epics (also known as enabler epics) provide behind-the-scenes infrastructure improvements such as architecture implementation or the reduction of technical debt.

In an MBSE approach, epics can be modeled as stereotypes of use cases. Epics are decomposed into use cases, which are, in turn, decomposed into user stories (stereotyped use cases) and scenarios (refining interactions). While epics are implemented across multiple iterations, a use case is implemented in a single iteration. A user story or scenario takes only a portion of an iteration to complete. User stories and scenarios are comparable in scope and intent.

This taxonomy is shown in Figure 1.18, along with where they typically appear in the planning:

Figure 1.18: Epics, use cases, and user stories
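Outside of a SysML tool, the same containment hierarchy can be sketched as plain data structures. This is only an illustration of the taxonomy, not how a modeling tool represents stereotypes; the class names and the sample epic, use cases, and stories are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class UserStory:
    name: str  # takes only a portion of an iteration to complete


@dataclass
class UseCase:
    name: str  # implemented within a single iteration
    user_stories: List[UserStory] = field(default_factory=list)


@dataclass
class Epic:
    name: str  # spans multiple iterations
    kind: str  # "business" or "enabler"
    use_cases: List[UseCase] = field(default_factory=list)

    def all_user_stories(self) -> List[UserStory]:
        """Flatten the user stories of every use case in this epic."""
        return [s for uc in self.use_cases for s in uc.user_stories]


# Hypothetical content for illustration only.
epic = Epic(
    name="Example business epic",
    kind="business",
    use_cases=[
        UseCase("Example use case A", [UserStory("Story A1"), UserStory("Story A2")]),
        UseCase("Example use case B", [UserStory("Story B1")]),
    ],
)

story_names = [story.name for story in epic.all_user_stories()]
print(story_names)  # ['Story A1', 'Story A2', 'Story B1']
```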

The product roadmap is a simple planning mechanism relating delivered capability to time, iterations, and releases. Like all agile planning, the roadmap is adjusted as additional information is discovered, improving its accuracy over time. The roadmap updates usually occur at the end of each iteration during the iteration retrospective, as the actual iteration outcomes are compared with planned outcomes.

The roadmap also highlights milestones of interest and technical evolution paths. Milestones might include customer reviews or important releases, such as alpha, beta, an Initial Operating Condition (IOC), or a Final Operating Condition (FOC):

Figure 1.19: Create product roadmap

Enumerate your product themes

The product themes are the strategic objectives, values, and goals to be realized by the product. The epics must ultimately refer back to how they aid in the achievement of these themes. This step lists the product themes to drive the identification of the epics and work items going forward. In some agile methods, the themes correlate to value streams.

Create epics

Epics describe the strategic capabilities of the system to realize the product themes. They can be either business epics that bring direct value to the stakeholders, or technical (aka enabler) epics that provide technological infrastructure to support the business epics. Epics may be thought of as large use cases that generally span several iterations. This step identifies the key epics to be put into the product roadmap.

Prioritize epics

Prioritization identifies the order in which epics are to be developed. Prioritization can be driven by urgency (the timeliness of the need), criticality (the importance of meeting the need), the usefulness of the capability, the availability of the required resource, reduction in project risk, natural sequencing, or meeting opportunities – or any combination of the above. The details of how to perform prioritization are the subject of their own recipe (see the Work item prioritization recipe in this chapter), but this is one place where prioritization can be effectively used.

Assign a broad product timeframe

The product roadmap ultimately defines a range of time in which capabilities are to be delivered. This differs from traditional planning, which attempts to nail down the second when a product will be delivered in spite of the lack of adequate information to do so. The product roadmap usually defines a large period of time – say a month, season, or even year – in which a capability is planned to be delivered, but with the expectation that this timeframe can be made more precise as the project proceeds.

Allocate epics in the product timeframe

Epics fit into the product timeframe to allow project planning at a strategic level.

Get agreement on the product roadmap

Various stakeholders must agree on the timeframe. Users, purchasers, and marketers must agree that the timeframe meets the business needs and that the epics provide the appropriate value proposition. Engineering staff must agree that the capabilities can be reasonably expected to be delivered with an appropriate level of quality within the timeframe. Manufacturing staff must agree that the system can be produced as planned. Regulatory authorities must agree that the regulatory objectives will be achieved.

Update the roadmap

If stakeholders are not all satisfied, then the plan should be reworked until an acceptable roadmap is created. This requires modifying the roadmap and reevaluating the updated version.

Example

Let’s create a product roadmap for the Pegasus system by following the steps outlined.

Enumerate your product themes

- Providing a bike fit as close as possible to the fit of a serious cyclist on their road bike

- Providing a virtual ride experience that closely resembles outside riding, including:

- Providing resistance to pedals for a number of conditions, including flats, climbing, sprinting, and coasting for a wide range of power outputs from casual to professional riders

- Simulating gearing that closely resembles the most popular gearing for road bicycles

- Incline control to physically incline or decline the bike

- Permitting programmatic control of resistance to simulate changing road conditions in a realistic fashion

- Interfacing with cycling training apps, including Zwift, Trainer Road, and the Sufferfest

- Gathering ride, performance, and biometrics for analysis by a third-party app

- Providing seamless Over-The-Air (OTA) updates of product firmware to simplify maintenance

Create epics

Epics describe the strategic capabilities of the system to realize the product themes. This step identifies the key epics to be put into the product roadmap. Epics include:

Business epics:

- Physical bike setup

- Ride configuration

- Firmware updates

- Controlling resistance

- Monitoring road metrics

- Communicating with apps

- Emulating gearing

- Incline control

Enabler epics:

- Mechanical frame development

- Motor electronics development

- Electronics development

Prioritize epics

These epics are not run fully sequentially, as some can be done in parallel. Nevertheless, the basic prioritized list is:

- Mechanical frame development

- Motor electronics development

- Digital physical bike setup

- Monitor road metrics

- Ride configuration

- Control resistance

- Emulating gearing

- Communicating with apps

- Firmware updates

- Incline control

- Electronics development

Assign a broad product timeframe

For this project, the total timeframe is about 18 months, beginning in early spring 2021 and ending at the end of 2022, with milestones for fall 2021 (a demo at the September Eurobike tradeshow), spring 2022 (the alpha release), summer 2022 (beta), and the official release (October 2022).

Allocate epics into a product timeframe

Figure 1.20 shows a simple product roadmap for the Pegasus system. At the top, we see the planned iterations and their planned completion dates. Below that, important milestones are shown. The middle part depicts the evolution plan for the three primary hardware aspects (the mechanical frame, motor electronics, and digital electronics). Finally, the bottom part shows the high-level system capabilities as epics over time, using color coding to indicate priority:

Figure 1.20: Pegasus Product Roadmap

Note the pseudo-epic “Stabilization” appears in the figure and indicates a period of removal of defects and refinement of capability.

Get agreement on the product roadmap

We discuss the roadmap with stakeholders from marketing, engineering, manufacturing, and our customer focus group to agree on the product themes, epics, and timeframes.

Update roadmap

The focus group identifies that there is another tradeshow in June 2022 that we should try to have an updated demo ready for. This is then added to the product roadmap.

Release plan

While the product roadmap is strategic in nature, the release plan is more tactical. The product roadmap shows the timing of release goals, high-level product capabilities, and epics that span multiple iterations, but the release plan provides more detail on a per-iteration basis. The product roadmap has a longer planning horizon of 12–24 months while a release plan is more near-term, generally three to nine months. This recipe relies on the Managing your backlog recipe that appears earlier in this chapter.

Purpose

The purpose of the release plan is to show how the product backlog is allocated to the upcoming set of iterations and releases over the next three to nine months.

Inputs and preconditions

The product vision and roadmap are sketched out and a reasonably complete product backlog has been established, with work items that can fit within a single iteration.

Outputs and postconditions

The release plan provides a plan for the mapping of work items to the upcoming set of iterations and releases. Of course, the plan is updated frequently – at least once per iteration – as work is completed and the depth of understanding of the product development increases.

How to do it

Epics and high-level goals need to be decomposed into work items that can be completed within a single iteration. Each of these work items is then prioritized and its effort is estimated. The release plan identifies the specifically planned iterations, each with a mission (as shown in Figure 1.4). There is some interplay between the missions of the iterations and the priority of the work items. The priority of a work item might be changed so that it is completed in the same iteration as a set of related work items.

Once that has been done, the mapping of the work items to the iterations can be made. The mapping must be evaluated for reasonableness and adjusted until the plan looks both good and achievable. This workflow is shown in Figure 1.21:

Figure 1.21: Release planning

Identify epics and high-level goals

If you’ve done a product roadmap (see the Product roadmap recipe), then you are likely to already have established the epics and high-level goals (themes) for the product. If not, see the recipe for how to do that.

Decompose epics

Epics are generally too large to be completed in a single iteration, so they must be decomposed into smaller pieces – use cases and technical work items, and possibly user stories and scenarios – that can be completed within a single iteration. These will be the work elements allocated to the iterations.

Establish iteration missions

Each iteration should have a mission, including purpose, scope, and themes. This was discussed in the Managing your backlog recipe earlier in this chapter.

This mission includes:

- Use cases to be implemented

- Defects to be repaired

- Platforms to be supported

- Risks to be reduced

- Work products to be developed

Prioritize iteration work items

A work item’s priority specifies the order in which it should be developed. Prioritization is a subject of its own recipe, Work item prioritization. Here it is enough to say that higher-priority work items will be performed in earlier iterations than lower-priority work items.

Allocate work items to iterations

This step provides a detailed set of work items to be performed within the iteration (known as the iteration backlog). Ultimately, all work items are either allocated to an iteration, decomposed into smaller work items that are allocated, or are removed from the product backlog.
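One simple way to picture this step is a greedy, first-fit allocation: take the work items in priority order and place each in the earliest iteration that still has capacity for it. This is a sketch under stated assumptions, not the recipe's prescribed method; the function name, the use of story points as the capacity unit, and the sample work items are all hypothetical.

```python
def allocate_work_items(work_items, capacity, iteration_count):
    """Greedy first-fit allocation of work items to iterations.

    work_items: list of dicts with "name", "priority" (1 = highest),
                and "effort" (e.g., story points).
    capacity:   effort available in each iteration (assumed equal).
    Returns (plan, unallocated), where plan[i] lists the names placed
    in iteration i and unallocated lists items that fit nowhere.
    """
    remaining = [capacity] * iteration_count
    plan = [[] for _ in range(iteration_count)]
    unallocated = []
    for item in sorted(work_items, key=lambda w: w["priority"]):
        for i in range(iteration_count):
            if item["effort"] <= remaining[i]:
                plan[i].append(item["name"])
                remaining[i] -= item["effort"]
                break
        else:
            # Too large for any iteration: decompose it or remove it.
            unallocated.append(item["name"])
    return plan, unallocated


# Hypothetical backlog and a capacity of 12 points per iteration.
backlog = [
    {"name": "A", "priority": 1, "effort": 8},
    {"name": "B", "priority": 2, "effort": 5},
    {"name": "C", "priority": 3, "effort": 4},
    {"name": "D", "priority": 4, "effort": 13},
]

plan, unallocated = allocate_work_items(backlog, capacity=12, iteration_count=2)
print(plan)         # [['A', 'C'], ['B']]
print(unallocated)  # ['D']
```

Note that first-fit can place a lower-priority item (C) ahead of a higher-priority one (B) when it fills leftover capacity, and it ignores iteration missions entirely. Both are reasons the allocation still needs the human review described in the next step.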

Review iteration plan

Once the allocations are done, the iteration plan must be reviewed to ensure that the release plan:

- Is consistent with the product roadmap

- Has iteration allocations that can be reasonably expected to be achievable

- Has work item allocations that are consistent with the mission of their owner iterations

Example

While the product roadmap example we did in the previous recipe focused on a somewhat-vague strategic plan, release planning is more tactical and detailed. Specific work items are allocated to specific iterations and reviewed and “rebalanced” if the release plan has discernable flaws. For this example, we’ll look at a planning horizon of six iterations (plus Iteration 0) and focus on the allocations of functionality, technical work items, platforms to be supported, and spikes for the reduction of specific risks.

Identify high-level goals

The high-level goals are identified in the project plan from the previous recipe, as exemplified in the business and enabler epics.

Decompose epics

The epics to be implemented in the iterations in this planning horizon must be decomposed into use cases and technical work items achievable within the allocated iteration. Figure 1.22 shows the decomposition of the epics into use cases and user stories. Note that epics (and, for that matter, user stories) are modeled as stereotypes of use cases, and the figure is a use case diagram with the purpose of visualizing that decomposition. Since epics and user stories are represented as stereotypes of use cases, the «include» relationship is used for decomposition:

Figure 1.22: Mapping epics to use cases

Establish iteration missions

To establish the mission for each iteration, a spreadsheet is created (Table 1.4) with the iterations as columns and the primary aspects of the mission as rows.

Prioritize iteration work items

Work items are prioritized to help us understand the sequencing of the work in different iterations. As much as possible, elements with similar priorities are put within the same iteration.

As discussed in the Work item prioritization recipe, during a review, we may increase or decrease a work item’s priority to ensure congruence with other work items done in a specific iteration.

Allocate work items to iterations

Based on the prioritization and the work capacity within an iteration, the work items are then allocated (Table 1.5).

Table 1.5 shows an example in which allocations are made based on priority (see the Work item prioritization recipe), estimated effort (see the Estimating effort recipe), and the congruence of the functionality with the mission of the iteration:

| Release plan | Iteration 0 | Iteration 1 | Iteration 2 | Iteration 3 | Iteration 4 | Iteration 5 | Iteration 6 |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Functionality | | Initial Frame Mockup, Basic Motor Electronics, Basic Rider Controls, and Basic Resistance | Set Up the Bike Fit (seat), Basic Digital Electronics, Calibrate Power Output, and Basic gearing | Set up the Bike Fit (handlebars), Manually adjust the bike fit, and Monitor Power | Set up the Bike Fit (Cranks), and Monitor Speed, Distance, Bluetooth, Cadence, and Data to the App | Bike fit with external parameters, Motorized Incline, Monitor Incline, ANT+, and ANT FEC | Manage personal data, and Predict the Bike with a Camera Image, External Resistance control, and ERG Mode |
| Target Platforms | | Hand-build mechanicals, Hand-built analog electronics, and Simulated digital electronics | Basic hand-built mechanicals and Hand-built electronics | Prototype mechanicals for manufacturing | First-run factory electronics | First run mechanicals | Second-run factory electronics and 2nd run factory mechanicals |
| Technical Work Items | | Analyze frame stability and strength and Refine SW/EE deployment architecture | Design cable runs, Analyze electrical power needs, and Add in SW concurrency architecture | Add in an SW Distribution Framework | Finalize flywheel mass | EMI Conformance testing | |
| Spikes | Team Availability, Aggressive Schedule, and Agile MBSE Impact | Motor Response Time | Robustness of the main motor | USB Robustness | | | |

Table 1.5: Release plan

Review iteration plan

We then review the release plan and conclude that it is achievable, that the missions of the iterations are reasonable, and that the allocations of work items make sense for the project.

Iteration plan

The iteration plan lays out a specific iteration in more detail, so the planning horizon is a single iteration, typically 1–4 weeks in duration. This is the last chance to adjust the expectations of the iteration before work begins.

Purpose

The purpose of the iteration plan is to ensure that the work allocated to the iteration is achievable, decompose the larger-scale work items (for example, use cases and technical work items) into smaller work items, and plan for the completion of the iteration.

Inputs and preconditions

Preconditions include the release plan and the initial iteration backlog.

Outputs and postconditions

The resulting plan includes the completed work items, generated engineering work products, identified defects and technical work items (pushed into the product backlog), and uncompleted work items (also pushed back onto the product backlog).

How to do it

Use cases in the iteration backlog, which may take an entire iteration to fully realize, are decomposed into user stories or scenarios, each of which takes a few hours to a few days to realize. The iteration plan is created just-in-time before the start of the iteration but is based on the release plan. This flow is shown in Figure 1.23:

Figure 1.23: Iteration planning

Review/update the iteration mission

The iteration should already have a mission from the release plan. This will include:

- Functionality to be achieved (in use cases, user stories, and/or scenarios)

- Target platforms to be supported

- Architectural and other technical work items to support the functionality and technical epics

- Defects identified in previous iterations

- Spikes to reduce risks

There is a decent chance that this mission will require updating, based on lessons learned in preceding iterations, so this is a place to do that if it has not already been done. Any changes made here may impact the allocation of work items to the iteration backlog.

Select work items from the backlog

Based on the iteration mission, the list of work items allocated is reviewed. Some may be removed or new ones added, as necessary and appropriate.

Break use cases into scenarios or user stories

Use cases themselves are generally rather large, and it is useful to have smaller work items in the backlog. These work items might be estimated to take anywhere from a few hours to a few days. Note that estimation of epics and use cases is often done using relative measures (e.g., use case points), but once you get down to work items that are a few hours in duration, estimates often transition to hour-based measures, as determined by the team’s velocity.

Break user stories into tasks

If the user stories are small, then this step can be skipped. If they are still rather large, say a week or two, then they might be decomposed further into smaller tasks. This step is optional.

Estimate the effort for work tasks

If you’ve decomposed the original iteration backlog work items, then those elements should be estimated. This can be done either using relative measures, such as story points, or absolute measures, such as the number of hours to complete.
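
One way to move between relative and absolute measures is to derive an hours-per-point rate from past iterations. The sketch below assumes that the team has recorded both the points completed and the hours spent in earlier iterations; the function names and the historical figures are hypothetical, and a real team may well compute velocity differently.

```python
def hours_per_point(completed_points, hours_spent):
    """Average hours needed per point, taken over past iterations.

    Both arguments are parallel lists with one entry per past iteration.
    """
    return sum(hours_spent) / sum(completed_points)


def estimate_hours(points, rate):
    """Convert a relative estimate in points into hours using the rate."""
    return points * rate


# Hypothetical history from three past iterations.
rate = hours_per_point(completed_points=[20, 25, 15], hours_spent=[160, 200, 120])
estimate = estimate_hours(3, rate)
print(f"{rate} hours per point; a 3-point story is roughly {estimate} hours")
```

The rate is only as good as the history behind it, so it is worth recomputing as each iteration completes.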

Put tasks into the iteration backlog

Any modified or newly created work item tasks must be added to the backlog for the iteration.

Evaluate team loading

Once we have a detailed vision of the expected work to do in the upcoming iteration and a pretty good idea of the effort, we can reevaluate whether the scope of work is reasonable.

Adjust team

The size or makeup of the team may be adjusted to better fit the more detailed understanding of the scope of work to be undertaken.

Adjust backlog

If the scope looks too demanding for the team, items can be removed from the iteration backlog and pushed back to the product backlog. This will spin off an effort later to rebalance the release plan. Note that this is also done at the end of the iteration, when the team can see what planned work was not achieved.
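
The backlog adjustment can be illustrated with a short sketch. It assumes that each work item carries a numeric priority (higher means more important) and an effort estimate in hours, and that the lowest-priority items are the first to go back to the product backlog. The `trim_to_capacity` function and the sample values are hypothetical; in practice, the team would also weigh how well each item fits the mission of the iteration.

```python
def trim_to_capacity(iteration_backlog, capacity_hours):
    """Split the backlog into (kept, pushed_back) so that kept fits the capacity.

    iteration_backlog: list of (name, priority, effort_hours) tuples.
    """
    kept = sorted(iteration_backlog, key=lambda item: item[1], reverse=True)
    pushed_back = []
    while kept and sum(effort for _, _, effort in kept) > capacity_hours:
        # The lowest-priority item goes back to the product backlog.
        pushed_back.append(kept.pop())
    return kept, pushed_back


# Hypothetical iteration backlog: 48 hours of work against 40 hours of capacity.
items = [
    ("Provide basic resistance", 3, 20),
    ("Retrieve dimensions from the camera image", 2, 16),
    ("Calibrate power output", 1, 12),
]
kept, pushed_back = trim_to_capacity(items, capacity_hours=40)
print("Keep:", [name for name, _, _ in kept])
print("Push back:", [name for name, _, _ in pushed_back])
```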

Iteration planning is pretty simple as long as you keep some guidelines in place. The larger-scale work items allocated to the iteration are sized to fit into a single iteration. However, they are decomposed into somewhat smaller pieces, each taking from a few hours to a few days to complete. For use cases, this will be either user stories or scenarios; this decomposition and analysis will be detailed in the recipes of the next chapter. The work items should all fit within the mission statement for the iteration, as discussed in the first recipe, Managing your backlog.

The work items should all contribute to the mission of the iteration. If not, they should either be pushed back to the product backlog or the iteration mission should be expanded to include them. It is also helpful to have the larger-scale work items broken down into relatively small pieces; you should be less concerned about whether they are called use cases, user stories, scenarios, or tasks, and more concerned that they 1) contribute to the desired functionality, and 2) are in the right effort scope (a few hours to a few days). Work items that are too large are difficult to estimate accurately and may not contribute to understanding the work to be done. Work items that are too small waste planning time and effort.

Example

For our example, let’s plan Iteration 4.

Review/update the iteration mission

The mission for a hypothetical iteration is shown in Table 1.6:

| Release plan | Iteration use cases | Iteration user stories | Effort (hours) |
| --- | --- | --- | --- |
| Functionality | Predict the Bike Fit with a Camera | | |
| | Estimate the Bike Fit from External Parameters | | |
| | Monitor the Distance | | |
| | Calibrate the Power Output | | |
| | Provide Basic Resistance | | |
| | Set resistance under user control | | |
| Target Platforms | First-run factory electronics, Hand-built mechanical frame | | |
| Technical Work Items | Finalize the flywheel mass | | |
| Spikes | | | |

Table 1.6: Iteration mission

Select work items from the backlog

These work items are selected from the product backlog and placed in the iteration backlog.

Break use cases into scenarios or user stories

Figure 1.24 shows the planned functionality for our hypothetical iteration of the Pegasus bike trainer. The ovals without stereotypes are use cases that are decomposed with the «include» relation into user stories.

Each of these is then estimated to get an idea of the scope of the work for the iteration:

Figure 1.24: Iteration planning example

Break user stories into tasks

These user stories are all pretty small, so this optional step is skipped.

Estimate effort for work tasks

We then estimate the hours required to complete each of these small work items. The result is shown in Table 1.7:

| Release plan | Iteration use cases | Iteration user stories/work items | Effort (hours) |
| --- | --- | --- | --- |
| Functionality | Predict the bike fit with a Camera | Access the camera image | 2 |
| | | Retrieve the road bike dimensions from the camera image | 16 |
| | | Compute the fit parameters from the road bike dimensions | 4 |
| | Estimate the bike fit from the external parameters | Load GURU bike fit data | 4 |
| | | Load trek bike fit data | 4 |
| | | Compute fit from professional fit data | 2 |
| | Monitor distance | | 6 |
| | Calibrate power output | | 12 |
| | Provide basic resistance | | 20 |
| | Set resistance under user control | | 4 |
| Target platforms | First-run factory electronics, Hand-built mechanical frame | | |
| Technical work items | Finalize flywheel mass | | 4 |
| Spikes | | | |
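
To get a feel for the scope, we can total the estimates in Table 1.7 and compare the result against the capacity of the team. The effort values below are copied from the table; the team size, iteration length, and productive hours per person-week are assumptions made purely for illustration and do not come from the Pegasus example.

```python
# Effort estimates (hours) copied from Table 1.7.
effort_hours = {
    "Access the camera image": 2,
    "Retrieve the road bike dimensions from the camera image": 16,
    "Compute the fit parameters from the road bike dimensions": 4,
    "Load GURU bike fit data": 4,
    "Load trek bike fit data": 4,
    "Compute fit from professional fit data": 2,
    "Monitor distance": 6,
    "Calibrate power output": 12,
    "Provide basic resistance": 20,
    "Set resistance under user control": 4,
    "Finalize flywheel mass": 4,
}

total_hours = sum(effort_hours.values())

# Hypothetical capacity: these three numbers are assumptions, not from the book.
team_size = 2
iteration_weeks = 2
productive_hours_per_person_week = 30
capacity_hours = team_size * iteration_weeks * productive_hours_per_person_week

loading = total_hours / capacity_hours
print(f"Planned effort: {total_hours} h")
print(f"Capacity:       {capacity_hours} h")
print(f"Team loading:   {loading:.0%}")
```

The estimates in the table sum to 78 hours. Under the assumed capacity of 120 hours, that is a loading of 65%, which leaves some margin for defects and other unplanned work.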

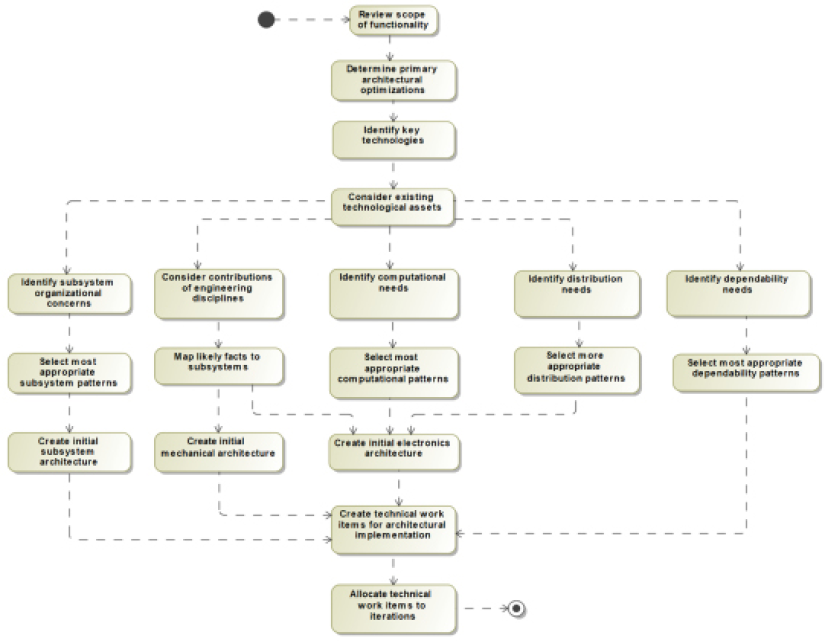

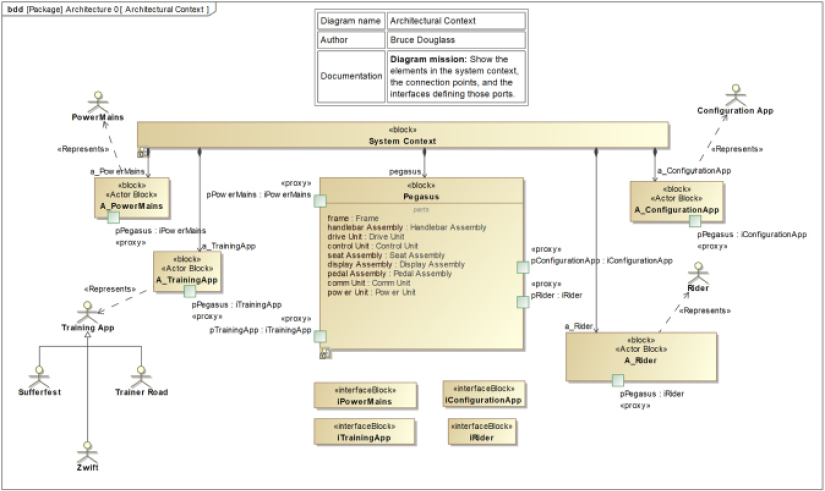

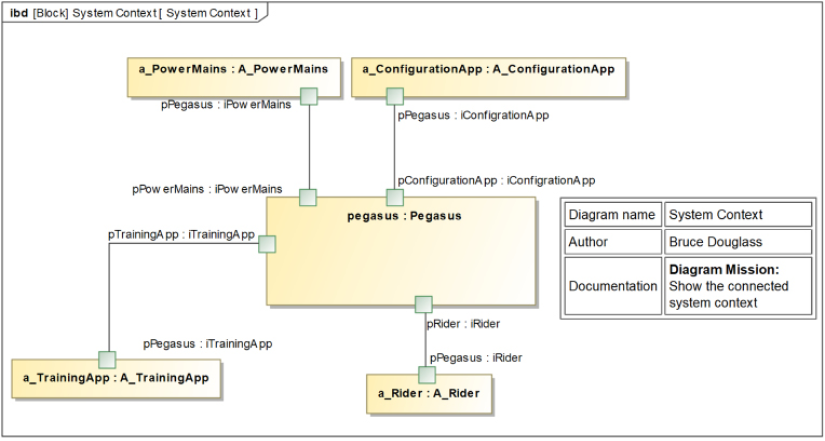

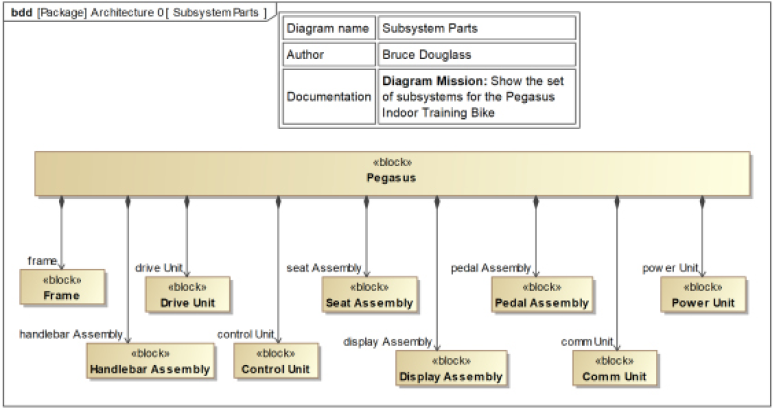

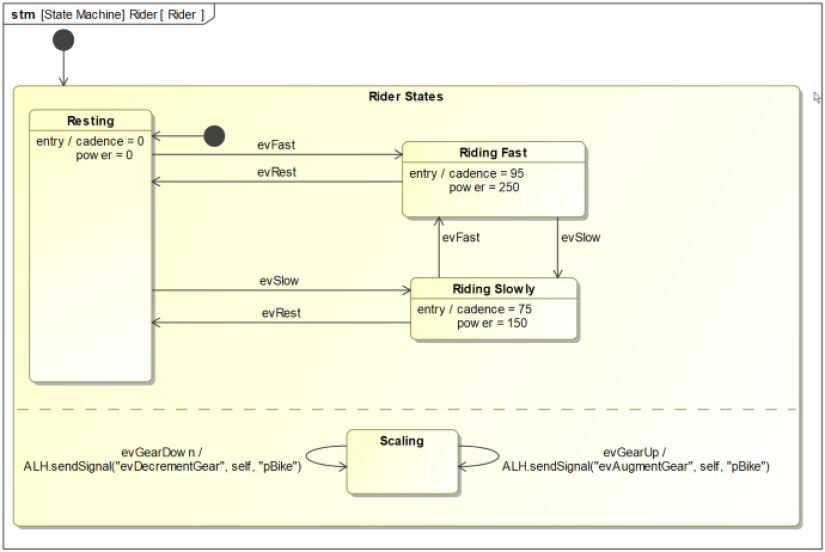

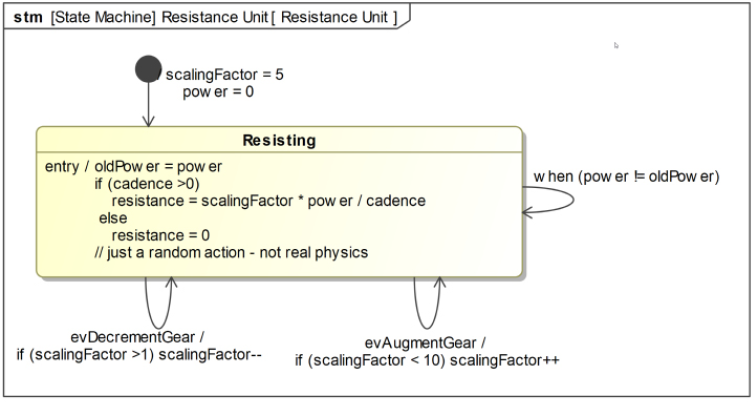

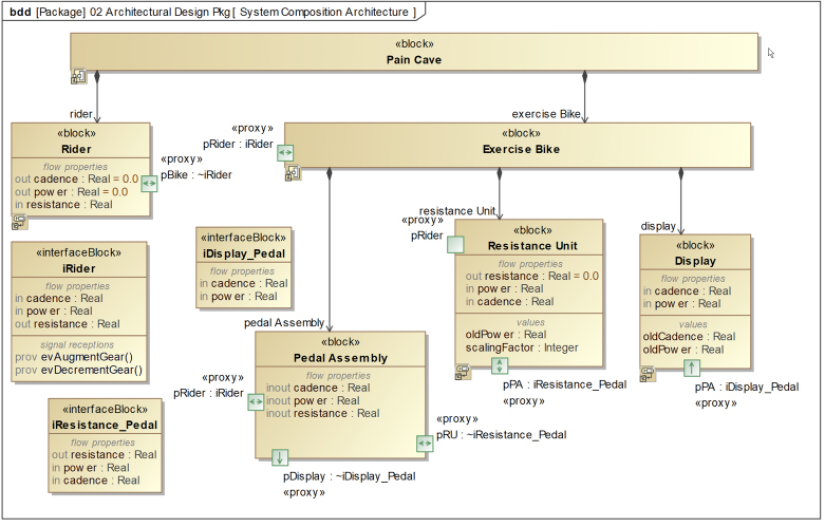

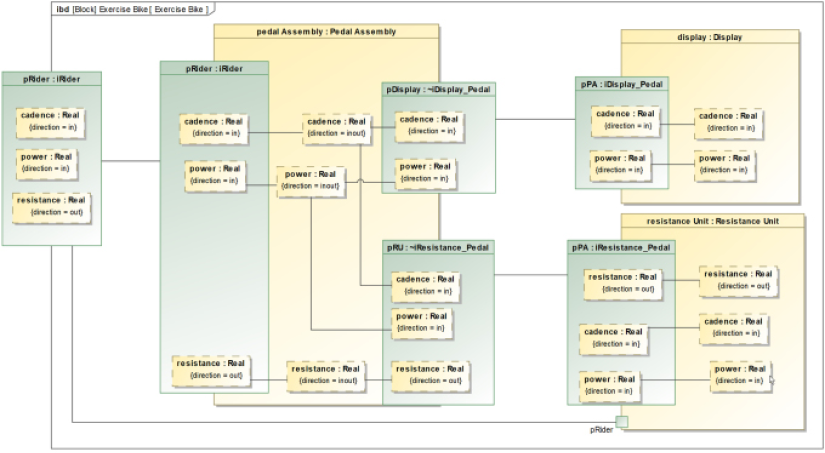

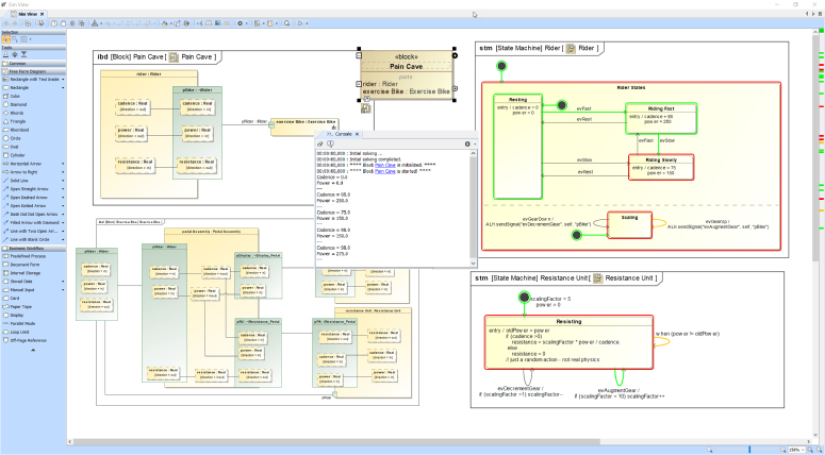

|