Machine Learning Part 2 – Neural Networks and Deep Learning Techniques

Neural networks (NNs) only became popular in natural language understanding (NLU) around 2010, but they have since been widely applied to many problems. NNs also have many applications outside natural language processing (NLP), such as image classification. Because NNs are a general approach that can be applied across different research areas, they have led to some interesting synergies across these fields.

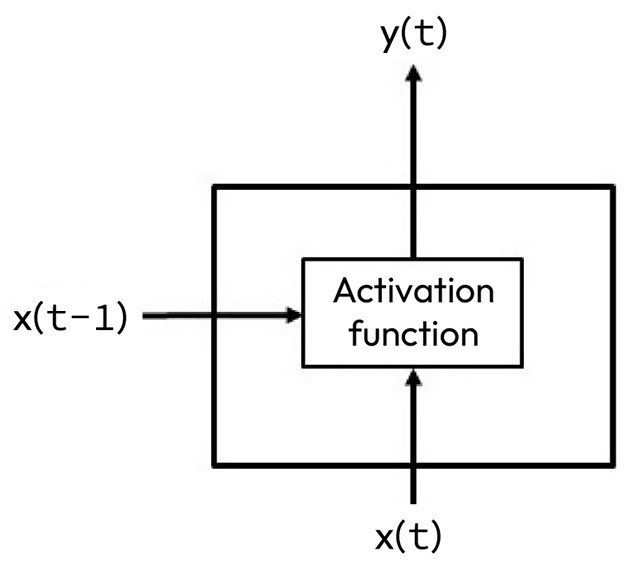

In this chapter, we will cover the application of machine learning (ML) techniques based on NNs to NLP problems such as classification. We will look at several commonly used kinds of NNs, specifically fully connected multilayer perceptrons (MLPs), convolutional NNs (CNNs), and recurrent NNs (RNNs), and show how they can be applied to problems such as classification and information extraction. We will also discuss fundamental NN concepts such as hyperparameters...