Building a Face Detector Using the Red Hat ML Platform

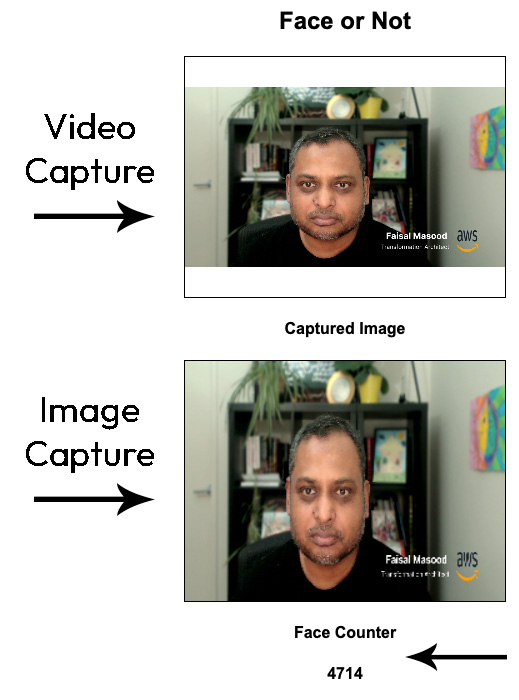

In the previous chapter, you learned how the Red Hat platform enables you to build and deploy ML models. In this chapter, you will see that the model is just one piece of the puzzle. Data must be collected and processed before it can be fed to the model, and only then can the model return a useful response. You will see how the Red Hat platform enables you to build and deploy all the components required for a real-world application.

The aim of this chapter is to show how other Red Hat services running on the same OpenShift platform provide a complete ecosystem for your application. You will learn about the following:

- Building and deploying a TensorFlow model to detect faces

- Capturing a video feed from your local laptop

- Storing the results in Redis, running on the OpenShift platform

- Generating an alert when the model detects a face in the feed

- Cost optimization strategies for the OpenShift platform...